Attention Is All You Need facts for kids

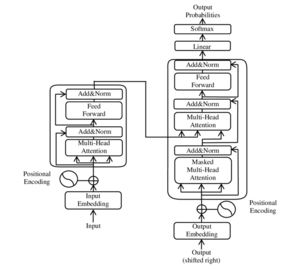

"Attention Is All You Need" is a very important research paper from 2017. Eight scientists at Google wrote it. This paper introduced a new way for computers to learn, called the Transformer. The Transformer uses something called "attention mechanisms." These help the computer focus on the most important parts of information.
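To get a feel for what "focusing on the most important parts" can mean, here is a small Python sketch of one kind of attention, often called scaled dot-product attention. It is only an illustration, not the authors' actual code. The function names (`softmax`, `attention`) and all of the numbers are made up for this example. The computer compares a "query" with several "keys", turns the match scores into weights that add up to 1, and then blends the "values" using those weights.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    biggest = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Blend the values, giving more weight to keys that match the query."""
    scale = math.sqrt(len(query))
    # A bigger dot product means the key matches the query better.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    size = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(size)]
    return weights, output

# Toy numbers, invented for this example.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 1.0]

weights, output = attention(query, keys, values)
print(weights)  # the third weight is the largest
print(output)
```

In this toy example the third key matches the query best, so the third value gets the most attention in the blended result.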

Many people see this paper as a key moment for modern artificial intelligence (AI). This is because the Transformer became the main design for large language models. Large language models are the AI programs behind tools such as those based on GPT.

When the paper was written, the main goal was to make machine translation better. Machine translation is when computers translate text from one language to another. But the authors also knew their idea could help with other tasks. These include answering questions and building other kinds of AI, like multimodal generative AI. This type of AI can work with text, images, and sounds.

The paper's title is a fun nod to the song "All You Need Is Love" by the Beatles.

As of 2024, other researchers had cited (mentioned) this paper more than 100,000 times. This shows how much it has influenced the field of AI.

Who Wrote This Important Paper?

The eight scientists who wrote "Attention Is All You Need" are:

- Ashish Vaswani

- Noam Shazeer

- Niki Parmar

- Jakob Uszkoreit

- Llion Jones

- Aidan Gomez

- Lukasz Kaiser

- Illia Polosukhin

All eight authors contributed equally to the paper. The order of their names was chosen randomly. A magazine called Wired pointed out that the group was very diverse. Most of the authors were born outside the United States.

By 2023, all eight authors had left Google. Most of them started their own AI companies. Lukasz Kaiser joined OpenAI, another leading AI research company.

See Also

- Transformer (machine learning model)

- Large language model

- Generative artificial intelligence
