Cache algorithm facts for kids

A cache algorithm is like a smart manager for a special storage area called a cache. A cache is a small, fast place where computers temporarily store data they use often. This helps them get information much faster than going to the main storage.

When the cache gets full, the cache algorithm decides which old information to remove. This makes space for new, important data. How well an algorithm works is measured in two ways:

- Hit rate: This is how often the computer finds the information it needs right in the cache. A high hit rate means the cache is working well!

- Latency: This is how quickly the computer can get the information from the cache. Lower latency means it's super fast.

Cache algorithms try to find the best balance between a high hit rate and low latency.

Contents

Types of Cache Algorithms

There are many different ways a cache can decide what to keep and what to throw away. Here are some common ones:

Belady's Optimal Algorithm

This algorithm is the most efficient way to manage a cache. It would always remove the information that won't be needed for the longest time in the future. Imagine if your computer could see into the future!

- Why it's special: It's called the "clairvoyant algorithm" because it knows exactly what's coming.

- Why it's not used: In real life, computers can't predict the future. So, this algorithm is mostly used to compare how well other algorithms perform.

Least Recently Used (LRU)

The LRU algorithm is very popular. It works by removing the item that hasn't been used for the longest time.

- How it works: Think of it like a stack of books. The book you just read goes on top. When you need to remove a book, you take the one at the very bottom (the one you read longest ago).

- Tracking usage: To do this, the computer needs to keep track of when each piece of data was last used. This can be a bit complex for the computer to manage perfectly.

- Variations: LRU is actually a family of algorithms, with different versions like 2Q and LRU/K.

Most Recently Used (MRU)

MRU is the opposite of LRU. This algorithm removes the items that were used most recently.

- When it's used: This method is often used in database systems, especially when you're likely to need older information again soon.

Pseudo-LRU (PLRU)

Sometimes, keeping perfect track of "least recently used" is too hard or slow for a computer. That's where PLRU comes in.

- Simpler tracking: PLRU doesn't need to know the exact order of usage. It just needs a little bit of information (one bit) for each item to make a good guess.

- Good enough: It's not as perfect as LRU, but it's much simpler to implement and often good enough for many situations.

Cache Algorithms for Speed

Some computer parts, like the CPU cache, need to be incredibly fast. For these, even PLRU can be too slow.

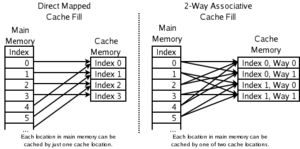

2-way Set Associative Cache

This type of cache is designed for high speed.

- Limited choices: When new data arrives, the computer knows it can only go into one of two specific spots in the cache.

- Discarding: It then checks which of those two spots holds the least recently used item and replaces it. This requires just one bit of information for each pair of spots.

Direct-Mapped Cache

This is the fastest type of CPU cache.

- One spot only: When new data arrives, there's only one specific spot in the cache where it's allowed to go.

- Simple replacement: Whatever was in that spot before is simply thrown out. This is very fast because there's no decision to make.

Least Frequently Used (LFU)

LFU focuses on how often an item is used, not just when it was last used.

- Counting usage: This algorithm counts how many times each piece of data is accessed.

- Discarding: Items that have been used the fewest times are removed first.

Adaptive Replacement Cache (ARC)

ARC is a clever algorithm that tries to get the best of both LRU and LFU.

- Balancing act: It constantly adjusts itself, balancing between removing the least recently used items and the least frequently used items.

- Improved results: This helps it adapt to different patterns of data usage and often leads to better overall performance.

Multi Queue (MQ) Caching Algorithm

This is another advanced caching algorithm designed to improve performance by using multiple queues to manage data.

Other Important Things to Consider

When designing a cache, there are other factors that algorithms might think about:

Cost of Getting Data

Some information takes a long time or a lot of effort to get (like downloading a big file from the internet).

- Keeping expensive items: A smart cache might try to keep these "expensive" items longer, even if they haven't been used recently, because it's costly to get them again.

Size of Data Items

Not all data is the same size.

- Making space: If a cache is full, it might choose to remove one very large item to make space for several smaller, more important ones.

Data That Expires

Some information is only useful for a certain amount of time.

- Time limits: Caches for things like news articles, website pages, or internet addresses (DNS) often have items that expire. The computer will automatically remove these items when they are no longer fresh.

Cache Coherency

Sometimes, the same data is stored in multiple caches at the same time. This happens when different parts of a computer system or different computers need access to the same information.

- Keeping data consistent: Cache coherence is about making sure that all these different caches have the correct and most up-to-date version of the data. If one cache changes the data, all other caches need to know about it so they don't use old information.

Images for kids

See also

In Spanish: Algoritmo de caché para niños

In Spanish: Algoritmo de caché para niños

| Isaac Myers |

| D. Hamilton Jackson |

| A. Philip Randolph |