Cache coherence facts for kids

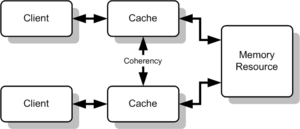

Imagine your computer has a super-fast scratchpad called a cache. It stores copies of data from the main memory to help the computer work faster. But what happens if different parts of your computer, like different CPUs, each have their own cache, and they all have a copy of the same piece of data?

This is where cache coherence comes in! It's a fancy way of saying that all these different caches need to agree on what the correct data is. If one CPU changes a piece of data in its cache, all other caches that have a copy of that data need to know about the change. Otherwise, they might be using old, incorrect information, which could cause big problems for the computer. Cache coherence makes sure all copies of data are consistent and up-to-date.
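To see the problem in action, here is a tiny pretend computer written in Python. It is only a sketch: real caches are hardware, and the names `memory`, `cache_a`, and `cache_b` are made up for this example. Nothing here keeps the caches coherent, so one of them ends up with old data.

```python
# A toy model: main memory and two private caches are plain dictionaries.
# The names memory, cache_a and cache_b are invented for this illustration.
memory = {"score": 10}

cache_a = {"score": memory["score"]}  # CPU A copies the value into its cache
cache_b = {"score": memory["score"]}  # CPU B copies the same value

# CPU A changes its copy and writes the new value back to main memory.
cache_a["score"] = 25
memory["score"] = cache_a["score"]

# Nobody told CPU B, so its cache still holds the old value.
stale = cache_b["score"] != memory["score"]
print("CPU B sees:", cache_b["score"], "but memory holds:", memory["score"])
```

CPU B keeps reading 10 even though the real value is now 25. Cache coherence exists to prevent exactly this.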

What is Cache Coherence?

Cache coherence is about making sure that when different parts of a computer system share data, they all see the most current version. Think of it like this:

- If you write something down, and then you read it right away, you should see what you just wrote.

- If someone else changes that information after you wrote it, the next time you look, you should see their new change, not your old one.

- Only one person can change a specific piece of information at a time. If several changes happen, they must happen one after another, and everyone must see them in the same order.

These rules help keep all the data copies in different caches in sync. In real computers, things happen incredibly fast, but there can be tiny delays. Cache coherence systems work hard to manage these delays and keep everything consistent.

How Computers Keep Caches Coherent

Computers use different methods to make sure all caches have the correct and updated data. Here are the main ways:

Directory-Based Coherence

This method uses a special "directory" that keeps track of where every piece of data is cached. When data changes, the directory knows exactly which caches have a copy. It then tells only those specific caches to update their data or get rid of their old copy. This is like a librarian who knows exactly who has checked out which book.
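The librarian idea can be sketched in Python. This is a simplified model under assumptions, not how real hardware is built: the `Directory` class, its method names, and the choice to write every change straight to memory are all invented for this example.

```python
class Directory:
    """Toy directory: remembers which caches hold a copy of each address."""

    def __init__(self, cache_names):
        self.memory = {}                              # main memory
        self.caches = {n: {} for n in cache_names}    # one dict per cache
        self.sharers = {}                             # address -> names with a copy
        self.messages = 0                             # invalidation messages sent

    def read(self, name, addr):
        cache = self.caches[name]
        if addr not in cache:                         # miss: fetch and register
            cache[addr] = self.memory.get(addr, 0)
            self.sharers.setdefault(addr, set()).add(name)
        return cache[addr]

    def write(self, name, addr, value):
        # Tell only the caches that really hold a copy to drop it.
        for other in self.sharers.get(addr, set()) - {name}:
            self.caches[other].pop(addr, None)
            self.messages += 1
        self.sharers[addr] = {name}
        self.caches[name][addr] = value
        self.memory[addr] = value                     # simplification: write-through


d = Directory(["A", "B", "C", "D"])
d.memory["x"] = 1
d.read("A", "x")
d.read("B", "x")
d.write("A", "x", 2)           # only B holds a copy, so only B is told
print(d.messages)              # 1
print(d.read("B", "x"))        # 2, fetched again after B's copy was dropped
```

Caches C and D never held `x`, so the directory never bothers them.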

Snooping Coherence

In this method, each cache acts like a "snooper." It constantly watches the main communication lines (called a "bus") to see what other parts of the computer are doing. If a cache sees that another part of the computer is changing data that it also has a copy of, it immediately updates its own copy or marks it as invalid. This is like everyone in a room listening to a conversation and updating their notes if something important changes.
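Here is a small Python sketch of snooping, assuming the simplest version where a cache throws away its copy when it hears a write (often called "write-invalidate"). The `Bus` and `SnoopingCache` classes are invented for this example and leave out many details of real hardware.

```python
class Bus:
    """Toy shared bus: every write is announced to every attached cache."""

    def __init__(self):
        self.memory = {}
        self.caches = []

    def broadcast_write(self, sender, addr, value):
        self.memory[addr] = value
        for cache in self.caches:
            if cache is not sender:
                cache.snoop_write(addr)


class SnoopingCache:
    def __init__(self, bus):
        self.bus = bus
        self.lines = {}       # address -> cached value
        self.heard = 0        # how many bus writes this cache overheard
        bus.caches.append(self)

    def read(self, addr):
        if addr not in self.lines:                 # miss: fetch from memory
            self.lines[addr] = self.bus.memory.get(addr, 0)
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        self.bus.broadcast_write(self, addr, value)

    def snoop_write(self, addr):
        self.heard += 1
        self.lines.pop(addr, None)                 # mark our copy invalid


bus = Bus()
a, b, c = SnoopingCache(bus), SnoopingCache(bus), SnoopingCache(bus)
bus.memory["x"] = 1
a.read("x")
b.read("x")
a.write("x", 2)
print("x" in b.lines)   # False: b dropped its old copy
print(b.read("x"))      # 2: b fetches the new value
print(c.heard)          # 1: c had no copy but still had to listen
```

Notice that cache `c` hears the write even though it never had a copy. That extra listening is the cost of snooping.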

Snarfing Coherence

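Snarfing is similar to snooping, but it's more active. When a cache controller "snarfs," it watches both the address and the data on the bus. If another part of the computer writes a new value to a location in main memory, and the cache holds a copy of that location, it grabs the new value and updates its own copy instead of just throwing the old one away.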
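A minimal Python sketch of snarfing might look like this. It assumes three pretend caches stored in a dictionary, and the function name `write_with_snarfing` is made up for this example.

```python
# Toy setup: caches A and B hold a copy of "x", cache C does not.
memory = {"x": 1}
caches = {"A": {"x": 1}, "B": {"x": 1}, "C": {}}


def write_with_snarfing(writer, addr, value):
    """The writer stores a value; other caches holding addr copy it too."""
    caches[writer][addr] = value
    memory[addr] = value          # the write carries the address AND the data
    for name, cache in caches.items():
        if name != writer and addr in cache:
            cache[addr] = value   # "snarf" the new value off the bus


write_with_snarfing("A", "x", 7)
print(caches["B"]["x"])   # 7: B updated its copy without asking memory
print("x" in caches["C"]) # False: C had no copy, so it ignores the write
```

Compared with the invalidating style of snooping, cache B never has to go back to main memory to get the new value.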

Comparing Snooping and Directory Methods

Both snooping and directory-based methods help keep caches coherent, but they have differences:

- Snooping is often faster for smaller computer systems. This is because all caches see every data request and can react quickly. However, it doesn't work as well for very large systems, because broadcasting every request to many caches uses up too much of the bus's carrying capacity (bandwidth).

- Directory-based systems are usually a bit slower, because a request has to go to the directory first, and the directory then passes it on to the caches that hold the data. But they are much better for very large computer systems (like those with more than 64 processors). This is because messages are sent directly to the specific caches that need to know, rather than being broadcast to everyone.
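A rough way to compare the two methods is to count messages for a single write. The Python sketch below is a simplified counting model, not a measurement: the function names are invented, replies are ignored, and it assumes only 2 other caches hold a copy of the data.

```python
def snooping_messages(num_caches):
    # The writer's request is seen by every other cache on the bus.
    return num_caches - 1


def directory_messages(num_sharers):
    # One message to the directory, then one to each cache holding a copy.
    return 1 + num_sharers


SHARERS = 2  # assumption: only two other caches hold the data
counts = {n: (snooping_messages(n), directory_messages(SHARERS))
          for n in (4, 64, 1024)}

for n, (snoop, direct) in counts.items():
    print(n, "caches: snooping", snoop, "directory", direct)
```

With 4 caches both methods send 3 messages in this model. With 1024 caches, snooping reaches 1023 caches while the directory still sends only 3 messages.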
