Backpropagation facts for kids

Backpropagation is a clever way to teach neural networks (which are like computer brains) to do tasks much better. This method helps them learn from their mistakes.

The idea of using backpropagation to train neural networks was first described by Paul Werbos in 1974. In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams wrote about it in a way that made it widely known. The name "backpropagation" is short for "backward propagation of errors." This means the errors are sent backward through the network to help it learn.

It works especially well for neural networks that send information in one direction, from input to output, without any loops. These are called feedforward networks. It's also great for problems where the computer learns with a "teacher," meaning it gets examples with the correct answers. This is called supervised learning.

How Backpropagation Works

Imagine you're teaching a computer brain to recognize pictures of cats. At first, it might make many mistakes. Backpropagation helps it learn by figuring out how wrong its answers are and then correcting itself. This process is repeated many, many times.

Here's a bit more detail on how it happens:

- Step 1: Measure the mistake. You first need a way to measure how far off the computer's answer is from the real, correct answer. This is done using something called a "loss function." It gives you a number that shows how big the error is. Often, this is done for many examples, and then the average mistake is calculated.

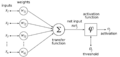

- Step 2: Figure out how to adjust. The computer brain has many internal settings, like adjustable "knobs" or "dials," called parameters (weights and biases). Backpropagation figures out how to turn these knobs to make the mistake smaller. It uses a bit of math called a derivative, which tells you how much the error changes when one knob is turned a tiny bit. The calculation starts at the output and works backward through the network, one layer at a time, which is where the name comes from. It's like finding the best direction to turn each dial.

- Step 3: Make the adjustments. Once the computer knows how to adjust its internal settings, it changes them. One common way to do this is called gradient descent. It's like slowly moving down a hill to find the lowest point, which in this case means finding the smallest error.
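The three steps can be tried out in a few lines of Python. This is only a small sketch with made-up numbers: the "computer brain" here has a single knob called `w`, and the data, the function names, and the learning rate of 0.05 are all assumptions chosen for illustration. With only one knob there is nothing to send backward yet, so this shows the measuring and adjusting parts rather than a full network.

```python
# A tiny sketch of the three steps with one knob (weight) called w.
# The data, function names, and learning rate are made up for illustration.

xs = [1.0, 2.0, 3.0]   # inputs
ys = [2.0, 4.0, 6.0]   # correct answers from the "teacher" (here y = 2 * x)

def predict(w, x):
    # The model's guess: multiply the input by the knob setting.
    return w * x

def loss(w):
    # Step 1: measure the mistake as the average squared error.
    return sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient(w):
    # Step 2: the derivative of the loss with respect to w.
    # It says which way the error moves when w is nudged upward.
    return sum(2 * (predict(w, x) - y) * x for x, y in zip(xs, ys)) / len(xs)

def step(w, learning_rate=0.05):
    # Step 3: gradient descent, a small move in the downhill direction.
    return w - learning_rate * gradient(w)

w_before = 0.0
w_after = step(w_before)
print("loss before:", loss(w_before))
print("loss after: ", loss(w_after))
```

After one adjustment the loss printed second should be smaller than the first, which is the whole point of turning the knob.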

This whole process is repeated over and over again. The computer keeps making predictions, measuring its mistakes, and adjusting its internal settings. It continues until the neural network becomes good enough at its job, meaning its mistakes are very small.
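Here is a rough sketch of that repeated process in Python, using a pretend network with two knobs in a row, `w1` and then `w2`. Everything in it (the data, the starting values, the learning rate, and the number of rounds) is an assumption picked to keep the example small. Real networks have many more knobs and usually an extra "squashing" function between layers. The part to notice is in `backward`: the error is first measured at the output and then passed back to the earlier knob.

```python
# A sketch of repeated training on a pretend two-knob network.
# Data, starting values, learning rate, and round count are made up.

xs = [1.0, 2.0, 3.0]   # inputs
ys = [2.0, 4.0, 6.0]   # correct answers (here y = 2 * x)

def forward(w1, w2, x):
    # Information flows one way: input -> hidden -> output.
    hidden = w1 * x
    output = w2 * hidden
    return hidden, output

def average_loss(w1, w2):
    # Average squared mistake over all examples.
    return sum((forward(w1, w2, x)[1] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def backward(w1, w2):
    # Work out how each knob affects the average error.
    grad_w1 = 0.0
    grad_w2 = 0.0
    for x, y in zip(xs, ys):
        hidden, output = forward(w1, w2, x)
        d_output = 2 * (output - y)    # error measured at the output
        grad_w2 += d_output * hidden   # effect of the last knob
        d_hidden = d_output * w2       # error sent backward one layer
        grad_w1 += d_hidden * x        # effect of the first knob
    n = len(xs)
    return grad_w1 / n, grad_w2 / n

def train(w1=0.5, w2=1.0, learning_rate=0.01, rounds=200):
    # Repeat: measure, work backward, adjust.
    for _ in range(rounds):
        g1, g2 = backward(w1, w2)
        w1 -= learning_rate * g1
        w2 -= learning_rate * g2
    return w1, w2

w1, w2 = train()
print("loss at the start:", average_loss(0.5, 1.0))
print("loss after training:", average_loss(w1, w2))
```

In this toy setup the two knobs multiplied together should end up very close to 2, because the correct answers are always twice the input.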

See also

In Spanish: Propagación hacia atrás para niños
