Neural network facts for kids

A neural network, also called an artificial neural network (ANN), is a computer system designed to work a bit like the human brain. It is made of many small connected units, loosely similar to brain cells, that work together to process information and learn from examples.

Imagine you want to teach a computer to recognize pictures of cats. How would you do it? You could try writing down a list of rules, like "a cat has pointy ears," "a cat has whiskers," etc. But what about cats with folded ears? Or pictures where you can't see the whiskers? This is where neural networks come in handy.

Instead of following strict rules, neural networks learn by looking at lots and lots of examples. They are inspired by how our own brains learn and recognize things.

How do they work?

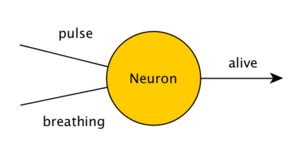

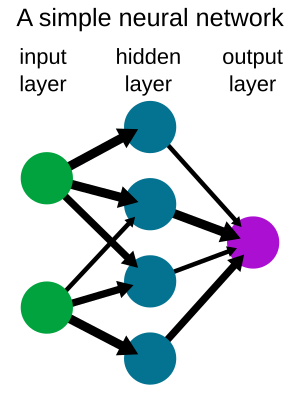

Think of a neural network as having layers of tiny computer units, which we call "artificial neurons." These neurons are connected to each other, like brain cells are connected by synapses.

- Input Layer: This is where the information goes in. If you're showing the network a picture of a cat, the input layer might receive the color or brightness of each pixel in the image.

- Hidden Layers: These are layers in the middle where the main processing happens. Information travels from the input layer through these hidden layers. Each connection between neurons has a "weight," which is like a number that tells you how important that connection is.

- Output Layer: This layer gives the final answer. For our cat example, the output layer might have one neuron that lights up if it thinks the picture is a cat, and another that lights up if it thinks it's not a cat.

When information travels through the network, each neuron multiplies every signal it receives by the weight of that connection and adds the results together. It then does a little calculation on the total (using something called an "activation function") and sends the result as a new signal to the next layer of neurons. This is why the strength of each signal depends on the weights of the connections.
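Here is a minimal sketch in Python of that calculation. It assumes a "sigmoid" activation function, which is one common choice among several. It also gives each neuron an extra number called a bias, a standard detail the description above leaves out. All the weights and inputs are made-up numbers, and `neuron` and `layer` are hypothetical helper names, not part of any library.

```python
import math

def sigmoid(x):
    """Activation function (assumed here): squashes any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """One artificial neuron: weight each input, add them up (plus a bias), then apply the activation."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)

def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all receive the same inputs; each has its own weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Made-up numbers: 3 inputs (e.g. pixel brightness values), 2 hidden neurons, 1 output neuron.
pixels = [0.9, 0.1, 0.5]
hidden = layer(pixels, weight_rows=[[0.4, -0.6, 0.2], [-0.3, 0.8, 0.5]], biases=[0.0, 0.1])
output = layer(hidden, weight_rows=[[1.2, -0.7]], biases=[0.05])
print(output)  # a single value between 0 and 1, which could be read as "how sure it is this is a cat"
```

The output of one layer becomes the input of the next. That is all "information travels through the network" means in this sketch.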

How do they learn?

Teaching a neural network is called "training." It's like practicing something over and over to get better.

The network starts with random weights on its connections. It doesn't know anything yet.

You show the network an example (like a picture of a cat) and tell it what the correct answer should be (it's a cat). The network processes the information and makes a guess in its output layer.

The network compares its guess to the correct answer and calculates the "error", a number that measures how far off the guess was.

The network uses a technique called "backpropagation", which works backward from the output layer, to figure out how much each connection contributed to the error. It then slightly adjusts every weight in the direction that makes the error smaller, with the connections that contributed most getting the biggest changes. It's like fine-tuning the connections to get closer to the right answer.
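For a network with just one neuron, the guess, measure, and adjust steps can be sketched in a few lines of Python. The sketch makes some assumptions. The error is measured as half the squared difference between the guess and the correct answer. The activation is a sigmoid. The `learning_rate` (how big each nudge is) is a made-up value of 0.5. In a network with one neuron, backpropagation boils down to the chain rule from calculus. With hidden layers, the same idea is repeated layer by layer, moving backward from the output. The names `predict` and `train_step` are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(inputs, weights, bias):
    return sigmoid(sum(i * w for i, w in zip(inputs, weights)) + bias)

def train_step(inputs, target, weights, bias, learning_rate=0.5):
    """Make a guess, measure the error, and nudge every weight to shrink it."""
    guess = predict(inputs, weights, bias)
    error = 0.5 * (guess - target) ** 2  # squared error: 0 when the guess is perfect
    # Chain rule for one sigmoid neuron: how much the error changes
    # when the neuron's weighted total changes.
    delta = (guess - target) * guess * (1.0 - guess)
    # Each weight's share of the blame is delta times the input it multiplies.
    new_weights = [w - learning_rate * delta * i for w, i in zip(weights, inputs)]
    new_bias = bias - learning_rate * delta
    return new_weights, new_bias, error

example = [1.0, 0.0, 1.0]           # made-up input; the correct answer is 1.0 ("cat")
weights, bias = [0.2, -0.4, 0.1], 0.0
weights2, bias2, err_before = train_step(example, 1.0, weights, bias)
err_after = 0.5 * (predict(example, weights2, bias2) - 1.0) ** 2
print(err_before, err_after)        # the error after one small step is smaller
print(weights2)                     # the weight on the input that was 0.0 did not change
```

Notice that a connection whose input was zero did not contribute to this guess, so its weight is left alone.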

The network repeats this process thousands or even millions of times with many different examples. With each example, it adjusts its weights a little more. Slowly, the weights get better and better at helping the network give the correct answers.

Training continues until the network makes very few errors on the examples it has seen. The goal is for the network to learn the general patterns so well that it can also give correct answers for new examples it has never seen before.
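The whole training process can be sketched as a loop that repeats the adjustment step many times, followed by a check on examples the network never saw. This sketch uses a made-up task instead of cat pictures: a point (x, y) is labeled 1 when x + y > 1, otherwise 0. It uses a single sigmoid neuron with squared error and starts from small random weights. The settings (`epochs=200`, `learning_rate=0.5`, 200 training points, 100 test points, seed 42) and all function names are assumptions chosen for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(point, weights, bias):
    return sigmoid(sum(p * w for p, w in zip(point, weights)) + bias)

def make_examples(rng, count):
    """Made-up task: label a point 1.0 if x + y > 1, otherwise 0.0."""
    examples = []
    for _ in range(count):
        x, y = rng.random(), rng.random()
        examples.append(((x, y), 1.0 if x + y > 1 else 0.0))
    return examples

def train(examples, rng, epochs=200, learning_rate=0.5):
    weights = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # start with random weights
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(epochs):                    # go over all the examples many times
        for point, target in examples:
            guess = predict(point, weights, bias)
            delta = (guess - target) * guess * (1.0 - guess)
            weights = [w - learning_rate * delta * p for w, p in zip(weights, point)]
            bias -= learning_rate * delta
    return weights, bias

def accuracy(examples, weights, bias):
    """Fraction of examples where the guess (above 0.5 means 1) matches the label."""
    correct = sum((predict(p, weights, bias) > 0.5) == (t == 1.0) for p, t in examples)
    return correct / len(examples)

rng = random.Random(42)
train_set = make_examples(rng, 200)
test_set = make_examples(rng, 100)   # new points, never used during training
weights, bias = train(train_set, rng)
print("train accuracy:", accuracy(train_set, weights, bias))
print("test accuracy:", accuracy(test_set, weights, bias))
```

Comparing the two accuracy numbers is a simple way to check whether the network learned the general pattern or only memorized its training examples.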

There are different ways neural networks learn:

- Supervised Learning: This is like learning with a teacher. You give the network examples with the correct answers already provided (like pictures labeled "cat" or "not cat").

- Unsupervised Learning: Here, you give the network data without telling it the answers. The network tries to find patterns or group the data on its own (like finding similar pictures and putting them into clusters).

- Reinforcement Learning: This is like learning by trial and error, getting rewards for good actions and penalties for bad ones (like teaching a computer to play a video game by rewarding it when it scores points).

Types

Neural networks come in many different shapes and sizes, designed for different tasks:

- Feedforward Networks: These are the simplest type, where information flows only in one direction, from the input layer, through hidden layers, to the output layer.

- Deep Neural Networks: These are networks with many hidden layers. Having more layers allows them to learn more complex patterns. This is what "deep learning" refers to.

- Convolutional Neural Networks (CNNs): These are especially good at working with images. They have special layers that help them recognize patterns like edges, shapes, and textures, no matter where they appear in the image.

- Recurrent Neural Networks (RNNs): These networks have connections that loop back, allowing them to remember information from previous steps. This makes them great for things like understanding sequences, like words in a sentence or notes in music.

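- Transformers: A newer type of network, first introduced in 2017, that has become very popular, especially for understanding and generating language. They use a technique called "attention" to work out which parts of the input matter most to each other, such as which earlier words in a sentence help explain the current word.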
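The special ideas behind CNNs, RNNs, and Transformers can each be sketched in a few lines of Python. The three parts of the sketch are separate:

- **CNN:** one small filter slides over a tiny image. The filter values here are hand-picked; real CNNs learn them during training.
- **RNN:** a recurrent step feeds its own result back in as memory. The weights `w_input` and `w_memory` are made-up single numbers; real RNNs use many learned weights.
- **Transformer:** "scaled dot-product attention" runs for one query. Real Transformers compute the queries, keys, and values from learned weights and run many attention steps side by side.

All names and numbers are hypothetical and for illustration only.

```python
import math

# --- CNN: slide one small filter over an image ---
def convolve2d(image, kernel):
    """Sum element-wise products at every position where the kernel fits.
    (Strictly this is cross-correlation, which is what CNN layers usually compute.)"""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(len(image[0]) - kw + 1)
        ]
        for r in range(len(image) - kh + 1)
    ]

edge_filter = [[-1, 1], [-1, 1]]                  # responds to dark-to-bright vertical edges
image_a = [[0, 0, 1, 1, 1, 1] for _ in range(4)]  # edge near the left
image_b = [[0, 0, 0, 0, 1, 1] for _ in range(4)]  # same kind of edge, further right
print(convolve2d(image_a, edge_filter)[0])  # [0, 2, 0, 0, 0]
print(convolve2d(image_b, edge_filter)[0])  # [0, 0, 0, 2, 0]

# --- RNN: the hidden state loops back as memory ---
def rnn_states(sequence, w_input=0.8, w_memory=0.5):
    hidden, states = 0.0, []
    for x in sequence:
        hidden = math.tanh(w_input * x + w_memory * hidden)  # mix the new input with the old memory
        states.append(hidden)
    return states

# Same items in a different order give a different final memory.
print(rnn_states([1, 0, 0])[-1], rnn_states([0, 0, 1])[-1])

# --- Transformer: scaled dot-product attention for one query ---
def attention(query, keys, values):
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]    # softmax: turn scores into weights summing to 1
    total = sum(exps)
    weights = [e / total for e in exps]
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, mixed

weights, mixed = attention([2.0, 0.0],
                           keys=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                           values=[[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]])
print(weights)  # the 1st and 3rd inputs get most of the attention
```

In the CNN part, the same filter finds the edge wherever it appears and reports where it found it. In the RNN part, the effect of the first item fades but does not vanish after later steps.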

History

Scientists and mathematicians started thinking about artificial neurons and networks way back in the 1940s and 1950s.

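In the late 1950s, Frank Rosenblatt created one of the first working artificial neural networks, called the Perceptron. It caused a lot of excitement. However, early networks had serious limitations (for example, a Perceptron with only a single layer cannot learn some simple patterns, such as telling whether exactly one of two switches is on), and research slowed down for a while.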

A key breakthrough in the 1970s and 1980s was the development of the backpropagation algorithm, which made it much easier to train networks with multiple layers.

In the 2000s and 2010s, with faster computers (especially using graphics cards, or GPUs) and access to huge amounts of data, deep neural networks started achieving amazing results in areas like image recognition and speech recognition. This led to the "deep learning" revolution we see today.

In recent years, newer kinds of models, such as Transformers and diffusion models, have pushed the boundaries even further, leading to powerful AI systems that can generate text, images, and more.

What can they do?

Neural networks are used in tons of cool applications today:

- Recognizing Images: Identifying objects in photos, facial recognition, helping self-driving cars "see."

- Understanding Speech: Voice assistants like Siri and Alexa, transcribing audio.

- Working with Language: Translating languages, writing text, summarizing articles, chatbots.

- Playing Games: AI systems that can beat human champions in games like chess and Go.

- Making Predictions: Forecasting weather, predicting stock prices, recommending movies or products you might like.

- In Medicine: Helping doctors analyze medical images (like X-rays), predicting patient outcomes, assisting in drug discovery.

- Creating Content: Generating realistic images, writing music, helping create video game characters' behaviors.

- In Science: Discovering new materials, simulating complex systems.

- Cybersecurity: Detecting fraud, identifying malicious online activity.

Challenges

While neural networks are powerful, they also have challenges:

- Lots of Data: They often need huge amounts of data to learn effectively.

- "Black Box" Problem: Sometimes it's hard to understand exactly why a neural network made a particular decision, because the knowledge is spread across millions of connections and weights.

- Bias: If the data used to train a network is unfair or biased (for example, if it mostly contains images of one group of people), the network can learn and repeat those biases. Scientists are working hard to make AI fairer.

- Computing Power: Training very large neural networks requires a lot of computing power.

Despite these challenges, neural networks are an exciting and rapidly developing area of technology that is changing how computers can learn and interact with the world.
