Perceptron facts for kids

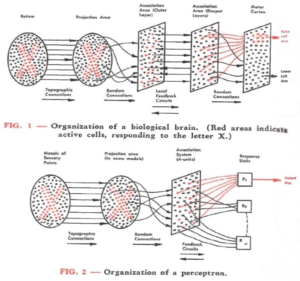

The perceptron is a simple method, called an algorithm, that helps computers learn to make "yes" or "no" decisions. Think of it like a tiny, basic "brain cell" for a computer. It's used in machine learning to sort things into two groups. For example, it could learn to tell whether a picture shows a cat or not. It does this by looking at different features of the input (like numbers representing an image) and giving each feature a "weight" that says how important it is. It multiplies each feature by its weight, adds the results together, and answers "yes" if the total is big enough.
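Here is a minimal Python sketch that makes the "weights" idea concrete. It shows how a perceptron that has already been trained turns features into a yes/no answer. The function name `perceptron_predict`, the three cat features, and all the numbers are made up for illustration. A real perceptron would learn its weights from examples instead of having them typed in.

```python
def perceptron_predict(features, weights, bias):
    """Return 1 ("yes") if the weighted sum plus the bias is above zero, else 0 ("no")."""
    total = bias
    for x, w in zip(features, weights):
        total += x * w  # each feature counts as much as its weight says
    return 1 if total > 0 else 0

# Hypothetical features for "is this a cat?": [has_whiskers, has_pointy_ears, barks]
weights = [2.0, 1.5, -3.0]  # made-up importance of each feature (barking counts against)
bias = -2.5                 # made-up value that sets how big the total must be

print(perceptron_predict([1, 1, 0], weights, bias))  # → 1 (whiskers and pointy ears)
print(perceptron_predict([1, 0, 1], weights, bias))  # → 0 (whiskers, but it barks)
```

A negative weight lets a feature push the answer toward "no". The bias acts like the "big enough" line the total has to cross.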


How the Perceptron Started

The basic idea of an artificial neuron (like a computer brain cell) was first thought up in 1943 by two scientists, Warren McCulloch and Walter Pitts.

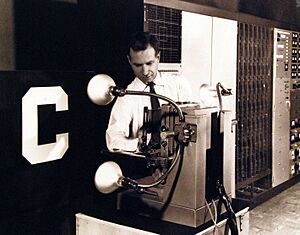

Years later, in 1957, a scientist named Frank Rosenblatt at the Cornell Aeronautical Laboratory started working on the perceptron. He first tested his ideas using a big computer called an IBM 704.

Then, he got money from the United States Office of Naval Research to build a special computer just for the perceptron. This machine was called the Mark I Perceptron. It was first shown to the public on June 23, 1960. This machine was part of a secret project to help people who looked at aerial photos understand what they were seeing.

Rosenblatt wrote a detailed paper about the perceptron in 1958. He explained that his perceptron had three main parts, or "units":

- AI units: These were for "projection," meaning they took in the raw information.

- AII units: These were for "association," where the main processing happened.

- R units: These were for "response," giving the final "yes" or "no" answer.

His project received funding from 1957 all the way to 1970.

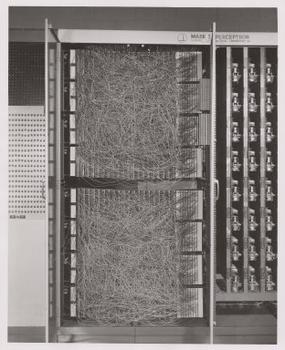

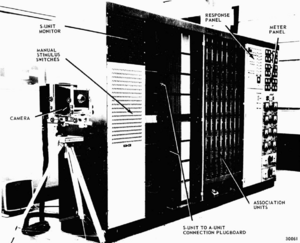

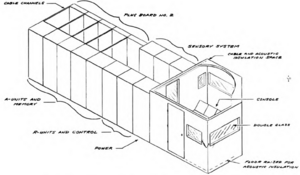

The Mark I Perceptron Machine

The perceptron was designed to be a physical machine, not just a computer program. Even though it was first tested on an IBM computer, it was later built as the Mark I Perceptron. This machine was made specifically for image recognition, meaning it could "see" and identify things in pictures. Today, you can find the Mark I Perceptron at the National Museum of American History in the Smithsonian.

The Mark I Perceptron had three main layers, like different parts of a brain:

- Sensory Units (S-units): This layer was like the "eyes" of the machine. It had 400 light sensors arranged in a 20x20 grid. Each sensor could connect to up to 40 "association units."

- Association Units (A-units): This was a hidden layer with 512 perceptrons. These units did the main "thinking" or processing.

- Response Units (R-units): This was the output layer with eight perceptrons. These units gave the final answer or decision.

Rosenblatt called this three-layered system an alpha-perceptron.

The S-units were connected to the A-units in a random way. This was done to make sure there was no special bias in how the perceptron learned. The connections here were fixed and did not change. Rosenblatt believed that the human eye connected to the brain in a similar random way.
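The fixed random wiring can be imitated in a few lines of Python. This is only a toy sketch built on assumptions. The layer sizes (400 sensors, 512 A-units) come from the article. The number of sensors per A-unit (`INPUTS_PER_A`), the random +1/-1 connection signs, and the "fire if the sum is above zero" rule are illustrative guesses, not the Mark I's real circuit details. The wiring is chosen randomly once and then never changes, which is the point the paragraph makes.

```python
import random

N_SENSORS = 400    # S-units: a 20 x 20 grid of light sensors (from the article)
N_ASSOC = 512      # A-units (from the article)
INPUTS_PER_A = 10  # assumption: how many sensors each A-unit is wired to

rng = random.Random(1960)  # fixed seed, so the random wiring is reproducible

# Wire each A-unit to a random set of distinct sensors, each with a random +1 or -1
# sign (the sign scheme is an assumption). This wiring is built once and never trained.
WIRING = [
    [(s, rng.choice((1, -1))) for s in rng.sample(range(N_SENSORS), INPUTS_PER_A)]
    for _ in range(N_ASSOC)
]

def association_layer(image):
    """image: 400 values, 1 = lit sensor, 0 = dark. Returns 512 A-unit outputs (0 or 1)."""
    return [
        1 if sum(sign * image[s] for s, sign in connections) > 0 else 0
        for connections in WIRING
    ]

# Hypothetical input: light up the left half of the 20 x 20 grid.
image = [1 if (i % 20) < 10 else 0 for i in range(N_SENSORS)]
a = association_layer(image)
print(len(a), sum(a))  # 512 A-unit outputs, and how many of them fired
```

Only the connections after this layer would be adjusted during learning. Everything computed here stays the same no matter how much the machine is trained.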

The A-units were connected to the R-units. These connections had adjustable "weights" that were stored in special devices called potentiometers. When the machine learned, small electric motors would change these weights.
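The Mark I adjusted its weights with motors. In software, the same "learn from your mistakes" idea is usually written as the perceptron learning rule. Below is a minimal Python sketch that assumes the common textbook form of that rule: add the error times the input to each weight. It is not a description of the Mark I's actual circuitry. The names `predict` and `train`, the learning rate `lr`, and the AND-gate training data are illustrative choices of our own.

```python
def predict(x, w, b):
    # Step function: 1 if the weighted sum plus bias is above zero, otherwise 0.
    return 1 if sum(xi * wi for xi, wi in zip(x, w)) + b > 0 else 0

def train(samples, n_features, lr=1.0, max_epochs=100):
    w = [0.0] * n_features  # weights start at zero
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(x, w, b)  # +1, 0 or -1
            if error != 0:
                mistakes += 1
                # Nudge each weight in the direction that reduces the error,
                # a bit like a motor turning a potentiometer a little.
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
        if mistakes == 0:
            break  # a full pass with no mistakes: every example is now correct
    return w, b

# "AND": answer yes only when both inputs are 1.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train(AND, n_features=2)
print([predict(x, w, b) for x, _ in AND])  # → [0, 0, 0, 1]
```

Weights only change when the answer is wrong. AND can be split by a single straight line, so the loop eventually makes a full pass with no mistakes and stops.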

From 1960 to 1964, the Central Intelligence Agency (CIA) even studied using the Mark I Perceptron to recognize military targets, like planes and ships, in photos taken from the air.

More Perceptron Ideas (1962)

Rosenblatt wrote a book in 1962 called Principles of Neurodynamics. In this book, he shared his experiments with many different types of perceptron machines.

Some of his ideas included:

- "Cross-coupling": Connections between units in the same layer.

- "Back-coupling": Connections from later layers back to earlier layers.

- Four-layer perceptrons: Machines with more layers that could learn more complex things.

- Time-delays: Adding delays to help perceptrons process information that came in a sequence, like sounds.

- Analyzing audio: Using perceptrons to understand sounds instead of just images.

The Mark I Perceptron machine was moved from Cornell to the Smithsonian Museum in 1967.

The Perceptrons Book (1969)

Even though perceptrons seemed very promising at first, it was soon discovered that simple perceptrons couldn't learn to recognize many types of patterns. This problem caused research in neural networks to slow down for many years. People didn't realize yet that adding more layers to a perceptron (creating a multilayer perceptron) would make it much more powerful.

A single-layer perceptron can only learn patterns that can be separated by a single straight line. Imagine trying to separate red dots from blue dots on a graph with just one line. If the dots are mixed up in a complex way, one line won't be enough.
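In math terms, the "single straight line" is the set of points where the weighted sum is exactly zero. Take two inputs $x_1, x_2$ with weights $w_1, w_2$ and a bias $b$ (these symbol names are our own, not from the article). A single-layer perceptron answers:

$$
\text{answer} =
\begin{cases}
\text{yes} & \text{if } w_1 x_1 + w_2 x_2 + b > 0 \\
\text{no} & \text{otherwise}
\end{cases}
\qquad \text{with dividing line } w_1 x_1 + w_2 x_2 + b = 0
$$

For example, with $w_1 = w_2 = 1$ and $b = -1.5$ (values picked by hand), the point $(1,1)$ gives $0.5$, which means "yes". The points $(0,0)$, $(0,1)$ and $(1,0)$ give $-1.5$, $-0.5$ and $-0.5$, which all mean "no". So the line $x_1 + x_2 = 1.5$ separates "both inputs on" from everything else.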

In 1969, Marvin Minsky and Seymour Papert wrote a famous book called Perceptrons. The book showed that a single-layer perceptron could not solve a problem called the XOR ("exclusive or") function. XOR answers "yes" when exactly one of its two inputs is on, and "no" when both are off or both are on. On a graph, the two "yes" points sit on opposite corners of a square, so no single straight line can separate them from the two "no" points.
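A short worked argument shows why no single line can work for XOR. It assumes the usual single-layer rule: answer "yes" exactly when $w_1 x_1 + w_2 x_2 + b > 0$, where $w_1, w_2$ are weights and $b$ is a bias (our own symbol names). The four XOR examples would need all of these to be true at once:

$$
\begin{aligned}
(0,0) \to \text{no}: &\quad b \le 0 \\
(1,1) \to \text{no}: &\quad w_1 + w_2 + b \le 0 \\
(0,1) \to \text{yes}: &\quad w_2 + b > 0 \\
(1,0) \to \text{yes}: &\quad w_1 + b > 0
\end{aligned}
$$

Adding the first two lines gives $w_1 + w_2 + 2b \le 0$. Adding the last two gives $w_1 + w_2 + 2b > 0$. Both cannot be true, so no choice of weights and bias solves XOR with one layer.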

Many people misunderstood the book and thought it meant that *no* type of perceptron, even with multiple layers, could solve complex problems. However, Minsky and Papert actually knew that multi-layer perceptrons *could* solve the XOR problem. Still, this misunderstanding caused a big drop in interest and funding for neural network research. It took about ten more years, until the 1980s, for neural network research to become popular again.
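This small Python sketch shows why adding a layer helps: a two-layer perceptron can compute XOR. The weights are hand-picked, hypothetical values chosen for illustration (nothing here was learned), and the helper names `step`, `neuron`, and `xor_two_layer` are our own. Each hidden unit draws one straight line, and the output unit combines the two answers.

```python
def step(total):
    # Threshold: "yes" (1) if the total is above zero, otherwise "no" (0).
    return 1 if total > 0 else 0

def neuron(inputs, weights, bias):
    # One ordinary single-layer perceptron.
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)

def xor_two_layer(x1, x2):
    # Hidden layer: two perceptrons, each drawing its own straight line.
    h_or = neuron((x1, x2), (1, 1), -0.5)     # "at least one input is on"
    h_nand = neuron((x1, x2), (-1, -1), 1.5)  # "not both inputs are on"
    # Output layer: says yes only when both hidden units say yes (an AND).
    return neuron((h_or, h_nand), (1, 1), -1.5)

print([xor_two_layer(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # → [0, 1, 1, 0]
```

"At least one on" AND "not both on" is exactly "exactly one on", which is XOR.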

Later Developments

Rosenblatt kept working on perceptrons even as funding decreased. His last big project was a machine called Tobermory, built between 1961 and 1967 for speech recognition. It was so big it filled an entire room! It had four layers and used 12,000 "weights" to learn. However, by the time it was finished, computers could simulate perceptrons much faster than these special-purpose machines. Rosenblatt sadly passed away in a boating accident in 1971.

A more advanced version called the kernel perceptron had actually been introduced even earlier, in 1964, by Aizerman and his colleagues. Later, Yoav Freund and Robert Schapire (1998), and then Mehryar Mohri and Afshin Rostamizadeh (2013), proved mathematical guarantees about how well the perceptron learns, even when the data cannot be perfectly separated by a straight line.

The perceptron is a very simple model of a biological neuron (a real brain cell). While real brain cells are much more complex, research shows that a simple perceptron-like model can still help us understand some basic behaviors of real neurons.

Since 2002, perceptron training has become very popular in the field of natural language processing. This is where computers try to understand human language. Perceptrons are used for tasks like part-of-speech tagging (identifying if a word is a noun, verb, etc.) and syntactic parsing (understanding the grammar of a sentence). They are also used for very large machine learning problems that involve many computers working together.
