Computer vision facts for kids

Computer vision is a super interesting part of computer science that teaches computers to "see" and understand images and videos, much like humans do. It's all about making computers smart enough to do tasks that usually need human eyes.

Imagine a computer looking at a picture or a video. Computer vision helps it figure out what's in the image, where things are, and what's happening. This involves getting the image, making sense of it, and then using that information to make decisions or take action. It's like giving computers their own set of eyes and a brain to understand what they see!

As a science, computer vision explores the ideas behind artificial systems that learn from images. These images can be anything from video clips to pictures from many cameras, or even special scans from medical scanners. As a technology, computer vision uses these ideas to build real systems that can "see."

Some cool things computer vision can do include rebuilding scenes in 3D, spotting events, tracking moving objects, recognizing things, guessing how objects are positioned, learning new things, and even fixing blurry images.

Contents

How Did Computer Vision Start?

Computer vision began in the late 1960s at universities that were leaders in artificial intelligence (AI). The goal was to make computers see like people, hoping this would help robots act smarter. In 1966, some thought it could be done in just a summer project: attach a camera to a computer and have it "describe what it saw."

What made computer vision different from simple digital image processing back then was the aim to understand the 3D world from flat images. Researchers wanted computers to build a full picture of a scene. In the 1970s, many basic computer vision ideas were born. These included finding edges in pictures, labeling lines, understanding 3D shapes, and tracking movement.

The 1980s brought more serious math and measurements to computer vision. Ideas like scale-space (looking at images at different levels of detail) and figuring out shapes from shadows or textures became important. Scientists also realized that many of these math problems could be solved using similar methods.

By the 1990s, some topics became very popular. Understanding how cameras work and how to rebuild 3D scenes from multiple pictures became much better. This led to ways of creating 3D models from many images. Also, methods like graph cuts were used to divide images into different parts. This decade also saw the first practical use of statistical learning to recognize faces, like with Eigenfaces. Towards the end of the 1990s, computer vision and computer graphics started working together more. This led to cool things like changing images, stitching photos together to make panoramas, and early light-field rendering.

What Fields Are Related to Computer Vision?

Computer vision is connected to many other exciting fields:

Artificial Intelligence

Areas of artificial intelligence (AI) help robots plan how to move around and understand their surroundings. A computer vision system can act like the robot's "eyes," giving it high-level information about its environment.

AI and computer vision share topics like pattern recognition and learning. Because of this, computer vision is sometimes seen as a part of AI or the broader field of computer science.

Physics

Most computer vision systems use image sensors that detect light, usually visible or infrared. These sensors are designed using quantum physics. Physics also explains how light interacts with surfaces and how optics (lenses) work in cameras. Even complex sensors need quantum mechanics to fully understand how they form images. Computer vision can also help solve physics problems, like tracking fluid motion.

Neuroscience

Neurobiology is the study of how our brains and eyes work to see. Scientists have spent over a century studying how humans and animals process visual information. This has given us a good, though complex, idea of how "real" vision systems work. This research has inspired a part of computer vision where artificial systems try to copy how biological systems process information. Also, some learning methods in computer vision, like neural networks and deep learning, were inspired by biology.

Some computer vision research is very similar to studying biological vision. Just as AI research is linked to human consciousness, computer vision is linked to how we use stored knowledge to understand what we see. Biological vision studies the natural processes behind seeing in humans and animals. Computer vision, on the other hand, studies the processes used in computer software and hardware for artificial vision. Sharing ideas between these fields has helped both grow.

Signal Processing

Signal processing is another related field. Many ways to process one-variable signals (like sound) can be used for two-variable signals (like images) in computer vision. However, because images are special, computer vision has many unique methods that don't exist for one-variable signals. This makes it a unique part of signal processing.

Mathematics

Many computer vision methods are based on statistics, optimization (finding the best solution), or geometry. A big part of the field is also about making these methods work in real life, combining software and hardware, and making them faster without losing quality.

Other Related Fields

The fields most closely related to computer vision are image processing, image analysis, and machine vision. They often use similar techniques and have overlapping uses. This means they are almost like different names for the same field. However, research groups and companies often present themselves as belonging to one specific field.

- Image processing and image analysis usually focus on 2D images. They deal with changing one image into another, like making it brighter, removing noise, or rotating it. This means they don't necessarily try to understand what's in the image.

- Computer vision includes understanding 3D scenes from 2D images. It tries to rebuild the 3D structure or other information from one or more images. Computer vision often makes assumptions about what's in the scene.

- Machine vision is about using imaging technology for automatic checks, process control, and guiding robots in factories. It focuses on practical uses, mainly in manufacturing. This means it often combines image sensors and control systems to guide a robot. It also emphasizes real-time processing and often works in controlled lighting conditions, which can allow for different algorithms.

- There's also a field called imaging that mainly focuses on creating images, but sometimes also on processing and analyzing them. For example, medical imaging involves a lot of work on analyzing image data for medical uses.

- Finally, pattern recognition uses different methods to find information in signals, often using statistics and artificial neural networks. A big part of this field is applying these methods to image data.

Photogrammetry also overlaps with computer vision, for example, stereophotogrammetry versus stereo computer vision.

What Are Some Uses of Computer Vision?

Computer vision is used in many cool ways, from checking bottles on a factory line to helping robots understand the world. The fields of computer vision and machine vision have a lot in common. Computer vision provides the main technology for analyzing images automatically, which is used in many areas. Machine vision usually means combining this automatic image analysis with other methods for industrial checks and robot guidance.

In many computer vision uses, computers are programmed for a specific task. But now, methods where computers learn are becoming very common. Here are some examples of where computer vision is used:

- Automatic checks: For example, in factories, to inspect products for flaws.

- Helping humans: Like systems that identify different animal species.

- Controlling things: Such as guiding an industrial robot.

- Spotting events: For example, in security cameras or counting people.

- Interacting with computers: As a way for humans to control devices.

- Making models: Like analyzing medical images or creating maps of land.

- Navigation: Helping self-driving cars or robots find their way.

- Organizing information: For example, sorting large collections of images and videos.

Medical Computer Vision

One of the most important uses is in medicine, often called medical image processing. This area focuses on getting information from images to help doctors diagnose patients. These images can be from microscopes, X-rays, angiograms, ultrasound scans, and tomography scans. For example, computer vision can help find tumours or other unhealthy changes. It can also measure organ sizes or blood flow. This area also helps medical research by giving new information, like about brain structure or how well treatments are working. It can also make images clearer for doctors, like reducing noise in X-rays.

Industrial Applications (Machine Vision)

Another big use is in industry, often called machine vision. Here, information is taken from images to help with manufacturing. One example is quality control, where products are automatically checked for defects. Another is measuring the position of parts for a robot arm to pick up. Machine vision is also used a lot in farming to remove bad food items from bulk materials, a process called optical sorting.

Military Uses

Military uses are probably one of the biggest areas for computer vision. Obvious examples include finding enemy soldiers or vehicles and guiding missiles. More advanced missile systems can go to a general area, and then pick a specific target based on images they see there. Modern military ideas, like "battlefield awareness," mean that many sensors, including image sensors, give a lot of information about a combat scene. This helps leaders make smart decisions. Automatic processing helps reduce the huge amount of data and combines information from different sensors to make it more reliable.

Autonomous Vehicles

One of the newer uses is in autonomous vehicles, which include underwater vehicles, land vehicles (like small robots, cars, or trucks), and drones. The level of autonomy can range from fully self-driving vehicles to ones where computer vision helps a driver or pilot. Fully self-driving vehicles often use computer vision to know where they are, to create a map of their surroundings (SLAM), and to spot obstacles. It can also be used to find specific things, like a drone looking for forest fires. Examples of helping systems include obstacle warnings in cars and systems for planes to land by themselves. Several car makers have shown self-driving car systems, but this technology isn't fully ready for everyone yet. There are many military self-driving vehicles, from advanced missiles to drones for scouting or guiding missiles. Space exploration is already using self-driving vehicles with computer vision, like NASA's Mars Exploration Rover and ESA's ExoMars Rover.

Other Areas

- Helping create visual effects for movies and TV, like camera tracking.

- Surveillance systems.

What Are Typical Computer Vision Tasks?

Each of the uses above involves different computer vision tasks. These are specific measurement or processing problems that can be solved in many ways. Here are some common computer vision tasks:

Recognition

The main problem in computer vision is figuring out if an image contains a specific object, feature, or activity. Different types of recognition problems include:

- Object recognition (also called object classification): Recognizing one or more specific objects or types of objects, usually also finding their 2D position in the image or 3D position in the scene. Apps like Blippar and Google Goggles show this.

- Identification: Recognizing a specific item or person. Examples include identifying a person's face or fingerprint, recognizing handwritten numbers, or identifying a specific car.

- Detection: Scanning image data for a specific condition. Examples include finding possible abnormal cells in medical images or spotting a car in an automatic road toll system. Simple and fast detection methods are sometimes used to find small interesting areas in an image, which can then be looked at more closely by more complex techniques.

Today, the best ways to do these tasks use convolutional neural networks. A good example of what they can do is the ImageNet Large Scale Visual Recognition Challenge. This is a test for object classification and detection with millions of images and hundreds of object types. These networks are now almost as good as humans at these tests. However, the best methods still struggle with very small or thin objects, like a tiny ant on a flower stem. They also have trouble with images that have been changed with filters, which is common with modern digital cameras. Humans, on the other hand, rarely have trouble with these. But humans are not good at telling apart very similar things, like different breeds of dogs or types of birds, which these networks can do easily.

Some special tasks based on recognition are:

- Content-based image retrieval: Finding all images in a large group that have specific content. You can search by showing a similar image (e.g., "show me all images like this one") or by typing what you want (e.g., "show me all images with many houses, taken in winter, and no cars").

- Pose estimation: Figuring out an object's position or direction relative to the camera. An example is helping a robot arm pick up objects from a conveyor belt in a factory.

- Optical character recognition (OCR): Identifying letters and numbers in pictures of printed or handwritten text. The goal is usually to turn the text into a format that's easier to edit or search, like ASCII.

- 2D Code reading: Reading codes like data matrix and QR codes.

- Facial recognition

- Shape Recognition Technology (SRT) in people counter systems, which tells human shapes (head and shoulders) apart from other objects.

Scene Reconstruction

Given one or usually more images of a scene, or a video, scene reconstruction aims to create a 3D model of that scene. At its simplest, the model can be just a group of 3D points. More advanced methods create a full 3D surface model. New 3D imaging technologies that don't need movement or scanning, along with new processing methods, are making this field advance quickly. 3D sensors can capture 3D images from many angles. There are now ways to combine multiple 3D images into point clouds and 3D models.

Image Restoration

The goal of image restoration is to remove unwanted things like noise (from the camera sensor, motion blur, etc.) from images. The simplest way to remove noise is using different types of filters, like low-pass filters or median filters. More advanced methods use a model of what local image structures should look like, which helps tell them apart from noise. By first looking at the image for local structures like lines or edges, and then controlling the filtering based on this local information, you usually get better noise removal than with simpler methods.

An example in this field is inpainting, which fills in missing or damaged parts of an image.

How Do Computer Vision Systems Work?

How a computer vision system is set up depends a lot on what it's used for. Some systems are stand-alone apps that solve a specific problem, while others are part of a bigger system that might also control robots, manage databases, or interact with people. The way a system is built also depends on whether its functions are fixed or if it can learn or change while it's working. Many functions are unique to each use. However, there are common steps found in many computer vision systems:

- Image acquisition: A digital image is created by one or more image sensors. These can be light-sensitive cameras, range sensors, tomography devices, radar, or ultrasound cameras. Depending on the sensor, the image data can be a normal 2D picture, a 3D volume, or a sequence of images. The pixel values usually show light intensity (gray or color images), but they can also show things like depth, how much sound or electromagnetic waves are absorbed or reflected, or nuclear magnetic resonance.

- Pre-processing: Before a computer vision method can be used to get information from image data, the data often needs to be prepared. This ensures it meets the method's requirements. Examples include:

* Re-sampling to make sure the image's coordinate system is correct. * Reducing noise so that sensor noise doesn't create false information. * Improving contrast to make sure important information can be seen. * Using scale space to make image structures clearer at the right levels.

- Feature extraction: Image features of different complexities are pulled from the image data. Common examples of these features are:

* Lines, edges, and ridges. * Specific interest points like corners, blobs, or single points. More complex features can be related to texture, shape, or movement.

- Detection/segmentation: At some point, the system decides which image points or areas are important for further processing. Examples include:

* Choosing a specific group of interest points. * Separating one or more image regions that contain a specific object of interest. * Dividing an image into layers, like foreground, groups of objects, single objects, or important parts of objects.

- High-level processing: At this stage, the input is usually a small amount of data, like a set of points or an image area that is thought to contain a specific object. The rest of the processing deals with things like:

* Checking if the data matches what the system expects based on its models and the application. * Estimating specific details, like an object's position or size. * Image recognition: Classifying a detected object into different groups. * Image registration: Comparing and combining two different views of the same object.

- Decision making: Making the final choice needed for the application, for example:

* Pass/fail in automatic inspection. * Match/no-match in recognition. * Flagging something for a human to review in medical, military, security, and recognition uses.

What Hardware Is Used?

There are many types of computer vision systems, but they all have basic parts: a power source, at least one device to capture images (like a camera), a processor, and cables or wireless connections for control and communication. A practical system also needs software and a screen to monitor it. Vision systems for indoor spaces, like most industrial ones, have a lighting system and might be in a controlled environment. Plus, a complete system includes many accessories like camera stands, cables, and connectors.

While old TV and consumer video systems worked at 30 frames per second, new advances in digital signal processing and consumer graphics hardware have made it possible to capture, process, and display images very quickly for real-time systems, sometimes hundreds or thousands of frames per second. For robots, fast, real-time video systems are super important and can often make the processing for certain tasks simpler. When combined with a fast projector, quick image capture allows for 3D measurements and tracking features.

As of 2016, special processors called vision processing units (VPUs) are appearing. They work alongside regular CPUs and GPUs to handle computer vision tasks.

Images for kids

-

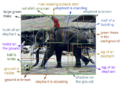

Learning 3D shapes has been a challenging task in computer vision. Recent advances in deep learning has enabled researchers to build models that are able to generate and reconstruct 3D shapes from single or multi-view depth maps or silhouettes seamlessly and efficiently

-

Artist's concept of Curiosity, an example of an uncrewed land-based vehicle. Notice the stereo camera mounted on top of the rover.

-

An 2020 model iPad Pro with a LiDAR sensor

See also

In Spanish: Visión artificial para niños

In Spanish: Visión artificial para niños

| Laphonza Butler |

| Daisy Bates |

| Elizabeth Piper Ensley |