Facial recognition system facts for kids

A facial recognition system is a special computer program that can find and identify human faces in digital pictures or video clips. It works by carefully looking at and measuring unique features on a person's face. This technology is often used to check if someone is who they say they are, like when you unlock your smartphone with your face.

Facial recognition systems started being developed in the 1960s. Since then, they have become much more common, appearing in everyday devices like phones and even in robotics. Because these systems measure parts of a human body, they are a kind of biometrics, just like fingerprint or iris recognition. Even though facial recognition is usually less accurate than iris or fingerprint scans, it's very popular because you don't have to touch anything to use it. You can find it in advanced human–computer interaction (how people use computers), video cameras that watch public places, and even in systems that help organize images automatically.

Today, governments and private companies all over the world use facial recognition systems. How well they work varies, and some systems have even been stopped because they weren't effective enough. Using facial recognition has also caused some arguments. People worry that these systems might invade privacy, sometimes make mistakes in identifying people, and could lead to unfair treatment, such as gender bias or racial profiling. These concerns have led some cities in the United States to ban the use of facial recognition systems. Because of these worries, in 2021 a big company called Meta (which owns Facebook) announced it would stop using its Facebook facial recognition system and delete the face data of over a billion users. This was a huge change in how the technology is used.


How Facial Recognition Started

Computers started trying to recognize human faces in the 1960s. Early pioneers like Woody Bledsoe, Helen Chan Wolf, and Charles Bisson worked on this. Their first project was called "man-machine" because a human had to help the computer. A person would point out specific spots on a photo of a face, like the middle of the eyes or the hairline, using a special tablet. The computer then used these points to calculate 20 different distances, like the width of the mouth or eyes. This helped build a database of face measurements. The computer could then compare new photos to this database to find possible matches.
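The idea of comparing a new photo against stored distances can be sketched in a few lines of Python. This is a minimal sketch, not the original 1960s program: the landmark names, the four chosen distances, and the rule of dividing everything by the gap between the eyes (so the size of the photo doesn't matter) are all assumptions made for illustration.

```python
import math

# A face is a set of hand-marked points (x, y). Landmark names are hypothetical.
Face = dict[str, tuple[float, float]]

# Hypothetical pairs of points to measure. The 1960s system used about 20
# distances; four are enough to show the idea.
PAIRS = [
    ("left_eye", "right_eye"),
    ("mouth_left", "mouth_right"),
    ("left_eye", "mouth_left"),
    ("right_eye", "mouth_right"),
]


def measurements(face: Face) -> list[float]:
    """Turn landmark points into distances, divided by the gap between the eyes."""
    distances = [math.dist(face[a], face[b]) for a, b in PAIRS]
    eye_gap = distances[0]
    # Dividing by the eye gap means a bigger photo gives the same numbers.
    return [d / eye_gap for d in distances]


def best_match(new_face: Face, database: dict[str, Face]) -> str:
    """Return the stored name whose measurements are closest to the new face."""
    target = measurements(new_face)

    def difference(name: str) -> float:
        return math.dist(target, measurements(database[name]))

    return min(database, key=difference)


database = {
    "Alice": {"left_eye": (30, 40), "right_eye": (70, 40),
              "mouth_left": (38, 80), "mouth_right": (62, 80)},
    "Bob": {"left_eye": (30, 40), "right_eye": (70, 40),
            "mouth_left": (30, 90), "mouth_right": (70, 90)},
}
# The same face as Alice, photographed at twice the size.
new_photo = {"left_eye": (60, 80), "right_eye": (140, 80),
             "mouth_left": (76, 160), "mouth_right": (124, 160)}
print(best_match(new_photo, database))  # Alice
```

Because every distance is divided by the eye gap, a photo taken twice as large still produces the same measurements, so it is matched to Alice rather than Bob.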

In 1970, Takeo Kanade showed off a system that could find facial features like the chin and calculate distances between them all by itself, without human help. Even though it sometimes struggled to identify features perfectly, people became very interested. In 1977, Kanade wrote the first detailed book about facial recognition technology.

In 1993, the Defense Advanced Research Projects Agency (DARPA) and the Army Research Laboratory (ARL) started a program called FERET. Their goal was to create "automatic face recognition" that could help security, intelligence, and law enforcement. They tested many systems and found that some could recognize faces well in clear photos taken in controlled settings. These tests led to three US companies selling automated facial recognition systems.

After the FERET tests, some Department of Motor Vehicles (DMV) offices in states like West Virginia and New Mexico were among the first to use facial recognition. They used it to stop people from getting multiple driver's licenses with different names. Driver's licenses were a common form of photo ID. As DMV offices updated their technology and created digital photo databases, they could use facial recognition to check new license photos against existing ones. This made DMV offices one of the first big places where Americans saw facial recognition being used for identification.

In 1999, Minnesota started using a facial recognition system called FaceIT. It was part of a system that helped police and courts track criminals across the state using mug shots.

Before the 1990s, facial recognition mostly used clear photographic portraits. But in the early 1990s, researchers started focusing on finding faces in images that also contained other objects. This led to the principal component analysis (PCA) method, also known as Eigenface, developed by Matthew Turk and Alex Pentland. This method reduced the amount of data a computer had to process to detect a face. Later, in 1997, the Eigenface method was improved with linear discriminant analysis (LDA) to create Fisherfaces, which became very popular.
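Here is a minimal sketch of the Eigenface idea in plain Python, assuming each picture has already been turned into a flat list of brightness values. It subtracts the average face, finds the main directions in which faces differ (the "eigenfaces"), and describes every face by a few numbers along those directions. To stay short and avoid extra libraries, it finds those directions with power iteration, a simple stand-in for the full matrix math real systems use. It assumes the training faces differ in at least k independent ways (so k must be smaller than the number of training faces), and all function names are hypothetical.

```python
import math
import random

Vector = list[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def normalize(v: Vector) -> Vector:
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def eigenfaces(images: list[Vector], k: int, steps: int = 100) -> tuple[Vector, list[Vector]]:
    """Return the average face and the top-k eigenfaces of the training images."""
    n = len(images)
    mean = [sum(pixels) / n for pixels in zip(*images)]
    centred = [[p - m for p, m in zip(img, mean)] for img in images]
    components: list[Vector] = []
    rng = random.Random(0)  # fixed seed so the result is repeatable
    for _ in range(k):
        v = normalize([rng.random() for _ in mean])
        for _ in range(steps):
            # Multiply v by the covariance matrix without building it:
            # w = sum over centred faces x of x * (x . v)
            w = [0.0] * len(v)
            for x in centred:
                s = dot(x, v)
                w = [wi + s * xi for wi, xi in zip(w, x)]
            # Remove directions already found so we converge to a new eigenface.
            for c in components:
                overlap = dot(w, c)
                w = [wi - overlap * ci for wi, ci in zip(w, c)]
            v = normalize(w)
        components.append(v)
    return mean, components


def project(image: Vector, mean: Vector, components: list[Vector]) -> Vector:
    """Describe a face by how much of each eigenface it contains."""
    centred = [p - m for p, m in zip(image, mean)]
    return [dot(centred, c) for c in components]


def recognise(image: Vector, gallery: dict[str, Vector],
              mean: Vector, components: list[Vector]) -> str:
    """Return the gallery name whose eigenface weights are nearest to the image's."""
    weights = project(image, mean, components)
    return min(gallery, key=lambda name: math.dist(
        weights, project(gallery[name], mean, components)))


# Tiny 4-pixel "faces" with made-up brightness values, to keep it readable.
gallery = {
    "alice": [10, 200, 10, 200],
    "bob": [200, 10, 200, 10],
    "carol": [10, 10, 200, 200],
}
mean, faces = eigenfaces(list(gallery.values()), k=2)
print(recognise([20, 190, 15, 205], gallery, mean, faces))  # alice
```

Describing each face by a couple of weights instead of every pixel is what makes the comparison cheaper; with real photos the pictures have thousands of pixels but often only a few dozen eigenfaces are kept.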

In the late 1990s, the "Bochum system" became very successful. Developed by Christoph von der Malsburg and his team at the University of Bochum, this system used special filters (called Gabor filters) to record face features and map out the face's structure. By 1997, it was better than most other systems. It could even recognize faces with mustaches, beards, different hairstyles, and glasses. This system was sold commercially as ZN-Face for places like airports.

In 2001, real-time face detection in videos became possible with the Viola–Jones object detection framework. Paul Viola and Michael Jones combined simple patterns of light and dark rectangles, called Haar-like features, with a machine learning method called AdaBoost. The result was the first system that could find front-facing faces in real time. By 2015, this algorithm was used in small devices like handheld phones and embedded systems. This helped facial recognition become useful in many new ways, including in user interfaces and video calls.
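Two ideas made Viola–Jones fast enough for real time: an "integral image" that lets a computer add up any rectangle of pixels with just four lookups, and Haar-like features that compare a light rectangle with a dark one. The sketch below shows both in plain Python. The function names and the tiny test patch are made up for this example, and the AdaBoost training step, which picks many such features and decides how much each one counts, is not shown.

```python
Image = list[list[int]]


def integral_image(img: Image) -> Image:
    """ii[y][x] holds the sum of every pixel in rows above y and columns left of x.

    An extra row and column of zeros keeps the box-sum formula simple."""
    height, width = len(img), len(img[0])
    ii = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y): only 4 lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rectangle_feature(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Bottom half minus top half of a box (a simple Haar-like feature).

    Across the eyes and cheeks, the eye band is usually darker than the cheeks
    below it, so this value tends to be large and positive there."""
    half = h // 2
    top = box_sum(ii, x, y, w, half)
    bottom = box_sum(ii, x, y + half, w, half)
    return bottom - top


# A made-up 4x4 patch: a dark band (eyes) above a bright band (cheeks).
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
print(two_rectangle_feature(ii, 0, 0, 4, 4))  # 1520
```

Once the integral image is built, every rectangle costs the same four lookups no matter how big it is, which is why a detector can afford to test many features at many positions in each video frame.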

Since Russia's invasion in 2022, Ukraine has been using facial recognition to identify dead Russian soldiers. IT volunteers working with the Ukrainian army have done thousands of searches and identified the families of hundreds of the dead soldiers. They use software from a US company called Clearview AI. This software is usually only for government agencies to help with law enforcement or national security. Clearview AI donated the software to Ukraine.

How Facial Recognition Works

Even though humans can easily recognize faces, it's a tough problem for computers. Facial recognition systems try to identify a 3D human face, which can look different depending on lighting and expressions, from a flat 2D image. To do this, they follow four main steps:

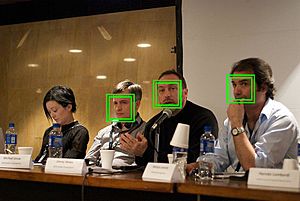

- Face Detection: First, the system finds the face in the image and separates it from the background.

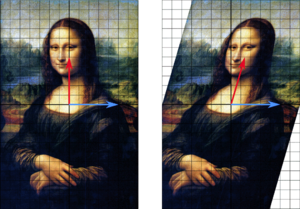

- Alignment: Next, the face image is adjusted. This helps with different face positions, image sizes, and lighting. The goal is to make sure facial features can be found accurately.

- Feature Extraction: Then, the system pinpoints and measures specific facial features like the eyes, nose, and mouth. These measurements create a unique "feature vector" for the face.

- Matching: Finally, this unique feature vector is compared to a database of known faces to find a match.
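Two of these steps, alignment and matching, can be sketched in Python. This is a minimal sketch under stated assumptions: it treats alignment as simply measuring how tilted the line between the eyes is, assumes the feature vectors already exist (modern systems often get them from a trained neural network), compares them with cosine similarity, and uses a made-up cutoff of 0.8 to decide whether a match is good enough. All names are hypothetical.

```python
import math
from typing import Optional


def eye_tilt_degrees(left_eye: tuple[float, float],
                     right_eye: tuple[float, float]) -> float:
    """Alignment: how tilted the line between the eyes is, in degrees.

    Rotating the picture by this angle in the opposite direction levels the eyes."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means the feature vectors point the same way; near 0.0 means little in common."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(probe: list[float], gallery: dict[str, list[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Matching: return the closest known face, or None if none is close enough."""
    best_name, best_score = None, -1.0
    for name, features in gallery.items():
        score = cosine_similarity(probe, features)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Made-up 3-number feature vectors (real ones are much longer).
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], gallery))  # alice
print(identify([0.0, 0.0, 1.0], gallery))  # None
```

Returning None when the best score is below the cutoff matters: without it, the system would always "find" someone, even for a stranger who is not in the database.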

Different Ways to Recognize Faces

Some facial recognition programs find faces by looking for special "landmarks" or features. For example, a program might look at the position, size, or shape of the eyes, nose, cheekbones, and jaw. These features are then used to search for other images with similar features.

Other programs prepare a collection of face images and then shrink the face data, keeping only the useful parts for recognition. A new image is then compared with this prepared face data. One early successful system used a method called "template matching" on important facial features, creating a compact way to represent a face.
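Template matching can be sketched as sliding a small stored picture of a feature (the template) over a larger image and scoring how well each spot matches. This is a minimal sketch, assuming pictures are small grids of brightness numbers and using normalized cross-correlation as the score; that scoring choice, the tiny example grids, and the function names are assumptions, not details of the early system described above.

```python
import math

Grid = list[list[float]]


def ncc(patch: Grid, template: Grid) -> float:
    """Normalized cross-correlation of two same-sized grids: 1.0 is a perfect match."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a completely flat patch has no pattern to compare
    return sum(x * y for x, y in zip(da, db)) / denom


def find_template(image: Grid, template: Grid) -> tuple[int, int, float]:
    """Slide the template over every position; return (row, col, score) of the best."""
    th, tw = len(template), len(template[0])
    best = (0, 0, -2.0)  # lower than any possible NCC score
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best[2]:
                best = (r, c, score)
    return best


template = [[0, 9],
            [9, 0]]
image = [[0, 0, 0, 0],
         [0, 0, 0, 9],
         [0, 0, 9, 0],
         [0, 0, 0, 0]]
print(find_template(image, template))  # (1, 2, 1.0)
```

Because the score subtracts each grid's average and divides by how much it varies, a brighter or darker photo of the same feature still scores close to 1.0.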

Generally, recognition programs can be split into two main types:

- Geometric: This type looks at specific, unique features.

- Photometric: This type uses statistics to turn an image into numbers and compares these numbers to templates to reduce differences.

Modern facial recognition systems increasingly use machine learning techniques, like deep learning, to get better at recognizing faces.

Recognizing Faces from Far Away

When faces in pictures are very small, like from CCTV cameras, it's hard for facial recognition programs to work. To help with this, a technique called "face hallucination" is used. This technique makes low-resolution face images look clearer. It uses machine learning to fill in details, even if parts of the face are hidden by things like sunglasses. This helps the facial recognition system work better with images that aren't perfect.

3D Face Recognition

Three-dimensional face recognition uses special 3D sensors to capture information about the shape of a face. This information helps identify unique features on the face's surface, like the shape of the eye sockets, nose, and chin.

One big advantage of 3D face recognition is that it's not affected by changes in lighting, unlike other methods. It can also identify a face from different angles, even a side view. Having 3D data points makes face recognition much more accurate. Researchers are developing new ways to capture 3D face images, like using three cameras pointing at different angles to track a person's face in real-time.

Using Thermal Cameras

Another way to get face data is by using thermal cameras. These cameras detect heat, so they only see the shape of the head and ignore things like glasses, hats, or makeup. Unlike regular cameras, thermal cameras can capture face images even in the dark without needing a flash, which keeps the camera's position hidden. However, there aren't many databases of thermal face images yet.

In 2018, scientists at the U.S. Army Research Laboratory (ARL) created a way to match thermal camera images with regular camera images. This "cross-spectrum synthesis" method combines information from different parts of the face to create a single image. This technique greatly improved the accuracy of matching thermal images to visible ones.

Where Facial Recognition is Used

Checking Your ID

Facial recognition is increasingly used for checking IDs. Many companies now offer these services to banks and other online businesses. It's used as a way to prove who you are on many devices. For example, Android 4.0 phones added facial recognition to unlock devices using the front camera. Microsoft added face recognition login to its Xbox 360 and Windows 10 (which needs a special camera). In 2017, Apple's iPhone X introduced Face ID, which uses an infrared system to recognize your face.

Face ID

Apple's Face ID on the iPhone X replaced the Touch ID fingerprint system. Face ID has a special sensor with two parts: one that projects over 30,000 invisible infrared dots onto your face, and another that reads this pattern. This pattern is sent to a secure part of the phone's central processing unit (CPU) to confirm it's you.

Apple cannot access your facial pattern. The system won't work if your eyes are closed, to prevent someone from unlocking your phone while you're asleep. The technology learns as your appearance changes, so it works with hats, scarves, glasses, beards, and makeup. It also works in the dark because it has a "Flood Illuminator" that shines invisible infrared light on your face to read the 30,000 points.

Government Services

In some countries, facial recognition is being used for government services.

- India: Some officials have said facial recognition might be used with Aadhaar (a national ID system) to check identities for vaccines. However, human rights groups have raised concerns, saying that using an error-prone system without proper laws could prevent people from getting important services. Facial recognition is also being tested to verify pensioners' identities for digital life certificates. Critics argue this is happening without proper data protection laws.

- Police departments in various Indian states are already using facial recognition systems to identify criminals. There are concerns that these systems, which are not always accurate, could lead to false identifications and privacy issues.

Security Services

Facial recognition is widely used in security.

- Australia, New Zealand, Canada: Border control systems like SmartGate use facial recognition to compare a traveler's face with the photo on their e-passport.

- United Kingdom: Police have been testing live facial recognition at public events since 2015. In 2020, a court ruled that the way South Wales Police used the system in 2017 and 2018 violated human rights.

- United States: The U.S. Department of State has one of the world's largest face recognition systems, with millions of photos, often from driver's licenses. The FBI uses it as an investigation tool. Since 2018, U.S. Customs and Border Protection has used "biometric face scanners" at airports for international flights. The American Civil Liberties Union (ACLU) is against this program, worrying it could be used for widespread surveillance.

- China: The Skynet Project, started in 2006, has deployed millions of cameras, many with real-time facial recognition, across the country for surveillance. Police have used smart glasses with facial recognition to identify suspects. Facial recognition is also used in many public places like railway stations and tourist attractions. In 2019, a professor sued a safari park for using facial recognition on its customers, which was reportedly the first such case in China.

- Latin America: In the 2000 Mexican presidential election, facial recognition helped prevent voter fraud. Public transport buses in Colombia use it to identify wanted passengers. Airports in Panama use it to find individuals sought by police or Interpol. Brazil used facial recognition goggles at the 2014 FIFA World Cup and systems at the 2016 Summer Olympics.

- European Union: Police forces in at least 21 EU countries use or plan to use facial recognition.

- South Africa: In 2016, Johannesburg announced it was using smart CCTV cameras with facial recognition.

In Stores

Some retail companies have started using facial recognition systems.

- United States: The US firm 3VR (now Identiv) offered facial recognition to retailers as early as 2007, promising benefits like reducing customer wait times and helping employees greet customers by name. In 2020, Reuters reported that the pharmacy chain Rite Aid had used facial recognition systems in some stores. Concerns were raised that Rite Aid's systems were more often placed in areas with many people of color, leading to worries about racial profiling. Rite Aid said it stopped using the software. Other US retailers like The Home Depot, Menards, Walmart, and 7-Eleven have also tested or used this technology.

Other Uses

- At Super Bowl XXXV in 2001, police used facial recognition software to look for potential criminals.

- Photo management software uses it to identify people in pictures, making it easier to search photos by person or suggest sharing them.

- In 2018, the pop star Taylor Swift secretly used facial recognition at a concert. A camera hidden in a kiosk scanned concert-goers to look for known stalkers.

- In 2019, Manchester City football club planned to use facial recognition for faster entry into their stadium. Civil rights groups warned against this, saying it could lead to mass surveillance.

- During the COVID-19 pandemic, some American football stadiums and Disney's Magic Kingdom tested facial recognition for "touchless" entry.

Good and Bad Sides of Facial Recognition

Compared to Other Biometrics

In 2006, tests showed that new facial recognition programs were much more accurate than older ones. Some could even identify identical twins and were better than humans at recognizing faces.

One big plus of facial recognition is that it can identify many people at once without them even knowing. Systems in airports or public places can spot individuals in a crowd. However, compared to other biometric methods, facial recognition might not always be the most reliable. Things like lighting, facial expressions, and noise in the image can affect how well it works. Facial recognition systems sometimes make more mistakes (false positives or false negatives) than other biometric systems. This has raised questions about how effective it is for security in places like train stations and airports.
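The two kinds of mistakes mentioned here can be measured. This is a sketch in Python, assuming the system gives each pair of photos a similarity score and calls it a match when the score reaches a chosen threshold; the scores in the example are invented, and the function name is hypothetical.

```python
def error_rates(genuine: list[float], impostor: list[float],
                threshold: float) -> tuple[float, float]:
    """Return (false positive rate, false negative rate) at a given threshold.

    genuine:  similarity scores for pairs of photos of the SAME person.
    impostor: similarity scores for pairs of photos of DIFFERENT people.
    A pair counts as a match when its score is at least the threshold.
    """
    false_negatives = sum(1 for s in genuine if s < threshold)
    false_positives = sum(1 for s in impostor if s >= threshold)
    return false_positives / len(impostor), false_negatives / len(genuine)


# Invented scores, only for illustration.
genuine = [0.9, 0.85, 0.6, 0.95]
impostor = [0.2, 0.7, 0.4, 0.1]
print(error_rates(genuine, impostor, 0.5))  # (0.25, 0.0)
print(error_rates(genuine, impostor, 0.9))  # (0.0, 0.5)
```

Raising the threshold removes the false positive but rejects more real matches, which is why no single setting makes both kinds of mistake disappear. Bad lighting or unusual expressions push genuine scores down, making the trade-off worse.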

What Makes it Weak

- Viewing Angle: It's harder for systems to recognize faces from the side.

- Low Resolution: Blurry or low-quality images, common in surveillance, are difficult to recognize.

- Facial Expressions: A big smile can make the system less effective. Some countries, like Canada, require neutral expressions for passport photos.

- Gender Bias: Facial recognition systems have been criticized for assuming a binary gender (male or female). They are often accurate for cisgender people but can get confused or fail to identify transgender and non-binary people correctly. This can be harmful by ignoring or invalidating a person's gender identity or gender expression.

Not Always Effective

Some early systems had disappointing results. For example, a system in the London Borough of Newham reportedly never recognized a single criminal, even though criminals in its database lived there. An experiment in Tampa in 2002 also had poor results. A system at Boston's Logan Airport was shut down in 2003 after failing to make any matches for two years.

While companies might claim nearly 100% accuracy, these studies often use small groups of people. In real-world use, where there are many more faces, the accuracy can be lower. When facial recognition isn't perfectly accurate, it creates a list of possible matches. A human operator then has to pick the correct person from this list, and studies show they only pick the right one about half the time. This can lead to the wrong person being targeted.
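A little arithmetic shows why a bigger database makes mistakes more likely. The sketch assumes a made-up false-match rate of 0.1% (1 in 1,000) for each comparison and treats every comparison as independent, which real systems are not; the function names are hypothetical. It also shows how a list of possible matches could be built for a human operator to check.

```python
def chance_of_false_match(false_match_rate: float, gallery_size: int) -> float:
    """Chance that at least one wrong person scores as a match.

    Assumes every comparison is independent with the same false-match rate,
    which is a simplification."""
    return 1 - (1 - false_match_rate) ** gallery_size


def candidate_list(scores: dict[str, float], k: int) -> list[str]:
    """The k highest-scoring names, as a list a human operator would review."""
    return sorted(scores, key=lambda name: scores[name], reverse=True)[:k]


for size in (10, 1000, 100000):
    print(size, round(chance_of_false_match(0.001, size), 3))
# prints: 10 0.01, then 1000 0.632, then 100000 1.0

print(candidate_list({"A": 0.91, "B": 0.95, "C": 0.40}, k=2))  # ['B', 'A']
```

Under these assumptions, a rate that sounds tiny for one comparison almost guarantees at least one wrong match once a face is checked against 100,000 people.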

Bans on Facial Recognition

In May 2019, San Francisco, California, became the first major US city to ban facial recognition software for police and other local government agencies. This ban requires agencies to get approval and publicly share how they plan to use any new surveillance technology. In June 2019, Somerville, Massachusetts, became the first city on the East Coast to ban face surveillance software for government use. Oakland, California, also banned it in July 2019.

The American Civil Liberties Union (ACLU) has supported these bans and campaigns for more transparency in surveillance technology.

In January 2020, the European Union briefly suggested banning facial recognition in public spaces but then quickly dropped the idea.

During the George Floyd protests, Boston, Massachusetts, banned its city government from using facial recognition. As of June 2020, municipal use had been banned in several US cities, including:

- Berkeley, California

- Oakland, California

- Boston, Massachusetts

- Brookline, Massachusetts

- Cambridge, Massachusetts

- Northampton, Massachusetts

- Springfield, Massachusetts

- Somerville, Massachusetts

- Portland, Oregon

A group of human rights organizations called "Reclaim Your Face" launched in October 2020. They are calling for a ban on facial recognition and started a European Citizens' Initiative in February 2021, asking the European Commission to strictly control how biometric surveillance technologies are used.

Recognizing Emotions

In the past, people believed that facial expressions showed a person's true feelings. In the 1960s and 1970s, psychologists studied human emotions and how they are expressed. Since then, research on automatic emotion recognition has focused on facial expressions and speech, which are key ways humans show emotions. In the 1970s, the Facial Action Coding System (FACS) was created to categorize the physical expressions of emotions. Its creator, Paul Ekman, believes there are six universal emotions that can be seen in facial expressions.
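The step from coded muscle movements to an emotion label can be sketched as a simple lookup. The three action-unit (AU) combinations below are commonly cited examples for Ekman's basic emotions; the list is shortened, the function name is hypothetical, and the rule of preferring the largest matching pattern is an assumption for this sketch. A real system would first have to detect the action units in the image, which this sketch skips.

```python
from typing import Optional

# Commonly cited FACS action-unit combinations for three basic emotions.
# Simplified: real FACS coding also records how strong each AU is.
PROTOTYPES: dict[str, set[int]] = {
    "happiness": {6, 12},       # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},      # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": {1, 2, 5, 26},  # inner and outer brow raisers + upper lid raiser + jaw drop
}


def guess_emotion(active_units: set[int]) -> Optional[str]:
    """Return the emotion whose whole AU pattern appears, preferring larger patterns."""
    matches = [emotion for emotion, units in PROTOTYPES.items()
               if units <= active_units]
    if not matches:
        return None
    return max(matches, key=lambda emotion: len(PROTOTYPES[emotion]))


print(guess_emotion({6, 12}))          # happiness
print(guess_emotion({1, 2, 5, 26}))    # surprise
print(guess_emotion({4}))              # None
```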

By 2016, facial feature emotion recognition programs started moving out of university labs and into commercial products. For example, Facebook bought FacioMetrics, and Apple Inc. bought Emotient, both companies focused on facial emotion recognition. By the end of 2016, companies selling facial recognition systems started offering to include emotion recognition features.

Ways to Avoid Facial Recognition

In January 2013, Japanese researchers created "privacy visor" glasses that use invisible infrared light to make a face unrecognizable to facial recognition software. Newer versions use special materials and patterns to block or bounce back light, confusing the technology.

Other methods to protect against facial recognition include specific haircuts and makeup patterns that prevent the algorithms from detecting a face. This is sometimes called computer vision dazzle. Interestingly, the makeup styles worn by Juggalos can also help block facial recognition.

Facial masks worn to protect against viruses can also reduce the accuracy of facial recognition systems. A 2020 study found that systems had a much higher failure rate when trying to identify people wearing masks. For example, Apple Pay's facial recognition works through many things like heavy makeup or beards, but it often fails with masks.

Images for kids

- Flight boarding gate with "biometric face scanners" at Hartsfield–Jackson Atlanta International Airport.

See also

In Spanish: Sistema de reconocimiento facial para niños