Information entropy facts for kids

Information entropy is a big idea from information theory. It measures how much new information we get, on average, when we find out how an uncertain event turns out. Think of it this way: if you're very sure something will happen, you don't learn much when it does. But if you're unsure and something surprising happens, you learn a lot!

The more uncertain an event is, the more information it can give us. This idea was introduced in 1948 by the mathematician and engineer Claude Shannon.
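
For readers who want to see the formula, Shannon's entropy of an event X, whose possible outcomes x happen with probabilities p(x), is usually written as below. Using a base-2 logarithm gives the answer in bits. The fair coin is worked out as an example.

```latex
H(X) = -\sum_{x} p(x)\,\log_2 p(x)

% Worked example: a fair coin, p(\text{heads}) = p(\text{tails}) = \tfrac{1}{2}
H = -\left(\tfrac{1}{2}\log_2\tfrac{1}{2} + \tfrac{1}{2}\log_2\tfrac{1}{2}\right)
  = -\left(-\tfrac{1}{2} - \tfrac{1}{2}\right) = 1 \text{ bit}
```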

You can think of information like this:

- Information is what we learn.

- Entropy is how much uncertainty there is.

When we get information, it helps reduce our uncertainty.

Information entropy is used in many cool areas. For example, it helps computers compress files so they take up less space. It's also used in cryptography (making secret codes) and even in machine learning, where computers learn from data.
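
Here is a small Python sketch of the compression idea. It counts how often each letter appears in a message and turns those counts into an entropy in bits per letter. If letters were picked independently with those same frequencies, Shannon showed that no lossless code could use fewer bits per letter on average than this number. The function name `bits_per_symbol` is made up for this example, and a real compressor can do better on messages with patterns (like `abababab`) because it also looks at the order of the letters, which this sketch ignores.

```python
import math
from collections import Counter


def bits_per_symbol(message: str) -> float:
    """Entropy, in bits per letter, of the letter frequencies in `message`.

    Hypothetical helper: it only looks at how often each letter appears,
    not at the order the letters come in.
    """
    counts = Counter(message)
    total = len(message)
    # Sum of p * log2(1/p) over every distinct letter.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


for text in ["aaaaaaaa", "abababab", "abcdabcd"]:
    print(f"{text!r}: {bits_per_symbol(text):.2f} bits per letter")
# 'aaaaaaaa': 0.00 bits per letter
# 'abababab': 1.00 bits per letter
# 'abcdabcd': 2.00 bits per letter
```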


What is Information Gain?

Information gain measures how much a new piece of information helps us make a decision or predict something. It tells us how much our uncertainty goes down after we learn something new.
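
Information gain can be worked out as "entropy before" minus "entropy after." The sketch below assumes a fair six-sided die where every number that is still possible stays equally likely; when all n outcomes are equally likely, the entropy is log2(n) bits. The helper name `uniform_entropy` is hypothetical.

```python
import math


def uniform_entropy(num_outcomes: int) -> float:
    """Entropy in bits when every outcome is equally likely (hypothetical helper)."""
    return math.log2(num_outcomes)


before = uniform_entropy(6)  # a fair die could show 1, 2, 3, 4, 5 or 6
after = uniform_entropy(3)   # after being told "it's even": 2, 4 or 6 remain
gain = before - after        # how much the uncertainty went down
print(f"before: {before:.3f} bits, after: {after:.3f} bits, gain: {gain:.3f} bits")
# before: 2.585 bits, after: 1.585 bits, gain: 1.000 bits
```

Being told "it's even" cuts the possibilities from six to three. Halving the number of equally likely choices is worth exactly one bit.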

Let's use a coin flip as an example. If you flip a fair coin, there's a 50-50 chance of getting heads or tails. For an event with two outcomes, this is the highest possible entropy (or uncertainty), because you can't guess the outcome correctly more than half the time. When you see the result, all of that uncertainty disappears, so learning whether the coin landed heads or tails gives you one full bit of information.

But what if you had a special coin that always landed on heads? If someone told you it was heads, that wouldn't be new information. Your uncertainty was already zero, so there's no information gain. The entropy in this case is zero.
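
The coin examples above can be checked with a few lines of Python. The helper `coin_entropy` is a made-up name for this sketch; it adds up p × log2(1/p) for heads and tails and skips any side that can never happen. A third, lopsided coin (heads 90% of the time) is included only for comparison.

```python
import math


def coin_entropy(p_heads: float) -> float:
    """Entropy in bits of a coin that lands heads with probability p_heads."""
    entropy = 0.0
    for p in (p_heads, 1.0 - p_heads):
        if p > 0:  # a side that never happens adds nothing (0 * log 0 counts as 0)
            entropy += p * math.log2(1.0 / p)
    return entropy


print(coin_entropy(0.5))           # fair coin: prints 1.0
print(coin_entropy(1.0))           # always lands heads: prints 0.0
print(f"{coin_entropy(0.9):.3f}")  # heads 90% of the time: prints 0.469
```

The lopsided coin sits between the two: it is easier to guess than a fair coin, but not completely certain.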

Understanding Entropy with Examples

Imagine someone tells you something you already know, for example, "The sky is blue." You already knew that, so you learn almost nothing new. Being told something you already know isn't very helpful. This kind of information has very low entropy, because it doesn't reduce your uncertainty at all.

Now, imagine you are told something completely new and surprising. Maybe you learn about a new discovery in space, or a secret about an ancient civilization. This information would be very valuable to you because you didn't know it before. You would learn a lot! This kind of information has high entropy. It greatly reduces your uncertainty about that topic.
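
One common way to put a number on "surprise" is to say that an event with probability p gives log2(1/p) bits of information. This is often called self-information or surprisal. The sketch assumes we can give each message a probability, for example treating "The sky is blue" as something you were already certain of (probability 1). The function name `surprisal` is chosen just for this example.

```python
import math


def surprisal(probability: float) -> float:
    """Bits of information gained when an event with this probability happens."""
    return math.log2(1.0 / probability)


print(surprisal(1.0))       # something you were already sure of: prints 0.0
print(surprisal(0.5))       # one side of a fair coin: prints 1.0
print(surprisal(1 / 1024))  # a 1-in-1024 surprise: prints 10.0
```

The rarer the event, the more bits it is worth, which matches the idea that surprising news teaches you more.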

Images for kids


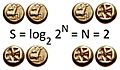

Two bits of entropy: When you flip two fair coins, there are four equally likely outcomes (HH, HT, TH, TT). This means there are two bits of entropy, because 2 to the power of 2 equals 4. Information entropy is the average amount of new information an event gives us, taking into account all the possible things that could happen and how likely each one is.
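
The two-coin example can be checked by listing the outcomes and averaging the surprise of each one. This sketch assumes both coins are fair, so each of the four outcomes has probability 1/4 and gives log2(4) = 2 bits.

```python
import math
from itertools import product

# Every outcome of flipping two fair coins: HH, HT, TH, TT.
outcomes = ["".join(flips) for flips in product("HT", repeat=2)]
p = 1 / len(outcomes)  # each outcome is equally likely

# Entropy is the average surprise: the sum of p * log2(1/p) over all outcomes.
entropy = sum(p * math.log2(1 / p) for _ in outcomes)

print(outcomes)  # prints ['HH', 'HT', 'TH', 'TT']
print(entropy)   # prints 2.0
```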

See also

In Spanish: Entropía (información) para niños
