Operant conditioning facts for kids

Operant conditioning is a type of learning. It's about how people or animals change their actions. This change happens because of what happens after they do something.

Imagine you do something, and then something good or bad happens. You learn from that. If something good happens, you're more likely to do it again. If something bad happens, you're less likely to do it.

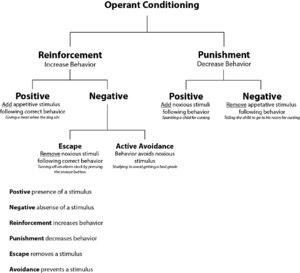

There are three main ideas in operant conditioning:

- Reinforcement: This is when a good thing happens after an action. It makes the action happen more often.

- Punishment: This is when a bad thing happens after an action. It makes the action happen less often.

- Extinction: This is when an action that used to be rewarded no longer gets any reward. Without the reward, the action will happen less often over time.

How Actions Change: Reinforcement and Punishment

In operant conditioning, we talk about "positive" and "negative." These words don't mean good or bad.

- Positive means something is added.

- Negative means something is taken away.

Let's look at the four ways actions can change:

Positive Reinforcement: Adding a Reward

This happens when a reward is added after an action. This makes the action happen more often.

- Example: A rat in a Skinner box presses a lever. If food (a reward) is given, the rat will press the lever more often.

Negative Reinforcement: Taking Away Something Unpleasant

This happens when something unpleasant is taken away after an action. This also makes the action happen more often.

- Example: In a Skinner box, there might be a loud noise. If the rat presses a lever and the noise stops, the rat will press the lever more often to make the noise go away.

Positive Punishment: Adding Something Unpleasant

This happens when something unpleasant is added after an action. This makes the action happen less often.

- Example: If a child misbehaves and is given extra chores (something unpleasant added), they might do that action less often.

Negative Punishment: Taking Away a Reward

This happens when something good is taken away after an action. This makes the action happen less often.

- Example: If a child misbehaves and their favorite toy is taken away (a reward removed), they might do that action less often.
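The four cases above follow from two simple questions: was something added or taken away, and did the action happen more or less often afterward? Here is a small Python sketch of that idea. The function name `classify` and its word labels are made up for this illustration. They are not standard psychology terms.

```python
def classify(stimulus_change, behavior_change):
    """Name the kind of consequence.

    stimulus_change: "added" or "removed" (hypothetical labels)
    behavior_change: "more" or "less" (hypothetical labels)
    """
    # "Positive" means something is added; "negative" means taken away.
    sign = {"added": "positive", "removed": "negative"}[stimulus_change]
    # Reinforcement makes the action happen more; punishment makes it happen less.
    kind = {"more": "reinforcement", "less": "punishment"}[behavior_change]
    return f"{sign} {kind}"


print(classify("added", "more"))    # food is given after a lever press
print(classify("removed", "more"))  # a loud noise stops after a lever press
print(classify("removed", "less"))  # a favorite toy is taken away
```

Notice that the function never asks whether the thing is "good" or "bad." It only asks what changed and what the action did afterward.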

The idea of operant conditioning was first explored by Edward Thorndike. Later, B. F. Skinner studied it in much more detail.

Operant conditioning is different from Pavlov's classical conditioning. Operant conditioning is about actions we choose to do. Classical conditioning is about training a reflex, like blinking or salivating.

Early Ideas: Thorndike's Law of Effect

Operant conditioning was first studied by Edward Thorndike (1874–1949). He called it instrumental learning. Thorndike watched cats trying to escape from special "puzzle boxes." At first, the cats took a long time to figure out how to get out. But the more they tried, the faster they escaped. They learned which actions worked.

Thorndike came up with his law of effect. This law says that actions followed by good results tend to be repeated. Actions that lead to bad results are less likely to be repeated. Simply put, some results make an action stronger, and some make it weaker.

Skinner's Detailed Studies

B. F. Skinner (1904–1990) took Thorndike's ideas further. He created the operant conditioning chamber, also known as a "Skinner box." This allowed him to carefully measure how often an animal performed an action, like pressing a lever.

Key Ideas in Operant Conditioning

There are several important principles in operant conditioning:

Learning in Different Situations

- Discrimination: This is when an action happens only in certain situations. For example, a dog might only sit when you say "sit" and not when you say "kit."

- Generalization: This is when an action happens in similar situations. A child might learn to say "doggy" for their dog, and then say "doggy" for other dogs they see.

- Context: Learning often depends on the situation. An action might only happen when a specific signal or cue is present.

When Reinforcement Stops: Extinction

- Extinction: If an action that was once rewarded stops getting any reinforcement, it will happen less often. For example, if a dog used to get a treat for rolling over, but now never does, it might stop rolling over.

How Often Rewards Are Given: Schedules of Reinforcement

The timing of rewards is very important. How often and when an action is rewarded affects how strong the learning is.

- Fixed Interval Schedule: A reward is given for the first action done after a set amount of time has passed. For example, getting paid every two weeks.

- Variable Interval Schedule: A reward is given for the first action done after an amount of time that keeps changing. The waits vary around an average, so you can't predict the next reward. For example, checking your email for new messages.

- Fixed Ratio Schedule: A reward is given after a specific number of actions. For example, getting a free coffee after buying ten.

- Variable Ratio Schedule: A reward is given after an average number of actions, but the exact number changes. This is like playing a slot machine, where you don't know when you'll win.
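The two ratio schedules can be tried out with a short Python sketch. This is only an illustration with some assumptions. The function names are made up, and the variable schedule picks each gap at random between 1 and twice the average minus 1, which is just one simple way to get the right average. The interval schedules are not shown here because they depend on time instead of counting.

```python
import random


def fixed_ratio(n_actions, ratio):
    """Return which action numbers earn a reward: every `ratio`-th action."""
    return [i for i in range(1, n_actions + 1) if i % ratio == 0]


def variable_ratio(n_actions, average_ratio, seed=0):
    """Return which action numbers earn a reward when the gap between
    rewards changes randomly but averages `average_ratio` actions."""
    rng = random.Random(seed)  # the seed makes the run repeatable
    rewarded = []
    next_reward = rng.randint(1, 2 * average_ratio - 1)
    for i in range(1, n_actions + 1):
        if i == next_reward:
            rewarded.append(i)
            # Pick a new random gap until the next reward.
            next_reward = i + rng.randint(1, 2 * average_ratio - 1)
    return rewarded


print(fixed_ratio(30, 10))     # [10, 20, 30]
print(variable_ratio(30, 10))  # reward positions depend on the random gaps
```

With the fixed schedule, you always know when the next reward is coming. With the variable schedule, the next reward could come after any action, much like a slot machine.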

See also

In Spanish: Condicionamiento operante para niños