Optimal control facts for kids

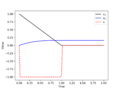

Optimal control theory is a part of mathematics that helps us find the very best way to do something. Imagine you want to achieve a goal, like getting somewhere as fast as possible or using the least amount of fuel. Optimal control theory gives us the tools to figure out the perfect plan to reach that goal.

It works with systems that change over time, like a car moving or a robot performing a task. These systems can be described using functions, which are like mathematical rules. The main idea is to find the right actions, called "controls," that make a chosen quantity (often called the cost function or objective function) as small as possible (minimize) or as large as possible (maximize) over a period of time.

For example, if you want to save fuel, you'd want to minimize the function that represents fuel consumption. If you want to get somewhere quickly, you'd want to minimize the function that represents travel time.
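To see what "minimizing a function" looks like in practice, here is a small Python sketch. The fuel formula and its numbers are made up for illustration (they are assumptions, not real car data), and the name `fuel_used` is hypothetical. The program tries several steady speeds and keeps the one that burns the least fuel.

```python
# Hypothetical fuel model (an assumption for illustration, not real car data):
# litres per km = IDLE_COST / speed + DRAG_COST * speed**2
IDLE_COST = 2.0        # made-up: litres per hour burned just keeping the engine running
DRAG_COST = 0.000005   # made-up: extra fuel needed to push through the air

def fuel_used(speed_kmh, distance_km=100.0):
    """Litres of fuel for the whole trip at one steady speed."""
    litres_per_km = IDLE_COST / speed_kmh + DRAG_COST * speed_kmh ** 2
    return distance_km * litres_per_km

# The "controls" we are allowed to try: steady speeds from 30 to 120 km/h.
candidate_speeds = range(30, 121, 10)

# Pick the speed that makes the fuel function as small as possible.
best_speed = min(candidate_speeds, key=fuel_used)

print(f"Best speed: {best_speed} km/h, fuel used: {fuel_used(best_speed):.2f} litres")
```

In this toy model, driving very slowly wastes fuel because the trip takes a long time, and driving very fast wastes fuel fighting the air, so the best speed is somewhere in between. Real optimal control problems let the speed change from moment to moment instead of staying steady.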

What Are We Trying to Figure Out?

When we look at an optimal control problem, we often ask a few important questions:

- Can we actually find a solution that works?

- What rules or conditions must our solution follow to be the best?

- If our solution follows these rules, does that guarantee it's truly the best one?

Sometimes, there are also limits on the system. For instance, a car can only go so fast, or a robot can only lift a certain weight. These are called "state restrictions" (mathematicians usually say "state constraints"), and the system must always stay within these limits.

Who Started Optimal Control Theory?

Most of the main ideas for optimal control theory came from two brilliant mathematicians:

- Lev Pontryagin from the Soviet Union

- Richard Bellman from the United States

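In the 1950s, Pontryagin developed a rule called the maximum principle, and Bellman developed a method called dynamic programming. This early work helped shape the field into what it is today.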

A Simple Example

Let's think about a driver who wants to go from point A to point B. Their goal is to get there in the shortest time possible. This is a perfect example of an optimal control problem.

- There might be several different roads or routes the driver can take.

- Each road has a speed limit, which is a "state restriction" on how fast the car can go.

- The driver needs to choose the best route and the best speed at each moment to minimize the total travel time.

Optimal control theory helps figure out the exact path and speed changes the driver should make to reach point B in the least time. It's used in many real-world situations, from guiding rockets to controlling robots and even managing power grids.
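The driver's problem can be sketched in a few lines of Python. This is a simplified version under stated assumptions: the route names, lengths, and speed limits are all invented, and the driver is assumed to travel exactly at the speed limit on every stretch of road (ignoring things like speeding up, slowing down, and traffic). With those assumptions, going slower than the limit would only add time, so the only choice left is which route to take.

```python
# Each route is a list of (length_km, speed_limit_kmh) road segments.
# All names and numbers here are made up for illustration.
routes = {
    "highway":   [(30, 100), (10, 50)],
    "town road": [(28, 40)],
    "back road": [(18, 60), (9, 30)],
}

def travel_time_hours(segments):
    """Time for a route if the driver goes exactly at each speed limit."""
    return sum(length / limit for length, limit in segments)

# Choose the route whose total travel time is smallest.
best_route = min(routes, key=lambda name: travel_time_hours(routes[name]))
best_time = travel_time_hours(routes[best_route])

for name, segments in routes.items():
    print(f"{name}: {travel_time_hours(segments) * 60:.0f} minutes")
print(f"Fastest choice: {best_route}")
```

Notice that the highway is the longest route in kilometres but still the quickest, because its speed limits are higher. The shortest road is not always the fastest one.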
