Data compression facts for kids

Imagine you have a really big file on your computer, like a long video or a huge picture. Data compression is like packing that file into a smaller box so it takes up less space. This makes it easier to store the file or send it over the internet. It's also called source coding or bit-rate reduction because it reduces the number of "bits" (tiny pieces of information) needed to represent the data.

There are two main ways to compress data:

- Lossless compression: This is like packing a suitcase perfectly. Nothing is left out, and you can unpack everything exactly as it was. No information is lost.

- Lossy compression: This is like packing a suitcase but deciding you don't need every single item. You throw out some less important things to make the suitcase much smaller. Some information is lost, but usually, it's information you won't really miss.

A device or program that compresses data is called an encoder. The one that unpacks or decompresses it is called a decoder.

When you compress data, there's often a trade-off. You can make the file very small, but it might take a lot of computer power and time to compress or decompress it. Or, you can compress it quickly, but the file might not be as small.

Lossless Compression

Lossless compression works by finding and removing repeated information in data. Think of it like this: if you have a picture with a big blue sky, instead of saving "blue pixel, blue pixel, blue pixel" a thousand times, the computer can just say "1000 blue pixels." This saves a lot of space!
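The "1000 blue pixels" trick is known as run-length encoding. Below is a minimal Python sketch of the idea. The function names are made up for this example, and real image formats combine this idea with other methods.

```python
def rle_encode(data):
    """Run-length encoding: collapse runs of the same item into (count, item) pairs."""
    runs = []
    for item in data:
        if runs and runs[-1][1] == item:
            # Same as the previous item: make the current run one longer.
            runs[-1][0] += 1
        else:
            # A different item starts a new run of length 1.
            runs.append([1, item])
    return [(count, item) for count, item in runs]


def rle_decode(runs):
    """Undo run-length encoding by repeating each item `count` times."""
    out = []
    for count, item in runs:
        out.extend([item] * count)
    return out


row = ["blue"] * 1000 + ["white"] * 3 + ["blue"] * 5
packed = rle_encode(row)
print(packed)  # [(1000, 'blue'), (3, 'white'), (5, 'blue')]
assert rle_decode(packed) == row
```

A row of 1,008 pixels shrinks to just three pairs. Data with few repeats, however, would not get smaller this way.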

One of the most famous types of lossless compression is called the Lempel–Ziv (LZ) method.

- Lempel–Ziv–Welch (LZW) is a popular version of LZ. It's used in GIF images and programs like PKZIP.

- DEFLATE is another LZ method that's good at decompressing quickly and making files small. It's used in PNG images.

These methods often create a kind of "dictionary" of repeated patterns in the data. Instead of writing the pattern, they just write a short code from the dictionary.
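Here is a minimal Python sketch of the LZW dictionary idea. It makes two simplifying assumptions: the text only uses characters with codes 0 to 255, and the output is a plain list of numbers rather than the packed bits a real GIF file stores. The decompressor never receives the dictionary. It rebuilds the same dictionary step by step from the codes.

```python
def lzw_compress(text):
    """LZW: replace repeated patterns with numbers from a dictionary built on the fly."""
    # Start with one entry for every single character (codes 0-255).
    dictionary = {chr(i): i for i in range(256)}
    next_code = 256
    current = ""
    codes = []
    for ch in text:
        candidate = current + ch
        if candidate in dictionary:
            # Keep growing the pattern while it is already known.
            current = candidate
        else:
            # Output the code for the longest known pattern...
            codes.append(dictionary[current])
            # ...and remember the new, longer pattern for next time.
            dictionary[candidate] = next_code
            next_code += 1
            current = ch
    if current:
        codes.append(dictionary[current])
    return codes


def lzw_decompress(codes):
    """Rebuild the text, growing the same dictionary the compressor built."""
    if not codes:
        return ""
    dictionary = {i: chr(i) for i in range(256)}
    next_code = 256
    previous = dictionary[codes[0]]
    pieces = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        else:
            # Special case: the code was created in the very step that uses it.
            entry = previous + previous[0]
        pieces.append(entry)
        dictionary[next_code] = previous + entry[0]
        next_code += 1
        previous = entry
    return "".join(pieces)


message = "TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(message)
print(len(message), "characters ->", len(codes), "codes")
assert lzw_decompress(codes) == message
```

In this example, 24 characters become 16 codes, because codes above 255 stand for whole repeated chunks such as "TO" and "BE".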

More advanced lossless methods use probabilistic models. This means they try to guess what data comes next based on what's already there.

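- Arithmetic coding is a more modern technique that turns these guesses into bits: symbols the model expects cost fewer bits than surprising ones. It can compress better than the older, better-known Huffman coding. It's used in some video standards like H.264/MPEG-4 AVC.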
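Arithmetic coding represents a whole message as one number between 0 and 1. Each symbol narrows down the range of possible numbers, and likely symbols narrow it only a little. The sketch below makes several simplifying assumptions. It uses exact fractions instead of the fixed-size integers real coders use. It uses a fixed, made-up probability table whose values add up to 1. It also assumes the decoder is told the message length separately.

```python
from fractions import Fraction


def build_ranges(probabilities):
    """Give each symbol its own slice of [0, 1), sized by its probability."""
    ranges = {}
    low = Fraction(0)
    for symbol, p in probabilities.items():
        ranges[symbol] = (low, low + p)
        low += p
    return ranges


def arithmetic_encode(message, probabilities):
    """Shrink [0, 1) once per symbol; any number in the final range identifies the message."""
    ranges = build_ranges(probabilities)
    low, high = Fraction(0), Fraction(1)
    for symbol in message:
        width = high - low
        sym_low, sym_high = ranges[symbol]
        # Zoom in on the part of the current range that belongs to this symbol.
        low, high = low + width * sym_low, low + width * sym_high
    return low, high


def arithmetic_decode(value, length, probabilities):
    """Recover `length` symbols by asking which slice `value` falls into, again and again."""
    ranges = build_ranges(probabilities)
    low, high = Fraction(0), Fraction(1)
    out = []
    for _ in range(length):
        width = high - low
        for symbol, (sym_low, sym_high) in ranges.items():
            if low + width * sym_low <= value < low + width * sym_high:
                out.append(symbol)
                low, high = low + width * sym_low, low + width * sym_high
                break
    return "".join(out)


probs = {"a": Fraction(3, 4), "b": Fraction(1, 4)}
low, high = arithmetic_encode("aaab", probs)
print(low, high)                            # 81/256 27/64
print(arithmetic_decode(low, 4, probs))     # aaab
```

The final range is about a tenth as wide as where it started. A real coder would write out the shortest binary number inside it, which here takes only a few bits for four symbols.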

When you use software to compress files (like zipping them), you can often choose a "dictionary size." A bigger dictionary can compress files more, especially if they have lots of repeating patterns, but it needs more computer memory.
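Python's standard `zlib` module implements DEFLATE. Its `wbits` parameter sets how far back the compressor may look for repeats: 2**wbits bytes, at most 32 KB. This "window" plays the same role as the dictionary size described above. The sketch compares a tiny window with the largest one on made-up data that repeats itself 2,000 bytes later. The exact byte counts may vary between zlib versions. Archivers that offer bigger dictionary sizes follow the same principle, though their methods differ.

```python
import random
import zlib


def deflate_size(data, window_bits):
    """Compress with DEFLATE using a window of 2**window_bits bytes; return the size."""
    compressor = zlib.compressobj(level=9, method=zlib.DEFLATED, wbits=window_bits)
    return len(compressor.compress(data) + compressor.flush())


# 2,000 random bytes, repeated once: the copy starts 2,000 bytes after the original.
rng = random.Random(0)
block = bytes(rng.getrandbits(8) for _ in range(2000))
data = block + block

small_window = deflate_size(data, 9)    # 512-byte window: the repeat is too far back
large_window = deflate_size(data, 15)   # 32 KB window: the repeat can be reused
print(len(data), small_window, large_window)
```

With the small window, the compressor cannot "see" the first copy, so the output is about as big as the input. The larger window costs more memory but roughly halves the size here.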

Lossy Compression

Lossy compression became very popular in the early 1990s, especially for digital images. With lossy compression, some information is removed because it's considered "unnecessary" or "less important." This makes the file much smaller, but you can't get the exact original back.

The trick with lossy compression is to remove information that people won't easily notice is gone.

- For images, our eyes are more sensitive to changes in brightness than in color. So, JPEG image compression works by slightly rounding off less important color details.

- For sound, our ears can't hear all sounds equally well. MP3 audio compression uses psychoacoustics to remove sounds that are too quiet or are hidden by louder sounds.

Most lossy compression uses a math trick called the discrete cosine transform (DCT). It was invented in the 1970s. DCT is used in many popular formats, including JPEG images, MP3 and AAC audio (through a modified version called MDCT), and MPEG video.
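To see why the DCT helps, here is a small Python sketch. It applies a 1-D DCT to 8 smooth brightness values (JPEG works on 8x8 blocks), rounds the result, and turns it back into brightness values. The pixel values and the single step size of 10 are made up for this example. Real JPEG uses a 2-D transform and a table of different step sizes.

```python
import math


def dct(x):
    """Orthonormal DCT-II: turn N samples into N 'how much of each wave' numbers."""
    n = len(x)
    out = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(scale * sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n)))
    return out


def idct(c):
    """Inverse of dct() above (an orthonormal DCT-III)."""
    n = len(c)
    out = []
    for i in range(n):
        total = 0.0
        for k in range(n):
            scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
            total += scale * c[k] * math.cos(math.pi * (i + 0.5) * k / n)
        out.append(total)
    return out


# A smooth row of 8 brightness values, like part of a sky fading from dark to light.
row = [50, 52, 55, 59, 64, 70, 77, 85]
coeffs = dct(row)

# The lossy "rounding off" step: divide by a step size and round.
# Small coefficients become 0, and runs of zeros are very cheap to store.
step = 10
quantized = [round(c / step) for c in coeffs]
restored = idct([q * step for q in quantized])

print(quantized)
print([round(v) for v in restored])
```

For a smooth row like this, most of the rounded numbers come out as 0. The rebuilt row still stays within a few brightness levels of the original.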

Lossy compression is used everywhere:

- Digital cameras use it to store more photos.

- DVDs, Blu-ray discs, and streaming video services use it to fit movies.

- Internet telephony (like voice calls over the internet) uses it for speech.

One downside of lossy compression is generation loss. If you compress a file, then decompress it, then compress it again, you lose more information each time. It's like making a copy of a copy – the quality gets worse.

How Compression Works (The Basics)

The idea behind compression comes from information theory, a field started by Claude Shannon in the 1940s. It's all about how much information is in something and how to represent it efficiently.
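Shannon's key measure is entropy, written H = -Σ p·log2(p). It gives the average number of bits per symbol that a perfect code would need. Here is a small Python sketch that computes it from letter counts. It treats each letter as picked independently, so it ignores patterns like "abab" repeating, which methods such as LZ can exploit.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(message):
    """Shannon entropy of the letter frequencies in `message`, in bits per letter."""
    counts = Counter(message)
    total = len(message)
    # p * log2(1/p) is the same as -p * log2(p), summed over every distinct letter.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


for text in ["aaaaaaaa", "abababab", "abcdabcd", "hello world"]:
    print(repr(text), round(entropy_bits_per_symbol(text), 2))
```

A message made of one repeated letter carries no surprise at all (0 bits). Two equally common letters need 1 bit each, and four need 2 bits each.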

Machine Learning and Compression

Computers can learn to compress data better! There's a strong connection between machine learning (where computers learn from data) and compression.

- A computer program that can predict what comes next in a sequence of data can also be very good at compressing it.

- Some advanced AI models, like large language models (LLMs), are surprisingly good at compressing data, even beating traditional methods for some types of images and audio. For example, DeepMind's Chinchilla 70B model compressed small image patches and short audio clips better than PNG and FLAC, formats designed specifically for pictures and sound.
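The link between predicting and compressing can be measured. If a model gives the next symbol probability p, an ideal coder such as arithmetic coding spends about -log2(p) bits on it. The sketch below uses a tiny stand-in for a big AI model. This hypothetical counting predictor guesses each character from the one before it, using add-one counts over 256 possible characters. The sketch only adds up the ideal bit cost; it does not produce a compressed file.

```python
import math
import random
from collections import defaultdict


def code_length_bits(text, alphabet_size=256):
    """Total ideal bits for `text` when a simple learning predictor drives the coder."""
    counts = defaultdict(lambda: defaultdict(int))   # counts[previous][next]
    totals = defaultdict(int)                        # totals[previous]
    previous = ""
    bits = 0.0
    for ch in text:
        # Probability the model gave to the character that actually came next.
        p = (counts[previous][ch] + 1) / (totals[previous] + alphabet_size)
        bits += -math.log2(p)
        # Learn from what just happened, so later guesses improve.
        counts[previous][ch] += 1
        totals[previous] += 1
        previous = ch
    return bits


rng = random.Random(1)
predictable = "ab" * 200
varied = "".join(rng.choice("abcdefghijklmnopqrstuvwxyz") for _ in range(400))

print("plain 8-bit storage:", 8 * 400, "bits")
print("predictable text:   ", round(code_length_bits(predictable)), "bits")
print("random letters:     ", round(code_length_bits(varied)), "bits")
```

The model quickly learns that "b" follows "a", so the predictable text costs far fewer bits. The random letters stay expensive because there is nothing to learn.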

Unsupervised machine learning methods, like k-means clustering, can also be used. This technique groups similar pieces of data together and then represents the whole group with just one "average" point. This is useful for making large datasets smaller, especially in image and signal processing.
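Here is a minimal 1-D k-means sketch in Python, applied to made-up pixel brightness values. Each pixel is then stored as the number of its group instead of its full value. The starting guesses and the fixed number of rounds are simple choices made for this example. Applying the same idea to groups of numbers is called vector quantization.

```python
def kmeans_1d(values, k, iterations=20):
    """Group numbers into k clusters and return the cluster centres ("average" points)."""
    # Start the centres spread out across the sorted values.
    ordered = sorted(values)
    centres = [ordered[(len(ordered) - 1) * j // max(k - 1, 1)] for j in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in values:
            # Assignment step: each value joins its nearest centre.
            nearest = min(range(k), key=lambda j: abs(v - centres[j]))
            groups[nearest].append(v)
        # Update step: each centre moves to the average of its group.
        centres = [sum(g) / len(g) if g else centres[j] for j, g in enumerate(groups)]
    return centres


def quantize(values, centres):
    """Replace every value by the index of its nearest centre."""
    return [min(range(len(centres)), key=lambda j: abs(v - centres[j])) for v in values]


pixels = [12, 15, 10, 14, 200, 205, 198, 210, 13, 202]
centres = kmeans_1d(pixels, k=2)
labels = quantize(pixels, centres)
print(centres)   # two "average" brightness levels
print(labels)    # each pixel now needs 1 bit instead of 8
```

With two groups, every pixel fits in a single bit, plus one small table holding the two average values.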

Data Differencing

Think of data compression as a special way of finding differences. If you have two versions of a file, data differencing finds only the changes between them. This "difference" file is much smaller than saving both full files. Compression is like finding the difference between a file and "nothing" – the compressed file is just the "difference" from an empty space.
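Python's standard `difflib` module can find the parts two texts share. Its `SequenceMatcher(...).get_opcodes()` method lists which pieces are equal, replaced, inserted, or deleted. The helpers `make_delta` and `apply_delta` below are hypothetical names for this sketch. They store the new version as "copy this piece of the old file" and "add this new text" steps. Real delta tools use the same idea in a compact binary form.

```python
from difflib import SequenceMatcher


def make_delta(old, new):
    """Describe `new` as pieces copied from `old` plus any brand-new text."""
    delta = []
    for tag, i1, i2, j1, j2 in SequenceMatcher(None, old, new).get_opcodes():
        if tag == "equal":
            delta.append(("copy", i1, i2))        # reuse old[i1:i2]
        elif tag in ("replace", "insert"):
            delta.append(("add", new[j1:j2]))     # text that is not in `old`
        # "delete": nothing to store, the old text is simply skipped
    return delta


def apply_delta(old, delta):
    """Rebuild the new version from the old one and the delta."""
    parts = []
    for op in delta:
        if op[0] == "copy":
            parts.append(old[op[1]:op[2]])
        else:
            parts.append(op[1])
    return "".join(parts)


old = "The quick brown fox jumps over the lazy dog."
new = "The quick red fox jumps over the lazy dog!"
delta = make_delta(old, new)
print(delta)
assert apply_delta(old, delta) == new

# Differencing against "nothing": with an empty old file, the delta is the whole new file.
print(make_delta("", new))
```

The last line shows the point made above. Compared with an empty file, the "difference" is simply all of the data, and a compressor's job is to make that difference as small as possible.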

Uses of Data Compression

Image Compression

Image compression makes picture files smaller.

- Early methods like Shannon–Fano coding (1940s) and Huffman coding (published in 1952) were important for lossless compression.

- The discrete cosine transform (DCT), developed in the 1970s, became the basis for JPEG. JPEG was released in 1992 and quickly became the most common image format because it made image files much smaller with only a small loss in quality. This helped digital cameras and photos become so popular.

- Lempel–Ziv–Welch (LZW) is a lossless method from 1984, used in GIF images (1987).

- DEFLATE (1996) is used in PNG images, which are also lossless.

- JPEG 2000 (2000) uses a different math technique called discrete wavelet transform (DWT) and is used for things like digital cinema.
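Huffman coding gives common symbols short bit patterns and rare symbols long ones. Here is a minimal Python sketch using the standard `heapq` module. It repeatedly joins the two least common groups, putting a 0 in front of one group's codes and a 1 in front of the other's. The function names are made up for this example.

```python
import heapq
from collections import Counter


def huffman_code(text):
    """Build a Huffman code: frequent symbols get short bit strings, rare ones longer."""
    counts = Counter(text)
    if not counts:
        return {}
    if len(counts) == 1:
        # Only one distinct symbol: give it a 1-bit code.
        return {symbol: "0" for symbol in counts}
    # Heap entries: (total count, tie-breaker, {symbol: code so far}).
    heap = [(n, i, {symbol: ""}) for i, (symbol, n) in enumerate(counts.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        # Merge the two least common groups; one side gets a 0 in front, the other a 1.
        n1, _, left = heapq.heappop(heap)
        n2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (n1 + n2, tie, merged))
        tie += 1
    return heap[0][2]


def encode(text, code):
    return "".join(code[ch] for ch in text)


def decode(bits, code):
    """Read bits until they match a code word; no code word is the start of another."""
    reverse = {c: s for s, c in code.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in reverse:
            out.append(reverse[current])
            current = ""
    return "".join(out)


message = "aaaabbc"
code = huffman_code(message)
bits = encode(message, code)
print(code, bits, len(bits))
assert decode(bits, code) == message
```

The common letter "a" gets a 1-bit code, so the 7-letter message needs 10 bits instead of the 14 bits a fixed 2-bit code would use.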

Audio Compression

Audio data compression makes sound files smaller, so they take up less space and can be sent faster. Audio compression programs are called audio codecs.

- Both lossy and lossless methods reduce repeated information.

- Lossy audio compression is very common for music. Formats like Vorbis and MP3 use psychoacoustics to remove sounds that are hard for humans to hear. This makes files much, much smaller. For example, a CD can hold about an hour of uncompressed music, but 7 hours of music compressed as MP3 at a medium quality.

- Lossless audio compression creates a perfect copy of the original sound. The files are still smaller than uncompressed ones (about half the size), but not as small as lossy files. These are good for archiving or professional audio work. Examples include FLAC and Apple Lossless.
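The CD numbers above come from simple arithmetic. Standard CD audio stores 44,100 samples per second, 16 bits per sample, in 2 channels. The 192 kbit/s MP3 rate below is an assumed "medium" setting chosen for illustration; it is not a figure from the text.

```python
# CD audio: 44,100 samples per second, 16 bits per sample, 2 channels (stereo).
SAMPLE_RATE = 44_100
BITS_PER_SAMPLE = 16
CHANNELS = 2

cd_bitrate = SAMPLE_RATE * BITS_PER_SAMPLE * CHANNELS   # bits per second
one_hour_bytes = cd_bitrate * 3600 // 8                 # bytes for 60 minutes

# Assumption for illustration: a "medium" MP3 bit rate of 192 kbit/s.
MP3_BITRATE = 192_000

print(cd_bitrate, "bits per second")
print(one_hour_bytes / 1_000_000, "MB for one hour")
print(cd_bitrate / MP3_BITRATE, "times more music fits as MP3")
```

At this assumed rate, MP3 is about 7 times smaller than CD audio, which matches the "one hour versus 7 hours" comparison.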

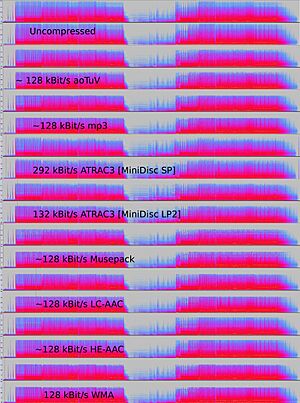

Lossy Audio Compression

Lossy audio compression is used in many places, from MP3 players to streaming media on the internet and digital radio. It achieves very high compression by getting rid of sounds that the human auditory system (ears and brain) can't easily perceive. This includes very high frequencies or quiet sounds that are played at the same time as much louder sounds.

If you want to edit audio, it's best to start from the original uncompressed file if possible. If you keep compressing and decompressing a lossy file, the quality gets worse each time. However, lossy formats like MP3 are very popular because they make files tiny (5 to 20% of the original size), and the quality is usually good enough for listening.

How Lossy Audio Works

Most lossy audio compression algorithms use math to change the sound signal into a "frequency domain." This helps them figure out which sounds are most important to our ears. They use psychoacoustic models, which are like maps of how our ears and brain hear. These models help decide which sounds can be removed or made less accurate without us noticing much.
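Real psychoacoustic models are complicated. They use masking curves that change with frequency, as well as the quietest levels our ears can detect. The toy Python sketch below only shows the basic decision: is this quiet sound hidden by a nearby loud one? The 100 Hz "nearby" band, the 20 dB "much louder" margin, and the list of tones are all made up for this example.

```python
def drop_masked_tones(tones, band_hz=100, margin_db=20):
    """Toy masking rule: drop a tone if another tone within `band_hz`
    is more than `margin_db` decibels louder. Both thresholds are invented."""
    kept = []
    for freq, level in tones:
        masked = any(
            abs(freq - other_freq) <= band_hz and other_level - level > margin_db
            for other_freq, other_level in tones
        )
        if not masked:
            kept.append((freq, level))
    return kept


# (frequency in Hz, loudness in dB) pairs, invented for this example.
tones = [(440, 80), (470, 50), (1000, 60), (1040, 55), (3000, 30)]
print(drop_masked_tones(tones))
```

The quiet 470 Hz tone sits right next to a much louder 440 Hz tone, so it is dropped. The two tones near 1,000 Hz are about equally loud, so both are kept.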

Some special types of lossy compressors, like those used for speech, use a "source-based" approach. They create a model of the human voice and then just send the changing settings for that model, rather than the full sound. This is why phone calls can use very little data.

Sometimes, there's a small delay (called latency) when compressing and decompressing audio. This happens because the computer needs to analyze a small chunk of sound before it can process it. For phone calls, low latency is important so there isn't a noticeable delay in conversation.
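Part of this delay is easy to calculate: the codec cannot start until a whole chunk (frame) of sound has arrived. The sketch uses two example frames. One is 1,152 samples at 44,100 samples per second, the frame size of MPEG-1 Layer III (MP3). The other is an assumed short speech frame of 160 samples at 8,000 samples per second. Real codecs often add extra look-ahead on top of this.

```python
def frame_delay_ms(frame_samples, sample_rate):
    """Time needed just to collect one frame of audio before it can be encoded."""
    return frame_samples * 1000 / sample_rate


print(round(frame_delay_ms(1152, 44_100), 1), "ms for an MP3-sized frame")
print(frame_delay_ms(160, 8_000), "ms for the assumed speech frame")
```

Shorter frames mean less waiting, which is one reason codecs for phone calls work on small pieces of sound.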

Speech Encoding

Speech encoding is a special type of audio compression just for human voices. Our voices don't use the full range of sounds that music does, so speech can be compressed very well.

- It often only encodes sounds that a single human voice can make.

- It throws away more data, keeping just enough to make the voice clear and understandable, not necessarily the full range of human hearing.

Early methods like the A-law and μ-law algorithms were used for speech.
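μ-law squeezes loud sounds and stretches quiet ones before rounding, so quiet speech keeps more detail. The sketch below uses the smooth μ-law formula with μ = 255 (the value used in North America and Japan) on samples between -1 and 1. The real G.711 telephone standard uses a step-by-step approximation of this curve, so the 8-bit codes here are illustrative only.

```python
import math

MU = 255


def mu_law_compress(x):
    """Map a sample in [-1, 1] so that quiet sounds get more of the output range."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def mu_law_expand(y):
    """Inverse of mu_law_compress."""
    return math.copysign(((1 + MU) ** abs(y) - 1) / MU, y)


def to_8_bits(y):
    """Round a value in [-1, 1] to one of 255 whole-number levels (-127..127)."""
    return round(y * 127)


for sample in [0.001, 0.01, 0.1, 1.0]:
    plain = to_8_bits(sample)
    code = to_8_bits(mu_law_compress(sample))
    print(sample, "plain code:", plain, "mu-law code:", code,
          "restored:", round(mu_law_expand(code / 127), 4))
```

With plain rounding, the very quiet sample 0.001 becomes 0 and disappears. After μ-law it still gets a nonzero code.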

History of Audio Compression

- Early research on audio compression happened at Bell Labs in the 1950s.

- Perceptual coding, which uses how humans hear, was first used for speech in the 1960s.

- The discrete cosine transform (DCT), developed in 1974, was key. A modified version of DCT (MDCT) is used in modern formats like MP3 and AAC.

- The world's first commercial audio compression system for radio stations, called Audicom, was developed in Argentina in the 1980s. The inventor, Oscar Bonello, made his work public, which helped the technology spread worldwide.

Video Compression

Uncompressed video files are huge! Video compression is essential for watching movies online, on DVDs, or on TV.

- Lossless video compression can make files 5 to 12 times smaller.

- Lossy video compression, like H.264, can make files 20 to 200 times smaller!
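To see why these factors matter, here is the arithmetic for one example format. The choices of 1920x1080 pixels, 24 bits per pixel, and 30 frames per second are assumptions for illustration. The compression factors are the ones given above.

```python
# Uncompressed 1080p video: 1920 x 1080 pixels, 24 bits per pixel, 30 frames/s.
WIDTH, HEIGHT = 1920, 1080
BITS_PER_PIXEL = 24
FPS = 30

raw_bps = WIDTH * HEIGHT * BITS_PER_PIXEL * FPS
print(raw_bps / 1e6, "Mbit/s uncompressed")

# Compression factors from the text: about 5-12x lossless, 20-200x lossy.
for factor in (5, 12, 20, 200):
    print(f"{factor:>3}x smaller -> {raw_bps / factor / 1e6:.1f} Mbit/s")
```

Uncompressed, this video needs about 1,500 megabits every second. At 200 times smaller, that drops below 8 megabits per second, which a home internet connection can handle.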

The two most important techniques for video compression are:

- DCT (Discrete Cosine Transform): Similar to image compression, it works on blocks of pixels.

- Motion compensation (MC): Instead of storing each new frame in full, it records how blocks of pixels have moved since the previous frame.

Most video formats (like H.26x and MPEG) use both DCT and motion compensation. Video codecs are usually paired with audio codecs, and the compressed pictures and sound are stored together in one file.

How Video Encoding Works

Video is basically a series of still pictures (frames). Video compression tries to reduce repeated information both within a single frame (spatial redundancy) and between different frames (temporal redundancy).

- Inter-frame coding: This is very important for video. Instead of saving every frame completely, the system compares one frame to the next. If nothing has moved in a certain area, it just tells the computer to copy that part from the previous frame. If something has moved, it might just record how it shifted. This saves a lot of data.

- Intra-frame coding: This compresses each frame on its own, like a still image. It's simpler and used in camcorders for easier editing.
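Here is a small Python sketch of how an encoder can find "how it shifted". It takes a block of the current frame, tries every small shift in the previous frame, and keeps the shift whose pixels differ least (the smallest sum of absolute differences). The tiny frames, the 2x2 block size, and the search range of 2 pixels are assumptions for this example. Older standards used 16x16 macroblocks, and real encoders search more cleverly and also store any leftover difference.

```python
def sad(prev, cur, bx, by, dx, dy, size):
    """Sum of absolute differences between the block of `cur` at (bx, by)
    and the block of `prev` shifted by (dx, dy)."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - prev[by + y + dy][bx + x + dx])
    return total


def best_motion_vector(prev, cur, bx, by, size=2, search=2):
    """Try every shift within +/- `search` pixels; return (cost, dx, dy) of the best."""
    height, width = len(prev), len(prev[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip shifts that would read outside the previous frame.
            if not (0 <= by + dy and by + dy + size <= height
                    and 0 <= bx + dx and bx + dx + size <= width):
                continue
            cost = sad(prev, cur, bx, by, dx, dy, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best


# A bright 2x2 square (value 9) moves one pixel to the right between frames.
prev = [
    [0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0],
    [0, 9, 9, 0, 0],
    [0, 0, 0, 0, 0],
]
cur = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 9, 0],
    [0, 0, 9, 9, 0],
    [0, 0, 0, 0, 0],
]
print(best_motion_vector(prev, cur, bx=2, by=1))  # (0, -1, 0)
```

The answer (cost 0, shift -1 in x) means: "copy this block from one pixel to the left in the previous frame". That takes far less space than storing the pixels again.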

Video compression also uses lossy compression by taking advantage of how our eyes see. For example, our eyes are less sensitive to small color differences than to brightness changes. So, the compression can average colors in similar areas, just like JPEG images.
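One common way to "average colors" keeps brightness at full detail but stores color only once for every 2x2 group of pixels (often called 4:2:0 subsampling). The sketch below averages one made-up color channel this way. It assumes the width and height are even.

```python
def subsample_2x2(channel):
    """Average each 2x2 block of a colour channel into one value.
    Assumes the width and height are even."""
    out = []
    for y in range(0, len(channel), 2):
        row = []
        for x in range(0, len(channel[0]), 2):
            block = channel[y][x] + channel[y][x + 1] + channel[y + 1][x] + channel[y + 1][x + 1]
            row.append(block / 4)
        out.append(row)
    return out


# A made-up 4x4 colour channel; it changes slowly, so averaging loses little.
cb = [
    [100, 102, 140, 142],
    [101, 103, 141, 143],
    [60, 62, 90, 92],
    [61, 63, 91, 93],
]
print(subsample_2x2(cb))  # [[101.5, 141.5], [61.5, 91.5]]
```

Sixteen color values become four. Because our eyes notice color detail less than brightness detail, the picture still looks almost the same.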

History of Video Compression

- The DCT, developed in 1974, was crucial for video compression.

- H.261, released in 1988, was the first video format to use DCT.

- The MPEG standards became very popular:

  * MPEG-1 (1991) was for VHS-quality video.

  * MPEG-2 (1994) became the standard for DVDs and SD digital television.

  * MPEG-4 (1999) followed.

- H.264/MPEG-4 AVC (2003) is a very important modern standard. It's used for Blu-ray Discs, streaming services like YouTube and Netflix, and HDTV broadcasts.

Genetics Compression

This is a newer area of compression that focuses on making genetic data (like DNA sequences) smaller. These algorithms use special methods designed for this type of data. For example, HAPZIPPER (2012) could compress genetic data much more than general compression tools. Other algorithms like DNAZip and GenomeZip (2009, 2013) can compress human genomes into very small files.
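A simple first step for DNA is that there are only four letters (A, C, G, T), so each one fits in 2 bits instead of a whole 8-bit text character. The Python sketch below shows only this basic packing, with made-up function names. It is not how HAPZIPPER, DNAZip, or GenomeZip work. Those tools reach much higher ratios by using knowledge about genetic data, such as storing only how a genome differs from a reference one.

```python
BASE_TO_BITS = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}
BITS_TO_BASE = "ACGT"


def pack_dna(seq):
    """Store each base in 2 bits; return the bytes and the number of bases."""
    value = 0
    for base in seq:
        value = (value << 2) | BASE_TO_BITS[base]
    n_bytes = (2 * len(seq) + 7) // 8
    return value.to_bytes(n_bytes, "big"), len(seq)


def unpack_dna(data, length):
    """Turn the packed bytes back into a string of bases."""
    value = int.from_bytes(data, "big")
    bases = []
    for i in range(length):
        shift = 2 * (length - 1 - i)
        bases.append(BITS_TO_BASE[(value >> shift) & 0b11])
    return "".join(bases)


seq = "GATTACAGATTACA"
packed, length = pack_dna(seq)
print(len(seq), "bytes as text ->", len(packed), "bytes packed")
assert unpack_dna(packed, length) == seq
```

This packing alone makes the data 4 times smaller than plain text, with no information lost.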

The Future of Compression

Experts estimate that the data stored on the world's storage devices could still be made about 4.5 times smaller on average, just by using compression methods that already exist. This means there's still a lot of potential to make files even smaller!

See also

In Spanish: Compresión de datos para niños

- HTTP compression

- Kolmogorov complexity

- Minimum description length

- Modulo-N code

- Motion coding

- Range coding

- Set redundancy compression

- Sub-band coding

- Universal code (data compression)

- Vector quantization
