Entropy facts for kids

Entropy is a way to measure how much of the energy in something can't be used to do useful work. It also tells us how many different ways the tiny particles (like atoms) inside something can be arranged.

Think of entropy as a measure of how mixed up or messy things are. The higher the entropy, the more uncertain we are about where each tiny particle is, because there are so many possible places they could be. A rule in physics says that it takes effort or "work" to make something less messy (lower entropy). Without that effort, things naturally tend to get messier and more disorganized over time.
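Here is a small sketch of the "many arrangements" idea. It uses 10 coins as a stand-in for particles, which is a toy assumption and not a model of real atoms. There is only one way for all 10 coins to land heads, but there are 252 ways to get five heads and five tails, so a mixed-up result is far more likely. Physicists call this count W, and Ludwig Boltzmann's formula S = k ln W turns it into entropy. The code sets k = 1 to keep the numbers simple, and the function name `arrangements` is made up for this example.

```python
from math import comb, log


def arrangements(n_coins: int, n_heads: int) -> int:
    """Count the distinct ways to get n_heads heads among n_coins coins."""
    return comb(n_coins, n_heads)


n = 10
for heads in range(n + 1):
    ways = arrangements(n, heads)
    # Boltzmann-style entropy with k = 1 (a simplification): S = ln(W)
    print(f"{heads:2d} heads: {ways:4d} ways, S = ln(W) = {log(ways):.2f}")
```

The printout shows that the evenly mixed results (around five heads) have the most arrangements and therefore the highest entropy, while "all heads" or "all tails" has only one arrangement and an entropy of zero.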

The idea of entropy first came from the study of heat and energy between 1850 and 1900. The ideas that grew out of it, especially about how to calculate probabilities, are now used in many other areas, including the study of how information works (called information theory) and chemistry.

Entropy is at the heart of the second law of thermodynamics, which says that the total entropy of an isolated system never goes down: energy tends to spread out until it is evenly mixed. The meaning of entropy can be a bit different depending on the field of study:

- Information entropy: This measures how much information is in a message. It's especially useful when there's "noise" or interference that can mess up the data.

- Thermodynamic entropy: This is part of the science of heat energy. It measures how organized or disorganized the energy is inside a group of atoms or molecules.


What is Entropy?

Entropy helps us understand why things tend to get messy. Imagine your room: if you don't put in effort to clean it, it naturally gets more disorganized over time. This is a bit like how entropy works in the universe. Energy and particles tend to spread out and become more mixed up.

Entropy and Disorder

When we talk about entropy, we often think about "disorder" or "randomness." A system with high entropy is very disorganized. For example, a broken glass has higher entropy than a whole glass. The pieces are scattered, and there are many ways they could be arranged.

Energy and Work

Entropy is also about energy that can't be used. Not all energy in a system is available to do something useful, like power a machine. The energy that's "locked away" and can't be used for work is related to entropy. As entropy increases, more energy becomes unavailable for work.

History of Entropy

The idea of entropy was developed by scientists who were studying heat engines. They wanted to understand how heat could be turned into useful work.

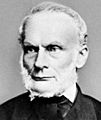

Rudolf Clausius

A German physicist named Rudolf Clausius (1822–1888) introduced the concept of entropy and gave it its name in 1865. He studied how heat moves and changes. He realized that heat flows on its own only from warmer places to cooler places, never the other way around, and that this process increases the total entropy of the universe.

Types of Entropy

While the basic idea of entropy is about disorder and energy spreading, it's used in different ways in different scientific fields.

Information Entropy

This type of entropy is used in computer science and communication. It measures the amount of uncertainty or "surprise" in a message. If a message is very predictable, it has low information entropy. If it's full of new and unexpected information, it has high information entropy. This helps engineers design better ways to send data.
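As a rough sketch, Claude Shannon's formula H = -Σ p log₂(p) can be applied to a single message by treating how often each character appears as its probability. That is a simplifying assumption: real data-sending systems use the probabilities of the whole source of messages, not just one message. The function name `shannon_entropy` is made up for this example.

```python
from collections import Counter
from math import log2


def shannon_entropy(message: str) -> float:
    """Average information per character, in bits, based on character frequencies."""
    if not message:
        return 0.0
    counts = Counter(message)
    total = len(message)
    # H = sum of p * log2(1/p) over each distinct character (same as -sum p*log2 p)
    return sum((c / total) * log2(total / c) for c in counts.values())


print(shannon_entropy("aaaaaaaa"))  # very predictable: 0.0 bits
print(shannon_entropy("abababab"))  # two equally common letters: 1.0 bit
print(shannon_entropy("abcdefgh"))  # eight equally common letters: 3.0 bits
```

A message that repeats one letter carries no surprise, so its entropy is zero. The more different letters appear, and the more evenly they are used, the higher the entropy.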

Thermodynamic Entropy

This is the original type of entropy, used in physics and chemistry. It describes how energy is distributed in a system. For example, when ice melts into water, its thermodynamic entropy increases because the water molecules are more free to move around and are less organized than in solid ice.
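The melting-ice example can be turned into a worked calculation. While ice melts at 0 °C (273.15 K), its temperature stays the same, so the entropy change is the heat it absorbs divided by the temperature: ΔS = Q / T. The sketch below assumes a latent heat of fusion of about 334 joules per gram, a commonly quoted approximate value for ice. The function name `melting_entropy_change` is made up for illustration.

```python
LATENT_HEAT_FUSION_J_PER_G = 334.0  # approximate heat needed to melt 1 g of ice
MELTING_POINT_K = 273.15            # 0 °C expressed in kelvin


def melting_entropy_change(mass_g: float) -> float:
    """Entropy gained, in J/K, when mass_g grams of ice melt at 0 °C.

    Uses dS = Q / T, which works here because the temperature
    stays constant while the ice is melting.
    """
    heat_absorbed = mass_g * LATENT_HEAT_FUSION_J_PER_G  # Q, in joules
    return heat_absorbed / MELTING_POINT_K


print(f"{melting_entropy_change(10):.1f} J/K")  # about 12.2 J/K
```

The answer is positive, which matches the idea that liquid water is less ordered than solid ice.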

Entropy in Everyday Life

You can see entropy at work all around you!

- Melting Ice: An ice cube (ordered) melts into water (less ordered). The water molecules are more spread out, increasing entropy.

- Mixing Colors: If you mix red and blue paint, they become purple. It's very hard to separate them back into pure red and blue. The mixed paint has higher entropy.

- Your Room: As mentioned, your room naturally gets messy if you don't clean it. This is a simple example of entropy increasing.


In Spanish: Entropía para niños
