Foundation model facts for kids

A foundation model is a special type of machine learning or deep learning computer program. Think of it like a super-smart brain that has learned a lot from huge amounts of information. Because it's trained on such a wide variety of data, it can be used for many different tasks. These models have changed the world of artificial intelligence (AI), powering cool tools like ChatGPT that can create new things.

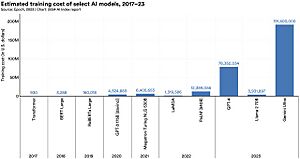

Foundation models are like all-around tools that can help with many different jobs. Building these models can be very expensive, sometimes costing millions of dollars. This money pays for the massive amounts of data and computer power needed. But once a foundation model is built, it's much cheaper to use it or adapt it for a specific task.

Some early examples of foundation models are language models (LMs) like OpenAI's GPT series and Google's BERT. These models work with text. But foundation models can also work with other types of information. For example, DALL-E creates images from text, Flamingo answers questions about images, MusicGen makes music, and RT-2 helps control robots. Foundation models are a big step in AI, and they are now being built for fields like astronomy, medicine, music, coding, and math.

What are Foundation Models?

The term "foundation model" was coined in 2021 by researchers at the Stanford Institute for Human-Centered Artificial Intelligence (HAI), in its Center for Research on Foundation Models (CRFM). They meant any model trained on broad data, usually by learning on its own without human labels, that can be adapted or "fine-tuned" for many different tasks.

Governments are also starting to define foundation models as they create rules for AI.

- In the United States, one definition says a foundation model is an AI model that is trained on broad data, usually learns on its own, has at least tens of billions of parts (called parameters), and can be used in many situations.

- In the European Union, the AI Act defines a foundation model as an AI model that is trained on lots of data, is designed to create general outputs, and can be adapted for many different jobs.

Even though these definitions have small differences, they all agree on one main thing: foundation models are trained on a wide range of data and can be used for many different things.

How Foundation Models Developed

Foundation models use older computer science ideas like deep neural networks and transfer learning. What makes them special is the huge amount of money, data, and computer power put into them. They are a new way of building AI, where one general model can be used as a base for many different projects, instead of making a new model for every single task.

The journey to foundation models started with earlier AI advancements. Before, AI programs needed exact instructions for each task. But starting in the 1990s, machine learning became widely used. Machine learning models could figure out how to do a task on their own if given enough data. This was the first big step towards modern foundation models.

The next major step was around 2010 with deep learning. With bigger datasets and more advanced neural networks, AI models became much better. A famous example is AlexNet, which won the ImageNet image recognition contest in 2012. It showed that deep learning could work well on large, general datasets. Also, new software tools like PyTorch and TensorFlow made it easier to build and scale these deep learning systems.

Foundation models really started to appear in the late 2010s with models like ELMo, GPT, BERT, and GPT-2. These language models showed how powerful it was to train on massive amounts of internet data. They learned by predicting missing or upcoming words in a text, rather than needing someone to label all the data.

Many things helped foundation models grow:

- Faster computers and special hardware (like NVIDIA GPUs).

- New neural network designs (like the Transformer).

- Using more training data with less human supervision.

Some important foundation models include GPT, BERT, GPT-2, T5, GPT-3, CLIP, DALL-E, Stable Diffusion, GPT-4, LLaMA, Llama 2, and Mistral. Each of these models brought new abilities, especially in creating new content like text or images.

The year 2022 was very important. When Stable Diffusion (for images) and ChatGPT (for text) were released, foundation models and generative AI became widely known to the public. Later, in 2023, the release of models like LLaMA, Llama 2, and Mistral led to more discussion about how these powerful models should be shared, with many people supporting "open" foundation models.

Related AI Ideas

Very Powerful AI Models

Some very advanced foundation models are called "frontier models." These models are so powerful that they could potentially cause serious problems if used incorrectly or accidentally. Because they are so strong, they need to be handled with great care. As foundation models get better, many future models might be considered frontier models.

It's hard to say exactly which models are "frontier models" because what counts as "dangerous" can be different for different people. However, some ideas for very powerful abilities that need careful handling include:

- Creating harmful substances or tools.

- Making very convincing fake information that can spread easily.

- Having strong abilities to hack computer systems.

- Being able to avoid human control in tricky ways.

Because these models can do new and unexpected things, it's hard to make rules for them. New powerful abilities can appear even after the models are released. If a frontier model is shared openly online, it can spread very quickly, making it even harder to manage.

AI for Many Uses

Because foundation models can be used for so many different things, they are sometimes called examples of "general-purpose AI." Lawmakers, such as the European Parliament, see these new general-purpose AI technologies as shaping the whole AI world. These systems often show up in everyday tools like ChatGPT or DALL-E.

Governments are making it a high priority to create rules for general-purpose AI, including foundation models. These systems are often very large and complex, and they can have unexpected effects that might lead to problems. They also greatly influence other applications built on top of them, making rules even more important.

How Foundation Models Work

Building the Model

For a foundation model to be useful for many tasks, it needs to learn a lot from its training data. This means using special model designs (called architectures) that can handle huge amounts of information efficiently. The Transformer design is currently the most popular choice for building foundation models that work with different types of data.

Training the Model

Foundation models learn by following a "training objective," which is like a goal. This goal helps the model update its internal settings based on what it predicts from the training data. For example, language models often learn by trying to predict the next word in a sentence. Image models might learn by comparing different versions of an image or by learning to "clean up" a noisy image.
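
To make the "predict the next word" goal more concrete, here is a tiny sketch in Python. It is only a stand-in: real language models adjust the settings of a neural network, while this toy simply counts which word usually follows another. The function names and the training sentence are made up for this example.

```python
from collections import Counter, defaultdict

def train_next_word_model(text):
    """Count which word follows each word in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers

def predict_next_word(followers, word):
    """Return the most common follower seen in training, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Toy training text (an assumption for illustration, not real training data).
training_text = "the cat sat on the mat and the cat slept"
model = train_next_word_model(training_text)

# "cat" follows "the" twice and "mat" follows it once, so "cat" is the guess.
print(predict_next_word(model, "the"))
```

Notice that nobody had to label the text by hand. The "right answer" for each word is just the word that comes next, which is why this kind of learning works on huge amounts of plain text.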

The training goals for foundation models help them learn general skills from the data. Since these models are designed for many tasks, their training goals should help them solve a wide range of problems in their area. Also, the training needs to be efficient and work well with very large models and lots of computer power.

Data for Training

Foundation models need a lot of data. The general rule is "the more data, the better." More data usually leads to better performance. However, managing huge datasets can be tricky. It's hard to make sure the data is good quality, follows rules, and doesn't have private information. Foundation models often use data scraped from the public internet. This data is plentiful, but it needs careful checking to remove biased, repeated, or harmful content.
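
The checking step described above can be pictured with a small Python sketch. It assumes a very simple cleaning rule: drop exact repeats and drop any document that contains a word from a blocklist. The function name, the sample documents, and the blocklist are all hypothetical, and real data cleaning is much more involved than this.

```python
def clean_dataset(documents, blocked_words):
    """Drop repeated documents and documents containing any blocked word."""
    seen = set()
    cleaned = []
    for doc in documents:
        # Lowercase and squeeze extra spaces so near-identical copies match.
        normalized = " ".join(doc.lower().split())
        if normalized in seen:
            continue  # repeated content
        seen.add(normalized)
        if any(word in blocked_words for word in normalized.split()):
            continue  # contains a blocked word
        cleaned.append(doc)
    return cleaned

# Made-up examples standing in for text scraped from the internet.
scraped = [
    "Cats are great pets.",
    "cats are   great pets.",
    "This page contains badword here.",
    "Dogs like to play fetch.",
]
print(clean_dataset(scraped, blocked_words={"badword"}))
```

Only the first cat sentence and the dog sentence are kept. A simple filter like this cannot spot unfair or private content, which is one reason data checking is hard.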

Training foundation models can sometimes risk people's privacy if private data is accidentally used or shared. Even if no private data is leaked, the model might learn behaviors that could cause security problems. Data quality is also a big concern because internet data can contain unfair or harmful material. Even after a model is in use, a small part of its training data can still cause it to behave badly.

Computer Systems Needed

The large size of foundation models also creates challenges for the computer systems they run on. Most foundation models are too big to fit into one computer's memory, and training them needs a huge amount of expensive computer power. This problem is expected to grow as models get even bigger. Because of this, researchers are looking for ways to make models smaller without losing their power.
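
A little arithmetic shows why size is a problem. The sketch below assumes each parameter is stored in 2 bytes (a common 16-bit format) and uses a made-up model with 70 billion parameters and a made-up GPU with 80 gigabytes of memory. It only counts the space for the parameters themselves; training needs much more memory than this.

```python
import math

def weights_size_gb(num_parameters, bytes_per_parameter=2):
    """Memory needed just to store the model's parameters, in gigabytes."""
    return num_parameters * bytes_per_parameter / 1e9

def gpus_needed(num_parameters, gpu_memory_gb, bytes_per_parameter=2):
    """Smallest number of GPUs whose combined memory can hold the parameters."""
    size_gb = weights_size_gb(num_parameters, bytes_per_parameter)
    return math.ceil(size_gb / gpu_memory_gb)

params = 70_000_000_000  # hypothetical 70-billion-parameter model

print(weights_size_gb(params))                # 140.0 gigabytes
print(gpus_needed(params, gpu_memory_gb=80))  # 2 GPUs just to hold the parameters
```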

Special computer chips called GPUs are often used for machine learning because they can do many calculations at the same time and have fast memory. Training a typical foundation model needs many GPUs working together over very fast connections. Getting enough powerful GPUs is a challenge for many developers. Larger models need more computer power, and they do not always use it efficiently. Since training is slow and costly, only a few big companies can afford to build the largest, most advanced foundation models.

How Models Grow and Improve

The accuracy and abilities of foundation models often improve in a predictable way as the model size and training data increase. Scientists have found "scaling laws," which are formulas based on experiments that show how resources (data, model size, computer use) relate to a model's abilities. A scaling law can help developers estimate how much better a bigger model might be before they spend the money to train it.
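
A scaling law can be pictured as a simple formula. The sketch below assumes a "power law" shape, which is a common form for these formulas, where the model's error (called loss) shrinks smoothly as the number of parameters grows. The numbers `scale` and `exponent` are invented for this example and do not come from any real model.

```python
def predicted_loss(num_parameters, scale=10.0, exponent=0.1):
    """Power-law curve: the predicted error shrinks smoothly as the model grows.

    The default scale and exponent are made-up values for illustration only.
    """
    return scale * num_parameters ** (-exponent)

# Each model here is 100 times bigger than the one before it.
for size in [1e6, 1e8, 1e10]:
    print(f"{size:.0e} parameters -> predicted loss {predicted_loss(size):.2f}")
```

With these made-up numbers, each jump in size lowers the predicted loss by the same fraction. That steady pattern is what makes the improvement "predictable."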

Adapting Models for Tasks

Foundation models are made to be used for many purposes. To use them for a specific task, they need some "adaptation." At the very least, they need to be told what task to do. But often, they perform even better if they are more deeply adapted to a specific area.

There are different ways to adapt a foundation model, like giving it specific instructions or training it a little more on new data. These methods offer different balances between how much it costs to adapt the model and how specialized it becomes. Foundation models can be very large, so adapting the whole model can be expensive. Sometimes, developers only adapt small parts of the model to save time and money. For very specific tasks, there might not be enough data available to adapt the model well. In these cases, data might need to be labeled by hand, which can be costly.
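
The idea of adapting only a small part of a model can be sketched with a toy example. Here a pretend "base model" is frozen, meaning it never changes, and only three new numbers are trained on top of it using a couple of hand-labeled examples. Everything in this sketch (the word lists, the function names, the training examples) is made up for illustration. Real foundation models and real adaptation methods are far more complex.

```python
def frozen_base_model(text):
    """Stand-in for a big pretrained model: turns text into two numbers.

    Its behavior is fixed. The adaptation below never changes it.
    """
    words = text.lower().split()
    happy = sum(w in {"great", "love", "fun"} for w in words)
    sad = sum(w in {"bad", "boring", "hate"} for w in words)
    return [happy, sad]

def train_small_head(examples, steps=20, learning_rate=0.1):
    """Learn only three numbers (two weights and a bias) on top of the base."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(steps):
        for text, label in examples:  # label: 1 = positive, 0 = negative
            features = frozen_base_model(text)
            score = sum(w * f for w, f in zip(weights, features)) + bias
            guess = 1 if score > 0 else 0
            error = label - guess  # only adjust when the guess was wrong
            weights = [w + learning_rate * error * f
                       for w, f in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias

def classify(text, weights, bias):
    """Use the frozen base plus the small trained part to label new text."""
    features = frozen_base_model(text)
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else 0

# Two hand-labeled examples: the costly "labeled by hand" data.
labeled = [("I love this fun game", 1), ("what a boring bad movie", 0)]
weights, bias = train_small_head(labeled)

print(classify("a great and fun book", weights, bias))     # 1 (positive)
print(classify("I hate this boring song", weights, bias))  # 0 (negative)
```

Because the big part stays frozen, only a tiny amount of training is needed. The trade-off is that the adapted model can only be as good as what the frozen base already knows.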

Checking How Models Perform

Checking how foundation models perform is a very important part of building them. This helps track progress and sets standards for future models. People who use these models rely on these checks to understand how the models behave. Usually, foundation models are compared using standard tests like MMLU and HumanEval. Since foundation models can do many things, there are also "meta-benchmarks" that combine different tests.
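
Scoring a test can be as simple as counting right answers. The sketch below assumes each test is graded by comparing the model's answers to an answer key, and that a "meta-benchmark" is just the average of several test scores. The test names and answers are made up, and real benchmarks may combine scores in other ways.

```python
def accuracy(model_answers, correct_answers):
    """Fraction of questions the model answered correctly."""
    right = sum(m == c for m, c in zip(model_answers, correct_answers))
    return right / len(correct_answers)

def meta_benchmark(scores):
    """Combine several test scores into one number by simple averaging."""
    return sum(scores.values()) / len(scores)

# Hypothetical results on two small tests.
scores = {
    "quiz_questions": accuracy(["B", "C", "A", "D"], ["B", "C", "D", "D"]),
    "coding_tasks": accuracy(["pass", "fail"], ["pass", "pass"]),
}

print(scores)                  # 0.75 on the quiz, 0.5 on the coding tasks
print(meta_benchmark(scores))  # 0.625 overall
```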

Proper checks look at two things: how well a foundation model works across many different applications, and what the model can do on its own. To keep comparisons fair, some evaluation methods also count all the resources (such as extra data and computer power) used to adapt each model, which helps everyone understand the models better.

How Foundation Models Are Built and Supplied

Foundation models play a special role in the AI world, connecting many different technologies. Training a foundation model needs several things: data, computer power, people, hardware, and code. Foundation models often need huge amounts of data and computer power. Because it costs a lot to develop foundation models but less to adapt them, a few AI companies now build these models for others to use. These companies often get their data from specialized providers and their computer power from big cloud services like Amazon Web Services, Google Cloud, and Microsoft Azure.

The company building the foundation model then uses this data and computer power to train the model. Once the model is built, less data and human effort are needed. In this process, hardware and computer power are the most important resources and also the hardest to get. To train bigger and more complex AI, having enough computer power is key. However, this power is controlled by a few companies, which most foundation model developers rely on. This means the process of making foundation models is concentrated around a small number of providers. Computer power is also very expensive; in 2023, AI companies spent over 80% of their money on computer resources.

Foundation models need a lot of general data to work. Early models used data scraped from parts of the internet. As models get bigger, they need even more internet data, which increases the chance of including biased or harmful information. This bad data can unfairly affect certain groups of people.

To fix the problem of low-quality data from unsupervised training, some developers use people to manually filter the data. This work is often outsourced to reduce costs, with some workers earning very little money.

Once built, the foundation model is usually put online by the developer or another organization. Then, other people can create applications based on the foundation model, either by fine-tuning it or using it for completely new purposes. This allows one foundation model to reach and help many people.

How Models are Released

After a foundation model is built, it can be shared in different ways. A release has several parts: what is shared, who can use it, how access might change over time, and the rules for using it. All these things affect how the model will be used by others. The two most common ways to release foundation models are through APIs or direct downloads.

When a model is released through an API, users can send requests to the model and get answers, but they can't directly see or change the model itself. On the other hand, a model can be made directly downloadable, allowing users to inspect and change it. Both of these are sometimes called "open releases" because the public can use the model, but only a downloadable model can be studied and changed. The exact meaning of "open" is debated, and the Open Source Initiative provides generally accepted definitions.

Some foundation models described as open include PaLM 2 (available through an API) and Llama 2 and Mistral (available to download). While open foundation models can help research and development move faster, they can also be misused. Anyone can download openly shared model files, and very powerful ones could be changed to cause harm, either on purpose or by accident.

In a "closed release," the foundation model is not available to the public. It's only used inside the organization that built it. These releases are considered safer, but they don't offer much help to the research community or the public.

Some foundation models, like Google DeepMind's Flamingo, are completely closed, meaning only the developers can use them. Others, like OpenAI's GPT-4, have limited access, meaning the public can use them but can't see how they work inside. And some, like Meta's Llama 2, are open, meaning their core parts are widely available for others to change and study.
