Transformer (deep learning architecture) facts for kids

A transformer is a special type of deep learning computer program. It was introduced by researchers at Google in 2017. Transformers are really good at understanding and creating human language, like the words you're reading right now!

Imagine you have a sentence. A transformer breaks this sentence into small pieces called "tokens" (like words or parts of words). Then, it turns each token into a special number code. What makes transformers so clever is how they pay "attention" to different parts of the sentence. This helps them understand how words relate to each other, even if they are far apart in a long sentence.

Because transformers don't need to process words one by one in a strict order, they can learn much faster than older types of computer programs. This speed has made them super popular for building large language models (LLMs). These are the huge AI models that can chat with you, write stories, or answer questions.

Today, transformers are used in many cool ways:

- Understanding and creating language (like ChatGPT).

- Helping computers "see" and understand images.

- Processing sounds.

- Even playing chess at a grandmaster level!

They have also helped create famous AI systems like generative pre-trained transformers (GPTs) and BERT.

How Transformers Started

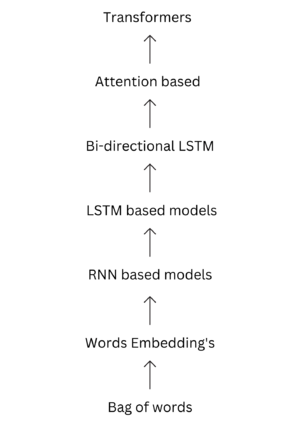

Computers have been trying to understand language for a long time. Here's a quick look at how we got to transformers:

- 1990: Early computer networks called "Elman networks" could turn words into number codes. This helped them predict what might come next in a sentence. But they struggled with words that had many meanings.

- 1992: A scientist named Jürgen Schmidhuber worked on "fast weight controllers." He later said this was an early idea for how computers could "pay attention."

- 1993: IBM used statistical "alignment models" to help computers translate languages.

- 2012: A system called AlexNet showed how powerful large computer networks could be for understanding images. This encouraged scientists to build bigger networks for language too.

- 2014: Scientists created "seq2seq" models for translation. These models used two parts: an "encoder" to understand the input sentence and a "decoder" to create the translated sentence. They used a type of network called long short-term memory (LSTM).

- 2014: Another big step was adding an "attention mechanism" to these translation models. This helped the computer focus on the most important parts of the sentence when translating.

- 2016: Google Translate started using these new "neural network" methods, which were much better than older ways.

- 2017: The big breakthrough! The "Attention Is All You Need" paper introduced the original transformer model. It removed the slow, step-by-step parts of older networks and used a faster "attention" method that could work on all parts of a sentence at once. This made training much quicker!

- 2018: The BERT model came out, using only the "encoder" part of the transformer. It was great at understanding context.

- 2020: Transformers started being used for images (vision transformers) and speech, doing better than older methods.

- 2020–2022: Huge models like GPT-3 (2020) and ChatGPT (2022), a chatbot built on GPT models, became very popular, showing what transformers could do.

- 2024: Transformers even reached grandmaster level in chess, just by looking at the board, without searching ahead through possible moves!

What Came Before Transformers?

Before transformers, computer programs that handled sequences (like sentences) often used "recurrent neural networks." These networks processed information one step at a time. Imagine reading a very long book and trying to remember every detail from the beginning to the end. It's hard! These networks had a similar problem, called the "vanishing-gradient problem," which made it hard for them to remember things from far back in a sequence.

Then came LSTM in 1995. LSTMs were much better at remembering things over long sequences. For a long time, LSTMs were the best way to handle sequences.

However, LSTMs still had one big problem: they couldn't work on all parts of a sentence at the same time. They had to go word by word. This made them slow to train, because they couldn't take full advantage of powerful computer chips like GPUs, which are best at doing many calculations at once.

How Attention Helped

In 2014, the "attention mechanism" was added to these older models. Imagine you're translating a sentence from English to French. When you translate a word, you don't just look at that one word; you look at other words in the sentence that give it meaning. The attention mechanism allowed the computer to do something similar. It could "look back" at all the input words to decide how to translate the current word.

But even with attention, these models were still slow because they had to process words one after another.

Then, in 2017, the transformer came along. It completely changed the game by using "self-attention." This meant the computer could look at *all* the words in a sentence at the same time and figure out how they relate to each other. This made training much, much faster because it could use powerful GPUs to work on everything in parallel.

Training Transformers

Training a transformer is like teaching a very smart student. It involves two main steps:

- Pretraining: First, the transformer learns from a huge amount of data without specific instructions. It might read billions of sentences from the internet (like Wikipedia or Common Crawl). During this stage, it learns general language patterns. For example, it might learn to fill in missing words in a sentence, like:

* `Thank you <X> me to your party <Y> week.` -> `for inviting` and `last`

- Fine-tuning: After pretraining, the transformer is given specific tasks with labeled examples. This is like giving the student practice problems. For example, it might learn to:

* Translate languages: `translate English to German: That is good.` -> `Das ist gut.`

* Answer questions.

* Summarize documents.

* Figure out if a sentence sounds natural or not.

This two-step process helps transformers become very good at many different language tasks.
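The fill-in-the-blank example in the pretraining step is the kind Google's T5 model learned from: stretches of words are swapped for markers like `<X>` and `<Y>`, and the model must write out the hidden words after each marker. The Python sketch below builds one such training pair. The function name `corrupt_spans` and the hand-picked word positions are illustrative assumptions; a real training system picks the hidden stretches at random.

```python
SENTINELS = ["<X>", "<Y>", "<Z>"]


def corrupt_spans(words, spans):
    """Hide each (start, end) stretch of words behind a marker like <X>.

    Returns the corrupted input and the target the model should learn to write.
    """
    assert len(spans) < len(SENTINELS), "need one spare marker to end the target"
    inputs, targets = [], []
    position = 0
    for marker, (start, end) in zip(SENTINELS, spans):
        inputs.extend(words[position:start])  # keep the words before the blank
        inputs.append(marker)                 # a marker takes the hidden words' place
        targets.append(marker)
        targets.extend(words[start:end])      # the hidden words become the answer
        position = end
    inputs.extend(words[position:])
    targets.append(SENTINELS[len(spans)])     # one last marker ends the target
    return " ".join(inputs), " ".join(targets)


words = "Thank you for inviting me to your party last week.".split()
model_input, model_target = corrupt_spans(words, [(2, 4), (8, 9)])
print(model_input)   # Thank you <X> me to your party <Y> week.
print(model_target)  # <X> for inviting <Y> last <Z>
```

The target lists each marker followed by the words it hid ("for inviting" and "last"), ending with one extra marker.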

What Transformers Can Do

Transformers have changed how computers work with language and beyond. Here are some cool things they can do:

- Translate languages: Turn text in one language into another, as Google Translate does.

- Summarize documents: Read a long article and give you the main points.

- Generate text: Write stories, poems, emails, or even computer code!

- Identify names: Find names of people, places, or organizations in text.

- Understand biology: Analyze DNA or protein sequences.

- Understand videos: Figure out what's happening in a video.

- Predict protein shapes: Help scientists understand how proteins fold, which is important for medicine.

For example, a special transformer called Ithaca can read old Greek writings, even if parts are missing. It can guess the missing letters, where the writing came from, and how old it is!

Where to Find Transformers

You can find transformer models in popular computer programming tools like TensorFlow and PyTorch.

There's also a special library called Transformers by Hugging Face. It provides many ready-to-use transformer models that people can use for their own projects.

How Transformers Are Built

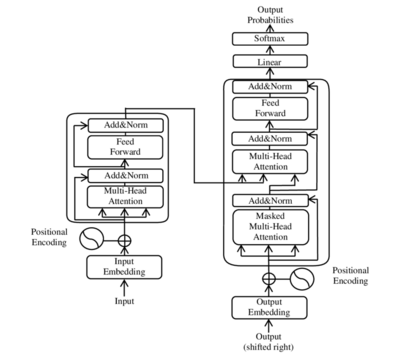

All transformers have some key parts:

- Tokenizers: These break down text into small pieces (tokens).

- Embedding Layer: This turns the tokens and their positions in the sentence into number codes that the computer can understand.

- Transformer Layers: These are the main "thinking" parts. They process the number codes over and over, pulling out more and more meaning from the language. These layers have "attention" parts and "feed-forward" parts.

- (Optional) Un-embedding Layer: This turns the final number codes back into words or probabilities of words.
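The un-embedding layer can be pictured as giving every word in the vocabulary a score and then turning the scores into probabilities with a formula called softmax. Below is a tiny Python sketch of that idea. The three-word vocabulary, the made-up numbers, and the function names `unembed` and `softmax` are illustrative assumptions; a real model has tens of thousands of vocabulary entries and learns its numbers during training.

```python
import math


def softmax(scores):
    """Turn a list of scores into probabilities that add up to 1."""
    biggest = max(scores)  # subtracting the largest score avoids overflow
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def unembed(final_code, unembedding_rows):
    """Score each vocabulary word with a dot product, then apply softmax."""
    scores = [sum(c * w for c, w in zip(final_code, row)) for row in unembedding_rows]
    return softmax(scores)


vocabulary = ["cat", "dog", "pizza"]
# One made-up row of numbers per vocabulary word (hypothetical values).
unembedding_rows = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
final_code = [2.0, 0.0]  # pretend output of the last transformer layer

probabilities = unembed(final_code, unembedding_rows)
for word, p in zip(vocabulary, probabilities):
    print(f"{word}: {p:.2f}")
```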

There are two main types of transformer layers: encoders and decoders. A model can use one type or both:

- Encoder-only models: Like BERT, these are great at understanding text. They read the whole sentence at once.

- Decoder-only models: Like GPT models, these are good at generating text, one word at a time. When predicting a word, they only "see" the words that come before it.

- Encoder-decoder models: The original transformer used both. The encoder understands the input (like an English sentence), and the decoder creates the output (like a French translation).

Input: Getting Words Ready

First, your words are broken into tokens. Then, each token gets a special number code. To help the transformer understand the order of words, it also adds "positional information" to these codes. This tells the transformer where each word is in the sentence.
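Here is a toy Python sketch of these input steps. It assumes a very simple tokenizer that just splits on spaces (real tokenizers often cut words into smaller pieces) and fills the code tables with random numbers instead of learned ones. Adding a separate code for each position is one common way to include positional information; the original transformer used sine and cosine waves instead. All names here are hypothetical.

```python
import random

random.seed(0)  # make the made-up numbers the same on every run
CODE_SIZE = 4   # how many numbers are in each code (tiny, for illustration)


def tokenize(text):
    """Toy tokenizer: lowercase the text and split it on spaces."""
    return text.lower().split()


def build_vocabulary(tokens):
    """Give each different token its own ID number, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def random_code():
    """A made-up code; a real model learns these numbers during training."""
    return [random.uniform(-1.0, 1.0) for _ in range(CODE_SIZE)]


tokens = tokenize("The cat sat on the mat")
vocab = build_vocabulary(tokens)
token_ids = [vocab[token] for token in tokens]

token_table = [random_code() for _ in range(len(vocab))]      # one code per vocabulary entry
position_table = [random_code() for _ in range(len(tokens))]  # one code per position

# Each input code is the token's code plus the code for its position.
inputs = [
    [t + p for t, p in zip(token_table[token_id], position_table[position])]
    for position, token_id in enumerate(token_ids)
]

print(tokens)     # ['the', 'cat', 'sat', 'on', 'the', 'mat']
print(token_ids)  # [0, 1, 2, 3, 0, 4]
```

The two copies of "the" start from the same token code but end up with different input codes, because their positions differ.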

The "Attention" Idea

The most important part of a transformer is its "attention" mechanism. Imagine you have a sentence, and you want to understand the word "bank." Does it mean a river bank or a money bank? The transformer looks at all the other words in the sentence to figure it out.

It does this by creating three special number codes for each word:

- A query code (what am I looking for?).

- A key code (what do I have?).

- A value code (what information does this word carry?).

The transformer then compares the "query" of one word with the "keys" of all the words in the sentence, including itself. If a query and a key match well, it means those words are related. The transformer then mixes together the "values" of the words, giving the most weight to the words whose keys matched best, to understand the original word better. This happens for every word in the sentence, all at the same time!
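The query-key-value steps can be written out in a few lines. The Python sketch below is a minimal, assumed version of "scaled dot-product attention", the form used in the original transformer paper: each query is compared with every key using a dot product (multiply matching numbers and add them up), the scores are divided by the square root of the code length, softmax turns them into weights that add up to 1, and the weights decide how much of each value goes into the answer. In a real model the queries, keys, and values are calculated from each word's code using learned numbers; here they are simply made up.

```python
import math


def softmax(scores):
    """Turn scores into weights that are positive and add up to 1."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Multiply matching numbers and add them up: a measure of how well two codes match."""
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention over a list of words."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Compare this word's query with every word's key (including its own).
        weights = softmax([dot(q, k) / scale for k in keys])
        # Mix the values, giving more weight to words whose keys matched better.
        mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


# Three words with made-up two-number codes (hypothetical values).
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

for row in attention(queries, keys, values):
    print([round(x, 2) for x in row])
```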

Many "Attention Heads"

A transformer doesn't just have one "attention" process; it has many, called "attention heads." Each head learns to focus on different kinds of relationships between words. For example, one head might focus on verbs and the words they act upon. Another might focus on words that are close together. By having many heads, the transformer gets a much richer understanding of the sentence.
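One common way to build many heads is to cut each word's query, key, and value codes into equal slices and give each head its own slice. The Python sketch below assumes that approach, runs the attention steps separately for every slice, and glues the results back together. It leaves out the extra learned step that real models use to mix the heads' combined answers, and the names and numbers are illustrative.

```python
import math


def softmax(scores):
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """One head of scaled dot-product attention."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        outputs.append([sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))])
    return outputs


def split_heads(codes, num_heads):
    """Cut every word's code into num_heads equal slices: one list of slices per head."""
    assert len(codes[0]) % num_heads == 0, "code length must divide evenly among heads"
    size = len(codes[0]) // num_heads
    return [[code[h * size:(h + 1) * size] for code in codes] for h in range(num_heads)]


def multi_head_attention(queries, keys, values, num_heads):
    q_heads = split_heads(queries, num_heads)
    k_heads = split_heads(keys, num_heads)
    v_heads = split_heads(values, num_heads)
    # Each head runs attention on its own slices, independently of the others.
    head_outputs = [attention(q, k, v) for q, k, v in zip(q_heads, k_heads, v_heads)]
    # Glue the heads' answers back together, word by word.
    return [[x for head in head_outputs for x in head[word]] for word in range(len(queries))]


# Two words with made-up four-number codes, shared by 2 heads (hypothetical values).
codes = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
for row in multi_head_attention(codes, codes, codes, num_heads=2):
    print([round(x, 3) for x in row])
```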

Masked Attention

When a transformer is generating text (like a GPT model), it needs to predict the next word. It shouldn't "cheat" by looking at words that come later. So, a "mask" is used to hide future words from the transformer's view. This makes sure each word is predicted using only the words before it, just like a person writing a sentence one word at a time.
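A mask can be sketched in Python as follows. Here `scores[i][j]` is a made-up number saying how much word `i` wants to look at word `j`. Any score for a later word is replaced by minus infinity, which the softmax step turns into a weight of exactly zero, so no attention goes to future words. The function name and scores are hypothetical.

```python
import math


def softmax(scores):
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]  # exp(-inf) is exactly 0.0
    total = sum(exps)
    return [e / total for e in exps]


def masked_attention_weights(scores):
    """Turn a square table of attention scores into weights with a causal mask.

    scores[i][j] says how much word i wants to look at word j. Word i may only
    look at words 0..i, so every later word gets a score of minus infinity.
    """
    weights = []
    for i, row in enumerate(scores):
        masked_row = [s if j <= i else -math.inf for j, s in enumerate(row)]
        weights.append(softmax(masked_row))
    return weights


# Three words whose (made-up) scores are all equal.
scores = [[1.0, 1.0, 1.0] for _ in range(3)]
for row in masked_attention_weights(scores):
    print([round(w, 3) for w in row])
```

The first word can only look at itself, the second splits its attention between the first two words, and the third spreads it over all three.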

Positional Encoding: Knowing Where Words Are

Since the attention mechanism processes all words at once, the transformer needs a way to know the order of words. That's where "positional encoding" comes in. It adds special numerical information to each word's code that tells the transformer its position in the sentence. This helps the transformer understand if a word comes at the beginning, middle, or end, or how far apart two words are.
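The original 2017 transformer paper built its position codes from sine and cosine waves of different speeds. For position `pos` and slot `i` in a code of length `d`, even slots use sin(pos / 10000^(2k/d)) and odd slots use cos(pos / 10000^(2k/d)), where k = i // 2 numbers each sine/cosine pair. The Python sketch below is a small, unoptimized version of that formula; the function name `positional_encoding` is an assumption.

```python
import math


def positional_encoding(pos, size):
    """Sine/cosine position code in the style of the original transformer paper.

    Slots 2k and 2k+1 form a pair: the even slot uses sine, the odd slot uses
    cosine, and each pair waves at its own speed set by 10000 ** (2k / size).
    """
    code = []
    for i in range(size):
        k = i // 2  # which sine/cosine pair this slot belongs to
        angle = pos / (10000 ** (2 * k / size))
        code.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return code


for pos in range(4):
    print(pos, [round(x, 3) for x in positional_encoding(pos, 4)])
```

Because the slow waves change only a little from one position to the next and the fast waves change a lot, every position ends up with its own pattern of numbers.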

Newer Transformer Ideas

Scientists are always working to make transformers even better and faster.

Faster Ways to Work

- FlashAttention: This is a clever way to make the attention part of transformers run much faster on special computer chips (GPUs). It helps move data around more efficiently.

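- Multi-Query Attention: In this change, all the attention heads share one set of key and value codes instead of each head having its own. This means less data to store and move around, which helps transformers generate text faster, especially when many people are using them at the same time.

- Caching: When a transformer generates text word by word, it would otherwise recalculate the key and value codes of all the earlier words at every step. "KV caching" saves these codes so they don't have to be calculated again, making text generation much quicker.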

- Speculative Decoding: Imagine you're trying to guess the next few words in a sentence. This method uses a smaller, faster model to make a few guesses. Then, the main, bigger transformer quickly checks if those guesses are correct. If they are, it saves a lot of time!
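The block-by-block trick behind FlashAttention can be shown without a GPU. The sketch below compares ordinary attention for one query with a version that reads the keys and values a few at a time and keeps a running total, rescaling it whenever a bigger score appears (a technique often called "online softmax"). Both give the same answer. This only illustrates the idea under simplifying assumptions: the real FlashAttention is a GPU program that processes many queries at once in fast on-chip memory, and the function names here are made up.

```python
import math


def attention_one_query(q, keys, values):
    """Ordinary attention for one query: all scores at once, then softmax."""
    scale = math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
    biggest = max(scores)
    weights = [math.exp(s - biggest) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(len(values[0]))]


def blocked_attention_one_query(q, keys, values, block_size):
    """Same answer, but reads keys and values one small block at a time.

    Keeps a running maximum score, a running sum of weights, and a running
    weighted sum of values, shrinking them whenever a bigger score shows up.
    """
    scale = math.sqrt(len(q))
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        block_k = keys[start:start + block_size]
        block_v = values[start:start + block_size]
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in block_k]
        new_max = max(running_max, max(scores))
        # Rescale what we had so far to match the new maximum (0.0 on the first block).
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [x * correction for x in acc]
        for s, v in zip(scores, block_v):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [x + w * vi for x, vi in zip(acc, v)]
        running_max = new_max
    return [x / running_sum for x in acc]


# Made-up query, keys, and values (hypothetical numbers).
q = [0.5, -1.0]
keys = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0], [-2.0, 0.5], [1.5, 1.5]]
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [-1.0, 3.0], [4.0, -2.0]]
print(attention_one_query(q, keys, values))
print(blocked_attention_one_query(q, keys, values, block_size=2))
```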
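KV caching works because, with masked attention, an earlier word's key and value never change when new words are added. The Python sketch below assumes a hypothetical `make_key_value` function standing in for the learned calculations, uses each word's own code as its query for simplicity, and counts how many key/value calculations are needed with and without a cache.

```python
import math

calls = 0  # counts how many key/value calculations are done


def make_key_value(code):
    """Hypothetical stand-in for the learned key/value calculation of one word."""
    global calls
    calls += 1
    key = [x * 0.5 for x in code]
    value = [x + 1.0 for x in code]
    return key, value


def attend(query, keys, values):
    """Scaled dot-product attention for a single query."""
    scale = math.sqrt(len(query))
    scores = [sum(a * b for a, b in zip(query, k)) / scale for k in keys]
    biggest = max(scores)
    weights = [math.exp(s - biggest) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(len(values[0]))]


def generate_with_cache(words):
    cached_keys, cached_values = [], []
    outputs = []
    for code in words:
        key, value = make_key_value(code)  # only the NEW word is calculated
        cached_keys.append(key)
        cached_values.append(value)
        outputs.append(attend(code, cached_keys, cached_values))
    return outputs


def generate_without_cache(words):
    outputs = []
    for step, code in enumerate(words):
        pairs = [make_key_value(c) for c in words[:step + 1]]  # recalculates every earlier word
        outputs.append(attend(code, [k for k, _ in pairs], [v for _, v in pairs]))
    return outputs


# Made-up codes for four words that arrive one at a time (hypothetical values).
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]

calls = 0
cached = generate_with_cache(words)
cached_calls = calls

calls = 0
uncached = generate_without_cache(words)
uncached_calls = calls

print(cached_calls, uncached_calls)  # 4 10
```

With four words, the cache needs 4 key/value calculations while recalculating from scratch needs 1 + 2 + 3 + 4 = 10, and the attention results are identical.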
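Speculative decoding can be sketched with two pretend models. In the greedy Python version below, the hypothetical `small_model` guesses three words ahead, `big_model` checks them, every guess up to the first mistake is kept, and the mistake is swapped for the big model's own choice. The final text is exactly what the big model would have written by itself. Assumptions to note: real systems check all guesses in one parallel pass (here they are checked one by one for clarity, so this toy saves no time) and use a probability-based acceptance rule when words are picked at random.

```python
SENTENCE = "the cat sat on the mat and purred".split()


def big_model(tokens):
    """Hypothetical 'big model': always knows the next word of SENTENCE."""
    return SENTENCE[len(tokens)] if len(tokens) < len(SENTENCE) else "."


def small_model(tokens):
    """Hypothetical 'small model': usually right, but says 'rug' instead of 'mat'."""
    word = big_model(tokens)
    return "rug" if word == "mat" else word


def greedy_decode(next_word, prompt, num_new):
    """Plain decoding: ask the model for one word at a time."""
    tokens = list(prompt)
    for _ in range(num_new):
        tokens.append(next_word(tokens))
    return tokens


def speculative_decode(target, draft, prompt, num_new, k=3):
    """Greedy speculative decoding: the draft guesses k words, the target checks them."""
    tokens = list(prompt)
    goal = len(prompt) + num_new
    while len(tokens) < goal:
        # 1. The small model quickly guesses k words in a row.
        guesses = []
        for _ in range(k):
            guesses.append(draft(tokens + guesses))
        # 2. The big model checks the guesses (one by one here, in parallel in real systems).
        accepted = []
        for guess in guesses:
            correct = target(tokens + accepted)
            accepted.append(correct)  # keep the right word either way
            if guess != correct:
                break                 # stop at the first wrong guess
        tokens.extend(accepted)
    return tokens[:goal]


print(" ".join(speculative_decode(big_model, small_model, ["the"], 7)))
# the cat sat on the mat and purred
```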

Handling Different Types of Data

Transformers were first made for text, but now they can handle all sorts of data:

- Vision transformers: These break images into small patches and treat them like words. This lets transformers "see" and understand pictures.

- Speech transformers: These turn spoken words into a picture of the sound called a spectrogram, which is then treated like an image. This helps computers understand what you say.

- Multi-modal transformers: Some new transformers can understand many types of data at once, like text, images, and sounds, all together!
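Cutting an image into patches is simple to show. The Python sketch below splits a tiny made-up 4×4 grayscale image into four 2×2 patches and flattens each one into a list of numbers, which a vision transformer would then treat like a token. The original Vision Transformer used patches of 16×16 pixels and then turned each flattened patch into its code with learned numbers; that step is left out here, and the function name is an assumption.

```python
def image_to_patches(image, patch_size):
    """Cut a 2-D grid of pixel values into square patches, left-to-right, top-to-bottom.

    Each patch is flattened into one list of numbers, which a vision
    transformer would then treat like one "word" (token).
    """
    height, width = len(image), len(image[0])
    assert height % patch_size == 0 and width % patch_size == 0, "image must divide evenly"
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A tiny made-up 4x4 grayscale "image" (hypothetical pixel values 0-15).
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, 2)
for p in patches:
    print(p)
```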

Learn More

- Perceiver

- BERT (language model)

- GPT-3

- GPT-4

- ChatGPT

- Vision transformer

- BLOOM (language model)
