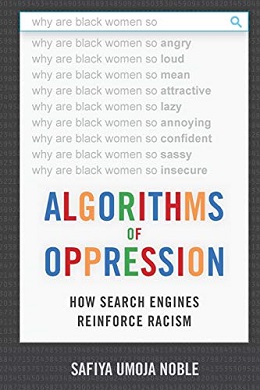

Algorithms of Oppression facts for kids

First edition

| Author | Safiya Noble |
|---|---|
| Country | United States |
| Language | English |
| Subject | Racism, algorithms |
| Genre | Non-fiction |
| Published | February 2018 |
| Publisher | NYU Press |
| Pages | 256 pp |
| ISBN | 978-1-4798-4994-9 (Hardcover) |

Algorithms of Oppression: How Search Engines Reinforce Racism is a book from 2018 by Safiya Umoja Noble. It explores how search engines, like Google, can sometimes show unfair or biased results. The book looks at how these digital tools affect people and society.

About the Author and Book

Safiya Noble studied sociology in college during the 1990s. She then worked in advertising and marketing for 15 years. Later, she earned a master's degree in library and information science.

The idea for her book came to her in 2011. She noticed that when she searched for "black girls" on Google, the results were often negative. Her master's paper in 2012 was about this topic. She even thought of the title "Algorithms of Oppression" back then.

Noble became a professor at the University of California, Los Angeles (UCLA) in 2014. In 2017, she wrote an article about unfair search results. Her book was published on February 20, 2018.

What the Book Is About

Algorithms of Oppression is based on over six years of research. It looks at Google search algorithms from 2009 to 2015. The book discusses how search engines can show unfair results.

Noble argues that search algorithms are not always neutral. They can reflect, and even worsen, the unfair ideas that already exist in society. She explains that search engines often favor "whiteness." This means they tend to show positive results when people search with keywords like "white," while searches for terms like "Asian," "Hispanic," or "Black" are less likely to return positive results.

A main example in the book is the difference between searching for "Black girls" versus "white girls." The results for "Black girls" often showed harmful stereotypes. These algorithms can negatively affect women of color and other groups. They can also lead to unfair profiling and wrong information for all internet users.

Noble uses a "Black intersectional feminist" approach. This means she looks at how race and gender together affect people's experiences. She shows how racism can get into the very code of algorithms. This can happen in search engines, facial recognition, and even medical programs. She argues that many new technologies, despite seeming modern, can keep old forms of unfairness going.

Main Ideas in the Chapters

Chapter 1: How Search Suggestions Can Be Harmful

In Chapter 1, Safiya Noble talks about Google's auto-suggestion feature. This feature tries to guess what you are typing. Noble explains how these suggestions can sometimes be upsetting or unfair.

She also discusses how Google deals with harmful search results. For example, when "Jew" was searched, many anti-Semitic pages appeared. Google said it was not responsible for these results. They suggested people use "Jews" or "Jewish people" instead. Google usually only removes pages if they are against the law.

Noble also looks at Google's advertising tool, AdWords. This tool lets anyone advertise on Google search pages. Ads are ranked by how relevant they are to a search. But the more money an advertiser spends, the higher their ad might appear. This means controversial topics can sometimes show up first if someone spends a lot to promote them.

Chapter 2: Google's Role in Unfairness

In Chapter 2, Noble explains that Google has made racism worse and often denies responsibility for it. Google tends to blame the people who create the content or the people who search for it.

Noble argues that Google's design and structure often favor white people and men. She found that searches for "black girls" often showed stereotypes. Google's algorithm sorts information into categories based on its own assumptions. Noble says Google hides behind its algorithm, even though that algorithm can keep unfairness going.

Chapter 3: Spreading Misleading Stories

Chapter 3 discusses how Google's search engine can combine different sources to create harmful stories about minority groups. Noble gives an example of searching for "black on white crimes." She found that the results often came from conservative sources that twisted information. These sources spread racist, anti-Black information.

Chapter 4: Control Over Your Identity Online

In Chapter 4, Noble talks about how Google has strong control over a person's online identity. She explains that what's on the internet can stay there forever. This can affect a person's future. She compares privacy laws in the U.S. to those in Europe. European citizens have a "right to be forgotten," meaning they can ask for their data to be deleted.

Noble points out that this lack of privacy can affect women and people of color more. Google says it protects our data, but it doesn't always address what happens when you want your information removed.

Chapter 5: Unfairness Beyond Google

Chapter 5 expands the discussion beyond Google. Noble argues that other trusted information sources, like the Library of Congress, can also promote unfair ideas. She says they often favor whiteness and traditional ways of thinking.

She shares a story about students who fought for two years to change the Library of Congress's subject heading "illegal aliens" to "noncitizen." Noble stresses that misrepresentation and incorrect classification cause problems. She shows how deeply technology and society are connected, and how many digital search engines can carry old unfairness forward.

Chapter 6: Finding Solutions

In Chapter 6, Safiya Noble suggests ways to fix the problem of algorithmic bias. She believes that governments should create policies to reduce Google's "information monopoly." This would help regulate how search engines filter results. She says governments and big companies have the most responsibility to fix these problems.

Noble also disagrees with the idea that more women and minorities in tech will solve everything. She calls this idea "too relaxed." She says it puts the blame on individuals, who have less power than big media companies. She uses the example of a Black hairdresser whose business faced problems because of biased advertising on a review site.

She ends the chapter by calling on government groups like the Federal Communications Commission (FCC) to "regulate decency." This means limiting racist, homophobic, or prejudiced content online. She urges people to avoid "colorblind" ideas about race, which ignore the struggles of minority groups. She also warns that "big-data optimism" often overlooks how big data can harm minority communities more than others.

Conclusion

In Algorithms of Oppression, Safiya Noble looks at how our Google searches affect society and politics. She challenges the idea that the internet is completely fair or "post-racial." Each chapter explores a different way search engines can create unfair biases. Because it explains important ideas clearly, Algorithms of Oppression is a book that many different people can understand, not just experts.
