IBM Watson facts for kids

| Operators | IBM |
|---|---|
| Location | Thomas J. Watson Research Center, New York, USA |
| Architecture | 2,880 POWER7 processor threads |
| Memory | 16 terabytes of RAM |
| Speed | 80 teraFLOPS |

IBM Watson is a computer system created by IBM. It was designed to understand and answer questions asked in everyday language, the way people normally speak. It grew out of IBM's DeepQA research project, led by David Ferrucci. The system was named after Thomas J. Watson, who founded IBM and was its first CEO.

Watson first became famous for playing on the TV quiz show Jeopardy!. In 2011, it competed against two of the show's greatest champions, Brad Rutter and Ken Jennings. Watson won the competition, taking home the top prize of US$1 million.

After its success on Jeopardy!, IBM started looking for ways to use Watson in the real world. In 2013, Watson's first commercial use was to help doctors make decisions about treating lung cancer at Memorial Sloan Kettering Cancer Center in New York City.

How IBM Watson Works

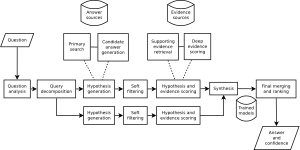

Watson was built as a question answering (QA) computer system. It uses advanced technologies like natural language processing (understanding human language), information retrieval (finding information), knowledge representation (organizing facts), automated reasoning (making logical conclusions), and machine learning (learning from data). These technologies help Watson answer questions on many different topics.

IBM explained that Watson uses over 100 different methods to understand language. It finds information, creates possible answers, looks for proof, and then ranks the best answers.
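The four steps above (find information, create possible answers, look for proof, rank the answers) can be sketched in a few lines of Python. This is only a toy illustration, not IBM's DeepQA code: the `PASSAGES` list, the `STOPWORDS` set, and the word-overlap scoring are all assumptions made up for this example.

```python
from collections import Counter

# Hypothetical mini "library": each entry pairs a possible answer with a
# sentence that supports it. Watson's real sources were far larger.
PASSAGES = [
    ("Mars", "Mars is called the red planet because of its rusty soil"),
    ("Mars", "The red planet Mars has two small moons"),
    ("Venus", "Venus is the hottest planet in the solar system"),
]

# Very common words that carry little meaning (an assumed, tiny list).
STOPWORDS = {"the", "is", "as", "a", "of", "in", "its", "which", "has", "because"}

def keywords(text):
    """Split text into lowercase words and drop punctuation and stopwords."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}

def rank_answers(clue):
    """Score each candidate by how many clue keywords its passages share."""
    clue_words = keywords(clue)
    scores = Counter()
    for candidate, passage in PASSAGES:
        # "Proof" here is simply the number of overlapping keywords.
        scores[candidate] += len(clue_words & keywords(passage))
    # Best-supported answers first; candidates with no support are dropped.
    return [(c, s) for c, s in scores.most_common() if s > 0]

print(rank_answers("Which planet is known as the red planet?"))
# prints [('Mars', 4), ('Venus', 1)]
```

Counting shared words is a very rough stand-in for the many language methods Watson combined, but it shows the overall shape: gather evidence for each candidate, then sort by how strong the evidence is.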

Over the years, Watson has been updated. It is now available through IBM Cloud, and it uses improved machine learning and faster computer parts. This makes it easier for developers and researchers to use Watson's abilities.

Watson's Software

Watson runs on IBM's DeepQA software. It also uses a framework called Apache UIMA, which helps manage unstructured information. The system's programs are written in languages like Java, C++, and Prolog. It runs on the SUSE Linux Enterprise Server 11 operating system and uses Apache Hadoop to spread its computing tasks across many machines.

Besides the DeepQA system, Watson has other smart parts. For example, one part figures out how much to bet in Final Jeopardy!. It bases this on how sure Watson is about the answer and the scores of the other players. Another part uses a math rule called Bayes' theorem to estimate how likely each hidden clue is to be the Daily Double. This helps Watson decide which clue to pick next.
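The Daily Double idea can be illustrated with a small Bayes' theorem update. The sketch below is an assumption-heavy toy, not Watson's actual module: the starting probabilities in `prior` are made up, and the board is reduced to a single column with exactly one hidden Daily Double.

```python
def update_beliefs(prior, opened_cell):
    """Return new Daily Double probabilities after one clue is opened.

    Bayes' theorem: P(cell | evidence) = P(evidence | cell) * P(cell) / P(evidence).
    The evidence here is "opened_cell turned out NOT to be the Daily Double".
    """
    # Likelihood of the evidence: 0 if the Daily Double was in the opened
    # cell (we would have seen it), 1 for every other cell.
    unnormalized = {
        cell: (0.0 if cell == opened_cell else 1.0) * p
        for cell, p in prior.items()
    }
    evidence = sum(unnormalized.values())  # P(evidence)
    return {cell: p / evidence for cell, p in unnormalized.items()}

# Made-up starting guesses for one column of the board (they sum to 1).
prior = {"row 1": 0.05, "row 2": 0.15, "row 3": 0.25, "row 4": 0.35, "row 5": 0.20}

# Row 4 is opened and is an ordinary clue, so the beliefs shift elsewhere.
posterior = update_beliefs(prior, opened_cell="row 4")
best_guess = max(posterior, key=posterior.get)
print(best_guess, round(posterior[best_guess], 3))  # prints: row 3 0.385
```

After the update, the probability that used to belong to row 4 is shared out among the remaining rows in proportion to their earlier values, so the next most likely spot becomes the best place to look.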

Watson's Hardware

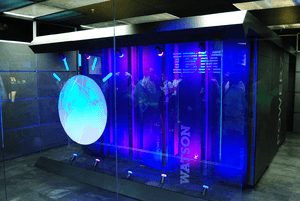

Watson is built to handle a lot of work very quickly. It uses many POWER7 processors working together. This setup helps Watson come up with possible answers, gather lots of evidence, and analyze data. The system uses 90 IBM Power 750 servers. Each server has a 3.5 GHz POWER7 eight-core processor.

In total, Watson uses 2,880 POWER7 processor threads. It also has 16 terabytes of RAM (memory). This allows Watson to process huge amounts of information very fast. For the Jeopardy! game, all the information was stored in Watson's RAM, because reading data from hard drives would have been too slow for the competition.

How Watson Plays Jeopardy!

Watson breaks down questions into keywords and phrases. It then looks for related information. Watson's main strength is its ability to quickly run many language analysis programs at the same time. The more programs that find the same answer, the more confident Watson is that it's correct. Once Watson has a few possible answers, it checks them against its knowledge to see if they make sense.
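The idea that agreement between programs raises confidence can be sketched as a simple vote count. This is a simplified illustration under stated assumptions: the `proposals` list stands in for the outputs of separate analysis programs, and the `threshold` value of 0.5 is hypothetical, not a number IBM published.

```python
from collections import Counter

def confidence_by_agreement(proposals):
    """Turn a list of proposed answers into (answer, confidence) pairs.

    Confidence is the share of programs that proposed that answer.
    """
    if not proposals:
        return []
    votes = Counter(proposals)
    return [(answer, count / len(proposals)) for answer, count in votes.most_common()]

def should_buzz(ranked, threshold=0.5):
    """Buzz only if the top answer's confidence reaches the threshold."""
    return bool(ranked) and ranked[0][1] >= threshold

# Pretend four analysis programs each proposed one answer.
proposals = ["Paris", "Paris", "Lyon", "Paris"]
ranked = confidence_by_agreement(proposals)
print(ranked)               # prints [('Paris', 0.75), ('Lyon', 0.25)]
print(should_buzz(ranked))  # prints True
```

Three of the four pretend programs agree, so the top answer gets a confidence of 0.75. If the programs had all disagreed, no answer would reach the threshold and the sketch would stay silent.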

Watson vs. Human Players

Watson's way of working gives it both advantages and disadvantages against human Jeopardy! players. Watson is very good at finding related terms for clues. However, it sometimes struggles to fully understand the context of a clue. Watson can read, analyze, and learn from human language. This helps it make decisions that seem human-like.

Human players often buzz in faster than Watson, especially for short clues. Watson's programming stops it from buzzing in before it is sure of its answer. But once Watson has an answer, its reaction time on the buzzer is usually faster than humans. It also isn't affected by human players' mind games.

In practice games, human players could use the few seconds that Watson needed to process a clue to get ready. During this time, Watson also had to decide if it was confident enough to buzz. But the electronic parts that control Watson's buzzer are very fast. Once Watson decided to answer, its reaction time was quicker than the human players'. Watson's voice was created from recordings by actor Jeff Woodman.

The Jeopardy! show used different ways to tell Watson and the humans when to buzz. Humans saw a light, which took them a fraction of a second to notice. Watson received an electronic signal. It could activate its buzzer in about eight milliseconds. Humans tried to guess when the light would appear. But their timing was usually not as precise as Watson's.

Watson's History

How Watson Was Developed

After its Deep Blue computer beat chess champion Garry Kasparov in 1997, IBM looked for a new challenge. In 2004, an IBM manager named Charles Lickel saw people watching Jeopardy! intently. Ken Jennings was on his long winning streak. Lickel thought Jeopardy! could be a good challenge for IBM. In 2005, Paul Horn, an IBM executive, supported the idea.

At first, researchers thought playing Jeopardy! would be too hard. It was much more complex than chess. But David Ferrucci took on the challenge. An earlier system, Piquant, only got about 35% of clues right and took minutes to answer. For Jeopardy!, Watson needed to answer in seconds.

In 2006, Watson was tested with 500 old Jeopardy! clues. It only got about 15% correct. The IBM team was given three to five years and 15 people to improve Watson. By 2008, Watson could compete with Jeopardy! champions. By February 2010, Watson was regularly beating human contestants.

During the game, Watson had access to 200 million pages of information. This included the full text of the 2011 edition of Wikipedia. But it was not connected to the internet. For each clue, Watson showed its three most likely answers on screen. Watson was better at buzzing in than its human opponents. But it struggled with short clues that had only a few words.

Many universities and students helped IBM develop Watson. These included Rensselaer Polytechnic Institute, Carnegie Mellon University, and Massachusetts Institute of Technology.

Watson on Jeopardy!

Getting Ready for the Show

In 2008, IBM talked to Jeopardy! producer Harry Friedman. They discussed Watson playing against Ken Jennings and Brad Rutter. The show's producers agreed. There were some disagreements between IBM and Jeopardy! staff. IBM worried the writers might make clues that tricked Watson. To solve this, a third party chose clues randomly from old, unaired shows.

Jeopardy! staff also worried about Watson's buzzing speed. Watson originally signaled electronically. But the show asked it to physically press a button, like humans. Even with a robotic "finger," Watson was still faster. Ken Jennings said Watson could buzz in "every single time with little or no variation."

IBM built a practice set to look like the Jeopardy! stage. They held about 100 test games. Watson won 65% of these games.

For the TV show, Watson was shown as a glowing blue globe. This was inspired by IBM's "smarter planet" symbol. The globe had "threads" of thought. Ken Jennings noted there were 42 threads, a joke referencing the number 42 from The Hitchhiker's Guide to the Galaxy.

A practice match was filmed on January 13, 2011. The official matches were filmed on January 14, 2011. Everyone kept the results secret until the show aired in February.

Practice Match

In a practice match for the press on January 13, 2011, Watson won a 15-question round. Watson scored $4,400. Ken Jennings had $3,400, and Brad Rutter had $1,200. Jennings and Watson were tied before the last question. No one answered incorrectly in this practice round.

First Official Match

The first part of the match aired on February 14, 2011. The second part aired on February 15, 2011. Brad Rutter won the draw to choose the first category. Watson answered the second clue correctly. It then chose the fourth clue in the first category. This was a plan to find the Daily Double quickly. Watson's guess for the Daily Double was correct. At the end of the first round, Watson and Rutter were tied with $5,000. Jennings had $2,000.

Watson had some interesting moments. Once, it repeated an incorrect answer that Jennings had given. Watson didn't know Jennings had already said it. In another case, Watson answered "What is a leg?" for a clue about George Eyser. Jennings had incorrectly said "What is: he only had one hand?" Watson's answer was first accepted, but then changed to incorrect. Watson also made complex bets on the Daily Doubles.

Watson took a big lead in Double Jeopardy! It answered both Daily Doubles correctly.

However, in the Final Jeopardy! round, Watson was the only one to miss the clue. The category was U.S. Cities. The clue was about airports named after a World War II hero and a World War II battle. Rutter and Jennings correctly answered Chicago. Watson's answer was "What is Toronto?????" The question marks showed it wasn't confident. Experts later explained that Watson might not have fully understood the clue. Watson's second choice was Chicago, but both Toronto and Chicago were low confidence answers. Watson only bet $947 on this question.

The game ended with Jennings at $4,800, Rutter at $10,400, and Watson with $35,734.

Second Official Match

During the introduction, the host, Alex Trebek, joked about Watson's Toronto answer. An IBM engineer even wore a Toronto Blue Jays jacket to the second match.

In the first round, Jennings finally found a Daily Double. Later, in the Double Jeopardy! round, Watson answered a Daily Double incorrectly for the first time. After the first round, Watson was briefly in second place. But the final result was a victory for Watson. Watson scored $77,147. Jennings scored $24,000, and Rutter scored $21,600.

Final Results

Watson won first place and $1 million. Ken Jennings won second place and $300,000. Brad Rutter won third place and $200,000. IBM donated all of Watson's winnings to charity. Half went to World Vision and half to World Community Grid. Jennings and Rutter also donated half of their winnings to their chosen charities.

After the match, Ken Jennings wrote an article. He joked that he and Brad Rutter were the first "knowledge-industry workers" to lose their jobs to thinking machines. He suggested that Watson-like software could automate jobs in areas like medical diagnosis and tech support.

Match Against US Congress Members

On February 28, 2011, Watson played an exhibition match against members of the United States House of Representatives. In the first round, Rush D. Holt, Jr. (a former Jeopardy! contestant) was leading. However, when all matches were combined, Watson won with $40,300. The congressional players combined scored $30,000.

IBM said that the technology behind Watson was a big step forward in computing. They believed it could help governments make better decisions and serve citizens.

What Watson Was Used For

After its success on Jeopardy!, IBM tried to use Watson in many different areas. These included education, weather forecasting, and helping with cancer treatment.

From 2012 through the late 2010s, Watson's technology was used to create applications. Many of these projects were later stopped. Watson was used to help with:

- Diagnosing cancer and planning treatments.

- Helping with retail shopping.

- Buying medical equipment.

- Creating cooking recipes.

- Saving water.

- Managing hotels.

- Analyzing human genes.

- Developing and identifying music.

- Forecasting weather.

- Selling ads with weather forecasts.

- Tutoring students.

- Preparing taxes.

In 2021, a reporter from The New York Times explained that IBM's early focus on very big and difficult projects caused problems.

"The company’s missteps with Watson began with its early emphasis on big and difficult initiatives intended to generate both acclaim and sizable revenue for the company, according to many of the more than a dozen current and former IBM managers and scientists interviewed for this article. Several of those people asked not to be named because they had not been authorized to speak or still had business ties to IBM."

In 2023, Mac Schwerin wrote in The Atlantic that IBM's leaders didn't fully understand the technology.

"But the suits in charge went after the bigger and more technically challenging game of feeding the machine entirely different types of material. They viewed Watson as a generational meal ticket."

In the end, IBM's big plans for Watson didn't quite happen as expected. Watson was very good at specific tasks, like understanding language for trivia games. But it was harder to use it for general business problems. The way Watson was marketed also made people expect too much from it. This led to challenges for Watson in the business world.

Between 2019 and 2023, IBM started focusing on a new project called Watsonx. This is different from the original Watson. It aims for more specific, industry-focused technology within IBM's cloud computing plans.

Watson in Healthcare

IBM's Watson was used to look at large amounts of medical data. It helped doctors with diagnoses and cancer treatment plans. When a doctor asked Watson a question, the system would analyze the patient's information. It would then compare this data with many sources. These sources included treatment guidelines, patient records, and research papers. Finally, Watson would suggest possible treatment options.

IBM said Watson could use a wide range of information. However, company leaders later said that not enough data was available.

Watson was not involved in actually diagnosing patients. Instead, it helped doctors choose the best treatment options for patients who already had a diagnosis. One study looked at 1,000 difficult patient cases. Watson's recommendations matched those of human doctors in 99% of cases.

IBM worked with hospitals like the Cleveland Clinic and Memorial Sloan Kettering Cancer Center. In 2011, IBM partnered with WellPoint (later renamed Anthem, and now called Elevance Health). This partnership used Watson to suggest treatment options to doctors. In 2013, Watson was first used commercially for lung cancer treatment decisions. The Cleveland Clinic partnership aimed to improve Watson's medical knowledge. However, a program at the MD Anderson Cancer Center, started in 2013, did not meet its goals. It was stopped after $65 million was invested.

In 2016, IBM launched "IBM Watson for Oncology." This product gave doctors and patients personalized cancer care options. IBM also worked with Manipal Hospitals in India to offer Watson's help online.

IBM faced challenges in the healthcare market. In 2022, IBM announced it was selling its Watson Health unit. This showed a big change in IBM's approach to healthcare.

IBM Watson Group

On January 9, 2014, IBM announced a new business unit for Watson. This unit, called IBM Watson Group, was based in New York City. It employed 2,000 people. IBM invested $1 billion to start this division. The Watson Group developed new cloud-based services. These included Watson Discovery Advisor, Watson Engagement Advisor, and Watson Explorer.

Watson Discovery Advisor focused on research in areas like medicine and publishing. Watson Engagement Advisor helped with self-service applications. Watson Explorer helped users find and share data insights. IBM also started a $100 million fund to encourage new applications for "cognitive" systems. IBM said the cloud-based Watson was 24 times faster than before. Its physical size also shrank by 90%.

In 2017, IBM and MIT started a new research project in artificial intelligence. IBM invested $240 million to create the MIT–IBM Watson AI Lab. This lab brings together researchers to advance AI. Their projects include computer vision and understanding language. They also work on making sure AI systems are fair and secure.

See also

In Spanish: Watson (inteligencia artificial) para niños

- Artificial intelligence

- Blue Gene

- IBM Watsonx

- Commonsense knowledge (artificial intelligence)

- Glossary of artificial intelligence

- Artificial general intelligence

- Tech companies in the New York metropolitan area

- Wolfram Alpha