Maximum likelihood estimation facts for kids

In statistics, Maximum Likelihood Estimation (often called MLE) is a smart way to figure out the best settings (called parameters) for a probability distribution model. You use this method when you have some data you've observed, and you want to find the model parameters that make your observed data look the most likely.

Imagine you have a set of data, like the heights of students in a class. You might think these heights follow a certain pattern, like a Normal distribution (a bell curve). MLE helps you find the exact average height and how spread out the heights are (the variance) that best fit your actual student height data.

This method is popular because it's both easy to understand and very flexible. It's a powerful tool in statistical inference, which is all about making educated guesses and predictions from data. If the likelihood function is smooth enough (differentiable), you can often use calculus to find the best values exactly.


What is Maximum Likelihood Estimation?

Maximum Likelihood Estimation helps us find the best "settings" for a statistical model. These settings are called parameters. The goal is to make the data we observed seem as likely as possible, given our chosen model.

How it Works

Imagine you have some observations, like the results of an experiment. We think these observations come from a certain type of probability distribution. This distribution has some unknown parameters. MLE's job is to find the values for these parameters that make your observed data most probable.

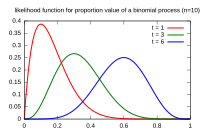

We write these parameters as a group, like a list of numbers. The "likelihood function" is a special formula that tells us how likely our observed data is for different sets of parameter values.

- The Goal: Find the parameter values that make the likelihood function as big as possible.

- The Estimate: The specific parameter values that maximize this function are called the "maximum likelihood estimate." It's like finding the sweet spot where your model best explains your data.
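As a small sketch, here is a likelihood function for a coin written in Python. The flip sequence `HHTHH` and the function name `likelihood` are made up for illustration. Each flip contributes `p` if it was heads and `1 - p` if it was tails, and the likelihood is the product of those numbers.

```python
def likelihood(flips, p):
    """Probability of seeing this exact sequence of flips if P(heads) = p."""
    result = 1.0
    for flip in flips:
        # Heads contributes p, tails contributes (1 - p).
        result *= p if flip == "H" else 1 - p
    return result


flips = "HHTHH"  # hypothetical data: 4 heads, 1 tail
for p in (0.2, 0.5, 0.8):
    print(p, likelihood(flips, p))
```

Of the three values tried, `p = 0.8` gives the largest likelihood, which matches the 4 heads out of 5 flips in the data.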

Using Logarithms to Simplify

Sometimes, the likelihood function can be complicated to work with directly. So, statisticians often use the natural logarithm of the likelihood function. This is called the "log-likelihood."

Why use the logarithm? Because the logarithm is a "monotonically increasing" function. This means that if one positive number is bigger than another, its logarithm will also be bigger. So, finding the maximum of the log-likelihood gives you the exact same parameter values as finding the maximum of the original likelihood function. It also turns a product of many probabilities into a simple sum, which makes the math easier!
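A minimal Python sketch of this idea, using the 49 heads and 31 tails from the coin example later in this article: it tries many values of `p` and checks that the likelihood and the log-likelihood pick the same winner. The grid of candidate values is an illustrative choice.

```python
import math

heads, tails = 49, 31  # coin data from the example later in this article


def likelihood(p):
    # The constant "number of ways" factor is left out; it does not move the peak.
    return p**heads * (1 - p) ** tails


def log_likelihood(p):
    # Logs turn the product above into a sum.
    return heads * math.log(p) + tails * math.log(1 - p)


grid = [i / 10000 for i in range(1, 10000)]  # candidate p values 0.0001 ... 0.9999
best_by_likelihood = max(grid, key=likelihood)
best_by_log = max(grid, key=log_likelihood)
print(best_by_likelihood, best_by_log)  # both searches pick the same p
```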

If the log-likelihood function is smooth, you can use calculus (finding derivatives and setting them to zero) to find the best parameter values. For some models, you can solve these equations directly. But often, you need to use computer programs to find the answer step-by-step.
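For the coin example later in this article, with h heads out of n tosses, the calculus steps look like this (a standard textbook derivation, written as a short sketch):

$$
\begin{aligned}
\ell(p) &= \log L(p) = \log\binom{n}{h} + h\log p + (n-h)\log(1-p) \\
\frac{d\ell}{dp} &= \frac{h}{p} - \frac{n-h}{1-p} = 0 \\
h(1-p) &= (n-h)\,p \quad\Longrightarrow\quad \hat{p} = \frac{h}{n}
\end{aligned}
$$

The second derivative, $-h/p^2 - (n-h)/(1-p)^2$, is negative for every $p$ between 0 and 1, so this point is a peak rather than a dip. With h = 49 and n = 80 it gives 49/80.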

Important Features of MLE

Maximum Likelihood Estimators have several cool features, especially when you have a lot of data.

Consistency

Consistency means that if you collect more and more data, your MLE estimate will get closer and closer to the true, unknown parameter value. It's like aiming at a target: the more shots you take, the more likely your average shot will be right in the middle.

- What it means: If your data truly comes from the model you're using, and you have enough observations, MLE can find the true parameter value very accurately.

- In simple terms: The more data you have, the more reliable your MLE results become.
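A small simulation can make consistency concrete. This is a hedged sketch: the "true" value 0.3, the seed, and the helper `coin_mle` are made up for illustration, and the estimate used is the heads-divided-by-tosses formula from the coin example later in this article.

```python
import random


def coin_mle(num_tosses, true_p, rng):
    """Simulate tosses of a coin with P(heads) = true_p and return the MLE heads / tosses."""
    heads = sum(rng.random() < true_p for _ in range(num_tosses))
    return heads / num_tosses


rng = random.Random(42)  # fixed seed so the run is repeatable
true_p = 0.3             # hypothetical "true" value we pretend not to know
for n in (10, 100, 1_000, 100_000):
    estimate = coin_mle(n, true_p, rng)
    print(f"n={n:>6}: estimate={estimate:.4f}, error={abs(estimate - true_p):.4f}")
```

The error usually shrinks as n grows, though any single run can wobble a little.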

Functional Invariance

This is a neat property! If you find the MLE for a parameter, and then you want to know the MLE for a transformation of that parameter (like if you found the MLE for the average height in inches, and now you want it in centimeters), you just apply the same transformation to your MLE.

- Example: If you find the best estimate for `p` (probability of heads for a coin), and you want the best estimate for `1-p` (probability of tails), you just take `1 - (your best p estimate)`. It's that simple!
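A quick check of this property, sketched in Python with the coin data from the example later in this article (49 heads, 31 tails): maximize the likelihood once in terms of `p` and once in terms of `q = 1 - p`, then compare. The function names are illustrative.

```python
import math

heads, tails = 49, 31  # coin data from the example later in this article


def log_lik_p(p):
    return heads * math.log(p) + tails * math.log(1 - p)


def log_lik_q(q):
    # Same data, written in terms of q = P(tails) = 1 - p.
    return log_lik_p(1 - q)


grid = [i / 10000 for i in range(1, 10000)]
p_hat = max(grid, key=log_lik_p)
q_hat = max(grid, key=log_lik_q)
print(p_hat, q_hat, 1 - p_hat)  # q_hat matches 1 - p_hat
```

Both searches agree: the best `q` is 1 minus the best `p`.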

Efficiency

When you have a very large amount of data, MLEs are "efficient." This means they are among the best possible estimators: as the amount of data grows, no other well-behaved consistent estimator achieves a lower mean squared error (a measure of how far off your estimates are on average).

- Simply put: With lots of data, MLE gives you the most precise estimate possible.
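As a hedged illustration (not a proof), this simulation compares the MLE of a Normal distribution's mean, which is the sample mean, with another consistent estimator, the sample median. The sample size, number of trials, and seed are arbitrary choices.

```python
import random
import statistics

rng = random.Random(1)  # fixed seed so the run is repeatable
n, trials = 101, 2000   # hypothetical sample size and number of repeated experiments
true_mean = 0.0

mean_estimates, median_estimates = [], []
for _ in range(trials):
    sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
    mean_estimates.append(statistics.fmean(sample))     # MLE of the mean for Normal data
    median_estimates.append(statistics.median(sample))  # another consistent estimator

# Mean squared error: average squared distance from the true value.
mse_mean = statistics.fmean([(e - true_mean) ** 2 for e in mean_estimates])
mse_median = statistics.fmean([(e - true_mean) ** 2 for e in median_estimates])
print(f"MSE of sample mean (MLE): {mse_mean:.5f}")
print(f"MSE of sample median:     {mse_median:.5f}")
```

For Normal data and large samples, the median's mean squared error is roughly π/2 (about 1.57) times that of the mean.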

Connection to Bayesian Inference

MLE is closely related to another statistical method called Maximum a posteriori (MAP) estimation, which is part of Bayesian inference. If you assume that all possible parameter values are equally likely before you see any data (this is called a "uniform prior distribution"), then the MLE is exactly the same as the MAP estimate.
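A minimal sketch of this connection, again with 49 heads and 31 tails: the MAP estimate maximizes likelihood × prior. With a flat prior it matches the MLE exactly. The second prior, proportional to p(1 − p), is a hypothetical choice that favours values near 1/2, so it pulls the estimate slightly toward 1/2.

```python
import math

heads, tails = 49, 31
grid = [i / 10000 for i in range(1, 10000)]


def log_lik(p):
    return heads * math.log(p) + tails * math.log(1 - p)


def uniform_log_prior(p):
    return 0.0  # flat prior: every p equally likely


def fair_leaning_log_prior(p):
    return math.log(p) + math.log(1 - p)  # hypothetical prior proportional to p(1 - p)


def log_posterior(p, log_prior):
    # Bayes' rule up to a constant: posterior is proportional to likelihood × prior.
    return log_lik(p) + log_prior(p)


mle = max(grid, key=log_lik)
map_uniform = max(grid, key=lambda p: log_posterior(p, uniform_log_prior))
map_fair = max(grid, key=lambda p: log_posterior(p, fair_leaning_log_prior))
print(mle, map_uniform, map_fair)
```

With the second prior, the peak moves from 49/80 (0.6125) to 50/82 (about 0.6098).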

Examples of MLE in Action

Biased Coin Example

Let's say you have a coin, and you want to know if it's fair or "biased" (meaning it lands on heads more or less often than tails). You want to find the probability `p` of getting a head.

You toss the coin 80 times and get 49 heads and 31 tails.

- Scenario 1: Finite Choices

Imagine you know the coin must be one of three types:

* Coin A: `p` = 1/3 (heads 1 out of 3 times)
* Coin B: `p` = 1/2 (heads 1 out of 2 times, a fair coin)
* Coin C: `p` = 2/3 (heads 2 out of 3 times)

You calculate how likely your 49 heads and 31 tails would be for each coin:

* For Coin A (`p`=1/3): Very unlikely (probability close to 0)
* For Coin B (`p`=1/2): Unlikely (probability around 0.012)
* For Coin C (`p`=2/3): Most likely (probability around 0.054)

Since Coin C makes your observed results most probable, the MLE for `p` is 2/3.

- Scenario 2: Any Probability is Possible

Now, what if `p` could be any value between 0 and 1? You use a formula that tells you the likelihood of getting 49 heads in 80 tosses for any `p`. By using calculus (or by trying many values), you find that the likelihood is highest when `p` is exactly 49/80.

So, the Maximum Likelihood Estimate for the probability of heads is 49/80. This makes sense: it's simply the number of heads divided by the total number of tosses!
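Both scenarios can be checked with a short Python sketch. The function name `binomial_likelihood` and the grid of candidate values are illustrative choices, not part of the original example. The formula is the standard binomial probability of getting exactly 49 heads in 80 tosses.

```python
import math

heads, tosses = 49, 80


def binomial_likelihood(p):
    """P(exactly `heads` heads in `tosses` tosses | P(heads) = p)."""
    return math.comb(tosses, heads) * p**heads * (1 - p) ** (tosses - heads)


# Scenario 1: only three candidate coins are allowed.
coins = {"A": 1 / 3, "B": 1 / 2, "C": 2 / 3}
for name, p in coins.items():
    print(f"Coin {name} (p={p:.3f}): likelihood={binomial_likelihood(p):.3f}")
best_coin = max(coins, key=lambda name: binomial_likelihood(coins[name]))
print("MLE among the three coins:", best_coin)

# Scenario 2: any p between 0 and 1 is allowed; try many values on a fine grid.
grid = [i / 10000 for i in range(1, 10000)]
p_hat = max(grid, key=binomial_likelihood)
print("grid-search MLE:", p_hat, "heads / tosses:", heads / tosses)
```

The printed likelihoods should round to about 0.000, 0.012 and 0.054, and the grid search lands on 0.6125, which is 49/80.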

Normal Distribution Example

Let's say you have a list of numbers, like the heights of students. You think these heights follow a Normal distribution (a bell curve). This distribution has two parameters:

- `μ` (mu): The average height.

- `σ²` (sigma squared): How spread out the heights are (the variance).

MLE helps you find the best `μ` and `σ²` values for your data.

1. Finding the Average (μ): The MLE for the average height `μ` turns out to be the simple sample mean of your data (just add all heights and divide by the number of students). This estimate is "unbiased," meaning it's correct on average.

2. Finding the Spread (σ²): The MLE for the variance `σ²` is the average of the squared distances of each data point from the sample mean (you divide by the number of students, n). Interestingly, this estimate is slightly "biased": on average it comes out a little too small, by a factor of (n − 1)/n. That is why people often divide by n − 1 instead. The difference matters most for small datasets; for large datasets the MLE becomes very accurate.
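A minimal sketch of both formulas in Python, using five made-up heights (hypothetical data, in centimetres). Python's built-in `statistics.pvariance` divides by n, like the MLE, while `statistics.variance` divides by n − 1.

```python
import statistics

heights = [150.0, 155.0, 160.0, 165.0, 170.0]  # hypothetical heights in cm
n = len(heights)

mu_hat = sum(heights) / n  # MLE of the mean: the sample mean
squared_gaps = [(x - mu_hat) ** 2 for x in heights]
sigma2_hat = sum(squared_gaps) / n  # MLE of the variance: divide by n
sigma2_unbiased = sum(squared_gaps) / (n - 1)  # usual correction: divide by n - 1

print(mu_hat, sigma2_hat, sigma2_unbiased)
print(statistics.pvariance(heights), statistics.variance(heights))  # same two variances
```

For this data the MLE variance is 50 while the n − 1 version is 62.5, showing the MLE coming out a little smaller.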

When Computers Help

Sometimes, the equations to find the maximum likelihood estimates are too complex to solve by hand. In these cases, we use computers and special "iterative procedures." These procedures start with an educated guess for the parameters and then slowly adjust them, step by step, to get closer and closer to the true maximum likelihood values.

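- Gradient Descent: This method uses the "slope" of the likelihood function to figure out which way to move. To reach the peak, you step uphill (this is called gradient ascent). Equivalently, you can step downhill on the negative log-likelihood, which is where the name "gradient descent" comes from.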

- Newton-Raphson Method: This is a more advanced method that uses not just the slope but also how the slope is changing (the curve of the function) to find the peak faster.

These computer methods are super helpful for complex models, but it's always good to double-check that the computer found the actual maximum and not just a small bump on the way.
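Here is a minimal sketch of both methods for the coin example above (49 heads, 31 tails). The step size, starting guess, and number of iterations are arbitrary choices made for illustration, and the helper names `score` and `curvature` are hypothetical.

```python
heads, tails = 49, 31  # coin data from the example above


def score(p):
    """First derivative (slope) of the log-likelihood heads*log(p) + tails*log(1-p)."""
    return heads / p - tails / (1 - p)


def curvature(p):
    """Second derivative: how the slope itself is changing."""
    return -heads / p**2 - tails / (1 - p) ** 2


def gradient_ascent(p, step_size=0.001, iterations=500):
    # Climb uphill: move p a small step in the direction of the slope.
    for _ in range(iterations):
        p += step_size * score(p)
    return p


def newton_raphson(p, iterations=20):
    # Jump to the peak of the parabola that matches the slope and curvature at p.
    for _ in range(iterations):
        p -= score(p) / curvature(p)
    return p


print(gradient_ascent(0.5), newton_raphson(0.5), heads / (heads + tails))
```

Both should end up at 0.6125 (49/80). Newton-Raphson gets there in far fewer steps here, but it needs the second derivative and can jump to a bad place if the starting guess is far from the peak. Checking that `curvature` is negative at the answer is one way to confirm you found a peak rather than a dip.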

History of MLE

The idea behind Maximum Likelihood Estimation has been around for a long time, with early users like Carl Friedrich Gauss and Pierre-Simon Laplace. However, it became widely known and used thanks to Ronald Fisher between 1912 and 1922. He strongly recommended it and studied its properties in detail.

Later, in 1938, Samuel S. Wilks provided a mathematical proof (now called Wilks' theorem) that showed how reliable MLE is, especially with large amounts of data. This theorem helps statisticians understand how accurate their estimates are and create "confidence regions" around them, showing a range where the true parameter values are likely to be.

See also

In Spanish: Máxima verosimilitud para niños


- Akaike information criterion: A way to compare different statistical models.

- Fisher information: Helps understand how much information your data gives you about the parameters.

- Mean squared error: A way to measure how good an estimate is.
