Thread (computing) facts for kids

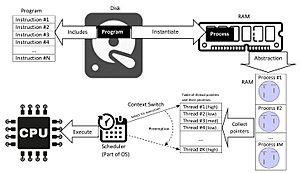

In computer science, a thread (short for "thread of execution") is the smallest list of instructions that a computer can manage on its own. Think of it as a small, independent task within a bigger program. A part of the operating system called the scheduler decides when each thread gets to run.

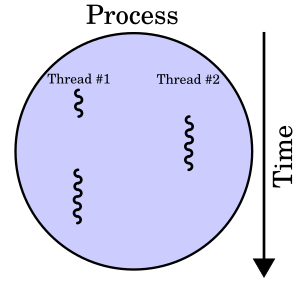

Threads are often part of a larger process. Imagine a process as a big project, and threads are the smaller tasks needed to complete that project. Multiple threads in one process can work at the same time. They share resources like computer memory. Different processes, however, usually do not share these resources.

What Are Threads?

Threads are a way for a computer program to do several things at once. This is called multithreading. It helps programs run faster and smoother. For example, a video game might use one thread to draw the graphics. Another thread could handle player controls. A third thread might manage the game's sound.

How Threads Work

When a program starts, it usually has at least one thread. This is the main thread. Other threads can be created as needed. These threads can run at the same time. They share the program's code and its data. This makes it easy for them to work together.
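The short Python sketch below shows this idea using Python's standard `threading` module. It is only an illustration, and the names `greet` and `results` are made up for this example. The main thread starts two more threads, and all of them can see the same `results` list because they share the program's data.

```python
import threading

# Hypothetical example names. This list lives in the program's shared memory,
# so every thread can read and change it.
results = []

def greet(name):
    # This function runs inside a new thread.
    results.append(f"hello from {name}")

# The main thread creates two more threads...
workers = [threading.Thread(target=greet, args=(f"worker {i}",)) for i in range(2)]
for t in workers:
    t.start()   # ...starts them running...
for t in workers:
    t.join()    # ...and waits until both are finished.

print(threading.current_thread().name)  # MainThread
# The threads may finish in either order, so sort before printing.
print(sorted(results))  # ['hello from worker 0', 'hello from worker 1']
```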

History of Threads

Threads first appeared in 1967 in IBM's OS/360 system, where they were called "tasks." The idea of threads became much more popular in the early 2000s, when computers started having multiple processor cores. To use these multiple cores well, programs needed to run tasks at the same time, and threads became a great way to do this.

Understanding Related Concepts

To really get what threads are, it helps to know about some other computer terms. These include processes, kernel threads, user threads, and fibers.

What is a Process?

A process is a running program. It's like a complete, independent job for the computer. Each process has its own dedicated resources. These resources include its own memory space and file access. Processes are "heavyweight." This means creating, stopping, or switching between them takes a lot of computer power. They are kept separate from each other. This separation helps keep programs stable. If one process crashes, it usually doesn't affect others.

What is a Kernel Thread?

A kernel thread is a basic unit of work that the computer's operating system (the kernel) knows about. Every process has at least one kernel thread. If a process has many kernel threads, they all share the same memory and files. Kernel threads are "lightweight" compared to processes. They are cheaper to create and switch between. Each one only needs its own small memory area (called a stack), a saved copy of the processor's registers (including the program counter, which marks the next instruction to run), and sometimes a little private storage called thread-local storage.

What is a User Thread?

User threads are managed by the program itself, not directly by the operating system. Think of them as "mini-threads" within a kernel thread. The operating system doesn't even know they exist. Switching between user threads is very fast. This is because the computer doesn't need to ask the operating system for help.

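However, if a user thread needs to wait for something, like reading a file, the whole process might stop. This is because the operating system only sees the kernel thread underneath, so when that kernel thread waits, every user thread on it waits too. A common solution is for the threading library to use non-blocking input/output behind the scenes, so only the user thread that is waiting pauses while the others keep running.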
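Python's `asyncio` tasks are not exactly user threads, but they behave in a similar way, so this sketch borrows them as a stand-in (an assumption made for illustration). All the tasks share one kernel thread and take turns without asking the operating system. The made-up function `slow_reader` waits with the non-blocking `asyncio.sleep`, so the other task keeps running while it waits.

```python
import asyncio

# Hypothetical example: asyncio tasks stand in for user threads.
# Both tasks run on the same single kernel thread.
log = []

async def slow_reader():
    log.append("reader: start waiting")
    # Non-blocking wait: the event loop runs other tasks in the meantime.
    await asyncio.sleep(0.1)
    log.append("reader: done")

async def busy_worker():
    for step in range(3):
        log.append(f"worker: step {step}")
        await asyncio.sleep(0)  # give control back so others can take a turn

async def main():
    await asyncio.gather(slow_reader(), busy_worker())

asyncio.run(main())
print(log)
```

If `slow_reader` used the blocking `time.sleep` instead, the single kernel thread would stop, and `busy_worker` could not take a single step until the wait was over.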

What are Fibers?

Fibers are even lighter than user threads. They take turns cooperatively: a running fiber must "yield," or give up control, before another fiber can run. Because of this, fibers are much simpler to build than kernel or user threads. Fibers can be scheduled to run in any thread in the same process, which lets a program manage its own tasks very efficiently.
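Python has no built-in fibers, so this sketch uses generators as a stand-in (an assumption, not a real fiber library). Each `yield` is the moment a fiber gives up control, and the hypothetical `run_fibers` function is a tiny scheduler that lets the fibers take turns.

```python
from collections import deque

# Hypothetical names; generators stand in for fibers.
def fiber(name, steps, log):
    # Do a small piece of work, then yield to hand control back voluntarily.
    for i in range(steps):
        log.append(f"{name}{i}")
        yield

def run_fibers(fibers):
    # A tiny cooperative scheduler: fibers take turns until all are done.
    ready = deque(fibers)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run this fiber until its next yield
            ready.append(current)  # it yielded, so send it to the back of the line
        except StopIteration:
            pass                   # this fiber has finished; drop it

log = []
run_fibers([fiber("A", 3, log), fiber("B", 2, log)])
print(log)  # ['A0', 'B0', 'A1', 'B1', 'A2']
```

Notice that the scheduler never interrupts a fiber. If one fiber looped forever without reaching `yield`, no other fiber would ever get a turn.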

Threads Versus Processes

Threads and processes are both ways to run tasks on a computer. But they have some key differences:

- Processes are usually independent. Threads are parts of a process.

- Processes carry a lot of information. Threads share most of their process's information.

- Processes have separate memory areas. Threads share the same memory area.

- Processes talk to each other using special methods called inter-process communication, such as pipes or network sockets. Threads can easily share data because they are in the same memory.

- Switching between threads in the same process is faster than switching between processes.

Using threads can save computer resources. They also make it easier for different parts of a program to share information. However, because threads share the same memory, one thread that does something illegal (such as using memory it should not touch) can crash the whole process, including all its other threads.

How Threads Are Scheduled

The operating system decides which thread runs and when. This is called scheduling.

Preemptive Versus Cooperative Scheduling

Operating systems schedule threads in two main ways:

- Preemptive scheduling: The operating system decides when to pause one thread and start another. It can interrupt a thread at any time. This gives the operating system fine control.

- Cooperative scheduling: Threads decide when to give up control. They must "cooperate" and let other threads run. If a thread gets stuck or doesn't give up control, other threads might not run.

Single Versus Multi-Processor Systems

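Years ago, most computers had only one main processor, so only one thread could actually run at any moment. The processor switched between threads so quickly (this is called time slicing) that they seemed to run together. Threads were still useful because switching between them was faster than switching between whole programs.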

Today, most computers have multiple processors or "cores." This means many threads can truly run at the same time. Each core can handle a separate thread. This can make programs much faster when their work is split across threads.

Different Threading Models

There are different ways to connect user threads to kernel threads.

1:1 Model (Kernel-Level Threading)

In this model, each user thread you create has its own kernel thread. It's like a direct match. The operating system knows about and manages every single thread. This is simple and common in systems like Windows and Linux.
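In CPython on systems like Windows and Linux, each `threading.Thread` runs on its own operating-system thread, which is the 1:1 model. This sketch checks that by asking each thread for the ID the operating system gave it, using `threading.get_native_id()` (available since Python 3.8). The names `record_id` and `native_ids` are made up for the example. The barrier keeps all four threads alive at the same time, so the operating system cannot reuse an ID from a thread that already finished.

```python
import threading

# Hypothetical example names.
N = 4
barrier = threading.Barrier(N)  # nobody passes until all N threads arrive
native_ids = []
ids_lock = threading.Lock()

def record_id():
    # get_native_id() returns the ID the operating system gave this thread.
    with ids_lock:
        native_ids.append(threading.get_native_id())
    barrier.wait()  # stay alive until every thread has recorded its ID

threads = [threading.Thread(target=record_id) for _ in range(N)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# One kernel thread per Python thread, so all the IDs are different.
print(len(set(native_ids)))  # 4
```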

M:1 Model (User-Level Threading)

Here, many user threads are managed by just one kernel thread. The operating system only sees that one kernel thread. Switching between user threads is super fast because the operating system isn't involved. But if that one kernel thread gets stuck (like waiting for a file), all the user threads linked to it also stop. This model can't use multiple processor cores.

M:N Model (Hybrid Threading)

This model is a mix of the 1:1 and M:1 models. It maps many user threads to a smaller number of kernel threads. It tries to get the best of both worlds. It can use multiple processor cores. It also allows for fast switching between user threads. However, it is more complex to build than either of the other two models.

Single-Threaded Versus Multithreaded Programs

Programs can be designed to use one thread or many threads.

Single-Threaded Programs

A single-threaded program does one thing at a time. It processes commands one after another. If a single-threaded program starts a long task, the whole program might seem to freeze. This is because it can't do anything else until that task is finished.

Multithreaded Programs

Multithreading means a program has multiple threads running at the same time. These threads share the program's resources. They can work independently. This makes programs more responsive. For example, a web browser can load a webpage in one thread. At the same time, it can play an animation in another thread.
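Here is a minimal sketch of the idea, assuming a made-up `long_task` that just sleeps to pretend it is doing slow work, like a download. The long job runs in a second thread, so the main thread keeps looping instead of freezing. In a single-threaded version, the program would be stuck inside `long_task` until it returned.

```python
import threading
import time

# Hypothetical task: sleeping stands in for slow work such as a download.
def long_task(result):
    time.sleep(0.5)
    result["status"] = "download finished"

result = {}
worker = threading.Thread(target=long_task, args=(result,))
worker.start()

# While the worker is busy, the main thread stays free to respond.
ticks = 0
while worker.is_alive():
    ticks += 1       # stands in for redrawing the screen or reading clicks
    time.sleep(0.1)

print(result["status"])  # download finished
print(ticks > 0)         # True
```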

Multithreading is great for using computers with multiple cores. Tasks can be split into smaller parts, and each part can run on a different core. This can make the program run much faster.
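This sketch splits one job (adding up the numbers from 1 to 1000) into four parts, one per thread. The function name `sum_chunk` is made up for the example. Each thread writes its answer into its own slot of `partial_sums`, so no two threads ever change the same piece of data.

```python
import threading

# Hypothetical helper: each thread adds up only its own slice of the list.
def sum_chunk(numbers, start, end, partial_sums, index):
    partial_sums[index] = sum(numbers[start:end])

numbers = list(range(1, 1001))  # 1, 2, ..., 1000
num_threads = 4
chunk = len(numbers) // num_threads
partial_sums = [0] * num_threads

threads = []
for i in range(num_threads):
    start = i * chunk
    # The last thread also takes any leftover items.
    end = len(numbers) if i == num_threads - 1 else start + chunk
    t = threading.Thread(target=sum_chunk, args=(numbers, start, end, partial_sums, i))
    threads.append(t)
    t.start()

for t in threads:
    t.join()

print(sum(partial_sums))  # 500500
```

One caution: in CPython, the global interpreter lock lets only one thread run Python code at a time, so this exact program will not get faster on more cores. The same split-and-combine pattern does run in parallel in languages such as C, C++, or Java, or with Python's `multiprocessing` module.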

Threads and Data Sharing

Threads in the same program share the same memory space. This is good for sharing data easily. But it can also cause problems. Imagine two threads trying to change the same piece of data at the exact same time. This can lead to errors called "race conditions." These bugs are very hard to find and fix.

To prevent this, programmers use special tools called synchronization primitives. These tools, like mutexes, act like locks. They make sure only one thread can access a piece of data at a time. This keeps the data safe and correct.
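A minimal Python sketch of a mutex at work, using `threading.Lock` from the standard library. Four threads each add 1 to a shared counter 10,000 times. The `with counter_lock:` line makes sure only one thread at a time does the read, add, and write steps, so no update gets lost. The names `counter` and `add_many` are made up for this example.

```python
import threading

# Hypothetical example names.
counter = 0
counter_lock = threading.Lock()  # a mutex: only one thread can hold it at a time

def add_many(times):
    global counter
    for _ in range(times):
        # "counter += 1" is really three steps: read, add, write. Without the
        # lock, two threads could read the same old value and lose an update.
        with counter_lock:
            counter += 1

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000
```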

Thread Pools

A common way to use threads is with a thread pool. This is like having a team of workers ready to go. A set number of threads are created when the program starts. When a new task comes in, one of these waiting threads picks it up. Once the task is done, the thread goes back to waiting for another job. This avoids the time and effort of creating and destroying threads for every small task.
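Python's standard library includes a ready-made thread pool, `concurrent.futures.ThreadPoolExecutor`. In this sketch, the task `make_thumbnail` is hypothetical; a real program would resize an image there. Ten tasks are shared among at most three worker threads.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical task: a real program would shrink an image here.
def make_thumbnail(picture):
    return f"small-{picture}"

pictures = [f"photo{i}.png" for i in range(10)]

# At most three worker threads handle all ten tasks, picking up a new
# task each time they finish one. map() returns results in input order.
with ThreadPoolExecutor(max_workers=3) as pool:
    thumbnails = list(pool.map(make_thumbnail, pictures))

print(thumbnails[:2])  # ['small-photo0.png', 'small-photo1.png']
```

One small difference from the description above: Python's pool starts its worker threads as they are needed, up to `max_workers`, instead of creating them all when the program begins. Either way, the threads are reused instead of being created and destroyed for each task.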

Pros and Cons of Multithreaded Programs

Multithreaded programs have some great benefits:

- Responsiveness: Programs can stay active and respond to you. Even if a long task is running in the background, the program won't freeze.

- Parallelization: They can use multiple processor cores. This means tasks can truly run side-by-side, making the program much faster.

However, there are also challenges:

- Complexity: Managing shared data between threads can be tricky. It's easy to create bugs like race conditions or deadlocks. A deadlock is when two threads are waiting for each other, and neither can move forward.

- Testing: Multithreaded programs can be hard to test. Bugs might only show up sometimes, making them hard to find.

- Synchronization Costs: Using locks and other synchronization tools can slow things down. If threads are constantly waiting for each other, the benefits of multithreading can be lost.
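One common way to avoid deadlocks is to make every thread take its locks in the same order. In this sketch (the names `lock_a`, `lock_b`, and `transfer` are made up), both threads always grab `lock_a` before `lock_b`. Whoever holds `lock_b` must already hold `lock_a`, so the two threads can never end up each holding one lock while waiting for the other.

```python
import threading

# Hypothetical example names.
lock_a = threading.Lock()
lock_b = threading.Lock()
log = []

def transfer(name):
    # Every thread takes lock_a first and lock_b second. Because the order
    # never changes, no thread can hold lock_b while waiting for lock_a.
    with lock_a:
        with lock_b:
            log.append(name)

t1 = threading.Thread(target=transfer, args=("first",))
t2 = threading.Thread(target=transfer, args=("second",))
t1.start()
t2.start()
t1.join()
t2.join()

print(sorted(log))  # ['first', 'second']
```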

Programming Language Support

Many programming languages help developers use threads.

- Languages like C and C++ often let programmers use the operating system's direct threading tools. There's a standard called POSIX Threads (Pthreads) that many systems use.

- Higher-level languages like Java and Python make threading easier. They hide the complex details of how threads work on different computer systems.

- Some language implementations, like CPython (the most common version of Python), have a "global interpreter lock" (GIL). This lock lets only one thread run the language's code at a time, even on a computer with many processor cores. This limits how much faster a multithreaded program can get.

See also

In Spanish: Hilo (informática) para niños

- Computer multitasking

- Multi-core (computing)

- Multithreading (computer hardware)

- Thread pool pattern

- Thread safety
