DeepSeek facts for kids

| Native name | 杭州深度求索人工智能基础技术研究有限公司 (Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co., Ltd.) |
|---|---|
| Type | Private |
| Industry | Information technology, Artificial intelligence |
| Founded | 17 July 2023 |
| Founder | Liang Wenfeng |
| Headquarters | Hangzhou, Zhejiang, China |
| Key people | Liang Wenfeng (CEO) |
| Owner | High-Flyer |
| Number of employees | 160 (2025) |

DeepSeek is a Chinese company that creates artificial intelligence (AI) programs. It is known for making large language models (LLMs). These are special computer programs that can understand and create human-like text. DeepSeek is based in Hangzhou, Zhejiang, China. It is owned by a Chinese investment company called High-Flyer.

DeepSeek was started in July 2023 by Liang Wenfeng. He is also the CEO of High-Flyer. In January 2025, DeepSeek launched its own chatbot, also called DeepSeek. This chatbot uses their powerful DeepSeek-R1 model.

DeepSeek says its R1 model performs about as well as other top AI models, such as OpenAI's GPT-4o, but cost much less to make. R1 is built on top of DeepSeek's V3 model, and DeepSeek said it trained V3 for about US$6 million. That is far less than the roughly US$100 million it cost to train OpenAI's GPT-4 in 2023. DeepSeek also used much less computing power. This success surprised many people in the AI world.

DeepSeek shares its models as "open weight." This means the model's weights, the numbers the model learned during training, are freely available for anyone to download. However, there are some rules about how they can be used. The company hires talented AI researchers from top universities. It also hires people from other fields to make its AI models even better.

DeepSeek made its training cheaper by using special methods. It also trained its models at a time when it was hard to get advanced AI chips in China, because the United States had limited chip sales there. It used less powerful chips, and fewer of them. This breakthrough shook up the AI industry. It even affected companies like Nvidia, which makes AI chips.


How DeepSeek Started

From Trading to AI (2016–2023)

In February 2016, Liang Wenfeng helped start High-Flyer. He was interested in AI and had been trading stocks since 2008. High-Flyer began using AI for stock trading in October 2016. By the end of 2017, most of its trading was done by AI.

Liang built High-Flyer as a company that uses AI to trade stocks. By 2021, the company used AI for all its trading. They often used special chips made by Nvidia.

In 2019, High-Flyer built its first big computer system, called Fire-Flyer. It cost about 200 million yuan. This system had 1,100 special computer chips called GPUs. It was used for about 1.5 years.

In 2021, Liang started buying large numbers of Nvidia GPUs for a new AI project. He got 10,000 Nvidia A100 GPUs before the United States limited chip sales to China. Work on a new computer system, Fire-Flyer 2, began in 2021, with a budget of 1 billion yuan.

In 2022, Fire-Flyer 2 was running almost all the time. About 27% of its computing power was used for science projects outside the company.

On April 14, 2023, High-Flyer announced a new lab to research artificial general intelligence (AGI). This lab would work on AI tools not related to trading. About three months later, on July 17, 2023, this lab became a separate company called DeepSeek. High-Flyer was its main investor.

New AI Models (2023–Present)

DeepSeek released its first model, DeepSeek Coder, on November 2, 2023. This was followed by the DeepSeek-LLM series on November 29, 2023. In January 2024, it released DeepSeek-MoE models. In April 2024, it released DeepSeek-Math models.

DeepSeek-V2 came out in May 2024. A month later, the DeepSeek-Coder V2 series was released. In September 2024, DeepSeek-V2.5 was introduced. A preview of DeepSeek-R1-Lite became available in November 2024. In December 2024, DeepSeek-V2.5 was updated, and DeepSeek-V3-Base and DeepSeek-V3 (a chat model) were released.

On January 20, 2025, DeepSeek launched its DeepSeek chatbot. It uses the DeepSeek-R1 model and is free to use on iOS and Android phones. By January 27, DeepSeek had become the most downloaded free app on the iOS App Store in the United States. This contributed to a sharp drop in Nvidia's stock price.

On March 24, 2025, DeepSeek released DeepSeek-V3-0324. On May 28, 2025, it released DeepSeek-R1-0528. This model was noted for sticking more closely to official Chinese government positions in its answers.

How DeepSeek Works

DeepSeek is based in Hangzhou, China. It is owned and funded by High-Flyer. Liang Wenfeng, who founded the company, is its CEO. As of May 2024, Liang owned most of DeepSeek.

Company Goals

DeepSeek says it focuses on research and does not plan to sell products right away. This approach also helps it avoid some of China's rules for AI products that are offered to the public.

DeepSeek hires many new university graduates. They also hire people without computer science degrees. This helps them get different kinds of knowledge for their AI models, like in poetry or advanced math.

DeepSeek's AI Models

DeepSeek's models are "open weight." This means their main parts are shared, but they are not fully open source software.

DeepSeek Coder

DeepSeek Coder is a group of eight models. Four are base models for general use, and four are trained to follow instructions. All of them can handle long pieces of text. DeepSeek's license allows them to be used for "open and responsible" purposes.

These models were trained on a lot of information. This included computer code, English text about code, and Chinese text. They were trained using powerful Nvidia GPUs.

DeepSeek-LLM

The DeepSeek-LLM series came out in November 2023. It has models with different sizes. DeepSeek said these models performed better than other open-source AI models at the time.

These models were similar to the Llama series. They were trained on huge amounts of English and Chinese text from the internet. Chat versions of these models were also released. They were trained to have conversations.

MoE Models

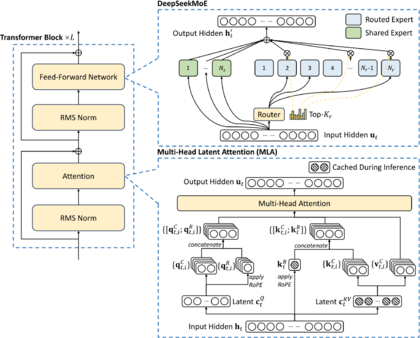

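DeepSeek-MoE models came out in January 2024. They use a method called "mixture of experts" (MoE). The model is split into many smaller parts called "experts," and only a few of them are used for each word. This helps the model work well while using less computing power.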
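Here is a small sketch of the "mixture of experts" idea, written in Python. A "router" gives every expert a score for the input, only the top few experts (here k = 2) actually run, and their answers are blended together. This is a generic top-k router made up for illustration: the experts, router weights, and function names are hypothetical, and DeepSeek's real design (which adds details such as shared experts) is more complex.

```python
import math


def softmax(values):
    """Turn a list of scores into weights that are positive and sum to 1."""
    biggest = max(values)
    exps = [math.exp(v - biggest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, router_weights, k=2):
    """Send input vector x to only the k best-scoring experts (toy top-k routing).

    experts: list of functions, each mapping a vector to a vector of the same length
    router_weights: one weight vector per expert; an expert's score is a dot product with x
    """
    # 1. The router gives every expert a score for this input.
    scores = [sum(w * xi for w, xi in zip(weights, x)) for weights in router_weights]
    # 2. Keep only the k highest-scoring experts; the others do no work at all.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # 3. Turn the chosen experts' scores into mixing weights.
    gates = softmax([scores[i] for i in chosen])
    # 4. Blend the outputs of the chosen experts.
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Four hypothetical toy "experts" and router weights, made up for illustration.
experts = [
    lambda v: [2 * a for a in v],
    lambda v: [-a for a in v],
    lambda v: [a + 1 for a in v],
    lambda v: [0.0 for _ in v],
]
router_weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, -1.0]]

out, chosen = moe_forward([1.0, 2.0], experts, router_weights, k=2)
print(chosen)  # [1, 2]: only two of the four experts ran
print(out)
```

Because only two of the four experts run here, the model skips half of the expert work for this input. Saving work this way is why MoE models can be very large but still fairly cheap to use.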

Math Models

DeepSeek-Math includes three models: Base, Instruct, and RL. These models were trained to solve math problems. They learned from many math questions and step-by-step solutions.

V2 Models

In May 2024, DeepSeek released the DeepSeek-V2 series. This series includes base models and chatbots. These models were trained on a huge amount of text, including more Chinese than English. They can also understand very long texts.

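The V2 models use new ways of working. They use a special attention method called multi-head latent attention, along with the "mixture of experts" idea. (Attention is how a model decides which earlier words matter most.) The Financial Times reported that these models were cheaper to use than others.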

V3 Models

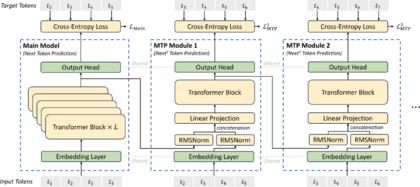

DeepSeek-V3-Base and DeepSeek-V3 (a chat model) use a similar design to V2. They can also predict more than one word at a time, which can help make them faster.
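To see what "predicting more than one word at a time" means, this Python sketch builds toy training examples from a sentence. With depth=1 each example asks for just the next word, as ordinary models do; with depth=2 it asks for the next two words. The function name is hypothetical, real models work with tokens (pieces of words) rather than whole words, and DeepSeek-V3's actual prediction modules are more involved than this.

```python
def multi_token_targets(tokens, depth=2):
    """Pair each beginning of `tokens` with the next `depth` tokens to predict.

    depth=1 is ordinary next-word prediction; depth=2 asks for two words at once.
    """
    examples = []
    # Stop early enough that there are always `depth` words left to predict.
    for i in range(len(tokens) - depth):
        context = tokens[: i + 1]
        targets = tokens[i + 1 : i + 1 + depth]
        examples.append((context, targets))
    return examples


sentence = ["the", "cat", "sat", "on", "the", "mat"]
for context, targets in multi_token_targets(sentence, depth=2):
    print(" ".join(context), "->", targets)
```

Asking for two words at each step gives the model more to learn from every sentence, and the extra guesses can also be used to speed up writing answers.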

These models were trained on even more text, including a lot of math and programming information. They were also trained to be helpful and safe. DeepSeek released its DeepSeek-V3-0324 model on March 24, 2025.

DeepSeek worked hard to make its models run very efficiently. For many calculations, it used a lower-precision number format called FP8, which needs less memory and computing power. It also made sure different parts of the computer system worked together smoothly.

The cost of training DeepSeek-V3 was about US$5.576 million. This figure has been questioned, because it may not include all expenses, such as earlier research and experiments. Tests show that V3 works better than some other models, such as Llama 3.1 and Qwen 2.5, and about as well as top models like GPT-4o and Claude 3.5 Sonnet.
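The US$5.576 million figure comes from simple arithmetic in DeepSeek's V3 technical report: about 2.788 million hours of H800 GPU time, priced at an assumed rental rate of US$2 per GPU hour. The hourly price is DeepSeek's own assumption, not a bill it actually paid.

```latex
2{,}788{,}000\ \text{GPU hours} \times \text{US}\$2\ \text{per GPU hour} = \text{US}\$5{,}576{,}000 \approx \text{US}\$5.6\ \text{million}
```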

R1 Models

In January 2025, DeepSeek released the DeepSeek-R1 model. An earlier preview, DeepSeek-R1-Lite-Preview, came out in November 2024. It was trained for logical thinking, math, and solving problems in real time. DeepSeek said it did better than OpenAI's o1 on some math tests. However, another report said o1 was faster.

DeepSeek-R1 and DeepSeek-R1-Zero are based on the DeepSeek-V3 model. The DeepSeek-R1-Distill models started from other open-weight AI models, such as Llama and Qwen. They were then trained on examples created by R1.

DeepSeek-R1-Zero was trained using only rule-based rewards. This means it was scored by fixed rules, getting points for correct answers and for using the right format, instead of being judged by another AI model. R1-Zero's answers were sometimes hard to read and mixed different languages together. R1 was trained to fix these problems and to improve its reasoning.
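A rule-based reward can be written as a short function. This Python sketch assumes the model puts its thinking between `<think>` and `</think>` tags and then gives a final answer. It gives points for using that format and for an answer that exactly matches the known correct one. The function name and point values are hypothetical, chosen only for illustration.

```python
import re

# Hypothetical point values, chosen only for illustration.
FORMAT_REWARD = 0.5
ACCURACY_REWARD = 1.0

# Thinking inside <think>...</think>, then the final answer after it.
THINK_PATTERN = re.compile(r"\s*<think>.+?</think>\s*(.+)", re.DOTALL)


def rule_based_reward(response, correct_answer):
    """Score a response with fixed rules only: no second AI model acts as judge."""
    reward = 0.0
    match = THINK_PATTERN.fullmatch(response)
    if match:
        # Format rule: the reasoning is inside the tags, and an answer follows.
        reward += FORMAT_REWARD
        answer = match.group(1).strip()
    else:
        answer = response.strip()
    # Accuracy rule: the final answer must exactly match the known correct answer.
    if answer == correct_answer.strip():
        reward += ACCURACY_REWARD
    return reward


print(rule_based_reward("<think>2 + 2 makes 4</think> 4", "4"))  # 1.5
print(rule_based_reward("<think>maybe 5?</think> 5", "4"))  # 0.5
print(rule_based_reward("4", "4"))  # 1.0
```

Rules like these only work for questions with one checkable answer, such as math problems, but they are cheap to compute because no second AI model is needed to grade each answer.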

There were reports that R2, the next version, was planned for May 2025. Instead, R1 was updated on May 28, 2025. As of early July 2025, R2 had not been released.

Why DeepSeek is Important

DeepSeek's success against bigger companies has been called "upending AI." This means it has changed the AI world a lot.

DeepSeek-R1 works about as well as other top AI models like OpenAI's GPT-4o, but it cost much less to make. R1 is built on DeepSeek's V3 model, which DeepSeek said it trained for about US$6 million. This is much less than the US$100 million it cost to train OpenAI's GPT-4 in 2023. Training V3 also used much less computing power than Meta's LLaMA 3.1.

After DeepSeek released its V2 models in May 2024 at very low prices, a "price war" broke out in China's AI industry. DeepSeek was called the "Pinduoduo of AI," after a Chinese shopping app known for its low prices. Other big Chinese tech companies like ByteDance, Tencent, and Alibaba also lowered their AI model prices. Even with its low prices, DeepSeek was making money, unlike some of its rivals.

See also

In Spanish: DeepSeek para niños


- Artificial intelligence industry in China

- List of large language models
