Web crawler facts for kids

A web crawler, also called a spider or spiderbot, is a special computer program. It automatically explores the World Wide Web by following links from one page to another. Think of it like a robot that browses the internet all day, every day.

These crawlers are mostly used by search engines like Google or Bing. Their main job is to find new web pages and updates to existing ones. They copy these pages so the search engine can organize them. This organizing, called web indexing, helps you quickly find what you're looking for when you type something into a search bar.

Each time a crawler visits a page, the website's computer (its server) has to do some work to send that page, so crawlers use up some of every website's resources. Because they visit so many sites, they follow rules to be "polite" and not overload any single website. Websites can also use a special file called `robots.txt` to tell crawlers which parts of their site they should or should not visit.

The internet is huge! Even the biggest crawlers can't index every single page. But they do a great job, which is why you get relevant search results almost instantly today. Besides helping search engines, crawlers can also check if links work or if a website's code is correct. They can even collect specific information from websites, a process called web scraping.


How Web Crawlers Work

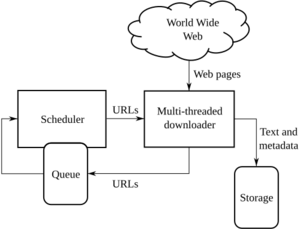

A web crawler starts with a list of web addresses to visit first. These starting addresses are called the seeds.

Exploring the Web

As the crawler visits these seed addresses, it looks for all the hyperlinks on those pages. It then adds these new links to a special list called the crawl frontier. The crawler keeps visiting new links from this list, going deeper and deeper into the web.
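The seed-and-frontier loop described above can be sketched in a few lines of Python. This is a minimal sketch, not how any real search engine does it. So that it runs without the internet, a made-up dictionary called `FAKE_WEB` stands in for real web pages, and all the example.org addresses are hypothetical. The frontier here is first in, first out (a "breadth-first" crawl), which is only one possible choice.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical stand-in for the web: each address maps to that page's HTML.
FAKE_WEB = {
    "https://example.org/": '<a href="/animals.html">Animals</a> <a href="/space.html">Space</a>',
    "https://example.org/animals.html": '<a href="/cats.html">Cats</a>',
    "https://example.org/space.html": '<a href="/">Home</a>',
    "https://example.org/cats.html": "No links here.",
}


class LinkFinder(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, max_pages=100):
    """Visit pages starting from the seeds, oldest frontier entry first."""
    frontier = deque(seeds)  # the crawl frontier: addresses still to visit
    seen = set(seeds)        # every address ever added, so none is queued twice
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = FAKE_WEB.get(url)
        if html is None:     # page does not exist (like a broken link): skip it
            continue
        visited.append(url)
        finder = LinkFinder()
        finder.feed(html)
        for link in finder.links:
            absolute = urljoin(url, link)  # turn "/cats.html" into a full address
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return visited


print(crawl(["https://example.org/"]))
```

The `seen` set is what stops the crawler from going around in circles: the space page links back to the home page, but the home page is never queued a second time.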

If the crawler is saving websites for an archive, it copies and saves the information as it goes. These saved copies are like "snapshots" of the web pages at a certain time. They are stored in a place called a repository. This repository holds the most recent version of each web page the crawler finds.
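A repository that keeps only the most recent copy of each page could look like the short Python sketch below. It is an illustration under simple assumptions: the snapshots live in an in-memory dictionary, and the names `Snapshot` and `Repository` are made up for this example. Real web archives store their copies on disk.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One saved copy of a page and when it was taken."""
    html: str
    fetched_at: float  # time of the visit, e.g. seconds since some start point


class Repository:
    """Keeps only the most recent snapshot of each page address."""

    def __init__(self):
        self._pages = {}

    def save(self, url, html, fetched_at):
        old = self._pages.get(url)
        # Keep the newer copy; an older copy that arrives late never replaces it.
        if old is None or fetched_at >= old.fetched_at:
            self._pages[url] = Snapshot(html, fetched_at)

    def latest(self, url):
        """Return the newest snapshot of a page, or None if it was never saved."""
        return self._pages.get(url)


repo = Repository()
repo.save("https://example.org/", "<p>Version 1</p>", fetched_at=100.0)
repo.save("https://example.org/", "<p>Version 2</p>", fetched_at=200.0)
print(repo.latest("https://example.org/").html)  # <p>Version 2</p>
```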

Dealing with Lots of Pages

There are so many web pages that a crawler can only download a limited number of them in a given amount of time. So, crawlers need to decide which pages are most important to visit next. Web pages also change often: a page might have been updated, or even deleted, since the crawler last visited it.
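One simple way to "decide which pages are most important to visit next" is to keep the frontier as a priority queue, so the page with the highest score always comes out first. This Python sketch uses the standard `heapq` module. The importance numbers are made up, and how a real crawler would calculate them is left open here.

```python
import heapq
import itertools


class PriorityFrontier:
    """A crawl frontier that always hands out the most important page first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # breaks ties in the order pages were added

    def add(self, url, importance):
        # heapq pops the smallest item, so store the importance as a negative number.
        heapq.heappush(self._heap, (-importance, next(self._counter), url))

    def next_url(self):
        _, _, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)


frontier = PriorityFrontier()
frontier.add("https://example.org/rarely-linked.html", importance=1)
frontier.add("https://example.org/popular.html", importance=50)
frontier.add("https://example.org/medium.html", importance=10)
print(frontier.next_url())  # https://example.org/popular.html
```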

Sometimes, websites create many different web addresses that all show the same content. This can happen with online photo galleries, for example, where different settings might create unique URLs for the same pictures. Crawlers need to be smart to avoid downloading the same content many times. They try to find the unique content without getting stuck in endless combinations of addresses.
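One way a crawler can notice that different addresses give the same content is to compute a "fingerprint" (a hash) of each downloaded page and remember which fingerprints it has already seen. If a new page's fingerprint is already known, the crawler can skip following that page's links again. The Python sketch below uses SHA-256 from the standard `hashlib` module and made-up gallery addresses. It only catches pages that are exactly identical, so treat it as one simple technique, not the full answer.

```python
import hashlib


def content_fingerprint(html):
    """A short code that is the same whenever two pages have identical content."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def keep_unique(pages):
    """Given (url, html) pairs, keep only the first URL seen for each distinct content."""
    seen = set()
    unique = []
    for url, html in pages:
        fingerprint = content_fingerprint(html)
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(url)
    return unique


# Hypothetical photo gallery: the first two addresses show the same pictures.
gallery = [
    ("https://example.org/gallery?sort=name", "<img src='cat.jpg'><img src='dog.jpg'>"),
    ("https://example.org/gallery?sort=name&size=big", "<img src='cat.jpg'><img src='dog.jpg'>"),
    ("https://example.org/gallery?page=2", "<img src='fish.jpg'>"),
]
print(keep_unique(gallery))
```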

Crawler Rules and Policies

A web crawler's behavior is guided by several important rules, or "policies."

What to Download

This rule helps the crawler decide which pages to download. Since the web is so vast, even big search engines only cover a part of it. A crawler wants to download the most important and relevant pages first.

The "importance" of a page can depend on its quality, how many other pages link to it, or even its web address. For example, a crawler for a specific country might only focus on websites from that country. It's tricky because the crawler doesn't know all the web pages out there when it starts.
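Two of the ideas above, counting how many pages link to a page and sticking to one country's websites, can be combined in a small Python sketch. Everything here is an assumption for illustration: the link graph is made up, `.de` (Germany) is just an example country ending, and counting links is only one simple way to measure importance. The graph holds only the links the crawler has seen so far, which is exactly why the ranking can be wrong early in a crawl.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical link graph: each known page maps to the pages it links to.
KNOWN_LINKS = {
    "https://a.example.de/": ["https://b.example.de/", "https://c.example.com/"],
    "https://b.example.de/": ["https://c.example.com/", "https://d.example.de/"],
    "https://c.example.com/": ["https://b.example.de/"],
}


def in_link_counts(links):
    """Count how many known pages link to each page."""
    counts = Counter()
    for targets in links.values():
        counts.update(set(targets))  # a page that links twice still counts once
    return counts


def country_ranking(links, country_suffix):
    """Rank linked-to pages from one country's domain, most-linked first."""
    counts = in_link_counts(links)
    in_country = [
        url for url in counts
        if (urlparse(url).hostname or "").endswith(country_suffix)
    ]
    # Highest count first; ties are broken alphabetically.
    return sorted(in_country, key=lambda url: (-counts[url], url))


print(country_ranking(KNOWN_LINKS, ".de"))
```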

Some crawlers try to find only pages about a specific topic. These are called focused crawlers or topical crawlers. For example, an academic crawler might focus on finding free research papers in PDF format.
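A focused crawler needs some way to guess whether a page is on-topic before following its links. The Python sketch below uses the simplest possible guess: what fraction of the words on the page come from a topic word list. The word list and the 0.1 threshold are made-up assumptions, and real focused crawlers may use much smarter text classifiers.

```python
import re

# Hypothetical topic: words that suggest a page is about space.
TOPIC_WORDS = {"planet", "star", "rocket", "moon", "orbit"}


def topic_score(text):
    """Fraction of the words on a page that are topic words (0.0 to 1.0)."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in TOPIC_WORDS)
    return hits / len(words)


def should_follow(text, threshold=0.1):
    """Follow a page's links only if the page looks on-topic enough."""
    return topic_score(text) >= threshold


print(should_follow("The rocket flew past the moon into orbit."))  # True
print(should_follow("Here is a recipe for banana bread."))         # False
```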

Avoiding Duplicate Content

Crawlers often use a process called URL normalization. This means they rewrite web addresses into one standard form, so they don't visit the same page twice just because its URL was written slightly differently. For example, they might change the website name in a URL to lowercase, remove unneeded "." and ".." parts of the path, or add a missing "/" at the end, turning `HTTP://Example.org` into `http://example.org/`.
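Here is a minimal Python sketch of a few normalization steps, built on the standard `urllib.parse` module. It lowercases the scheme and website name (these parts are not case-sensitive), resolves "." and ".." path parts, and turns an empty path into "/". It also drops the "#..." part of an address, which is an extra choice made for this example: that part is never sent to the website, so it cannot change which page comes back. Real crawlers may apply more, or different, rules.

```python
from urllib.parse import urlsplit, urlunsplit


def remove_dot_segments(path):
    """Resolve "." and ".." parts of a path, e.g. /a/./b/../c -> /a/c."""
    output = []
    for segment in path.split("/"):
        if segment == ".":
            continue
        if segment == "..":
            if len(output) > 1:  # never climb above the site root
                output.pop()
            continue
        output.append(segment)
    result = "/".join(output)
    # A path ending in "/." or "/.." names a folder, so keep a trailing slash.
    if path.endswith(("/.", "/..")) and not result.endswith("/"):
        result += "/"
    return result


def normalize_url(url):
    """Rewrite a URL into one standard form."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    netloc = parts.netloc.lower()                  # the website name part
    path = remove_dot_segments(parts.path) or "/"  # an empty path becomes "/"
    return urlunsplit((scheme, netloc, path, parts.query, ""))  # drop "#..."


print(normalize_url("HTTP://Example.ORG/a/./b/../c.html#top"))  # http://example.org/a/c.html
```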

Finding Hidden Pages

Some crawlers are designed to find pages that few or no other pages link to. A path-ascending crawler, for instance, climbs up through every folder in each web address it plans to crawl. If it finds `http://example.org/folder/page.html`, it will also try to visit `http://example.org/folder/` and `http://example.org/`. This helps it find "hidden" pages and files that a regular crawl would miss because no link points to them.
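The "climb up through every folder" step can be written as a short Python function using the standard `urllib.parse` module. The function name `ascending_paths` is made up for this sketch. It lists the folders from the deepest one up to the site root, matching the example above.

```python
from urllib.parse import urlsplit, urlunsplit


def ascending_paths(url):
    """List the URL of every folder above a page, from deepest to the site root."""
    parts = urlsplit(url)
    folders = parts.path.split("/")[1:-1]  # folder names, without the page name
    results = []
    for depth in range(len(folders), -1, -1):
        folder_path = "/" + "".join(name + "/" for name in folders[:depth])
        results.append(urlunsplit((parts.scheme, parts.netloc, folder_path, "", "")))
    return results


print(ascending_paths("http://example.org/folder/page.html"))
# ['http://example.org/folder/', 'http://example.org/']
```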

When to Re-visit Pages

The internet is always changing. Pages are created, updated, or deleted all the time. This rule helps the crawler decide when to check pages again for changes.

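Search engines want their copies of web pages to be as fresh as possible. Two common ways to measure this are freshness and age:

- Freshness tells whether the saved copy still matches the real page. It is either yes (the copy is fresh) or no (the page has changed since it was copied).

- Age tells how long the saved copy has been out of date, counting from the moment the real page changed. A copy that still matches the real page has an age of zero.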
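Written as formulas, the two measures might look like this. Here $p$ is a page, $t$ is a moment in time, $N$ is the number of pages the crawler keeps copies of, and $t_{\mathrm{mod}}(p)$ is a symbol introduced just for this example: the time the real page first changed after its copy was saved.

```latex
F_p(t) =
\begin{cases}
  1 & \text{if the saved copy of page } p \text{ matches the live page at time } t,\\
  0 & \text{otherwise;}
\end{cases}
\qquad
A_p(t) =
\begin{cases}
  0 & \text{if page } p \text{ has not changed since it was copied},\\
  t - t_{\mathrm{mod}}(p) & \text{otherwise;}
\end{cases}
\qquad
\bar{F}(t) = \frac{1}{N}\sum_{i=1}^{N} F_{p_i}(t).
```

For example, if a page's copy was saved at 1:00 and the real page changed at 3:00, then at 5:00 its freshness is 0 and its age is 2 hours. Averaging $A_{p_i}(t)$ the same way as $\bar{F}(t)$ gives the average age.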

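Crawlers try to keep the average freshness high or the average age low. It might seem best to re-visit pages that change often much more frequently than the others, but researchers have found that this can backfire: a page that changes very often goes out of date again almost right away, so the extra visits add little freshness. In some studies, simply checking every page at the same rate kept the average freshness higher. A good plan checks busy pages regularly without ignoring pages that change less often.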

Being Polite to Websites

Crawlers can download data much faster than people. If a crawler sends too many requests to a website too quickly, it can slow down or even crash the website's server. This is why "politeness" is very important.

The `robots.txt` file is a common way for website owners to tell crawlers which parts of their site they should not access. Some crawlers also understand a "Crawl-delay:" setting in this file, even though it is not part of the official robots.txt standard. It tells the crawler how many seconds to wait between requests to that website, which helps keep the crawler from overwhelming the server.
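Python's standard library includes a `robots.txt` reader, `urllib.robotparser`, so a crawler written in Python can check the rules before each visit. In the sketch below, the robots.txt text and the crawler name `ExampleKidsBot` are made up. `crawl_delay()` needs Python 3.6 or newer.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt file for an example website.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

agent = "ExampleKidsBot"  # hypothetical crawler name
print(rules.can_fetch(agent, "https://example.org/index.html"))          # True
print(rules.can_fetch(agent, "https://example.org/private/notes.html"))  # False
print(rules.crawl_delay(agent))  # 5
```

A crawler could then wait that many seconds between requests to the site, for example with `time.sleep(5)`. In a real crawler, `rules.set_url(...)` followed by `rules.read()` would download the site's actual robots.txt instead of using a hard-coded string.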

It's a balance: crawlers need to work fast to keep up with the changing web, but they also need to be careful not to cause problems for websites.

Working Together

A parallel crawler is a crawler that runs many processes at the same time. This helps it download pages much faster. These parallel processes need to work together to avoid downloading the same page multiple times.
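One common way to stop parallel processes from downloading the same page twice is to give each website to exactly one process. The Python sketch below does this by hashing the website name. Two details are choices made for this example: it splits the work by website, not by single page, and it uses SHA-256 from `hashlib` because Python's built-in `hash()` of a string can give different answers in different processes. The function name `assign_worker` is hypothetical.

```python
import hashlib
from urllib.parse import urlsplit


def assign_worker(url, num_workers):
    """Pick which crawler process (0 to num_workers - 1) handles this URL.

    Every URL on the same website goes to the same process, so two processes
    never fetch the same page, and one process can keep that site's
    politeness rules on its own.
    """
    host = urlsplit(url).hostname or ""  # .hostname is already lowercase
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_workers


for url in ["https://example.org/a.html", "https://EXAMPLE.org/b.html", "https://example.net/"]:
    print(url, "-> process", assign_worker(url, num_workers=4))
```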

Crawler Security and Identification

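While most website owners want their pages indexed by search engines, web crawling can sometimes lead to problems. If a search engine accidentally indexes private information or pages that show security weaknesses, it could cause a data breach. Website owners can use `robots.txt` to block crawlers from private or sensitive parts of their sites. Keep in mind, though, that `robots.txt` is only a polite request: well-behaved crawlers follow it, but it does not lock anything, and anyone can read the file. Truly private pages also need real protection, like a password.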

Crawlers usually identify themselves to websites using a "User-agent" field in their requests. This is like a name tag that tells the website who is visiting. Website owners can check their server logs to see which crawlers have visited and how often. This also helps them contact the crawler's owner if there's a problem, like the crawler getting stuck or overloading the server.
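In Python, a crawler can attach its "name tag" by setting the `User-Agent` header on each request made with the standard `urllib.request` module. The crawler name and information page below are hypothetical. Adding a "+" and a web address where site owners can learn about the bot is a common habit among crawlers, not a rule. Building the `Request` object does not contact the website; `urllib.request.urlopen(request)` would actually send it.

```python
from urllib.request import Request

# Hypothetical crawler name plus a page where site owners can learn about it.
USER_AGENT = "ExampleKidsBot/1.0 (+https://example.org/bot-info.html)"

request = Request("https://example.org/", headers={"User-Agent": USER_AGENT})

# urllib stores header names with only the first letter capitalized.
print(request.get_header("User-agent"))
```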

Exploring the Deep Web

A huge amount of information on the internet is in the Deep Web. These are pages that aren't easily found by regular crawlers because they are often hidden behind search forms or databases. For example, when you search for a book in a library's online catalog, the results page is part of the Deep Web.

Special techniques are used to crawl the Deep Web. One method is called screen scraping, where software automatically fills out web forms and collects the results. This helps crawlers find information that traditional methods might miss. Pages built with AJAX (a technology that makes web pages more interactive) can also be tricky for crawlers, but search engines like Google have found ways to index them.
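The "fills out web forms" step often just means building the same address a browser would visit after someone types into a search box and presses the button. This Python sketch shows only that step, using `urllib.parse.urlencode`. The library catalog address and the form field names `q` and `page` are made up, and the sketch assumes the form sends its answers in the address (a "GET" form). Forms that send them another way would need a different kind of request.

```python
from urllib.parse import urlencode

# Hypothetical search form on a library catalog website.
CATALOG_SEARCH = "https://library.example.org/search"


def form_query_urls(search_words, pages=1):
    """Build the addresses a browser would visit after submitting the form."""
    urls = []
    for word in search_words:
        for page in range(1, pages + 1):
            query = urlencode({"q": word, "page": page})
            urls.append(f"{CATALOG_SEARCH}?{query}")
    return urls


for url in form_query_urls(["dinosaurs", "space travel"]):
    print(url)
# https://library.example.org/search?q=dinosaurs&page=1
# https://library.example.org/search?q=space+travel&page=1
```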

Different Types of Crawlers

There are different kinds of web crawlers, depending on who uses them and what they are designed to do.

Visual vs. Programmatic Crawlers

- Programmatic crawlers need someone to write computer code to tell them exactly what to do.

- Visual crawlers are easier to use. You can "teach" them what data to collect by simply highlighting information on a web page in your browser. This makes it easier for people without coding skills to use them.
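With a programmatic crawler, the person writes code that spells out exactly which parts of a page to keep. This Python sketch uses the standard `html.parser` module to keep a page's title and the text of its `<h2>` headings. Which tags to collect is just an example choice, and the class name `HeadingCollector` is made up.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects the page title and the text of every <h2> heading."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.headings = []
        self._inside = None  # which collected tag's text we are reading right now

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "h2"):
            self._inside = tag
            if tag == "h2":
                self.headings.append("")

    def handle_endtag(self, tag):
        if tag == self._inside:
            self._inside = None

    def handle_data(self, data):
        if self._inside == "title":
            self.title += data
        elif self._inside == "h2":
            self.headings[-1] += data


page = (
    "<html><head><title>Planets</title></head><body>"
    "<h2>Mars</h2><p>The red planet.</p><h2>Venus</h2></body></html>"
)
collector = HeadingCollector()
collector.feed(page)
print(collector.title, collector.headings)  # Planets ['Mars', 'Venus']
```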

Famous Web Crawlers

Many well-known companies have their own web crawlers:

- Applebot is Apple's crawler, used for things like Siri.

- Bingbot is Microsoft's crawler for its Bing search engine.

- Baiduspider is the crawler for Baidu, a popular search engine in China.

- DuckDuckBot is DuckDuckGo's crawler.

- Googlebot is Google's famous crawler. It's a very complex system that helps Google index billions of pages.

There are also many open-source crawlers that anyone can use and modify, like Apache Nutch or Scrapy. These are often used by researchers or developers to build their own search tools.

See also

In Spanish: Araña web para niños

- Automatic indexing

- Web archiving

- Webgraph

- Website mirroring software

- Web scraping

