Microarchitecture facts for kids

Microarchitecture (sometimes shortened to µarch or uarch) is like the secret blueprint of a computer's brain. It describes all the tiny electrical parts and how they work together to make the computer run. Think of it as the detailed design of a car's engine, showing every gear and wire.

While people in schools and universities might call it computer organization, those who build computers usually say microarchitecture. It works hand-in-hand with something called the instruction set architecture (ISA). Together, these two ideas make up the whole field of computer architecture.

What is Microarchitecture?

Microarchitecture is all about the hidden parts of a computer's central processing unit (CPU). It shows how the CPU is built inside and how its different pieces connect. This is different from the instruction set architecture (ISA), which is like the language a programmer uses to tell the computer what to do.

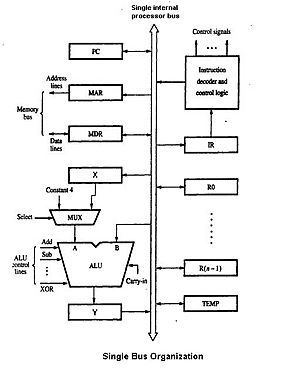

Imagine the ISA is a list of commands, like "add these two numbers" or "save this information." The microarchitecture is the actual machinery inside the CPU that carries out those commands. It includes everything from tiny logic gates (which are like tiny switches) to bigger parts like ALUs (arithmetic logic units, which do whole-number math and logic) and FPUs (floating point units, which do math on numbers with decimal points).

Here are some key ideas about microarchitecture:

- A single microarchitecture can sometimes be adapted to run more than one instruction set. This is like having one engine design that can be used in different car models.

- Two computers might have the same microarchitecture but be built with totally different physical parts.

- Computers with different microarchitectures can still run the same programs. Newer microarchitectures often make computers faster and more powerful.
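The last point above can be sketched in code. This is only a toy model, not how any real CPU is described: the one-command "instruction set" (`ADD`) and both machines are made up for illustration. Machine A adds numbers one bit at a time using gate-like operations, while Machine B adds in a single step. They are built differently inside, but they give the same answers for the same program.

```python
# A toy "ISA": the program is a list of (operation, a, b) commands.
# Both machines understand the same commands but work differently inside.
# All names here are hypothetical and exist only for this example.

def add_with_gates(a, b, bits=8):
    """Add two small non-negative numbers one bit at a time (a ripple-carry adder)."""
    result, carry = 0, 0
    for i in range(bits):
        x = (a >> i) & 1
        y = (b >> i) & 1
        total = x ^ y ^ carry                  # sum bit, built from XOR gates
        carry = (x & y) | (carry & (x ^ y))    # carry bit, built from AND/OR gates
        result |= total << i
    return result                              # anything past 8 bits is dropped

def run_machine_a(program):
    """Machine A: does each ADD with the bit-by-bit adder."""
    return [add_with_gates(a, b) for op, a, b in program if op == "ADD"]

def run_machine_b(program):
    """Machine B: does each ADD in one step, keeping only the low 8 bits."""
    return [(a + b) & 0xFF for op, a, b in program if op == "ADD"]

program = [("ADD", 2, 3), ("ADD", 100, 27), ("ADD", 200, 100)]
results_a = run_machine_a(program)
results_b = run_machine_b(program)
print(results_a)
print(results_b)
```

Both lists come out the same because the program only depends on what `ADD` means, not on how the machine carries it out.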

Simple Descriptions

Sometimes, when people talk about microarchitecture, especially in marketing, they give a very simple overview. They might mention things like how wide the data "highway" (bus) is, or what types of parts handle different tasks. They might also show how the CPU processes instructions in steps, like an assembly line.

How Microarchitecture Works

Modern computers use a special design called a pipelined datapath. This is like an assembly line for instructions. Instead of finishing one instruction completely before starting the next, the pipeline lets many instructions be worked on at the same time, but at different stages.

Think of it like this:

- Stage 1: Fetch – The CPU gets the next instruction.

- Stage 2: Decode – The CPU figures out what the instruction means.

- Stage 3: Execute – The CPU performs the instruction.

- Stage 4: Write Back – The CPU saves the results.

Some designs might have more stages, like a "memory access" stage. Designing these pipelines is a big part of microarchitecture.
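The assembly line with the four stages listed above can be drawn with a short program. This is a simplified sketch: it assumes every stage takes exactly one clock cycle and that nothing ever has to wait. The function name `pipeline_schedule` and the instruction names `I1`, `I2`, `I3` are made up for the example.

```python
STAGES = ["Fetch", "Decode", "Execute", "Write Back"]

def pipeline_schedule(instructions):
    """Return, for each clock cycle, which instruction is in which stage."""
    total_cycles = len(instructions) + len(STAGES) - 1
    schedule = []
    for cycle in range(total_cycles):
        row = {}
        for stage_index, stage in enumerate(STAGES):
            # An instruction enters Fetch one cycle after the one before it,
            # then moves one stage forward every cycle.
            instr_index = cycle - stage_index
            if 0 <= instr_index < len(instructions):
                row[stage] = instructions[instr_index]
        schedule.append(row)
    return schedule

schedule = pipeline_schedule(["I1", "I2", "I3"])
for cycle, row in enumerate(schedule, start=1):
    print(f"cycle {cycle}: {row}")
```

In the third cycle, `I3` is being fetched while `I2` is decoded and `I1` is executed, so three parts of the CPU are busy at once.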

Other important parts of microarchitecture include:

- Execution Units: These are the parts that actually do the work. They include arithmetic logic units (ALUs) for math, floating point units (FPUs) for complex calculations, and units for loading and storing data.

- Memory: How much memory the system has, how fast it is, and how it's connected are all microarchitectural choices.

- Peripherals: Deciding whether to include other parts like memory controllers is also part of the design.

When designing microarchitecture, engineers think about many things beyond just making it fast. They also consider:

- Cost: How much will it cost to make?

- Power: How much electricity will it use?

- Complexity: How difficult will it be to build?

- Manufacturing: Can it be easily mass-produced?

- Debugging: Can we find and fix problems easily?

Key Microarchitecture Ideas

All CPUs follow a basic set of steps to run programs:

- Read an instruction and understand it.

- Find any data needed for that instruction.

- Process the instruction.

- Write down the results.

A big challenge is that the computer's memory (where instructions and data are stored) is much slower than the CPU itself. This can cause delays, or "stalls," while the CPU waits for data. A lot of effort goes into making designs that avoid these delays. The goal is to run more instructions at the same time, making the computer faster.

In the past, these advanced techniques were only found in huge, expensive computers. But as technology improved, more and more of these ideas could be built into a single small chip.

Instruction Pipelining

One of the first and most powerful ways to make CPUs faster is using the instruction pipeline. Older CPUs would finish one instruction completely before starting the next. This meant many parts of the CPU were just sitting idle.

Pipelines fix this by letting different parts of many instructions be processed at the same time. For example, while one instruction is being executed, the next one can be decoded, and another one can be fetched. Each instruction still goes through every step, but the CPU finishes instructions more often because all of its parts stay busy.
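A little arithmetic shows how much time a pipeline can save. The sketch below assumes that every stage takes one clock cycle and that the pipeline never has to stop and wait, which real CPUs cannot always manage. The function names are made up for this example.

```python
def cycles_without_pipeline(num_instructions, num_stages):
    """Each instruction must finish every stage before the next one starts."""
    return num_instructions * num_stages

def cycles_with_pipeline(num_instructions, num_stages):
    """The first instruction needs every stage; after that, one finishes per cycle."""
    if num_instructions == 0:
        return 0
    return num_stages + (num_instructions - 1)

slow = cycles_without_pipeline(100, 4)
fast = cycles_with_pipeline(100, 4)
print(slow, fast)
```

Under these assumptions, 100 instructions on a 4-stage design take 400 cycles without a pipeline and 103 cycles with one.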

Cache Memory

As chips got more complex, designers added more cache memory right onto the CPU chip. Cache is super-fast memory that the CPU can access very quickly. If the data the CPU needs is in the cache, it gets it almost instantly. If not, the CPU has to wait for it to be read from slower main memory.

A bigger cache usually means fewer slow trips to main memory, so the computer runs faster. Cache and pipelines work well together: the cache keeps the pipeline supplied with instructions and data, so it does not have to stop and wait for slower main memory as often.
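Here is a small sketch of why a bigger cache can help. It is a toy model, not a real cache design: the costs `HIT_COST` and `MISS_COST` are assumed numbers, and the cache simply throws out the address that was used least recently when it gets full.

```python
from collections import OrderedDict

HIT_COST = 1      # assumed cycles when the data is already in the cache
MISS_COST = 100   # assumed cycles when the data must come from main memory

def run_accesses(addresses, cache_size):
    """Return (hits, misses, total cycles) for a list of memory addresses."""
    cache = OrderedDict()   # oldest (least recently used) address comes first
    hits = misses = 0
    for addr in addresses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # mark as recently used
        else:
            misses += 1
            cache[addr] = True
            if len(cache) > cache_size:
                cache.popitem(last=False)  # evict the least recently used address
    return hits, misses, hits * HIT_COST + misses * MISS_COST

addresses = [1, 2, 3, 1, 2, 3, 1, 2, 3]
small = run_accesses(addresses, cache_size=2)
big = run_accesses(addresses, cache_size=4)
print(small)
print(big)
```

With room for only two addresses, every access in this pattern misses and the run costs 900 cycles. With room for four, only the first three accesses miss and the run costs 306 cycles.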

Branch Prediction and Speculative Execution

Sometimes, a program has a "branch" instruction, which means the computer has to decide which path of code to follow next. This can cause delays because the CPU has to wait to know which path to take.

To avoid these delays, CPUs use branch prediction. This is where the hardware tries to guess which path the program will take. If the guess is right, the CPU can start getting instructions for that path early. Speculative execution takes this a step further: the CPU actually starts running the code on the guessed path, even before it knows for sure if the guess was correct. If the guess was wrong, it throws away that work and starts again on the correct path, which costs some time.
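One well-known way to make these guesses is a "two-bit counter." The article does not say which method any particular CPU uses, so treat this as just one possible sketch. The counter goes from 0 to 3: values 2 and 3 mean "guess that the branch is taken," and 0 and 1 mean "guess that it is not." The starting value of 2 is an assumption made for this example.

```python
def count_correct_guesses(outcomes):
    """outcomes: list of True (branch taken) or False (not taken).
    Returns how many times a two-bit counter guessed correctly."""
    counter = 2          # assumed starting point: weakly guess "taken"
    correct = 0
    for taken in outcomes:
        guess = counter >= 2
        if guess == taken:
            correct += 1
        # Nudge the counter toward what really happened, staying within 0..3.
        if taken:
            counter = min(counter + 1, 3)
        else:
            counter = max(counter - 1, 0)
    return correct

loop_branch = [True] * 9 + [False]   # a loop that repeats 9 times, then exits
print(count_correct_guesses(loop_branch), "out of", len(loop_branch))
```

For a branch that mostly goes the same way, such as the one at the end of a loop, this simple guesser is right most of the time. It does much worse on a branch that flips back and forth.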

Out-of-Order Execution

Even with cache, the CPU might still have to wait for data. Out-of-order execution helps with this. If one instruction is waiting for data, the CPU can go ahead and process another instruction that is ready to go. Then, it puts the results back in the correct order so it looks like everything happened in the original sequence.
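The idea can be tried out with a toy model. In this sketch the CPU can start one instruction per cycle, and an instruction may start only when the results it needs are ready. The program, the instruction names, and the number of cycles each one takes are all made up. The sketch also leaves out the last step of putting results back in the original order.

```python
def run(program, in_order):
    """program: list of (name, cycles_needed, names_it_depends_on).
    Returns (order in which instructions started, cycle when all are done)."""
    ready_time = {}            # name -> cycle when its result is ready
    waiting = list(program)
    started = []
    cycle = 0
    while waiting:
        # An in-order CPU may only look at the oldest waiting instruction.
        candidates = waiting[:1] if in_order else list(waiting)
        for instr in candidates:
            name, cycles_needed, deps = instr
            if all(d in ready_time and ready_time[d] <= cycle for d in deps):
                ready_time[name] = cycle + cycles_needed
                started.append(name)
                waiting.remove(instr)
                break          # at most one instruction starts per cycle
        cycle += 1
    return started, max(ready_time.values())

program = [
    ("load", 5, []),        # slow: has to fetch data from memory
    ("use", 1, ["load"]),   # needs the loaded data
    ("add", 1, []),         # does not depend on anything above
    ("mul", 1, []),         # does not depend on anything above
]
in_order_result = run(program, in_order=True)
out_of_order_result = run(program, in_order=False)
print(in_order_result)
print(out_of_order_result)
```

In order, `add` and `mul` are stuck behind `use`, and everything is done at cycle 8. Out of order, they run while the load is still waiting, and everything is done at cycle 6.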

Superscalar Processors

As chips got even more powerful, designers found ways to make CPUs process multiple instructions at the exact same time. This is what superscalar processors do. They have multiple "execution units" (like ALUs) that can work in parallel.

Modern CPUs often have several units for different tasks, like two units for loading data, one for storing, and multiple units for math. The CPU's "instruction issue logic" is very smart; it reads many instructions and sends them to any available execution unit.
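The sketch below shows how extra execution units can cut the number of cycles. It is a simplified model: it assumes the instructions do not depend on each other and that each one takes a single cycle. The unit counts follow the example in the text (two load units, one store unit), while the two math units, the `issue_width` limit, and the function name are assumptions for illustration.

```python
def cycles_needed(instructions, units, issue_width):
    """instructions: list of kinds such as "load", "store", "math".
    units: how many execution units exist for each kind.
    issue_width: the most instructions the CPU can send out in one cycle."""
    if issue_width < 1:
        raise ValueError("issue_width must be at least 1")
    for kind in instructions:
        if units.get(kind, 0) < 1:
            raise ValueError(f"no execution unit for {kind!r}")
    waiting = list(instructions)
    cycles = 0
    while waiting:
        free = dict(units)     # every unit is free again at the start of a cycle
        issued = 0
        still_waiting = []
        for kind in waiting:
            if issued < issue_width and free[kind] > 0:
                free[kind] -= 1
                issued += 1
            else:
                still_waiting.append(kind)
        waiting = still_waiting
        cycles += 1
    return cycles

work = ["load", "load", "math", "math", "store", "math"]
units = {"load": 2, "store": 1, "math": 2}
one_at_a_time = cycles_needed(work, units, issue_width=1)
superscalar = cycles_needed(work, units, issue_width=4)
print(one_at_a_time, superscalar)
```

Sending out one instruction per cycle takes 6 cycles for this list. Sending out up to four per cycle takes only 2.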

Register Renaming

Register renaming is a clever trick to avoid unnecessary delays. Sometimes, different parts of a program want to use the same temporary storage spot (called a "register"), even though they are working on unrelated values. Without renaming, one has to wait for the other to finish with it. Register renaming quietly gives each part its own spot inside the CPU, allowing them to work at the same time.
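A minimal sketch of the idea: every time an instruction writes to a register, it is given a brand-new hidden register, and later instructions read from whichever hidden register holds the newest value. The register names (`r1`, `p0`, and so on) and the tiny program are made up for this example, and a real CPU has only a limited number of hidden registers.

```python
def rename(program):
    """program: list of (destination register, list of source registers).
    Returns the same program using fresh hidden registers p0, p1, ..."""
    newest = {}       # register name in the program -> hidden register holding it
    next_id = 0
    renamed = []
    for dest, sources in program:
        # Read each source from wherever its newest value lives.
        new_sources = [newest.get(s, s) for s in sources]
        new_dest = f"p{next_id}"   # every write gets its own hidden register
        next_id += 1
        newest[dest] = new_dest
        renamed.append((new_dest, new_sources))
    return renamed

program = [
    ("r1", ["a", "b"]),   # r1 = a + b
    ("r2", ["r1"]),       # r2 is made from r1
    ("r1", ["c", "d"]),   # r1 is reused for something unrelated
    ("r3", ["r1"]),       # r3 is made from the new r1
]
renamed = rename(program)
for line in renamed:
    print(line)
```

After renaming, the third instruction writes to `p2` instead of overwriting `r1`, so it no longer has to wait for the second instruction to finish reading the old value.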

Multiprocessing and Multithreading

Because CPU speeds have improved faster than memory speeds, and because making a single, super-fast CPU uses a lot of power, newer computers focus on running many things at once. This is sometimes called "throughput computing".

One way to do this is with multiprocessing, where a computer has several CPUs working together. In the past, this was only for huge, expensive computers, but now even personal computers can have multiple CPUs.

Another way is with multi-core processors, where several CPUs are built onto the same single chip. This is common in many computers today.

Multithreading is another technique. If a CPU has to wait for data from slow memory, instead of doing nothing, it can quickly switch to working on another program or part of a program that is ready. This doesn't make one program faster, but it makes the whole computer system more efficient because the CPU is always busy.
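The switching described above can be modeled with a short program. This is a toy sketch: each thread is a string where `C` means one cycle of computing and `M` means asking memory for data and then waiting. The waiting time `MEMORY_WAIT` is an assumed number, and switching between threads is treated as free.

```python
MEMORY_WAIT = 3   # assumed cycles a thread is stuck after asking memory for data

def run_threads(threads):
    """threads: list of strings made of 'C' (compute) and 'M' (memory access).
    Returns (total cycles, cycles in which the CPU did some work)."""
    position = [0] * len(threads)   # next step of each thread
    ready_at = [0] * len(threads)   # cycle when each thread may run again
    cycle = 0
    busy = 0
    while any(position[i] < len(t) for i, t in enumerate(threads)):
        for i, t in enumerate(threads):
            if position[i] < len(t) and ready_at[i] <= cycle:
                step = t[position[i]]
                position[i] += 1
                if step == "M":
                    ready_at[i] = cycle + 1 + MEMORY_WAIT
                busy += 1
                break               # only one thread uses the CPU per cycle
        cycle += 1
    return max([cycle] + ready_at), busy

alone = run_threads(["MCC"])
together = run_threads(["MCC", "MCC"])
print(alone)
print(together)
```

One thread alone takes 6 cycles, so running two of them one after the other would take 12. Sharing the CPU, both finish in 8 cycles, because the second thread makes its memory request while the first is waiting. Neither thread finishes sooner than it would alone.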

A more advanced version is simultaneous multithreading. This allows superscalar CPUs to run instructions from different programs at the exact same time, in the same cycle.

See also

- Microprocessor

- Microcontroller

- Multi-core processor

- Digital signal processor

- Instruction-level parallelism (ILP)
