Timnit Gebru facts for kids

Quick facts for kids

| Timnit Gebru | |
|---|---|
| Born | Timnit W. Gebru, 1982/1983 (age 42–43), Addis Ababa, Ethiopia |
| Alma mater | Stanford University |
| Known for | Algorithmic bias, stochastic parrots |
| Scientific career | |
| Fields | Fairness in machine learning |
| Institutions | |
| Thesis | Visual computational sociology: computer vision methods and challenges (2017) |
| Doctoral advisor | Fei-Fei Li |

Timnit W. Gebru (born in 1982 or 1983) is a brilliant computer scientist from Ethiopia. She is known for her important work in artificial intelligence (AI). She especially focuses on making sure AI systems are fair and don't have biases. She also studies data mining.

Timnit Gebru co-founded Black in AI, a group that helps more Black people get involved in AI research and development. She also started her own research center, the Distributed Artificial Intelligence Research Institute (DAIR).

In December 2020, Timnit left her job at Google. She was a leader on the Ethical Artificial Intelligence Team there. This happened after a disagreement about a research paper she co-wrote. The paper discussed the potential risks of very large AI language models.

Timnit Gebru is widely recognized for her expertise in AI ethics. In 2021, Fortune magazine named her one of the World's 50 Greatest Leaders. The journal Nature also listed her as one of ten people who shaped science in 2021. In 2022, Time magazine included her among the world's most influential people.

Growing Up and Learning

Timnit Gebru grew up in Addis Ababa, Ethiopia. Her father, an electrical engineer, passed away when she was five. Her mother, an economist, raised her. Both of her parents were from Eritrea.

When Timnit was 15, she left Ethiopia for safety during a time of conflict between Eritrea and Ethiopia. She lived briefly in Ireland before receiving political asylum in the United States. She described this experience as challenging.

Timnit settled in Somerville, Massachusetts, to attend high school. There, she sometimes faced unfair treatment. For example, some teachers did not allow her to take certain advanced classes, even though she was a very good student.

After high school, an event helped Timnit decide to focus on ethics in technology. A friend of hers was hurt, and when Timnit called the police, her friend was arrested instead. This made Timnit realize that unfairness can happen within systems. It inspired her to work on making technology more just.

In 2001, Timnit was accepted into Stanford University. She earned her bachelor's and master's degrees in electrical engineering. Later, in 2017, she completed her PhD in computer vision. Her advisor during her PhD program was Fei-Fei Li.

Timnit presented her PhD research at a competition in 2017. She won, which led to many collaborations with other experts.

In 2016 and 2018, Timnit returned to Ethiopia. She helped with a coding program called AddisCoder.

During her PhD, Timnit also wrote a paper about her worries for the future of AI. She pointed out the dangers of not having enough different people working in the field. She felt that some parts of the culture were not welcoming to everyone. She also noted how human biases could show up in machine learning systems.

Timnit's Career Journey

Early Work at Apple

Timnit Gebru started as an intern at Apple while at Stanford. She worked on parts for audio devices. The next year, she was offered a full-time job. Her manager at Apple described her as "fearless."

During her time at Apple, Timnit became more interested in creating software. She focused on computer vision that could detect human shapes. She also helped develop special algorithms for the first iPad. At the time, she didn't think about how this technology could be used for surveillance. She just found it technically interesting.

Years later, Timnit shared that she had also experienced difficulties at Apple. She believed that big companies should be more open about how they treat their employees.

Research at Stanford and Microsoft

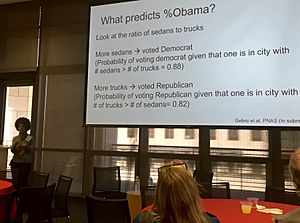

In 2013, Timnit joined Fei-Fei Li's lab at Stanford. There, she combined deep learning with Google Street View images. She used this to estimate information about neighborhoods in the United States. Her research showed that things like voting patterns, income, and education could be guessed by looking at the types of cars people drove.

In 2015, Timnit attended a major AI conference called Neural Information Processing Systems (NIPS). She noticed that very few Black researchers were there. The next year, she was the only Black woman among 8,500 attendees. Because of this, she and her colleague Rediet Abebe started Black in AI. This group helps increase the presence and support of Black professionals in artificial intelligence.

In 2017, Timnit became a researcher at Microsoft. She worked in their Fairness, Accountability, Transparency, and Ethics in AI (FATE) lab. She spoke about how a lack of diversity can create biases in AI systems.

While at Microsoft, Timnit co-wrote an important paper called Gender Shades. This paper looked at facial recognition software. It found that some systems were much less accurate at recognizing Black women than White men. For example, in one system, Black women were 35% less likely to be recognized than White men.

Working on AI Ethics at Google

Timnit Gebru joined Google in 2018. She co-led a team focused on the ethics of artificial intelligence with Margaret Mitchell. Her team studied how AI impacts society. They worked to make technology better for everyone.

In 2019, Timnit and other AI researchers asked Amazon to stop selling its facial recognition technology to police. They believed it was unfair to women and people of color. Timnit also said in an interview that facial recognition is too risky for law enforcement right now.

Leaving Google

In 2020, Timnit and five other authors wrote a paper titled "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜". This paper explored the potential problems with very large AI language models. These problems included their large environmental footprint, high costs, and how they might show unfairness or spread wrong information.

There was a disagreement with Google management about this paper. Google asked her to either withdraw the paper or remove the names of Google employees from it. Timnit asked for more information about this request. She then left Google in December 2020.

After her departure, many people, including Google employees and other experts, showed support for Timnit. Sundar Pichai, the CEO of Google's parent company, started an investigation into the situation. Google later announced changes to how it handles research papers on sensitive topics. They also changed how they manage employee departures.

Starting Her Own Research Institute

In June 2021, Timnit Gebru announced she was starting her own research institute. On December 2, 2021, she launched the Distributed Artificial Intelligence Research Institute (DAIR). This institute focuses on how AI affects groups of people who are often overlooked. It pays special attention to Africa and African immigrants in the United States. One of DAIR's first projects uses AI to study satellite images of towns in South Africa. This helps researchers understand the lasting effects of apartheid, an unfair system that once separated people by race.

Timnit Gebru, along with Émile P. Torres, also created the term TESCREAL. This term describes a group of ideas about the future of technology. Timnit believes some of these ideas, especially about very advanced AI, can be harmful if not thought about carefully. She argues that focusing too much on building AI that can do everything humans can do (called artificial general intelligence, or AGI) can be unsafe. She thinks we should instead focus on making current AI fair and safe.

Awards and Special Recognition

Timnit Gebru, along with Joy Buolamwini and Inioluwa Deborah Raji, won an AI Innovations Award in 2019. They received it for their research that showed how unfairness can exist in facial recognition technology.

In 2021, Fortune magazine named Timnit one of the world's 50 greatest leaders. The scientific journal Nature also included her in a list of ten scientists who made important contributions to science that year.

Time magazine recognized Timnit as one of the most influential people of 2022.

In 2023, the Carnegie Corporation of New York honored her with a Great Immigrants Award. This award recognized her important work in ethical artificial intelligence.

In November 2023, the BBC included her on its 100 Women list. This list features inspiring and influential women from around the world.

Important Writings

- Visual computational sociology: computer vision methods and challenges

See also

In Spanish: Timnit Gebru para niños

- Coded Bias

- Claire Stapleton

- Meredith Whittaker

- Sophie Zhang