History of supercomputing facts for kids

A supercomputer is a computer that is much faster and more powerful than a regular computer. These amazing machines can do billions or even trillions of calculations every second! They are used for very complex tasks, like predicting the weather, designing new medicines, or understanding how the universe works.

The idea of "supercomputing" first came up in the 1920s. Early supercomputers, like the IBM NORC in 1954, were considered super-fast for their time. The CDC 6600, released in 1964, is often called the first true supercomputer.

In the 1980s, supercomputers usually had only a few processors, the parts that do the actual calculating. But by the 1990s, machines with thousands of processors started to appear. These new designs helped supercomputers become much, much faster. By the year 2000, supercomputers were using thousands of "off-the-shelf" processors, similar to those in your home computer. They broke through the teraflop barrier, meaning they could do a trillion calculations per second!

The first ten years of the 21st century saw even more amazing progress. Supercomputers with over 60,000 processors were built. These machines reached petaflop performance levels, meaning they could do a quadrillion (a thousand trillion) calculations per second!


Early Supercomputers: The 1950s and 1960s

The term "Super Computing" was first used in a newspaper called the New York World in 1929. It described large, special machines IBM made for Columbia University to help with calculations.

In 1957, some engineers left a company called Sperry Corporation. They started a new company called Control Data Corporation (CDC) in Minneapolis, Minnesota. Seymour Cray, a brilliant computer designer, joined them a year later.

In 1960, Cray finished the CDC 1604. This was one of the first successful computers to use transistors. Transistors made computers smaller, faster, and more reliable than older ones that used vacuum tubes. At the time, the CDC 1604 was the fastest computer in the world.

Around 1960, Seymour Cray decided to build a computer that would be much faster than any other. After four years of hard work with his team, Cray completed the CDC 6600 in 1964. He used new silicon transistors, which were better than older types. To make it super fast, the parts had to be packed very closely together. This caused a lot of heat, so they had to invent a special cooling system!

The CDC 6600 was three times faster than the previous record holder, the IBM 7030 Stretch. It could do up to three million calculations per second (three megaFLOPS). People called it a supercomputer, and it created a whole new market for these powerful machines. About 200 of them were sold, each costing $9 million!

The 6600 got its speed by letting smaller parts of the computer handle simple tasks. This freed up the main brain, called the CPU (Central Processing Unit), to focus on the really important calculations. In 1968, Cray built the CDC 7600, which was even faster. It could do 3.6 times more calculations per second than the 6600.

Meanwhile, in the United Kingdom, a team at Manchester University started working on a computer called MUSE in 1956. Their goal was to build a computer that could do one million instructions per second.

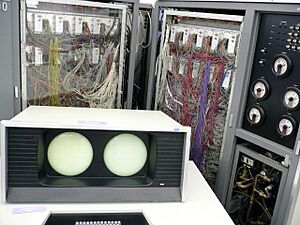

In 1958, a company called Ferranti joined the project. The computer was renamed Atlas. The first Atlas was ready on December 7, 1962. This was about two years before Cray's CDC 6600 was completed! The Atlas was considered the most powerful computer in the world at that time. It was so important that people said if Atlas stopped working, half of the UK's computer power would be lost.

The Atlas computer was a pioneer in many ways. It introduced virtual memory, which allowed the computer to use its main memory and a slower drum memory together to act like one big memory. It also had the Atlas Supervisor, which many people consider the first modern operating system.

The Cray Era: Mid-1970s and 1980s

Four years after leaving CDC, Seymour Cray created the Cray-1 in 1976. This supercomputer became one of the most successful in history. The Cray-1 was a vector processor, which means it was very good at doing the same calculation on many pieces of data at once. It also had a cool feature called "chaining." This allowed the computer to use results from one calculation immediately in the next, making it even faster.
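
To get a feel for these two ideas, here is a small Python sketch. It is only an analogy and not how the Cray-1 hardware actually worked. The function names `scale`, `offset`, and `chained` are made up for this example. Each function applies the same calculation to every number in a list, and the second one starts working on each result as soon as the first one produces it, which is the basic idea behind chaining.

```python
def scale(values, factor):
    """Multiply every element by the same factor, handing results out one by one."""
    for v in values:
        yield v * factor


def offset(values, amount):
    """Add the same amount to every element as soon as it arrives."""
    for v in values:
        yield v + amount


def chained(values, factor, amount):
    """Feed each product straight into the adder without storing the
    whole list of products first (the 'chaining' idea)."""
    return list(offset(scale(values, factor), amount))


data = [1, 2, 3, 4]
print(chained(data, 10, 5))  # prints [15, 25, 35, 45]
```

In this sketch the addition does not wait for all the multiplications to finish. Each product flows directly into the next step, much like results flowed from one calculation into the next on the Cray-1.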

In 1982, the Cray X-MP was released. It was even better at chaining and could do many calculations at the same time. By 1983, Cray and Control Data were the top supercomputer makers. Even though IBM was a huge computer company, it couldn't make a supercomputer that was as successful.

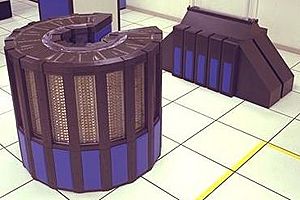

The Cray-2 came out in 1985. This computer had four processors and was cooled by being completely submerged in a special liquid called Fluorinert. It was the first supercomputer to break the gigaflop barrier (a billion calculations per second), reaching a top speed of 1.9 billion calculations per second! The Cray-2 was a completely new design, great for problems needing lots of memory.

Developing the software for these supercomputers was very expensive. In the 1980s, the cost of software at Cray became as high as the cost of the hardware. This led to a shift from Cray's own operating system to UNICOS, which was based on Unix.

The Cray Y-MP, released in 1988, was an improved version of the X-MP. It could have eight processors, each doing 333 million calculations per second. Seymour Cray later tried to use a new material called gallium arsenide for his next computer, the Cray-3, but it didn't work out. He sadly passed away in 1996 before he could finish his work on a new type of supercomputer that used many processors working together.

Massive Processing: The 1990s

The Cray-2 from the 1980s had only a handful of processors. But in the 1990s, supercomputers started to appear with thousands of processors! Also, Japanese companies began building their own supercomputers, some inspired by the Cray-1.

In 1989, NEC Corporation announced the SX-3/44R. A year later, a model with four processors became the fastest in the world. However, in 1994, Fujitsu's Numerical Wind Tunnel supercomputer took the top spot. It used 166 processors and could do 1.7 billion calculations per second per processor. In 1996, the Hitachi SR2201 achieved 600 billion calculations per second by using 2048 processors connected in a special way.

Around the same time, the Intel Paragon could have between 1000 and 4000 processors. It was ranked the fastest in the world in 1993. The Paragon connected its processors using a fast two-dimensional mesh, allowing them to work on different parts of a problem and communicate with each other. By 1995, Cray was also making supercomputers with many processors, like the Cray T3E, which had over 2,000 processors.

The design of the Paragon led to the Intel ASCI Red supercomputer in the United States. This machine was the fastest in the world at the end of the 20th century. It also used a mesh design with over 9,000 computing parts. What's cool is that it used regular Pentium Pro processors, just like those found in everyday personal computers! ASCI Red was the first system to break the 1 teraflop barrier in 1996, eventually reaching 2 teraflops (two trillion calculations per second).

Supercomputing in the 21st Century

The first ten years of the 21st century saw huge progress in supercomputing. Supercomputers became much more efficient, meaning they used less power for the amount of work they did. For example, the Cray C90 used 500 kilowatts of power in 1991. By 2003, the ASCI Q used 3,000 kilowatts but was 2,000 times faster!
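
The numbers in this comparison can be checked with a little arithmetic. The short Python sketch below uses only the figures quoted above. The variable names are made up for this example, and the result is a rough estimate rather than an official measurement.

```python
# Figures quoted in the paragraph above.
c90_power_kw = 500        # Cray C90, 1991
asci_q_power_kw = 3000    # ASCI Q, 2003
speedup = 2000            # ASCI Q was about 2,000 times faster

# How much more power did the newer machine use?
power_ratio = asci_q_power_kw / c90_power_kw

# Speed gained divided by extra power used = gain in work done per kilowatt.
efficiency_gain = speedup / power_ratio

print(f"ASCI Q used {power_ratio:.0f} times the power")
print(f"Work done per kilowatt improved about {efficiency_gain:.0f}-fold")
```

So even though the ASCI Q used six times as much power, it did roughly 333 times more work for every kilowatt.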

In 2002, the Earth Simulator supercomputer, built by NEC in Japan, reached 35.9 teraflops. It used 640 parts, each with eight special processors. To give you an idea, a single Nvidia RTX 3090 graphics card today can do about the same amount of work!

The IBM Blue Gene supercomputer design became very popular. Many supercomputers on the TOP500 list (a list of the world's fastest supercomputers) used this design. The Blue Gene approach focuses on using many processors that don't use a lot of power. This means they can be cooled by air, and you can use a huge number of them, sometimes over 60,000!

China has made very fast progress in supercomputing. In 2003, China was 51st on the TOP500 list. By 2010, they had the fastest supercomputer in the world, the 2.5 petaflop Tianhe-I!

In July 2011, Japan's 8.1 petaflop K computer became the fastest. It used over 60,000 processors. The fact that the K computer was more than 200 times faster than the Earth Simulator (which was once the fastest) shows how quickly supercomputing technology is growing worldwide!

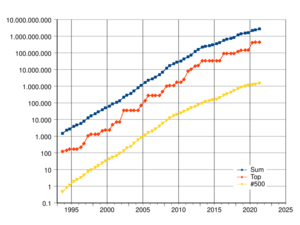

By 2018, Summit in the USA became the world's most powerful supercomputer, reaching 200 petaFLOPS. In 2020, Japan took the top spot again with the Fugaku supercomputer, which could do 442 petaFLOPS. And in 2022, the Frontier supercomputer in the USA became the first to break the exaflop barrier, reaching 1.1 exaFLOPS (a billion billion calculations per second)!
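
All these unit names (mega, giga, tera, peta, exa) are steps of one thousand. The Python sketch below shows one way to compare two machines from this article. The helper names `to_flops` and `times_faster` are made up for this example, and the speeds are the ones quoted in the text: three megaFLOPS for the CDC 6600 and 1.1 exaFLOPS for Frontier.

```python
# Each prefix is 1,000 times bigger than the one before it.
PREFIXES = {
    "mega": 10**6,   # million
    "giga": 10**9,   # billion
    "tera": 10**12,  # trillion
    "peta": 10**15,  # quadrillion
    "exa": 10**18,   # a billion billion
}


def to_flops(value, prefix):
    """Convert a speed such as (3, 'mega') into plain calculations per second."""
    return value * PREFIXES[prefix]


def times_faster(new, old):
    """How many times faster is the `new` machine than the `old` one?"""
    return to_flops(*new) / to_flops(*old)


cdc_6600 = (3, "mega")    # 1964
frontier = (1.1, "exa")   # 2022

print(f"{times_faster(frontier, cdc_6600):.2e}")  # prints 3.67e+11
```

By this rough estimate, Frontier is about 370 billion times faster than the CDC 6600.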

Fastest Supercomputers Through the Years

This table shows the supercomputers that have been at the very top of the TOP500 list since 1993. The "Peak speed (Rmax)" column is the highest speed each machine reached on the benchmark test used for the list.

| Year | Supercomputer | Peak speed (Rmax) | Power efficiency (GFLOPS per Watt) | Location |
|---|---|---|---|---|
| 1993 | Fujitsu Numerical Wind Tunnel | 124.50 GFLOPS | | National Aerospace Laboratory, Tokyo, Japan |
| 1993 | Intel Paragon XP/S 140 | 143.40 GFLOPS | | DoE-Sandia National Laboratories, New Mexico, USA |
| 1994 | Fujitsu Numerical Wind Tunnel | 170.40 GFLOPS | | National Aerospace Laboratory, Tokyo, Japan |
| 1996 | Hitachi SR2201/1024 | 220.40 GFLOPS | | University of Tokyo, Japan |
| 1996 | Hitachi CP-PACS/2048 | 368.20 GFLOPS | | University of Tsukuba, Tsukuba, Japan |
| 1997 | Intel ASCI Red/9152 | 1.338 TFLOPS | | DoE-Sandia National Laboratories, New Mexico, USA |
| 1999 | Intel ASCI Red/9632 | 2.3796 TFLOPS | | DoE-Sandia National Laboratories, New Mexico, USA |
| 2000 | IBM ASCI White | 7.226 TFLOPS | | DoE-Lawrence Livermore National Laboratory, California, USA |
| 2002 | NEC Earth Simulator | 35.860 TFLOPS | | Earth Simulator Center, Yokohama, Japan |
| 2004 | IBM Blue Gene/L | 70.720 TFLOPS | | DoE/IBM Rochester, Minnesota, USA |
| 2005 | IBM Blue Gene/L | 136.800 TFLOPS | | DoE/U.S. National Nuclear Security Administration, Lawrence Livermore National Laboratory, California, USA |
| 2005 | IBM Blue Gene/L | 280.600 TFLOPS | | DoE/U.S. National Nuclear Security Administration, Lawrence Livermore National Laboratory, California, USA |
| 2007 | IBM Blue Gene/L | 478.200 TFLOPS | | DoE/U.S. National Nuclear Security Administration, Lawrence Livermore National Laboratory, California, USA |
| 2008 | IBM Roadrunner | 1.026 PFLOPS | | DoE-Los Alamos National Laboratory, New Mexico, USA |
| 2008 | IBM Roadrunner | 1.105 PFLOPS | 0.445 | DoE-Los Alamos National Laboratory, New Mexico, USA |
| 2009 | Cray Jaguar | 1.759 PFLOPS | | DoE-Oak Ridge National Laboratory, Tennessee, USA |
| 2010 | Tianhe-IA | 2.566 PFLOPS | 0.635 | National Supercomputing Center, Tianjin, China |
| 2011 | Fujitsu K computer | 10.510 PFLOPS | 0.825 | Riken, Kobe, Japan |
| 2012 | IBM Sequoia | 16.320 PFLOPS | | Lawrence Livermore National Laboratory, California, USA |
| 2012 | Cray Titan | 17.590 PFLOPS | | Oak Ridge National Laboratory, Tennessee, USA |
| 2013 | NUDT Tianhe-2 | 33.860 PFLOPS | 2.215 | Guangzhou, China |
| 2016 | Sunway TaihuLight | 93.010 PFLOPS | 6.051 | Wuxi, China |
| 2018 | IBM Summit | 122.300 PFLOPS | 14.668 | DoE-Oak Ridge National Laboratory, Tennessee, USA |
| 2020 | Fugaku | 415.530 PFLOPS | 15.418 | Riken, Kobe, Japan |
| 2022 | Frontier | 1.1 EFLOPS | 52.227 | Oak Ridge Leadership Computing Facility, USA |
| late-2022 (planned) | Aurora | >2 EFLOPS (theoretical peak) | | Intel, Cray, Argonne National Laboratory, USA |
| 2022 | AI Research SuperCluster | 5 AI EFLOPS | | Meta Platforms, USA |
