Global catastrophic risk facts for kids

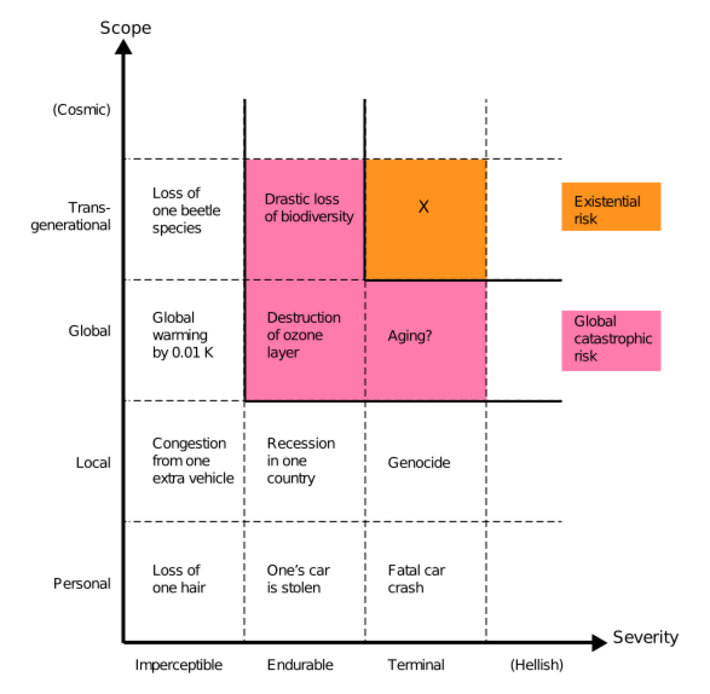

A global catastrophic risk or doomsday scenario is a possible event that could seriously harm people all over the world. It might even damage or destroy modern civilization. An event that could cause human extinction (when all humans die out) is called an "existential risk." So is an event that could permanently and drastically limit humanity's future.

Since the early 2000s, many research groups and universities have started to study these big risks. They look for ways to prevent them or lessen their impact.


Understanding Global Catastrophic Risks

What are Global Catastrophic Risks?

A global catastrophic risk is a danger that could cause "serious damage to human well-being on a global scale." It's a big problem that affects the whole world.

Humans have faced huge disasters before. Some, like the Black Death plague, killed many people (about a third of Europe's population) but were mostly local. Others, like the 1918 influenza (flu) pandemic, spread around the world but were less deadly: they killed about 3-6% of the world's population. Most global catastrophic risks would not kill most people on Earth. Even if one did, nature and humanity would likely recover over time. This is what makes them different from existential risks.

What are Existential Risks?

Existential risks are even more serious. They are "risks that threaten the destruction of humanity's long-term potential." If an existential risk happened, it would either wipe out humanity completely or trap us in a terrible, unchangeable state. These risks are not just global; they are also final and permanent. This means there's no coming back, and it affects all future generations.

Beyond Extinction: Other Existential Dangers

While extinction is the clearest existential risk, there are other ways humanity's future could be destroyed. These include a total and unrecoverable collapse of civilization. Imagine if society fell apart so badly that it could never rebuild. This would be an existential catastrophe, even if some people survived.

Another danger is an unrecoverable dystopia. This is a future where humanity is stuck under a terrible, controlling government with no hope of freedom. Think of the book Nineteen Eighty-Four. Before such a disaster, humanity has many bright futures to choose from. After it, humanity is trapped forever in a bad state.

Possible Sources of Global Risks

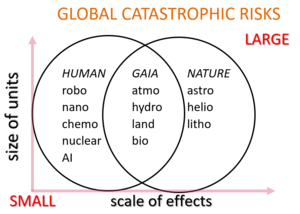

Global catastrophic risks can come from two main places: things caused by humans (anthropogenic) or things that happen naturally (non-anthropogenic).

Natural Risks

Natural risks are not caused by people. They include:

- An asteroid or comet hitting Earth.

- A supervolcanic eruption.

- A natural pandemic (a worldwide disease outbreak).

- A deadly gamma-ray burst from space.

- A geomagnetic storm from the Sun that could destroy electronics.

- Natural long-term climate change.

- Hostile extraterrestrial life (aliens).

- The Sun turning into a red giant star, which may swallow Earth (billions of years from now).

Human-Caused Risks

Human-caused risks come from our actions. They include dangers from technology, how we govern ourselves, and climate change.

- Technology risks:

  * Artificial intelligence (AI) that doesn't share human goals.
  * Dangers from biotechnology (like creating new diseases).
  * Problems with nanotechnology (tiny machines).

- Governance risks:

  * Not having good global governance (how countries work together), which can lead to the dangers below.
  * Global war and nuclear holocaust.
  * Biological warfare and bioterrorism using genetically modified organisms.
  * Cyberwarfare and cyberterrorism that destroy important systems like the electrical grid.
  * Radiological warfare using dirty bombs.

- Other human-caused risks:

  * Climate change.
  * Environmental degradation (damaging the environment).
  * Extinction of species.
  * Famine (widespread hunger) due to unfair food distribution.
  * Human overpopulation or underpopulation.
  * Crop failures and non-sustainable agriculture.

Challenges in Studying These Risks

Studying global catastrophic risks is hard because we can't experiment with them. For example, we can't test a nuclear war. Also, many risks change quickly as technology and world politics change. It's also tough to predict the future far ahead, especially for human-caused risks that depend on complex human systems.

No Past Examples

Humanity has never faced an existential catastrophe. If one happened, it would be completely new. This makes it very hard to predict. We can't look at past events to guess how likely they are. This is because if a civilization was wiped out, there would be no one left to observe it.

To understand how a global civilization might collapse, we can look at past local collapses, like the Roman Empire. However, history also shows that societies can be quite strong. For example, Medieval Europe survived the Black Death, even though it lost 25% to 50% of its people.

Why We Don't Invest Enough

There are economic reasons why not much effort goes into reducing existential risks. It's a "global public good". This means everyone benefits, but no single country or person wants to pay for all of it. Even if a big country invests in preventing a disaster, it only gets a small part of the benefit. Most of the benefits would go to future generations, and there's no way for them to pay for it now.

Thinking Traps

Many cognitive biases (thinking traps) can affect how people judge these risks. For example, scope insensitivity means people don't always understand how bad something is when the numbers are very large. People might care about saving 2,000 birds as much as 200,000 birds.

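Eliezer Yudkowsky argues that very large numbers, like 500 million deaths, or completely different scenarios, like the extinction of humanity, seem to trigger a different mode of thinking. People who would never dream of hurting a child might hear about existential risk and say, "Well, maybe the human species doesn't really deserve to survive."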

All past predictions of human extinction have been wrong. This can make new warnings seem less believable. But Nick Bostrom argues that just because it hasn't happened yet doesn't mean it won't.

How to Prepare and Prevent

Layers of Defense

We can think of preparing for these risks like building layers of defense:

- Prevention: Stopping a catastrophe from happening in the first place. For example, preventing new infectious diseases from spreading.

- Response: Stopping a small disaster from becoming a global one. For example, preventing a small nuclear fight from turning into a full nuclear war.

- Resilience: Making humanity strong enough to survive global catastrophes. For example, having enough food during a "nuclear winter."

Human extinction is most likely when all three defenses are weak.

Funding Research

Some experts say that not enough money is spent on researching existential risks. Nick Bostrom pointed out that more research has been done on Star Trek or snowboarding than on these huge dangers.

Survival Planning

Some experts suggest building self-sufficient settlements in remote places on Earth. These would be designed to survive a global disaster. Economist Robin Hanson thinks a refuge for just 100 people could greatly improve humanity's chances.

Storing food around the world has been suggested, but it would be very expensive. A related backup plan is the Svalbard Global Seed Vault. It is buried inside a mountain on an Arctic island and is designed to hold up to 2.5 billion seeds from more than 100 countries, to help save the world's crops.

If society keeps working and Earth remains livable, we might even be able to feed everyone during a long period without sunlight. This would need a lot of planning. Ideas include growing mushrooms on dead plants or turning natural gas into food using bacteria.

Global Governance and Cooperation

Not having enough global governance (ways for countries to work together) creates social and political risks. Governments, businesses, and people are worried that we don't have good ways to deal with these risks. Countries can work together to understand, prevent, and prepare for global catastrophes.

Climate Emergency Plans

In 2018, the Club of Rome called for more action on climate change and published its Climate Emergency Plan, which proposes ten steps to limit global warming to 1.5 degrees Celsius. In 2019, it published a wider "Planetary Emergency Plan."

Space Colonization

Space colonization is another idea to help humanity survive. By living on other planets, we could improve our chances if something terrible happened on Earth.

Astrophysicist Stephen Hawking believed we should colonize other planets. He thought this would help humanity survive planet-wide events like nuclear war.

Billionaire Elon Musk also believes humanity must become a "multiplanetary species" to avoid extinction. His company, SpaceX, is working on technology to colonize Mars.

Moving the Earth

In a few billion years, the Sun will grow into a red giant and may swallow Earth. We could avoid this by moving Earth farther from the Sun. One idea is to carefully steer comets or asteroids past Earth so that their gravity slowly pulls Earth into a wider orbit. Since the Sun's expansion is slow, roughly one such push every 6,000 years would be enough.

Organizations Working on Global Risks

Many groups are working to understand and reduce global catastrophic risks.

The Bulletin of the Atomic Scientists (started in 1945) is one of the oldest. It studies risks from nuclear war and energy. It's famous for its Doomsday Clock. The Foresight Institute (started in 1986) looks at risks from nanotechnology.

After 2000, more scientists, thinkers, and wealthy people started groups to study global risks.

Independent groups include:

- The Machine Intelligence Research Institute (started 2000) aims to reduce risks from artificial intelligence.

- The Nuclear Threat Initiative (started 2001) works to reduce nuclear, biological, and chemical threats.

- The Lifeboat Foundation (started 2009) funds research to prevent technological disasters.

- The Global Catastrophic Risk Institute (started 2011) researches AI, nuclear war, climate change, and asteroid impacts.

- The Global Challenges Foundation (started 2012) releases yearly reports on global risks.

- The Future of Life Institute (started 2014) works to reduce extreme risks from new technologies.

University groups include:

- The Future of Humanity Institute (started 2005) at Oxford University, which studied humanity's long-term future until it closed in 2024.

- The Centre for the Study of Existential Risk (started 2012) at Cambridge University, which studies AI, biotechnology, global warming, and warfare. Stephen Hawking was an adviser there.

- The Millennium Alliance for Humanity and the Biosphere at Stanford University, which brings together experts on global catastrophe issues.

- The Center for International Security and Cooperation at Stanford, focusing on political cooperation to reduce risks.

- The Center for Security and Emerging Technology at Georgetown University, which researches policy for new technologies like AI.

Other groups are part of governments:

- The World Health Organization (WHO) has a division called Global Alert and Response (GAR) that monitors and responds to worldwide disease outbreaks.

- The United States Agency for International Development (USAID) has a program to prevent and contain natural pandemics.

- The Lawrence Livermore National Laboratory researches issues like biosecurity and counter-terrorism for the US government.

See also

- Apocalyptic and post-apocalyptic fiction

- Artificial intelligence arms race

- Community resilience

- Doomsday cult

- Extreme risk

- Failed state

- Fermi paradox

- Future of Earth

- Future of the Solar System

- Geoengineering

- Global Risks Report

- Great Filter

- Holocene extinction

- Impact event

- List of global issues

- Nuclear proliferation

- Planetary boundaries

- Rare events

- The Sixth Extinction: An Unnatural History

- Societal collapse

- Survivalism

- Tail risk

- The Precipice: Existential Risk and the Future of Humanity

- Timeline of the far future

- Triple planetary crisis

- Ultimate fate of the universe

- World Scientists' Warning to Humanity
