History of medicine in the United States facts for kids

The history of medicine in the United States tells the story of how people have cared for health in America. This goes all the way from the first settlers to today. Early medicine used old folk remedies. Now, modern medicine is very organized and uses science.

Colonial Era Medicine

When settlers first arrived in what is now the United States, a common medical idea was humoral theory. This meant people thought diseases were caused by an imbalance of body fluids. At first, settlers wanted to use only medicines from England. They believed these were best for English bodies.

But settlers faced new diseases. They also found it hard to get English plants and herbs. So, they started using local plants and Native American remedies. Native American medicine often combined herbal treatments with rituals and prayer. Europeans, especially the Spanish, did not like this spiritual part. They saw it as against their religion. Any Native American medical ideas that did not fit humoral theory were called wrong. Tribal healers were sometimes called witches.

In English colonies, settlers often sought help from Native American healers. However, the healers' medical knowledge was still looked down upon. Settlers thought Native Americans did not understand why their treatments worked.

Colonial Disease Challenges

Many new arrivals to the colonies got sick and died. Children also faced high death rates. Malaria was very deadly, especially in the Southern colonies. For example, over a quarter of Anglican missionaries died within five years of arriving in the Carolinas. Children born in the colonies had some protection. They often got milder forms of malaria and survived.

Many babies and young children died from diseases like diphtheria, yellow fever, and malaria. Most sick people turned to local healers and folk remedies. Some used minister-physicians, barber-surgeons, or midwives. A few saw colonial doctors trained in Britain or through apprenticeships. There was little government control over medical care. Public health was not a big focus.

By the 1700s, colonial doctors in cities began to use modern medicine. They followed models from England and Scotland. This led to progress in vaccination, pathology, anatomy, and pharmacology.

There was a big difference in diseases between Native Americans and Europeans. Some viruses, like smallpox, only affect humans. They seem to have been unknown in North America before 1492. Native Americans had no natural protection against these new infections. They suffered terribly from smallpox, measles, malaria, and tuberculosis. Many died even before European settlers arrived in their areas, because the diseases spread through contact with trappers.

Medical Organization in Colonies

The city of New Orleans, Louisiana opened two hospitals in the early 1700s. The Royal Hospital opened in 1722 as a small military clinic. It became important when the Ursuline Sisters took over in 1727. They made it a major public hospital. A new, larger building was built in 1734.

The Charity Hospital opened in 1736. It had many of the same staff. It helped the poorer people who could not afford care at the Royal Hospital.

In most American colonies, medicine was basic for the first few generations. Few skilled British doctors moved to the colonies. The first medical society started in Boston in 1735. In the 1700s, 117 wealthy Americans studied medicine in Edinburgh, Scotland. But most doctors learned by working with other doctors in the colonies.

The Medical College of Philadelphia was founded in 1765. It joined the University of Pennsylvania in 1791. In New York, the medical department of King's College started in 1767. In 1770, it gave out the first American M.D. degree.

Smallpox inoculation was introduced in America between 1716 and 1766. This was before it was widely accepted in Europe. The first medical schools opened in Philadelphia (1765) and New York (1767). The first medical textbook appeared in 1775. Doctors could also easily get British textbooks. The first book of medicines (pharmacopoeia) came out in 1778.

Europeans had some protection from smallpox due to past exposure. But Native Americans did not. Their death rates were so high that one outbreak could destroy a small tribe.

Doctors in port cities knew they needed to keep sick sailors and passengers separate. Special "pest houses" were built for them. These were in Boston (1717), Philadelphia (1742), Charleston (1752), and New York (1757). The first general hospital opened in Philadelphia in 1752.

19th Century Medicine

In the early 1800s, America wanted to be different from Britain. This included medical practices. European treatments at the time included things like blistering and bloodletting. People wanted less harmful options.

Samuel Thomson created his own medical system, Thomsonianism, in the early 1800s. It became very popular in New England. Thomson said his system was all his own. But it actually combined humoral and Native American ideas. Thomsonianism focused on keeping the body warm. It used various herbal treatments to do this. His most used herb was Indian Tobacco. This was a common Native American medicinal plant. Thomson said he found the herb himself. But he also said an old lady in his village introduced him to it. Some historians think this lady was Native American. Thomson might have hidden this due to the negative views of Native Americans at the time.

Civil War Medicine

During the American Civil War (1861–65), more soldiers died from disease than from battle. Even more were temporarily unable to fight due to wounds, illness, and accidents. Conditions were very bad in the Confederacy. Doctors and medical supplies were hard to find there. The war greatly changed American medicine. It affected surgery, hospitals, nursing, and research.

Hygiene in army camps was poor, especially early in the war. Men who had rarely left home were suddenly living with thousands of strangers. This led to outbreaks of childhood diseases. These included chicken pox, mumps, whooping cough, and especially measles. Fighting in the South brought new and dangerous diseases. Soldiers got diarrhea, dysentery, typhoid fever, and malaria. People often did not know how diseases spread. Surgeons gave coffee, whiskey, and quinine. Bad weather, dirty water, poor winter shelters, and unclean camp hospitals caused many illnesses.

Such poor conditions had been common in wars for a long time, and they were even worse for the Confederate army. The Union army responded by building hospitals in every state. What was new for the Union was the rise of skilled medical leaders. They took action, especially in the larger United States Army Medical Department. The United States Sanitary Commission, a new private group, also helped. Many other new groups helped soldiers with their health and spirits. These included the United States Christian Commission. Smaller private groups like the Women's Central Association of Relief also helped.

These groups asked the public for money. This raised awareness and millions of dollars. Thousands of volunteers worked in hospitals and rest homes. The famous poet Walt Whitman was one of them. Frederick Law Olmsted, a well-known landscape architect, was a very effective leader of the Sanitary Commission.

States could use their own money to support their troops. Ohio did this. After the terrible battle of Shiloh in April 1862, Ohio sent three steamboats. These were floating hospitals with doctors, nurses, and supplies. Ohio's fleet grew to eleven hospital ships. The state also set up 12 offices in major travel hubs. These helped Ohio soldiers moving around. The U.S. Army learned many lessons. In 1886, it created the Hospital Corps.

The Sanitary Commission collected huge amounts of health data. This showed the need for better ways to store and search for information. John Shaw Billings (1838–1913) was a pioneer in this. He was a senior surgeon in the war. Billings built the Library of the Surgeon General's Office. This is now the United States National Library of Medicine. It is key to modern medical information systems. Billings found a way to analyze medical data. He turned it into numbers and punched them onto cardboard cards. His assistant Herman Hollerith developed this system. This was the start of the computer punch card system. It was used for statistical data until the 1970s.

Modern Medicine Advances

After 1870, the Nightingale way of training nurses became popular. Linda Richards (1841–1930) studied in London. She became the first professionally trained American nurse. She started nursing programs in the U.S. and Japan. She also created the first system for keeping medical records for hospital patients.

After the American Revolution, the U.S. was slow to use new European medical ideas. But in the late 1800s, it adopted germ theory and science-based practices. This happened as medical education changed. Historian Elaine G. Breslaw said early American medical schools were like "diploma mills." She credits a large gift in 1889 to Johns Hopkins Hospital. This allowed it to lead the change to science-based medicine. Johns Hopkins started several modern hospital practices. These include residency (training for new doctors) and rounds (when doctors discuss patients).

In 1910, the Flexner Report was published. This report studied medical education. It called for stricter standards for medical schools. It said they should use the scientific approach like universities, including Johns Hopkins.

World War II and Nursing

Nursing in WWII

The nursing profession changed a lot during World War II. Many nurses wanted to join the Army and Navy. A higher percentage of nurses volunteered than people in any other job in America.

The public saw nurses as heroes during the war. Hollywood movies like Cry "Havoc" showed them bravely helping under enemy fire. Some nurses were captured by the Japanese. But most were kept out of danger and worked on the home front. The medical services were huge, with over 600,000 soldiers. There were ten enlisted men for every nurse. Almost all doctors were men. Women doctors were only allowed to examine patients from the Women's Army Corps.

Women in Medicine

In the colonial era, women played a big role in healthcare. This was especially true for midwives and childbirth. Local women healers used herbs and folk remedies to treat friends and neighbors. Housekeeping guides often included medical advice. Nursing was seen as a woman's job. Babies were born at home without a doctor until well into the 1900s. This made the midwife a very important person in healthcare.

As medicine became more professional in the early 1800s, there were efforts to limit women's roles. Untrained women were kept out of new places like hospitals and medical schools.

Women Doctors

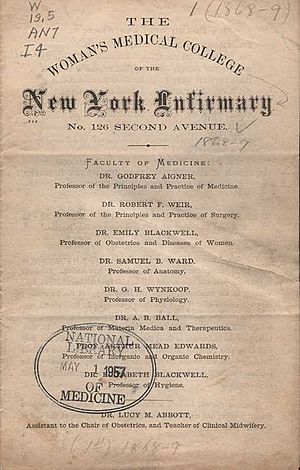

In 1849, Elizabeth Blackwell (1821–1910) became the first woman to earn a medical degree in America. She was an immigrant from England. She graduated at the top of her class from Geneva Medical College in New York. In 1857, she and her sister Emily, with Marie Zakrzewska, started the New York Infirmary for Women and Children. This was the first American hospital run by women. It was also the first hospital just for women and children.

Blackwell believed medicine could help improve society. A younger pioneer, Mary Putnam Jacobi (1842–1906), focused on curing diseases. Blackwell felt women would succeed in medicine because of their caring nature. But Jacobi believed women should be equal to men in all medical fields. In 1982, kidney doctor Leah Lowenstein became the first woman dean of a medical school that taught both men and women. This was at Jefferson Medical College.

Nursing as a Profession

Nursing became a professional career in the late 1800s. This opened up a new middle-class job for talented young women. They came from all backgrounds. The School of Nursing at Detroit's Harper Hospital, started in 1884, was a national leader. Its graduates worked in hospitals, public health, and as private nurses. They also volunteered in military hospitals during wars.

Major religious groups helped set up hospitals in many cities. Several Catholic groups of nuns focused on nursing. Most lay women (non-nuns) got married and stopped working. Or they became private nurses for wealthy families. But Catholic sisters had lifelong careers in hospitals. This helped hospitals like St. Vincent's Hospital in New York. Nurses from the Sisters of Charity started working there in 1849. Patients of all backgrounds were welcome. But most came from the lower-income Catholic community.