Speech synthesis facts for kids

Speech synthesis is when a computer creates sounds that sound like human speech. A computer system that does this is called a speech synthesizer. It can be a special program (software) or a physical device (hardware). A text-to-speech (TTS) system takes normal written words and turns them into spoken words. Other systems can turn special sound symbols into speech. The opposite of this is speech recognition, where computers understand what people say.

Synthesized speech can be made by putting together small pieces of recorded speech. These pieces are stored in a computer's memory. Some systems store very small sounds, like single speech sounds (called phones) or pairs of sounds (called diphones). These systems can make many different words, but sometimes the speech might not sound very clear. For special uses, like announcements, storing whole words or sentences can make the speech sound very good. Another way to make speech is by using a computer model of the human vocal tract (like your throat and mouth). This method creates a completely "synthetic" voice.

We judge how good a speech synthesizer is by how much it sounds like a real human voice. We also check how easy it is to understand. A good text-to-speech program helps people who cannot see well or have reading difficulties to listen to written words on a computer. Many computer operating systems have included speech synthesizers since the early 1990s.

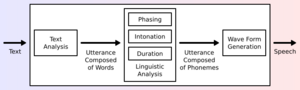

A text-to-speech system has two main parts: a front-end and a back-end. The front-end does two big jobs. First, it changes raw text with numbers or abbreviations into full words. This is like turning "123" into "one hundred twenty-three." This step is often called text normalization. Second, the front-end figures out the sounds for each word. It also breaks the text into natural speaking parts, like phrases or sentences. This process of finding the sounds for words is called text-to-phoneme conversion. The sounds and speaking rhythm information are then sent to the back-end. The back-end, also called the synthesizer, turns these sound instructions into actual sound.
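
The split between the front-end and the back-end can be sketched in a few lines of Python. This is only a toy. The tables `ABBREVIATIONS`, `DIGITS`, and `LEXICON` are hypothetical stand-ins for the huge dictionaries a real system uses, and `back_end` returns a list of sound labels instead of real audio.

```python
import re

# Hypothetical, tiny tables; real systems use far larger ones.
ABBREVIATIONS = {"Dr.": "doctor"}
DIGITS = ["zero", "one", "two", "three", "four",
          "five", "six", "seven", "eight", "nine"]
LEXICON = {  # word -> sounds (ARPAbet-style phoneme symbols)
    "hello": ["HH", "AH", "L", "OW"],
    "doctor": ["D", "AA", "K", "T", "ER"],
    "smith": ["S", "M", "IH", "TH"],
    "has": ["HH", "AE", "Z"],
    "two": ["T", "UW"],
    "cats": ["K", "AE", "T", "S"],
}

def normalize(text):
    """Front-end step 1 (text normalization): expand abbreviations and single digits."""
    words = []
    for token in text.split():
        if token in ABBREVIATIONS:
            words.append(ABBREVIATIONS[token])
        elif token.isdigit() and len(token) == 1:
            words.append(DIGITS[int(token)])
        else:
            words.append(token)
    return " ".join(words)

def front_end(text):
    """Normalize, split into phrases at punctuation, then look up each word's sounds."""
    phrases = [p.strip() for p in re.split(r"[,.;:!?]", normalize(text))]
    return [[LEXICON[w.lower()] for w in phrase.split()] for phrase in phrases if phrase]

def back_end(phrases):
    """Stand-in synthesizer: a real back-end would output audio samples."""
    units = []
    for phrase in phrases:
        for phonemes in phrase:
            units.extend(phonemes)
        units.append("pau")  # a short pause at the end of each phrase
    return units

print(back_end(front_end("Hello, Dr. Smith has 2 cats.")))
```

A word that is missing from `LEXICON` would raise a `KeyError` here. Handling such words is the job of the text-to-phoneme rules.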

History of Talking Machines

Long before electronic devices, people tried to build machines that could talk like humans. There were old stories about "Brazen Heads" that could speak, involving people like Pope Silvester II and Roger Bacon.

In 1779, a German-Danish scientist named Christian Gottlieb Kratzenstein won a prize. He built models of the human vocal tract that could make five long vowel sounds. Later, in 1791, Wolfgang von Kempelen from Hungary described his "acoustic-mechanical speech machine." This machine used bellows and had models of the tongue and lips. It could make both consonant and vowel sounds. Other inventors like Charles Wheatstone and Joseph Faber also built similar "speaking machines."

In the 1930s, Bell Labs created the vocoder. This machine could break down speech into its basic tones. From this work, Homer Dudley made a voice synthesizer called The Voder. He showed it at the 1939 New York World's Fair. You could play it like a keyboard to make speech.

In the late 1940s, Franklin S. Cooper and his team at Haskins Laboratories built the Pattern playback. This machine could turn pictures of speech sounds (called spectrograms) back into actual sound. Using this device, scientists learned how we hear different speech sounds.

Early Electronic Talking Devices

The first computer-based speech synthesis systems appeared in the late 1950s. A famous moment happened in 1961 at Bell Labs. Physicist John Larry Kelly, Jr and Louis Gerstman used an IBM 704 computer to make speech. Kelly's synthesizer sang the song "Daisy Bell," with music added by Max Mathews. Arthur C. Clarke, who wrote 2001: A Space Odyssey, was visiting Bell Labs at the time. He was so impressed by the demonstration that he used it in the screenplay for the film: the computer HAL 9000 sings the same song as it is being shut down. In 1968, Noriko Umeda and others at the Electrotechnical Laboratory in Japan made the first general English text-to-speech system.

In 1975, a system called MUSA was released. It was one of the first speech synthesis systems: a stand-alone computer with special software that could read Italian. A later version could even sing in Italian without any music.

In 1978, Texas Instruments LPC Speech Chips were used in the Speak & Spell toys. These toys helped children learn to spell by saying words aloud.

In the 1980s and 1990s, systems like DECtalk and the Bell Labs system became popular. The Bell Labs system was one of the first that could work with many languages.

Talking electronic devices became available in the 1970s. One of the first was the Speech+ calculator for blind people in 1976. Educational toys like the Texas Instruments Speak & Spell came out in 1978. In 1979, Fidelity released a talking electronic chess computer. The first video game with speech synthesis was Stratovox in 1980. The game Berzerk also had speech in 1980. The Milton Bradley Company made the first multi-player electronic game with voice, Milton, in the same year.

Early electronic speech synthesizers often sounded like robots and were hard to understand. The quality has gotten much better, but even today, computer voices usually sound different from real human speech.

Most synthesized voices sounded male until 1990. That's when Ann Syrdal at AT&T Bell Laboratories created a female voice.

How Voices Are Made (Synthesizer Technologies)

The most important things about a speech synthesis system are how natural it sounds and how intelligible (easy to understand) it is. Naturalness means how much it sounds like a real human. Intelligibility means how clearly you can understand the words. The best speech synthesizer is both natural and easy to understand.

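The two most common ways to create synthetic speech sounds are concatenative synthesis and formant synthesis. Each method has its good and bad points, and the way a system will be used often decides which method is chosen. Other methods, such as articulatory, HMM-based, and deep learning-based synthesis, are described below.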

Concatenation Synthesis

Concatenative synthesis works by putting together (concatenating) pieces of recorded speech. Generally, this method makes the most natural-sounding speech. However, sometimes there can be small, noticeable glitches where the pieces are joined. There are three main types of concatenative synthesis.
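
One common way to hide the glitch at a join is to blend the end of one piece into the start of the next (a crossfade). The sketch below assumes each recorded piece is just a Python list of sample values. The `overlap` length is a made-up setting, and real systems also match pitch and loudness at the joins.

```python
def concatenate(units, overlap=4):
    """Join recorded pieces, crossfading `overlap` samples at each seam."""
    output = list(units[0])
    for unit in units[1:]:
        n = min(overlap, len(output), len(unit))
        for i in range(n):
            weight = (i + 1) / (n + 1)   # the new piece fades in, the old one fades out
            j = len(output) - n + i
            output[j] = (1 - weight) * output[j] + weight * unit[i]
        output.extend(unit[n:])          # the rest of the new piece is copied as-is
    return output

# Two flat "recordings" at different levels: the seam now ramps instead of jumping.
print(concatenate([[1.0] * 6, [0.0] * 6], overlap=2))
```

Blending makes the result shorter by `overlap` samples at each join, because those samples are shared by both pieces.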

Unit Selection Synthesis

Unit selection synthesis uses very large collections of recorded speech. When the system is made, each recorded sound is broken down into small parts. These parts can be single sounds, pairs of sounds, syllables, words, or even whole sentences. The computer then creates an index of all these sound units. This index includes details like the pitch and how long each sound lasts. When the system needs to say something, it picks the best chain of sound units from its collection.
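
One common way to find "the best chain" is dynamic programming (a Viterbi search). Each candidate unit gets a target cost (how far it is from what we want), and each pair of neighbors gets a join cost (how badly they fit together). In this sketch, the `Unit` type, both cost functions, and all the numbers are hypothetical, and only pitch is compared.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Unit:
    label: str    # which sound this is, e.g. a diphone name
    pitch: float  # average pitch of the recording, in Hz

def target_cost(unit, wanted_pitch):
    return abs(unit.pitch - wanted_pitch)   # how far from the pitch we want

def join_cost(left, right):
    return abs(left.pitch - right.pitch)    # a big pitch jump sounds like a glitch

def select_units(candidates, wanted_pitches):
    """Pick one unit per position so the total target + join cost is smallest."""
    # best[i][k] = (cheapest total cost ending at candidates[i][k], index of previous unit)
    best = [[(target_cost(u, wanted_pitches[0]), None) for u in candidates[0]]]
    for i in range(1, len(candidates)):
        row = []
        for u in candidates[i]:
            cost, back = min((best[i - 1][k][0] + join_cost(prev, u), k)
                             for k, prev in enumerate(candidates[i - 1]))
            row.append((cost + target_cost(u, wanted_pitches[i]), back))
        best.append(row)
    # Start from the cheapest last unit and follow the back-pointers.
    k = min(range(len(best[-1])), key=lambda j: best[-1][j][0])
    chain = []
    for i in range(len(candidates) - 1, -1, -1):
        chain.append(candidates[i][k])
        k = best[i][k][1]
    return chain[::-1]

candidates = [
    [Unit("h-e", 100), Unit("h-e", 140)],
    [Unit("e-l", 105), Unit("e-l", 150)],
    [Unit("l-o", 110), Unit("l-o", 90)],
]
print([u.pitch for u in select_units(candidates, [100, 110, 110])])
```

The search looks at every candidate once per neighbor, so it stays fast even when the collection holds many recordings of each sound.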

Unit selection sounds the most natural because it changes the recorded speech very little. The best unit-selection systems can sound almost like real human voices. But, to sound very natural, these systems need huge amounts of recorded speech, sometimes many hours long.

Diphone Synthesis

Diphone synthesis uses a much smaller collection of speech. It stores only the diphones (transitions from one sound to the next) that occur in a language. For example, Spanish has about 800 diphones, and German has about 2500. The system keeps just one recording of each diphone. When it needs to speak, it uses signal processing to add the right prosody (like pitch and rhythm) to these small sound units. Diphone synthesis can have some sound glitches and might sound a bit robotic.
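
Finding which diphones a word needs is simple: take every neighboring pair of sounds. This sketch marks silence with `_` and uses made-up sound symbols. A real system would then look each diphone up in its recorded inventory and adjust its pitch and timing.

```python
def to_diphones(phonemes):
    """Cut a sound sequence into diphones: each one spans a pair of neighboring sounds."""
    padded = ["_"] + phonemes + ["_"]   # "_" stands for silence before and after the word
    return [f"{a}-{b}" for a, b in zip(padded, padded[1:])]

print(to_diphones(["k", "a", "t"]))   # a word like "cat"
# ['_-k', 'k-a', 'a-t', 't-_']
```

Because each diphone is a pair of sounds, the number a language needs grows roughly with the square of its number of sounds (though not every pair occurs), which is why the counts run into the hundreds or thousands.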

Domain-Specific Synthesis

Domain-specific synthesis puts together pre-recorded words and phrases. This is used for systems where the things they need to say are limited to a specific area. Good examples are announcements for public transport or weather reports. This technology is simple to use and has been around for a long time. You can find it in talking clocks and calculators. The speech can sound very natural because the types of sentences are limited.
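
A talking clock shows how domain-specific synthesis works: every phrase it can say is built from a small, fixed set of recordings. The file names below are hypothetical, and the sketch only decides which clips to play, not how to play them.

```python
# Hypothetical clip names: one recording for each word the clock can ever say.
HOUR_WORDS = ["twelve", "one", "two", "three", "four", "five", "six",
              "seven", "eight", "nine", "ten", "eleven"]

def clock_clips(hour, minute):
    """Return the pre-recorded clips to play for a time such as 15:15."""
    clips = ["the_time_is.wav", HOUR_WORDS[hour % 12] + ".wav"]   # 24-hour input
    if minute == 0:
        clips.append("o_clock.wav")
    else:
        clips.append(f"minute_{minute:02d}.wav")  # e.g. a recording of "fifteen"
    return clips

print(clock_clips(15, 15))
# ['the_time_is.wav', 'three.wav', 'minute_15.wav']
```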

However, these systems can only say the words and phrases they have been pre-programmed with. They cannot say anything new. Also, how words blend together in natural speech can be a problem. For example, in English, the "r" sound in "clear" is often only said if the next word starts with a vowel (like "clear out"). Simple word-joining systems cannot do this unless they are made more complex.
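
To handle the "clear out" problem, a word-joining system can store two recordings of such a word and pick one by looking at the next word. This is a hypothetical sketch: the file names are made up, and it checks only the next word's first letter, which is a rough guess at whether it starts with a vowel sound.

```python
VOWEL_LETTERS = set("aeiou")

def pick_recording(word, next_word):
    """Choose between two hypothetical recordings of a word that ends in "r"."""
    if word.endswith("r") and next_word and next_word[0].lower() in VOWEL_LETTERS:
        return f"{word}_with_r.wav"   # "clear out": the r is pronounced
    return f"{word}.wav"              # "clear day" or end of sentence: the r is dropped

print(pick_recording("clear", "out"), pick_recording("clear", "day"))
```

Letters can mislead: "hour" starts with a vowel sound but with the letter h, so this simple check would pick the wrong recording.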

Formant Synthesis

Formant synthesis does not use recorded human speech. Instead, it creates speech sounds using a computer model of how sound is made. It changes things like fundamental frequency (pitch) and noise levels over time to make artificial speech. This method often creates speech that sounds artificial or robotic.
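
A classic way to build a formant synthesizer is the source-filter idea: make a buzzing source (a train of pulses at the pitch, like the vocal cords) and pass it through filters called resonators, one for each formant (a strong resonance of the vocal tract). The sketch below uses a standard second-order digital resonator. The formant frequencies and bandwidths are rough, illustrative values for an "ah"-like vowel, not settings from any particular product.

```python
import math

def resonator(signal, freq, bandwidth, rate):
    """Second-order digital resonator: boosts sound near `freq` (Hz)."""
    r = math.exp(-math.pi * bandwidth / rate)
    b = 2 * r * math.cos(2 * math.pi * freq / rate)
    c = -r * r
    a = 1 - b - c                 # scales the filter so a steady input keeps its level
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y1, y2 = y, y1
    return out

def synthesize_vowel(formants, pitch=120.0, rate=8000, seconds=0.5):
    """Pulse train at `pitch` Hz, filtered by one resonator per (frequency, bandwidth)."""
    period = int(rate / pitch)
    sound = [1.0 if i % period == 0 else 0.0 for i in range(int(rate * seconds))]
    for freq, bandwidth in formants:     # cascade: each filter feeds the next
        sound = resonator(sound, freq, bandwidth, rate)
    peak = max(abs(s) for s in sound) or 1.0
    return [s / peak for s in sound]     # scale into the range -1..1

# Approximate formants for an "ah"-like vowel: (frequency in Hz, bandwidth in Hz).
ah = synthesize_vowel([(730, 90), (1090, 110), (2440, 170)])
```

Every number here is a setting rather than a recording, so changing `pitch` or the formant list immediately changes the voice. This is why formant synthesizers can be so small. To listen to the result, the samples could be written to a WAV file with Python's standard `wave` module.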

However, formant synthesis has its benefits. It can be understood very clearly, even when speaking very fast. This is helpful for people with visual impairments who use screen readers to quickly listen to computer text. Formant synthesizers are usually smaller programs because they don't need a large database of speech samples. This means they can be used in small devices with limited memory. Because these systems control every part of the sound, they can create many different speaking styles, like asking questions, making statements, or even showing emotions.

Early examples of formant synthesis include the Texas Instruments toy Speak & Spell and many Sega and Atari, Inc. arcade games from the early 1980s.

Articulatory Synthesis

Articulatory synthesis creates speech based on models of the human vocal tract and of how we move our mouth, tongue, and lips to make sounds. The first articulatory synthesizer regularly used for laboratory experiments was made at Haskins Laboratories in the mid-1970s.

Recently, some synthesizers have included models of how vocal cords work and how sound waves travel through the mouth and nose. These are full systems that simulate the physics of speech.

HMM-based Synthesis

HMM-based synthesis uses hidden Markov models (HMMs), a kind of statistical model. In this system, the frequency spectrum (which gives a voice its unique sound), the fundamental frequency (pitch), and the duration (length) of sounds are all modeled at the same time. Speech sounds are then generated from these models.
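
Here is a heavily simplified sketch of the last step, turning the models into speech settings. Each HMM state is reduced to an average pitch, one made-up number standing in for the spectrum, and a duration in frames. Real HMM systems use much richer statistics, smooth the result using how the settings change over time, and feed it to a vocoder to make sound. The moving average below is only a crude stand-in for that smoothing.

```python
from dataclasses import dataclass

@dataclass
class State:
    pitch: float     # average pitch in Hz for this part of the sound
    spectrum: float  # hypothetical single number standing in for the spectral shape
    frames: int      # how long the state lasts (its duration), in frames

def generate(states):
    """Step through the states in order, repeating each state's averages for its duration."""
    track = []
    for s in states:
        track.extend([(s.pitch, s.spectrum)] * s.frames)
    return track

def smooth(values, width=3):
    """Moving average, so the track does not jump abruptly between states."""
    half = width // 2
    result = []
    for i in range(len(values)):
        window = values[max(0, i - half): i + half + 1]
        result.append(sum(window) / len(window))
    return result

states = [State(110, 0.2, 3), State(130, 0.8, 4), State(100, 0.5, 3)]
pitch_track = smooth([pitch for pitch, _ in generate(states)])
```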

Deep Learning-based Synthesis

Deep learning speech synthesis uses deep neural networks (DNNs) to create artificial speech. These networks are trained using lots of recorded speech. DNN-based synthesizers are getting very close to sounding like real human voices.

Audio Deepfakes

Audio deepfakes are fake audio recordings that sound like a real person. This technology uses deep learning to create speech that can sound almost exactly like anyone, even from just a 5-second sample of their voice.

By 2019, criminals had started using this technology to imitate real people's voices. This adds to worries about false information. It's similar to how computer-generated images of humans have become very realistic. Video techniques can now also change facial expressions in real time. In 2017, researchers showed a digital version of Barack Obama whose lip movements could be controlled just by an audio track.

In March 2020, a free online tool called 15.ai was released. It creates high-quality voices of fictional characters from games and shows, like GLaDOS from Portal or Twilight Sparkle from My Little Pony: Friendship Is Magic.

Challenges in Speech Synthesis

Understanding Text

Making computers understand text is not always easy. Texts have many words that are spelled the same but pronounced differently depending on the meaning. For example, "My latest project is to learn how to better project my voice." The word "project" is pronounced differently in each case.

Most text-to-speech (TTS) systems don't fully understand the meaning of the text. So, they use clever tricks to guess the right pronunciation. They might look at words nearby or use statistics to see how often a word is used in a certain way.

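Recently, TTS systems have started using hidden Markov models (HMMs) to figure out the "part of speech" (like noun or verb) of each word. This helps them choose the right pronunciation most of the time, for example whether "read" should sound like "red" (past tense) or "reed" (present tense).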
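
The "look at nearby words" trick can be sketched with a tiny table. Instead of an HMM, this hypothetical example guesses the part of speech from just the previous word. The cue-word lists and the simple respellings (capital letters mark the stressed part) are made up for illustration.

```python
# Hypothetical mini-table: one respelled pronunciation per (word, part of speech).
HETERONYMS = {
    ("project", "noun"): "PROJ-ekt",
    ("project", "verb"): "pruh-JEKT",
    ("record", "noun"): "REK-erd",
    ("record", "verb"): "ri-KORD",
}
NOUN_CUES = {"a", "an", "the", "my", "your", "latest"}  # often come before a noun
VERB_CUES = {"to", "will", "can", "better"}             # often come before a verb

def guess_part_of_speech(previous_word):
    """Very rough guess from one neighbor; real systems use statistical models."""
    if previous_word in NOUN_CUES:
        return "noun"
    if previous_word in VERB_CUES:
        return "verb"
    return "noun"  # arbitrary default when there is no clue

def pronounce(sentence):
    words = sentence.lower().replace(".", "").split()
    spoken = []
    for i, word in enumerate(words):
        previous = words[i - 1] if i > 0 else ""
        spoken.append(HETERONYMS.get((word, guess_part_of_speech(previous)), word))
    return spoken

print(pronounce("My latest project is to learn how to better project my voice."))
```

Cue lists like these break easily: in "a better project," the word before "project" is "better," so this sketch would wrongly choose the verb pronunciation. That is why real systems use statistics over whole sentences.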

Numbers are another challenge. "1325" could be "one thousand three hundred twenty-five," "one three two five," or "thirteen twenty-five." A TTS system often tries to guess how to say a number based on the words around it.
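
The three readings of "1325" can be produced with a few small helper functions. The rule in `say_number`, which picks a reading from the word just before the number, is a hypothetical example of guessing from the surrounding words; real systems use many more clues. This sketch handles whole numbers from 0 to 9999, and `readings` expects a four-digit string.

```python
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty",
        "sixty", "seventy", "eighty", "ninety"]

def below_100(n):
    """Say a number from 0 to 99."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] + ("-" + ONES[ones] if ones else "")

def as_quantity(n):
    """Say a number from 0 to 9999 as an amount (American style, without "and")."""
    thousands, rest = divmod(n, 1000)
    hundreds, rest = divmod(rest, 100)
    parts = []
    if thousands:
        parts.append(ONES[thousands] + " thousand")
    if hundreds:
        parts.append(ONES[hundreds] + " hundred")
    if rest or not parts:
        parts.append(below_100(rest))
    return " ".join(parts)

def readings(digits):
    """Three ways to say a four-digit string such as "1325"."""
    return {
        "quantity": as_quantity(int(digits)),
        "digits": " ".join(ONES[int(d)] for d in digits),
        "pairs": below_100(int(digits[:2])) + " " + below_100(int(digits[2:])),
    }

def say_number(digits, previous_word=""):
    """Hypothetical rule: pick a reading from the word just before the number."""
    options = readings(digits)
    if previous_word in {"in", "since"}:   # "in 1325" is probably a year
        return options["pairs"]
    if previous_word in {"code", "pin"}:   # codes are usually read digit by digit
        return options["digits"]
    return options["quantity"]

print(readings("1325"))
```

The pair reading is naive: it would say "2005" as "twenty five," while people say "two thousand five" or "twenty oh five."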

Abbreviations can also be tricky. "in" could mean "inches" or the word "in." "St." could mean "Saint" or "Street." Smart TTS systems try to make good guesses, but sometimes they get it wrong, leading to funny results.
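
A simple guess for "St." looks at its neighbors: a capitalized name after it suggests "Saint" ("St. Mary"), while a capitalized name before it suggests "Street" ("Mary St."). This heuristic is made up for illustration and, like the systems described above, it can guess wrong.

```python
def read_st(words, i):
    """Guess whether words[i] ("St.") means "Saint" or "Street" from its neighbors."""
    before = words[i - 1] if i > 0 else ""
    after = words[i + 1] if i + 1 < len(words) else ""
    if after[:1].isupper() and not before[:1].isupper():
        return "Saint"    # a name follows, as in "St. Mary"
    return "Street"       # a name comes before, as in "Mary St."

def expand_st(sentence):
    words = sentence.split()
    return " ".join(read_st(words, i) if w == "St." else w for i, w in enumerate(words))

print(expand_st("He lives on St. Mary St."))
# He lives on Saint Mary Street
```

This sketch only recognizes the exact spelling "St." and relies on capital letters, so text written in all capitals would defeat it.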

Text-to-Phoneme Challenges

Speech synthesis systems use two main ways to figure out how to pronounce a word from its spelling. This is called text-to-phoneme conversion (a phoneme is a basic sound in a language).

The simplest way is to use a dictionary. The program stores a huge dictionary with all the words and their correct pronunciations. To say a word, it just looks it up. The other way is rule-based. This is like "sounding out" words. The system applies rules to figure out the pronunciation.

Both ways have pros and cons. The dictionary method is fast and accurate, but it fails if it finds a word not in its dictionary. The rule-based method works on any word, but the rules can become very complex for irregular spellings. (Think about the word "of" in English, where the "f" sounds like a "v.") So, most systems use a mix of both.

Languages with very regular spelling systems (where words are spelled mostly as they sound) use more rule-based methods. For languages like English, which have very irregular spelling, systems rely more on dictionaries. They use rules only for unusual words or words not in their dictionary.
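
The dictionary-first approach used for English can be sketched like this. The two-word dictionary and the letter-to-sound rules are tiny, hypothetical examples that use ARPAbet-style sound symbols; real English rules are far more complicated.

```python
# Hypothetical exception dictionary for irregular words (ARPAbet-style symbols).
DICTIONARY = {
    "of": ["AH", "V"],        # the "f" is said like "v"
    "one": ["W", "AH", "N"],  # starts with a "w" sound
}
# Hypothetical letter-to-sound rules: two-letter spellings first, then single letters.
DIGRAPHS = {"sh": "SH", "ch": "CH", "th": "TH", "ee": "IY", "oo": "UW"}
LETTERS = dict(zip("abcdefghijklmnopqrstuvwxyz",
                   "AE B K D EH F G HH IH JH K L M N AA P K R S T AH V W K+S Y Z".split()))

def sound_out(word):
    """Rule-based conversion: read the spelling from left to right."""
    phonemes, i = [], 0
    while i < len(word):
        pair = word[i:i + 2]
        if pair in DIGRAPHS:
            phonemes.append(DIGRAPHS[pair])
            i += 2
        else:
            if word[i] in LETTERS:
                phonemes.extend(LETTERS[word[i]].split("+"))  # "x" becomes K S
            i += 1  # characters without a rule, such as apostrophes, are skipped
    return phonemes

def to_phonemes(word):
    """Look the word up first; use the rules only if it is not in the dictionary."""
    word = word.lower()
    if word in DICTIONARY:
        return DICTIONARY[word]
    return sound_out(word)

print(to_phonemes("of"), to_phonemes("sheep"), sound_out("of"))
```

Without its dictionary entry, the rules would read "of" as AA F, which is exactly the kind of irregular word that English systems keep in their dictionaries.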

Prosody and Emotions

It's hard to make synthesized speech sound natural and emotional. A study found that listeners could tell if a speaker was smiling just by their voice. Scientists think that understanding these vocal clues can help make computer voices sound more natural. For example, the pitch of a sentence changes if it's a question, a statement, or an exclamation.
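
A very simple way to give a sentence a shape is to let the pitch drift down slowly and then change the ending: rising for a yes/no question, falling for a statement, and higher overall for an exclamation. This sketch works one syllable at a time, and all of its numbers (a 200 Hz start, 5 Hz steps, and so on) are invented for illustration; real prosody models are much more detailed.

```python
def pitch_contour(n_syllables, kind, start=200.0):
    """Rough pitch (Hz) for each syllable; needs at least one syllable."""
    contour = [start - 5.0 * i for i in range(n_syllables)]  # slow downward drift
    if kind == "question":
        contour[-1] += 40.0    # rise at the end, as in many yes/no questions
    elif kind == "statement":
        contour[-1] -= 20.0    # drop at the end
    elif kind == "exclamation":
        contour = [p + 30.0 for p in contour]  # higher overall
    return contour

print(pitch_contour(3, "question"), pitch_contour(3, "statement"))
# [200.0, 195.0, 230.0] [200.0, 195.0, 170.0]
```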

Special Hardware for Speech

- Icophone

- General Instrument SP0256-AL2

- National Semiconductor DT1050 Digitalker

- Texas Instruments LPC Speech Chips

Hardware and Software Systems

Many popular systems have speech synthesis built-in.

Texas Instruments

In the early 1980s, TI was famous for speech synthesis. They had a popular speech synthesizer that plugged into their TI-99/4 and 4A computers. Many TI video games used speech, like Alpiner and Parsec.

Mattel

The Mattel Intellivision game console had the Intellivoice Voice Synthesis module in 1982. It used a special chip to create speech. This chip had a small memory to store common words that could be combined to make phrases in games.

SAM

Released in 1982, Software Automatic Mouth (SAM) was the first commercial speech synthesis program that worked entirely in software. It was later used as the basis for MacInTalk. SAM ran on Apple II, Atari, and Commodore 64 computers.

Atari

The Atari, Inc. 1400XL/1450XL personal computers, designed in 1983, had a speech system built into their operating system. They used a special chip to turn text into speech.

Apple

The first speech system built into an operating system that was widely sold was Apple Computer's MacInTalk. It was shown during the launch of the Macintosh computer in 1984. In the early 1990s, Apple added more speech features. With faster computers, they included higher quality voices. Apple also added speech recognition. Today, Apple's speech system, PlainTalk, helps people with vision problems. VoiceOver, first in Mac OS X Tiger (2005), offers many realistic-sounding voices.

Amazon

Amazon uses speech synthesis in Alexa, and since 2017 it has also offered speech synthesis as a cloud service through Amazon Web Services (AWS).

AmigaOS

The AmigaOS operating system, released in 1985, also had advanced speech synthesis. It could create both male and female voices for American English. It had a system that turned English text into phonetic codes, and then a device that made the sounds.

Microsoft Windows

Modern Windows computers use SAPI 5 to support speech synthesis. Windows 2000 added Narrator, a tool that reads text aloud for people with visual impairment. Other programs like JAWS for Windows and NonVisual Desktop Access (NVDA) can read text from websites, emails, or documents.

Microsoft Speech Server is a program for businesses that uses voice synthesis and recognition for things like web applications and call centers.

Votrax

From 1971 to 1996, Votrax made many commercial speech synthesizer parts. A Votrax synthesizer was used in the first Kurzweil Reading Machine for the Blind.

Text-to-Speech Systems

Text-to-speech (TTS) means computers can read text aloud. A TTS system first changes written text into a list of speech sounds (phonemes), then turns those sounds into audio. TTS systems are available for many different languages, accents, and specialized vocabularies.

Android

Version 1.6 of Android (a mobile operating system) added support for speech synthesis (TTS).

Internet

Many online programs, plugins, and tools can read messages from e-mail clients and web pages from a web browser. Some can even read news feeds (RSS-feeds). This makes it easier for people to listen to news or convert it into podcasts.

A growing area is web-based assistive technology. For example, Browsealoud and ReadSpeaker offer TTS features to anyone with a web browser. The non-profit project Pediaphon was created in 2006 to provide a web-based TTS for Wikipedia articles.

Open Source

Some free and open-source speech synthesis systems are available:

- eSpeak: Supports many languages.

- Festival Speech Synthesis System: Uses different synthesis methods, including modern ones that sound better.

- gnuspeech: Uses articulatory synthesis.

Other Uses

- Some e-book readers, like the Amazon Kindle, can read books aloud.

- The BBC Micro computer had a Texas Instruments speech synthesis chip.

- Some GPS navigation units use speech synthesis to give directions.

- Yamaha made a music synthesizer in 1999, the Yamaha FS1R, which could create speech-like sounds.

Digital Sound-Alikes

At a conference in 2018, researchers from Google showed how they could make text-to-speech sound almost exactly like anyone, using just a 5-second voice sample. Researchers from Baidu Research also showed a similar voice cloning system.

Speech Synthesis Markup Languages

Special computer languages have been made to help computers read text as speech. The most common one is Speech Synthesis Markup Language (SSML), which became a standard in 2004. These languages help control how the speech sounds, like its pitch and speed.
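
Here is a small SSML document built with Python's standard `xml.etree.ElementTree` module. The `speak`, `prosody` (with `rate` and `pitch`), and `break` (with `time`) elements come from the SSML standard. The helper `make_ssml` and its default values are just an example, and different speech engines support different parts of SSML.

```python
import xml.etree.ElementTree as ET

def make_ssml(text, rate="slow", pitch="+10%", pause="500ms"):
    """Wrap text in SSML that asks for a slower, higher voice followed by a pause."""
    speak = ET.Element("speak", {
        "version": "1.0",
        "xmlns": "http://www.w3.org/2001/10/synthesis",
        "xml:lang": "en-US",
    })
    prosody = ET.SubElement(speak, "prosody", {"rate": rate, "pitch": pitch})
    prosody.text = text
    ET.SubElement(speak, "break", {"time": pause})
    return ET.tostring(speak, encoding="unicode")

print(make_ssml("Hello & welcome"))
```

Building the markup with an XML library rather than by pasting strings together means characters like & and < in the text are escaped correctly.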

Applications

Speech synthesis is a very important tool for helping people with disabilities. It helps remove barriers for people with many different challenges. It has been used for a long time in screen readers for people who cannot see well. Now, text-to-speech systems are also used by people with dyslexia and other reading difficulties. They are also used by young children who are learning to read. People with severe speech impairment often use special devices that speak for them. There is also work being done to make a synthetic voice sound more like a person's own voice. A famous example was the Kurzweil Reading Machine for the Blind, which used text-to-speech software.

Speech synthesis is also used in entertainment, like games and animations. In 2007, a company called Animo Limited made software for the entertainment industry. It could create narration and dialogue for characters. In 2008, NEC Biglobe offered a web service that let users create phrases using the voices of characters from the Japanese anime series Code Geass: Lelouch of the Rebellion R2.

Text-to-speech is also finding new uses. For example, combining speech synthesis with speech recognition allows you to talk to mobile devices using natural language.

Text-to-speech is also used for learning new languages. Voki is an educational tool that lets users create talking avatars with different accents. These can be shared online. Another use is in creating AI videos with talking characters. Tools like Elai.io let users make video content with AI avatars that speak using text-to-speech.

Speech synthesis is also a helpful tool for studying and understanding speech problems. A special voice quality synthesizer can imitate the sound of voices with different speech disorders.

Singing Synthesis

Singing synthesis is a technology that creates singing voices using computers.

See also

In Spanish: Síntesis de habla para niños

- 15.ai

- Chinese speech synthesis

- Comparison of screen readers

- Comparison of speech synthesizers

- Euphonia (device)

- Orca (assistive technology)

- Paperless office

- Speech processing

- Speech-generating device

- Silent speech interface

- Text to speech in digital television