Western world facts for kids

The Western world is a term that has changed its meaning a lot over time. It generally refers to countries and cultures that have been strongly influenced by European history and traditions.

Long ago, the "Western world" might have meant Ancient Greece and the lands around the Aegean Sea. Later, during the time of the Roman Empire, it referred to the western part of that empire, which stretched from what is now Croatia all the way to Britain.

Sometimes, the term simply meant Western Europe or even all of Europe. During the Middle Ages, it often referred to Christendom, which was the part of the world where Christianity was the main religion.

More recently, during the Cold War (a period of tension between the United States and the Soviet Union), the "Western world" often meant the democratic countries, especially those allied with the NATO powers.

Today, the "Western world" usually describes places with a strong European cultural background. This culture is a mix of ideas from:

- Judeo-Christian beliefs (from Judaism and Christianity).

- Classical Greek and Roman ways of thinking (like philosophy and law).

- The traditions of early European peoples, sometimes called "barbarians" by the Romans.

Strictly speaking, this includes places like North America (Canada and the United States), Australia, New Zealand, and Western and Central Europe. Other places like Latin America, Eastern Europe, the Philippines, Singapore, Israel, and South Africa are sometimes debated because they have a mix of both Western and non-Western cultures.

Images for kids


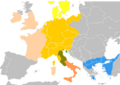

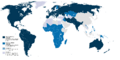

In dark blue is the Western world (Australia, Canada, Catholic and Protestant Europe, New Zealand, and the United States), based on Samuel P. Huntington's 1996 Clash of Civilizations. In turquoise are Orthodox Europe and Latin America. For Huntington, Orthodox Europe is the eastern part of the Western world, while Latin America is either part of the Western world or a descendant civilization that is twinned with it.


Gold and garnet cloisonné (and mud), military fitting from the Staffordshire Hoard before cleaning


The School of Athens depicts a fictional gathering of the most prominent thinkers of classical antiquity. Fresco by Raphael, 1510–1511


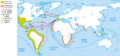

Map of the main voyages of the Age of Discovery, which began in the 15th century.


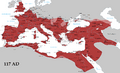

The Roman Empire in AD 117. Over 350 years, the Roman Republic turned into an empire, expanding to as much as twenty-five times its earlier area.


The religious distribution after the East-West Schism of AD 1054.


Map of the Byzantine Empire in AD 1180 on the eve of the Latin Fourth Crusade.


Portuguese discoveries and explorations since 1336: first arrival places and dates; main Portuguese spice trade routes in the Indian Ocean (blue); territories claimed by King John III of Portugal (c. 1536) (green).


The Industrial Revolution, which began in Great Britain in the 1760s and was preceded by the Agricultural and Scientific revolutions in the 1600s, permanently changed the world economy.


New York City has been a dominant global financial center since the 1900s.


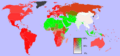

The Western world, based on Samuel P. Huntington's 1996 Clash of Civilizations. Latin America, shown in turquoise, could be considered a sub-civilization within Western civilization, or a separate civilization that is closely related to the West and descended from it. When looking at political consequences, the second option fits better.


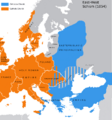

Division of the Roman Empire after 395 into western and eastern parts. The geopolitical divisions in Europe that created the concept of East and West originated in the Roman Empire.


Countries where 50% or more of the people are Christian are colored purple, while countries where 10% to 50% are Christian are colored pink.


Human language families.


Western Palearctic, a part of the Palearctic realm, one of the eight biogeographic realms dividing the Earth's surface.


Relative geographic prevalence in 2006 of Christianity, of Islam (the second most prevalent religion), and of neither religion.

See also

In Spanish: Occidente para niños