History of computing hardware (1960s–present) facts for kids

The history of computing hardware from 1960 onwards shows a big change. Computers moved from using old-fashioned vacuum tubes to smaller, more reliable parts. These new parts included transistors and then tiny integrated circuit (IC) chips.

Around the mid-1950s, transistors became good enough and cheap enough to replace vacuum tubes. This made computers smaller, faster, and more reliable. Later, in the 1960s, a new technology called MOS (Metal-Oxide-Semiconductor) led to semiconductor memory, which was much smaller and faster than earlier memory. Then, in the early 1970s, the microprocessor was invented.

These inventions changed computer memory from magnetic-core memory to solid-state memory. As a result, computers became much cheaper and smaller and used less power. These improvements led to the small personal computer (PC) in the 1970s: first home computers and desktop computers, then, in the following decades, laptops and finally mobile computers.

Computers Get Smaller: The Second Generation

The "second generation" of computers means those built from individual transistors. Some companies advertised such machines as "third-generation," but here they count as second-generation. By 1960, transistor computers were replacing the older vacuum tube machines because they were cheaper, faster, and used less electricity.

IBM and other large companies, such as Burroughs, UNIVAC, NCR, Control Data Corporation, Honeywell, General Electric, and RCA, dominated the computer market. Some smaller companies also made important contributions. Toward the end of this period, Digital Equipment Corporation (DEC) became a strong competitor in the market for small and medium-sized computers.

Early second-generation computers stored numbers in different ways. Some used character-based decimal systems, while others stored binary (base-2) numbers in "sign-magnitude" form, where one bit shows whether the number is positive or negative and the other bits give its size. With the arrival of the IBM System/360, a system called "two's complement" became the standard for new computers.
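To see why two's complement won, it helps to compare the two ways of writing a negative number. The Python sketch below is only an illustration: it uses 8-bit numbers to keep the patterns short (real machines of this era used much longer words), and the function names are made up for this example. In two's complement, adding the patterns for 5 and -5 with ordinary binary addition gives zero, so the same adder circuit works for positive and negative numbers.

```python
def twos_complement(value: int, bits: int) -> int:
    """Return the bit pattern that stores `value` as a `bits`-bit two's complement number."""
    if not -(1 << (bits - 1)) <= value < (1 << (bits - 1)):
        raise ValueError(f"{value} does not fit in {bits} bits")
    # Masking keeps only the low `bits` bits, which is the two's complement pattern.
    return value & ((1 << bits) - 1)


def from_twos_complement(pattern: int, bits: int) -> int:
    """Turn a `bits`-bit two's complement pattern back into a signed number."""
    sign_bit = 1 << (bits - 1)
    # If the top bit is set, the number is negative: subtract 2**bits.
    return pattern - (1 << bits) if pattern & sign_bit else pattern


def sign_magnitude(value: int, bits: int) -> int:
    """Return the older sign-magnitude pattern: top bit = sign, other bits = size."""
    magnitude = abs(value)
    if magnitude >= 1 << (bits - 1):
        raise ValueError(f"{value} does not fit in {bits} bits")
    sign = (1 << (bits - 1)) if value < 0 else 0
    return sign | magnitude


if __name__ == "__main__":
    bits = 8
    mask = (1 << bits) - 1
    print(format(twos_complement(-5, bits), "08b"))  # prints 11111011
    print(format(sign_magnitude(-5, bits), "08b"))   # prints 10000101
    # Add the patterns for 5 and -5 and drop the carry out of the top bit.
    total = (twos_complement(5, bits) + twos_complement(-5, bits)) & mask
    print(from_twos_complement(total, bits))         # prints 0
```

Sign-magnitude also has two different patterns for zero (00000000 and 10000000), which is one more thing hardware has to handle; two's complement has only one.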

The most common large binary computers used "words" (groups of bits processed together) of 36 or 48 bits. Smaller machines used shorter words, such as 12 or 18 bits. Most computers had special hardware for handling input/output (I/O), and many had interrupts, which let a device signal the processor when it needed attention.

By 1960, magnetic core was the main type of computer memory. However, some new machines in the 1960s still used older drums and delay lines. Newer technologies like magnetic thin film and rod memory were tried. But improvements in magnetic core memory kept them from becoming popular until semiconductor memory arrived.

In the first generation, computers usually had one main place to do calculations, called an accumulator. In the second generation, computers started having multiple accumulators. On some computers, these same areas could also be used as index registers, which helped find data in memory. This was an early step towards what we now call general-purpose registers.

The second generation also introduced many new ways to find data in memory, called addressing modes. For example, some computers could automatically increase an index register's value each time it was used. Index registers existed in the first generation, but they became much more common in the second. Indirect addressing also became more popular. With indirect addressing, a memory location holds the address of another memory location, where the actual data is stored.
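Here is a small Python sketch of these addressing modes. The `ToyMachine` class is hypothetical: it is a made-up machine with one index register, not the instruction set of any real 1960s computer. It shows direct addressing, indexed addressing with optional automatic increment, and indirect addressing.

```python
class ToyMachine:
    """A made-up machine used only to illustrate addressing modes."""

    def __init__(self, memory):
        self.memory = list(memory)
        self.index = 0  # a single index register

    def load_direct(self, address):
        # Direct: the instruction names the memory cell to read.
        return self.memory[address]

    def load_indexed(self, base, auto_increment=False):
        # Indexed: the address used is the base address plus the index register.
        value = self.memory[base + self.index]
        if auto_increment:
            # Automatically step to the next cell after each use.
            self.index += 1
        return value

    def load_indirect(self, address):
        # Indirect: the named cell holds another address, which is then read.
        return self.memory[self.memory[address]]


if __name__ == "__main__":
    # Cell 0 holds the address 4; cells 4-6 hold a short list of numbers.
    m = ToyMachine([4, 0, 0, 0, 10, 20, 30])
    print(m.load_direct(4))    # prints 10
    print(m.load_indirect(0))  # prints 10 (cell 0 points to cell 4)
    # Walk through the list with an auto-incrementing index register.
    print(sum(m.load_indexed(4, auto_increment=True) for _ in range(3)))  # prints 60
```

Auto-increment is handy for exactly this kind of loop: the program can step through a list without a separate instruction to add 1 to the index register each time.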

Computers in this era also improved how they handled subroutines (small programs within a larger program). Special instructions were added to make it easier to jump to a subroutine and then return to the main program.

The second generation also introduced features that helped computers run several programs by switching between them (multiprogramming) or use more than one processor (multiprocessing). These features included separate operating modes (such as master and slave modes), memory protection, and atomic instructions (operations that cannot be interrupted partway through).

The Rise of Tiny Chips: Third Generation

The use of computers really took off with the "Third Generation," starting around 1966. These computers mainly used early integrated circuit (IC) technology. These ICs had fewer than 1000 transistors on them. The third generation ended when microprocessors became common in the fourth generation.

In 1958, Jack Kilby at Texas Instruments created the first hybrid integrated circuit. This chip needed wires connected from the outside, which made it hard to make many of them. In 1959, Robert Noyce at Fairchild Semiconductor invented the monolithic integrated circuit (IC) chip. This chip was made of silicon, unlike Kilby's, which used germanium. Noyce's chip used a special "planar process" that allowed circuits to be printed onto the silicon, much like printed circuits. This process was based on earlier work by Jean Hoerni and Mohamed M. Atalla.

Computers built from these new IC chips started appearing in the early 1960s. For example, in 1961, Texas Instruments built the Semiconductor Network Computer for the United States Air Force. It was one of the first computers built from integrated circuits rather than separate transistors.

Early uses of ICs were in special embedded systems. NASA used them in the Apollo Guidance Computer for moon missions. The military used them in missiles and fighter jets like the F-14A Tomcat.

One of the first commercial computers to use ICs was the 1965 SDS 92. IBM started using ICs for some parts of its IBM System/360 in 1969. Then, their IBM System/370 series, which started shipping in 1971, used ICs much more widely.

The integrated circuit made it possible to build much smaller computers. The minicomputer was a big step forward in the 1960s and 1970s. It made computing power available to more people. This was because minicomputers were smaller and also because more companies started making them. Digital Equipment Corporation (DEC) became the second-largest computer company after IBM. They made popular PDP and VAX computer systems. Smaller, cheaper hardware also helped create important new operating systems like Unix.

In 1966, Hewlett-Packard introduced the 2116A minicomputer. It was one of the first commercial 16-bit computers. It used integrated circuits from Fairchild Semiconductor.

In 1969, Data General released the Data General Nova and sold 50,000 of them for about $8,000 each. The popularity of 16-bit computers like the Nova helped establish word lengths (the number of bits a computer processes at once) that are multiples of the 8-bit byte. The Nova was the first to use "medium-scale integration" (MSI) circuits, and later models used "large-scale integration" (LSI) circuits. It was also special because its entire central processor fit on one 15-inch printed circuit board.

Large mainframe computers also used ICs to increase their storage and processing power. The 1965 IBM System/360 family of mainframes is sometimes called third-generation. However, its main logic used "hybrid circuits" with separate transistors. IBM's 1971 System/370 used true ICs for its logic.

By 1971, the Illiac IV supercomputer was the fastest in the world. It used about a quarter-million small ECL logic gate integrated circuits. These chips made up its sixty-four parallel data processors.

Third-generation computers were still sold into the 1990s. For example, the Cray T90 supercomputer, released in 1995, used this technology.

The Personal Computer Era: Fourth Generation

The third generation's minicomputers were like smaller versions of mainframe computers. But the fourth generation started differently. The main idea behind the fourth generation is the microprocessor. This is a computer processor that fits on a single large-scale integration (LSI) MOS chip.

Early microprocessor computers were not very powerful or fast. They weren't trying to shrink minicomputers. Instead, they were made for a completely different market.

Since the 1970s, computer power and storage have grown incredibly. But the basic technology, using LSI or very-large-scale integration (VLSI) microchips, has stayed the same. So, most computers today are still considered part of the fourth generation.

Better Memory: Semiconductor Memory

The MOSFET (metal-oxide-semiconductor field-effect transistor) was invented in 1959 by Mohamed M. Atalla and Dawon Kahng at Bell Labs. Besides helping with data processing, the MOSFET made it possible to use MOS transistors as memory cells. Before this, magnetic cores were used for memory. Semiconductor memory, also called MOS memory, was cheaper and used less power than magnetic core memory.

MOS random-access memory (RAM) was developed in different forms. Static RAM (SRAM) was created by John Schmidt in 1964. In 1966, Robert Dennard developed dynamic RAM (DRAM). In 1967, Dawon Kahng and Simon Sze developed the floating-gate MOSFET, the basis for non-volatile memory such as flash memory, which keeps data even when the power is off.

The Brains of the Computer: Microprocessors

The microprocessor came from the MOS integrated circuit (MOS IC) chip. These chips were first made by Fred Heiman and Steven Hofstein in 1962. Because MOSFETs could be made smaller and smaller, MOS IC chips quickly became more complex. This led to "large-scale integration" (LSI) chips with hundreds of transistors by the late 1960s. Engineers then realized that a whole computer processor could fit on a single MOS LSI chip. This was the start of the first microprocessors.

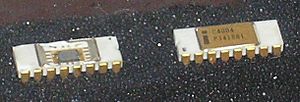

The first microprocessors used several MOS LSI chips, like the Four-Phase Systems AL1 (1969) and Garrett AiResearch MP944 (1970). On November 15, 1971, Intel released the world's first single-chip microprocessor, the 4004. Its development was led by Federico Faggin and others. It was first made for a Japanese calculator company. But soon, computers were built around it, with most of their processing power coming from this one small chip.

The dynamic RAM (DRAM) chip, based on Robert Dennard's work, offered thousands of bits of memory on one chip. Intel combined the RAM chip with the microprocessor. This allowed fourth-generation computers to be smaller and faster than older ones. The 4004 could only do 60,000 instructions per second. But its newer versions, like the Intel 8008, 8080, and the 8086/8088 family, brought much more speed and power. (Modern PCs still use processors that can work with older 8086 programs.) Other companies also made microprocessors that were widely used in small computers.

Supercomputers: The Fastest Machines

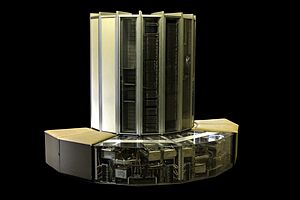

At the other end of the computing world were the powerful supercomputers. They also used integrated circuit technology. In 1976, the Cray-1 was developed by Seymour Cray. This machine was the first supercomputer to make "vector processing" practical. It had a special horseshoe shape to make processing faster by shortening the paths for electrical signals.

Vector processing means using one instruction to do the same operation on many pieces of data. This has been a key method for supercomputers ever since. The Cray-1 could perform 150 million floating-point operations per second (150 megaflops). About 85 of these machines were sold, each costing $5 million. The Cray-1's main processor was built from many small- and medium-scale integrated circuits.
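The Python sketch below compares the number of instructions needed to add two long lists of numbers. It assumes one "scalar" add instruction per pair of numbers, and one "vector" add instruction per group of up to 64 numbers (each Cray-1 vector register held 64 elements). The function names are made up for this example, and plain Python still adds the numbers one at a time internally; the sketch only counts instructions the way a programmer of a vector machine would think about them.

```python
VECTOR_LENGTH = 64  # each Cray-1 vector register held 64 elements


def scalar_add(a, b):
    """Add two lists with one add instruction per pair of numbers."""
    if len(a) != len(b):
        raise ValueError("lists must be the same length")
    result, instructions = [], 0
    for x, y in zip(a, b):
        result.append(x + y)
        instructions += 1
    return result, instructions


def vector_add(a, b):
    """Add two lists in chunks of up to VECTOR_LENGTH, one vector instruction per chunk."""
    if len(a) != len(b):
        raise ValueError("lists must be the same length")
    result, instructions = [], 0
    for start in range(0, len(a), VECTOR_LENGTH):
        chunk_a = a[start:start + VECTOR_LENGTH]
        chunk_b = b[start:start + VECTOR_LENGTH]
        # On vector hardware this whole chunk would be a single instruction.
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        instructions += 1
    return result, instructions


if __name__ == "__main__":
    a = list(range(200))
    b = [1] * 200
    _, scalar_count = scalar_add(a, b)
    _, vector_count = vector_add(a, b)
    print(scalar_count, vector_count)  # prints 200 4
```

Both functions give the same answer; the difference is that the vector version needs only 4 instructions instead of 200, which is why vector machines could keep their arithmetic units so busy.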

Mainframes and Minicomputers: Before PCs

Before the microprocessor came out in the early 1970s, computers were usually very big and expensive. Only large organizations like companies, universities, and government agencies owned them. The people who used these computers were specialists. They didn't usually work directly with the machine. Instead, they prepared tasks for the computer offline, for example, by punching holes in cards.

Many tasks would be collected and run together in "batch mode." After the computer finished, users would get their printed results or punched cards back. In some places, it could take hours or even days to get results after submitting a job.

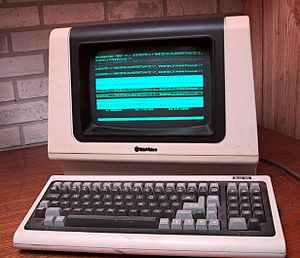

By the mid-1960s, a more interactive way of using computers developed, called time-sharing. Many terminals (keyboard devices such as teleprinters) let many people share one mainframe computer processor at the same time, because the processor switched quickly between their tasks. This was common in businesses, science, and engineering.
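One simple way to share a processor is "round-robin" scheduling: each user gets a short turn, called a time slice, and then goes to the back of the line if there is still work left. The Python sketch below assumes this simple rule, and the user names and amounts of work are hypothetical. Real time-sharing systems of the 1960s used more elaborate rules, but the basic idea of taking turns is the same.

```python
from collections import deque


def round_robin(jobs, time_slice):
    """Return the order in which users get the processor.

    jobs: dict mapping each user to how many units of work they need.
    time_slice: the most work a user can do in one turn.
    """
    if time_slice <= 0:
        raise ValueError("time_slice must be positive")
    queue = deque(jobs.items())
    schedule = []
    while queue:
        user, remaining = queue.popleft()
        run = min(time_slice, remaining)
        schedule.append((user, run))
        remaining -= run
        if remaining > 0:
            # Unfinished work goes to the back of the line.
            queue.append((user, remaining))
    return schedule


if __name__ == "__main__":
    for user, run in round_robin({"alice": 3, "bob": 1, "carol": 2}, time_slice=1):
        print(user, run)
```

Because the turns are short and come around often, each person at a terminal feels as if the computer is working only for them.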

Another type of computer use was seen in early, experimental computers. Here, one user had the computer all to themselves. Some of the first computers that could be called "personal" were early minicomputers like the LINC and PDP-8. Later came the VAX and larger minicomputers from companies like Digital Equipment Corporation and Data General.

These minicomputers started as helper processors for mainframes. They took on simple tasks, freeing up the mainframe for more complex calculations. By today's standards, they were still big (about the size of a refrigerator) and expensive (tens of thousands of dollars). So, individuals rarely bought them. However, they were much smaller, cheaper, and easier to use than mainframes. This made them affordable for individual labs and research projects. Minicomputers helped these groups avoid the slow "batch processing" of big computing centers.

Minicomputers were also more interactive than mainframes and soon had their own operating systems. The Xerox Alto minicomputer (1973) was a very important step towards personal computers. It had a graphical user interface (GUI), a high-resolution screen, lots of memory, a mouse, and special software.

Microcomputers: Computers for Everyone

Microprocessors Make Computers Affordable

In the minicomputers that came before modern personal computers, the processing was done by many parts spread across several large printed circuit boards. This made minicomputers big and expensive to build. But once the "computer-on-a-chip" (the microprocessor) became available, the cost of making a computer dropped a lot.

The parts that did arithmetic, logic, and control, which used to take up many expensive circuit boards, were now on one integrated circuit. This chip was very expensive to design but cheap to make in large numbers. At the same time, new solid state memory replaced the big, costly, and power-hungry magnetic core memory of older computers.

The Micral N: An Early Personal Computer

In France, a company called R2E introduced the Micral N in February 1973. It was a microcomputer based on the Intel 8008 chip. It was designed to help with measurements for a research institute. The Micral N cost about $1300, which was a fifth of the price of a PDP-8 minicomputer.

The Micral N's Intel 8008 chip ran at 500 kHz, and the machine had 16 kilobytes of memory. It used a special connection system called Pluribus, which allowed up to 14 different circuit boards to be connected. R2E offered various boards for input/output, memory, and floppy disks.

The Altair 8800 and IMSAI 8080

The invention of the single-chip microprocessor was a huge step in making computers cheap and easy to use for individuals. The Altair 8800, shown in Popular Electronics magazine in January 1975, set a new low price for a computer. This made computer ownership possible for a small group of people in the 1970s. The IMSAI 8080 followed, with similar features.

These early machines were like smaller minicomputers and were not complete. To connect a keyboard or teleprinter, you needed expensive "peripherals." Both the Altair and IMSAI had a front panel with switches and lights. To program them, you had to enter a "bootstrap loader" program by flipping switches up or down for each binary byte. This program was hundreds of bytes long. After that, you could load a BASIC interpreter from a paper tape. Then, the computer could run BASIC programs.
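The Python sketch below shows what "flipping switches for each binary byte" means. It assumes a row of eight switches per byte, with the leftmost switch standing for the highest bit; "UP" means 1 and "dn" means 0. The byte values in the example are hypothetical and are not a real Altair or IMSAI bootstrap loader.

```python
def switch_settings(program_bytes):
    """Turn each byte into eight switch positions, leftmost switch = highest bit."""
    rows = []
    for value in program_bytes:
        if not 0 <= value <= 255:
            raise ValueError(f"{value} does not fit in one byte")
        bits = format(value, "08b")  # e.g. 10 -> "00001010"
        rows.append(" ".join("UP" if bit == "1" else "dn" for bit in bits))
    return rows


if __name__ == "__main__":
    # Hypothetical bytes, used only to show the switch patterns.
    for row in switch_settings([10, 255, 0]):
        print(row)
```

A loader hundreds of bytes long meant setting this row of switches, and pressing a button to store it, hundreds of times without a single mistake.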

The MITS Altair was the first successful microprocessor kit. It was the first mass-produced personal computer kit and the first to use an Intel 8080 processor. It sold well, with 10,000 Altairs shipped. The Altair also inspired Paul Allen and Bill Gates to create a BASIC interpreter for it. This led them to form Microsoft.

The MITS Altair 8800 created a new industry of microcomputers and computer kits. Many others followed, especially small business computers in the late 1970s. These used Intel 8080, Zilog Z80, and Intel 8085 microprocessors. Most of them ran the CP/M-80 operating system. CP/M-80 was the first popular microcomputer operating system used by many different hardware makers. Many software programs, like WordStar and dBase II, were written for it.

Many computer fans in the mid-1970s designed their own systems. Sometimes, they worked together to make it easier. This led to groups like the Homebrew Computer Club. Here, hobbyists met to share what they had built, exchange designs, and show off their systems. Many people built their own computers from published plans. For example, thousands built the Galaksija home computer in the early 1980s.

The Altair computer helped start Apple and Microsoft. Microsoft's first product was the Altair BASIC programming language interpreter. The second wave of microcomputers, appearing in the late 1970s, were often called home computers. These were sparked by the unexpected demand for computer kits from hobbyist clubs.

For business, these early home computers were less powerful than the large business computers of the time. They were designed more for fun and learning, not so much for serious work. While you could use some simple office programs on them, they were mostly used by computer fans for learning to program and playing games. They were also used by technical hobbyists to connect to external devices, like controlling model railroads.

The Personal Computer Emerges

The invention of the microprocessor and solid-state memory made home computing affordable. Early hobby microcomputer systems like the Altair 8800 and Apple I, released around 1975, used low-cost 8-bit processor chips. These chips had enough power to interest hobbyists and experimenters.

By 1977, pre-assembled systems like the Apple II, Commodore PET, and TRS-80 (later called the "1977 Trinity" by Byte Magazine) started the era of mass-market home computers. It took much less effort to get these computers working. Applications like games, word processing, and spreadsheets began to spread widely.

Unlike home computers, small business systems usually ran CP/M. This changed when IBM introduced the IBM PC in 1981, which was quickly adopted. The PC was heavily cloned (copied by other companies), which led to mass production and lower costs throughout the 1980s. This expanded the PC's presence in homes, and it replaced the home computer category in the 1990s. The result is the current monoculture of personal computers that are all very similar in design.

See also

In Spanish: Historia del hardware de computadora (1960-presente) para niños