Computer facts for kids

A computer is a smart machine that takes in information (input), works with it using special instructions (programs), and then gives you new information (output). Many computers can also save and find information using things like hard drives. Computers can connect to each other to form networks. This lets them share information and talk to each other.

Contents

- What Computers Do

- Computer Parts: Hardware and Software

- How We Control Computers

- Computer Programs

- History of Computers

- Kinds of Computers

- Common Uses of Home Computers

- Common Uses of Computers at Work

- How Computers Work

- Networking and the Internet

- Computers and Waste

- Main Hardware Parts

- Largest Computer Companies

- The Future of Computers

- Images for kids

- See also

What Computers Do

Computers can follow a set of specific rules very well. They can also run a list of instructions called a program.

There are four main steps in how a computer works:

- Input: Getting information.

- Processing: Working with information.

- Storage: Saving information.

- Output: Showing information.
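To make the four steps more concrete, here is a tiny sketch in the Python programming language. It is only a model: the function names `get_input`, `process`, `store`, and `output` are made up for this example, and a real computer does these steps with hardware and many programs working together.

```python
# A toy program that walks through the four steps:
# input, processing, storage, and output.

def get_input():
    # Input: a real program might read from the keyboard with input();
    # here we use fixed numbers so the sketch runs on its own.
    return [3, 5, 8]

def process(numbers):
    # Processing: work with the information (add the numbers up).
    return sum(numbers)

storage = {}  # Storage: a simple place to save results for later

def store(name, value):
    storage[name] = value

def output(value):
    # Output: show the result to the user.
    print(f"The total is {value}")

numbers = get_input()
total = process(numbers)
store("total", total)
output(storage["total"])  # prints: The total is 16
```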

Modern computers can do billions of calculations every second. This speed lets a computer switch between many tasks so quickly that they seem to happen at the same time, and computers with several processor cores can truly run some tasks at once. This is called multitasking. Computers help with many jobs where automation (doing things automatically) is useful. For example, they control traffic lights, vehicles, security systems, washing machines, and digital televisions.
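One common way to multitask is to switch between tasks very quickly, giving each one a short turn. The Python sketch below imitates that idea using generators. The names `count_up` and `run_round_robin` are invented for this example, and a real operating system decides whose turn it is in a much more complicated way.

```python
from collections import deque

def count_up(name, steps):
    """A pretend task that does `steps` small pieces of work, one at a time."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"  # pause here so another task can run

def run_round_robin(tasks):
    """Give each task one turn at a time until every task is finished."""
    queue = deque(tasks)
    log = []
    while queue:
        task = queue.popleft()
        try:
            log.append(next(task))  # let this task do one step
            queue.append(task)      # then send it to the back of the line
        except StopIteration:
            pass                    # this task has finished, so drop it
    return log

turns = run_round_robin([count_up("Music", 2), count_up("Game", 3)])
for line in turns:
    print(line)
```

Each task pauses at `yield`, so the switcher can give the next task a turn. The output mixes the two tasks: Music step 1, Game step 1, Music step 2, Game step 2, Game step 3.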

Computers are designed to do almost anything with information. They control machines that humans used to control. People use computers for things like math, listening to music, reading, and writing.

Computer Parts: Hardware and Software

Modern computers are made of electronic computer hardware. This hardware does math very quickly. But computers don't really "think" on their own. They just follow the instructions in their software programs. The software uses the hardware when you tell it what to do. Then it gives you useful results.

How We Control Computers

Humans control computers using user interfaces. These are ways we interact with the computer. Input devices include keyboards, computer mice, buttons, and touch screens. Some computers can be controlled with voice commands or hand gestures, and special devices can even use brain signals.

Computer Programs

Computer programs are made by computer programmers. Some programmers write programs in the computer's own language, called machine code. But most programs are written using a programming language. Examples are C, C++, and Java. These languages are more like everyday human language. A special program called a compiler translates these instructions into binary code. Binary code is the machine code that the computer understands and uses.
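Python, another popular programming language, works a little differently from C or C++. Its built-in `compile()` function translates the code a person wrote into "bytecode": simpler instructions that are run by a program called the Python virtual machine, rather than machine code for the CPU. The sketch below still shows the main idea of translating human-friendly code into lower-level instructions. The variable names `price`, `quantity`, and `tax` are just examples.

```python
import dis

# Code written by a person, in the Python language.
source = "price * quantity + tax"

# compile() translates the text into a code object full of bytecode.
code = compile(source, "<example>", "eval")

# Show the translated, lower-level instructions.
# (The exact listing depends on the Python version.)
dis.dis(code)

# Run the translated code with some sample values.
result = eval(code, {"price": 2, "quantity": 3, "tax": 1})
print(result)  # prints 7
```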

What are Bugs?

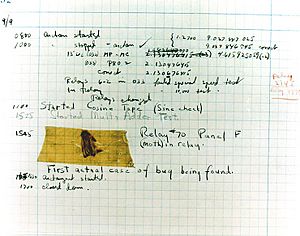

Mistakes in computer programs are called "bugs". Sometimes, bugs don't cause big problems. Other times, they can make a program or even the whole computer stop working properly. A program that freezes and stops responding is said to "hang"; a program that suddenly quits is said to "crash".

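Bugs are usually not the computer's fault. Computers just follow the instructions they are given, so bugs almost always happen because of a mistake by the programmer. Engineers called faults in machines "bugs" long before modern computers existed, but Admiral Grace Hopper, a famous computer scientist, helped make the word popular in computing. In 1947, her team found a dead moth stuck inside the Harvard Mark II computer, where it was causing a problem, and taped it into their logbook as the "first actual case of bug being found."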
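Here is a made-up example of a bug in Python. The computer does exactly what the code says, even when that is not what the programmer meant. In the first function, a common "off-by-one" mistake makes the loop stop one item too early, so the last score is never added.

```python
def average_buggy(scores):
    total = 0
    # Bug: range(len(scores) - 1) stops one item too early,
    # so the last score is never added to the total.
    for i in range(len(scores) - 1):
        total += scores[i]
    return total / len(scores)

def average_fixed(scores):
    # Fix: add up every score, then divide by how many there are.
    return sum(scores) / len(scores)

scores = [80, 90, 100]
print(average_buggy(scores))  # about 56.67, which is wrong
print(average_fixed(scores))  # prints 90.0
```

Both versions still have another bug: with an empty list, they divide by zero and the program crashes. A careful programmer would check for that case too.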

History of Computers

Early Automation

Most people find complex math hard to do in their heads. Imagine trying to calculate 584 × 3,220 without a calculator! People created tools to help them with math problems.

Also, people often had to do the same math problem over and over. A cashier had to figure out change many times a day. This took time and led to mistakes. So, people made calculators to do these repeated tasks. This part of computer history is about "machines that make it easy to do the same math problem many times without mistakes."
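This kind of repeated math is exactly what computers are good at. Here is a small Python sketch of the cashier's change problem. It assumes prices are counted in US cents and that the cashier uses quarters, dimes, nickels, and pennies; `make_change` is a made-up name for this example. The last line also works out the multiplication 584 × 3,220 mentioned above.

```python
def make_change(price_cents, paid_cents):
    """Return the coins (in cents) to give back, largest coins first."""
    change = paid_cents - price_cents
    coins = []
    for coin in (25, 10, 5, 1):  # quarter, dime, nickel, penny
        while change >= coin:
            coins.append(coin)
            change -= coin
    return coins

# The same steps, repeated for many customers, without getting tired.
for price, paid in [(65, 100), (230, 300), (99, 100)]:
    print(price, paid, make_change(price, paid))

print(584 * 3220)  # prints 1880480
```

Giving the largest coins first always gives the fewest coins with US coins, but this shortcut does not work for every possible set of coins.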

Some early examples of these tools and machines include the abacus, the slide rule, and the Antikythera mechanism. The Antikythera mechanism was made around 150–100 BC.

The Idea of Programming

People didn't just want machines that did the same thing repeatedly. For example, a music box plays the same song over and over. Some people wanted to tell their machines to do different things. They wanted to "program" the machine. This means giving the machine different orders. This part of history is about "machines that I can order to do different things if I know how to speak their language."

One of the first examples was built by Hero of Alexandria (around 10–70 AD). He made a mechanical theater that performed a 10-minute play. It used a complex system of ropes and drums. These ropes and drums were like the machine's language. They told it what to do and when. Some people say this was the first programmable machine.

Historians don't always agree on which early machines were "computers." Many say the "castle clock," an astronomical clock made by Al-Jazari in 1206, was the first known programmable analog computer. It could adjust for the changing length of day and night throughout the year. Some consider this daily adjustment a form of computer programming.

Others say the first computer was designed by Charles Babbage. Ada Lovelace is often called the first programmer.

The Modern Computing Era

By the end of the Middle Ages, math and engineering became more important. In 1623, Wilhelm Schickard made a mechanical calculator. Other Europeans made more calculators. These were not modern computers because they could only add, subtract, and multiply. You couldn't change them to do something like play Tetris. So, they were not programmable. Today, engineers use computers for design and planning.

In 1801, Joseph Marie Jacquard used punched paper cards to tell his textile loom what pattern to weave. He could change the punch cards to program the loom to weave different patterns. This made the loom programmable.
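Here is a very simplified Python model of the idea. On a real Jacquard loom, the holes in each card decided which threads were lifted for one row of weaving. In this sketch, each card is a string where "1" means a hole and "0" means no hole, and each hole simply becomes a filled square. The card patterns and the `weave` function are made up for this example.

```python
# Each "card" is one row: "1" = hole (thread lifted), "0" = no hole.
heart_cards = [
    "0110110",
    "1111111",
    "0111110",
    "0011100",
    "0001000",
]

stripe_cards = [
    "1010101",
    "1010101",
    "1010101",
]

def weave(cards):
    """The 'loom' never changes; only the cards do."""
    rows = []
    for card in cards:
        rows.append("".join("#" if hole == "1" else "." for hole in card))
    return rows

for row in weave(heart_cards):
    print(row)
```

To weave stripes instead of a heart, you only swap in `stripe_cards`. The `weave` function stays exactly the same, just as Jacquard's loom stayed the same while the cards changed.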

Charles Babbage wanted to build a calculating machine that could be programmed with punched cards in a similar way. He called it "The Analytical Engine." Babbage never finished his Analytical Engine, because he didn't have enough money and kept changing his design.

As time went on, machines were used for more and more counting work. People get bored doing the same thing over and over, and counting census information by hand took hundreds of people and was slow and expensive. For the 1890 U.S. Census, Herman Hollerith invented machines that read information from punched cards and added it up automatically. The Computing Tabulating Recording Corporation (which later became IBM) made his machines. They rented these machines instead of selling them. This company was known for its great tech support.

Because of machines like these, new ways of talking to machines were invented. New types of machines were also created. Eventually, this led to the computer as we know it today.

Analog and Digital Computers

In the first half of the 20th century, scientists started using computers. They had a lot of math to do, especially for things like launching a rocket ship. They wanted to spend more time on science questions and less time adding numbers. So, they built computers.

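Many of these early computers were analog computers. They used analog circuits, which work with smoothly changing amounts (such as voltages) instead of separate digits. They were very hard to program. In the 1930s, digital computers were invented. These soon became much easier to program.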

Powerful Computers Emerge

Scientists learned how to build and use digital computers in the 1930s and 1940s. As they made more digital computers, they learned how to use them for complex tasks. Important early machines included:

- Konrad Zuse's "Z machines." The Z3 (1941) was the first working machine that used binary arithmetic. Binary arithmetic does math using only two digits, 1 and 0 (like "yes" and "no"). The Z3 could also be programmed. It was later shown to be Turing complete, which means that, given enough time and memory, it could in principle compute anything any other computer can. Some people consider it the world's first modern computer.

- The non-programmable Atanasoff–Berry Computer (1941) used vacuum tubes to do its calculations with binary numbers ("yes" and "no").

- The Harvard Mark I (1944) was a large computer that could be programmed to some extent.

- The U.S. Army's ENIAC (1946) worked with decimal numbers (the digits 0 through 9), the way people usually do math. It is sometimes called the first general-purpose electronic computer.
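Machines like the Z3 did their math in binary. The Python sketch below shows one common way binary addition can be done with simple logic rules, one bit at a time, using what engineers call a "full adder." It illustrates the idea only; it is not how the Z3's relay circuits were actually wired, and the function names are made up for this example.

```python
def full_adder(a, b, carry_in):
    """Add three single bits (0 or 1). Returns (sum_bit, carry_out)."""
    sum_bit = a ^ b ^ carry_in                  # 1 if an odd number of inputs are 1
    carry_out = (a & b) | (carry_in & (a ^ b))  # 1 if at least two inputs are 1
    return sum_bit, carry_out

def add_binary(x, y):
    """Add two binary strings such as '101' and '11', one bit at a time."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)       # pad with leading zeros
    carry = 0
    result = []
    for a, b in zip(reversed(x), reversed(y)):  # start from the rightmost bit
        s, carry = full_adder(int(a), int(b), carry)
        result.append(str(s))
    if carry:
        result.append("1")
    return "".join(reversed(result))

print(add_binary("101", "11"))  # 5 + 3 = 8, prints 1000
```

This is the same "carry the one" trick you use when adding on paper, except that each column can only hold a 0 or a 1.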

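Almost all modern computers use the stored-program design, in which a program's instructions are kept in the computer's memory together with the data they work on. This design is key to what a modern computer is. The technology used to build computers has changed a lot since the 1940s, but most computers today still follow this design, which is often called the von Neumann architecture.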
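Here is a toy stored-program machine written in Python. Its tiny instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) is invented for this example and does not belong to any real computer. The important part is that the instructions and the numbers live in the same list, called `memory`, so giving the machine a new program only means putting different values into memory.

```python
def run(memory):
    """Run a made-up machine whose program and data share one memory."""
    pc = 0   # program counter: which memory cell holds the next instruction
    acc = 0  # accumulator: the machine's single working number
    while True:
        op, arg = memory[pc]
        pc += 1
        if op == "LOAD":     # copy a value from memory into the accumulator
            acc = memory[arg]
        elif op == "ADD":    # add a value from memory to the accumulator
            acc += memory[arg]
        elif op == "STORE":  # write the accumulator back into memory
            memory[arg] = acc
        elif op == "HALT":   # stop the machine
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r}")

# Cells 0-3 hold the program; cells 4-6 hold the data.
memory = [
    ("LOAD", 4),     # 0: acc = memory[4]
    ("ADD", 5),      # 1: acc = acc + memory[5]
    ("STORE", 6),    # 2: memory[6] = acc
    ("HALT", None),  # 3: stop
    20,              # 4: first number
    22,              # 5: second number
    0,               # 6: the answer goes here
]

print(run(memory)[6])  # prints 42
```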

In the 1950s, computers were mostly built with vacuum tubes.

Transistors replaced vacuum tubes in the 1960s. They were smaller, cheaper, used less power, and broke down less often.

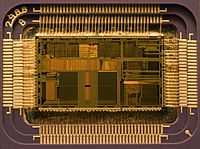

In the 1970s, computers used integrated circuits. Microprocessors, like the Intel 4004, made computers smaller, cheaper, faster, and more reliable.

By the 1980s, microcontrollers became small and cheap enough to replace mechanical controls. They were used in things like washing machines. The 1980s also saw home computers and personal computers become popular. With the growth of the Internet, personal computers are now as common as televisions and telephones in homes.

In 2005, Nokia started calling some of its mobile phones "multimedia computers." After the Apple iPhone launched in 2007, many people started calling smartphones "real" computers too.

Kinds of Computers

There are many types of computers:

- personal computers (like desktops and laptops)

- workstations (powerful computers for special tasks)

- mainframes (very large, powerful computers)

- servers (computers that provide services to others)

- minicomputers (smaller than mainframes, larger than personal computers)

- supercomputers (extremely fast computers for complex calculations)

- embedded systems (computers built into other devices)

- tablet computers

A "desktop computer" is a small machine that usually sits on a desk. It has a separate screen. "Laptop computers" are small enough to fit on your lap. This makes them easy to carry. Both laptops and desktops are called personal computers. One person usually uses them for things like playing music, browsing the web, or playing video games.

There are bigger computers that many people can use at once, called "mainframes." Mainframes and servers do much of the hidden work behind things like banks, airlines, and the internet. Think of a personal computer as your skin. You see it, and it helps you feel the world. A mainframe is more like your internal organs. You don't see them, but if they stopped working, you'd have big problems!

An embedded computer, also called an embedded system, is built into another device and does one job very well. For example, a digital alarm clock has an embedded computer that keeps track of the time. Unlike your personal computer, you can't use your clock to play Tetris, because embedded computers usually cannot be loaded with new programs by their users. Some mobile phones, automatic teller machines, microwave ovens, CD players, and cars use embedded computers.

All-in-one PCs

All-in-one computers are desktop computers where all the computer's parts are built into the same case as the monitor. Apple has made popular all-in-one computers, like the original Macintosh from the 1980s and the iMac from the late 1990s and 2000s.

Common Uses of Home Computers

People use home computers for many things, such as:

- Playing computer games

- Writing documents

- Solving math problems

- Watching videos

- Listening to music and audio

- Editing audio, video, and photos

- Creating sound or video

- Communicating with other people

- Using the Internet

- Online shopping

- Drawing pictures

- Paying bills online

Common Uses of Computers at Work

At work, computers are used for:

- Word processing (writing documents)

- Spreadsheets (organizing numbers)

- Making presentations

- Photo editing

- Video editing and making videos

- Audio recording

- Managing computer systems

- Website development

- Software development

How Computers Work

Computers store information and instructions as numbers, because they can work with numbers very quickly. These numbers are stored in binary, using only the symbols 1 and 0. A single 1 or 0 is called a bit. Computers use many bits together to represent instructions and data. A list of instructions is called a program. Programs are usually saved on a storage device such as the computer's hard disk.
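This Python sketch shows how the same pattern of bits can stand for a number or a letter, depending on how a program reads it. It uses Python's built-in `format()` and `ord()` functions and relies on the standard ASCII/Unicode code for the letter "A", which is 65.

```python
number = 65
letter = "A"

# The number written in binary, padded with zeros to 8 bits (one byte).
number_bits = format(number, "08b")
print(number_bits)               # prints 01000001

# A letter is stored as a number too. In ASCII and Unicode, "A" is 65,
# so it is stored with exactly the same bits.
letter_code = ord(letter)
letter_bits = format(letter_code, "08b")
print(letter_code, letter_bits)  # prints 65 01000001

# 8 bits can be arranged in 2 ** 8 = 256 different ways.
print(2 ** 8)                    # prints 256
```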

Computers run programs using a central processing unit (CPU). While working, they keep instructions and data in fast memory called RAM (Random Access Memory). RAM loses its contents when the power is turned off, so when the computer needs to save results for later, it uses the hard disk. Information on a hard disk stays there even after the computer is turned off.
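Here is a small Python sketch of the difference between working memory and saved storage. A value in a variable lives in RAM only while the program runs; writing it to a file puts it on the disk, where it can be read back later. The file name `high_score_example.txt` is made up for this example.

```python
import os
import tempfile

# A value in a variable lives in RAM while the program runs.
high_score = 1200

# To keep the value after the program ends, write it to a file on the disk.
path = os.path.join(tempfile.gettempdir(), "high_score_example.txt")
with open(path, "w") as f:
    f.write(str(high_score))

# Later, even in a different run of the program, read it back from the file.
with open(path) as f:
    saved_score = int(f.read())

print(saved_score)  # prints 1200
```

The sketch uses the system's temporary folder so it doesn't clutter anything. A real program would save to a more permanent place, because some systems empty their temporary folder when they restart.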

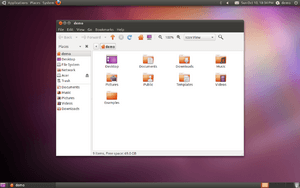

An operating system is the main program that manages the computer. It tells the computer how to understand tasks, how to do them, and how to show results. Millions of computers might use the same operating system, such as Windows, macOS, Linux, or Android, but each computer can have its own application programs for what its user needs. Because so many computers share the same operating system, skills learned on one computer often work on another. Operating systems can use simple text commands or a user-friendly GUI (Graphical User Interface).

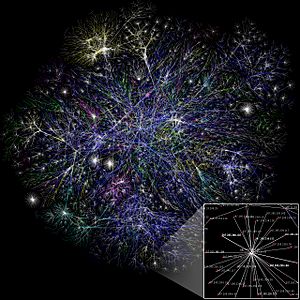

Networking and the Internet

Computers have been used to share information between different places since the 1950s. The U.S. military's SAGE system was an early example. This led to special commercial systems like Sabre. In the late 1960s and 1970s, engineers at research institutions in the United States started linking their computers using telecommunications technology. This project was funded by ARPA (now DARPA). The computer network they created was called the ARPANET. The technology behind the ARPANET grew and spread.

Over time, this network went beyond schools and military bases. It became known as the Internet. The rise of networking changed what a computer was. Computer operating systems and programs were changed. They could now use resources from other computers on the network. This included things like printers, saved information, and more.

At first, only people in high-tech jobs could use these features. But in the 1990s, things like e-mail and the World Wide Web became popular. Cheap and fast networking like Ethernet and ADSL also developed. This made computer networking very common. A huge number of personal computers now connect to the Internet regularly. They use it to communicate and get information. Wireless networking, such as Wi-Fi and mobile phone networks, means you can connect to the Internet almost anywhere.

Computers and Waste

A computer is almost always an electronic device. It contains materials that become electronic waste when thrown away. In some places, when you buy a new computer, you also pay for its waste management. This is called product stewardship.

Computers can become outdated very quickly, depending on the programs you use. Often they are thrown away within two or three years, because newer programs need a more powerful computer. This makes the waste problem worse, so computer recycling is very important. Many projects send working computers to developing nations, where they can be reused instead of quickly becoming waste. Most people using these computers don't need to run the newest programs.

Some computer parts, like hard drives, can break easily. When these parts end up in a landfill, they can release poisonous chemicals like lead into the ground. Hard drives can also hold private information, like credit card numbers. If a hard drive isn't erased before being thrown away, someone could steal that information and use it to steal money. Information can sometimes be recovered even from a drive that no longer works.

Main Hardware Parts

Computers come in different shapes, but most have a similar design.

- All computers have a CPU.

- All computers have some kind of data bus. This lets them get information in or send information out.

- All computers have some form of memory. These are usually chips that can hold information.

- Many computers have sensors to get input from their surroundings.

- Many computers have a display device to show output. They might also have other peripheral devices connected.

A computer has several main parts. Think of a computer like a human body:

- The CPU is like the brain. It does most of the thinking and tells the rest of the computer what to do.

- The motherboard is like the skeleton. It holds all the other parts and has the "nerves" that connect them to each other and the CPU.

- The motherboard connects to a power supply. This gives electricity to the whole computer.

- The various drives (like CD drives, floppy drives, and USB flash drives) are like eyes, ears, and fingers. They let the computer read different types of storage.

- The hard drive is like a human's memory. It saves all the information on the computer.

- Most computers have a sound card or another way to make sound. This is like vocal cords or a voice box.

- Speakers are connected to the sound card. They are like a mouth, where the sound comes out.

- Computers might also have a graphics card. This helps the computer create visual effects, like 3D worlds or more realistic colors. Powerful graphics cards can make more detailed images, like a skilled artist.

Largest Computer Companies

| Company name | Sales (US$ million) |
|---|---|
| | 220,000 |
| | 212,680 |
| | 132,070 |
| | 112,300 |
| | 99,750 |
| | 87,510 |
| | 86,830 |
| | 74,450 |
| | 72,340 |
| | 70,830 |
| | 59,820 |
| | 56,940 |
| | 56,200 |
| | 54,750 |
| | 52,700 |

The Future of Computers

Scientists are actively working on new types of computers. These include optical computers, DNA computers, neural computers, and quantum computers. Most computers today are "universal." This means they can calculate almost any function. They are only limited by how much memory they have and how fast they work. However, different computer designs can be much better for certain problems. For example, quantum computers might be able to break some modern encryption very quickly.

Artificial Intelligence

Computer programs that can learn and adapt are part of artificial intelligence (AI) and machine learning.
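One of the oldest and simplest learning programs is called a perceptron. The Python sketch below is a toy: it learns the "AND" rule (the answer is 1 only when both inputs are 1) just by looking at examples and fixing its numbers after each mistake. The function names and the `epochs` and `learning_rate` settings are chosen for this example. Modern AI systems are far larger and more complex, but many of them also learn by adjusting numbers a little after each mistake.

```python
def train_perceptron(examples, epochs=10, learning_rate=1):
    """Learn weights for a simple yes/no rule from labeled examples."""
    w1, w2, bias = 0, 0, 0
    for _ in range(epochs):
        for (x1, x2), target in examples:
            guess = 1 if w1 * x1 + w2 * x2 + bias > 0 else 0
            error = target - guess  # 0 if right, +1 or -1 if wrong
            # Nudge the numbers in the direction that fixes the mistake.
            w1 += learning_rate * error * x1
            w2 += learning_rate * error * x2
            bias += learning_rate * error
    return w1, w2, bias

def predict(weights, x1, x2):
    w1, w2, bias = weights
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0

# Examples of the "AND" rule.
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights = train_perceptron(and_examples)
print([predict(weights, a, b) for (a, b), _ in and_examples])  # prints [0, 0, 0, 1]
```

Nobody typed the AND rule into the program. If you give it examples of a different simple rule, such as "OR", the same code learns that rule instead.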

AI and machine learning are used in many important apps today. These include:

- Search engines like Google Search.

- Targeted advertising, which shows you online ads you might like (like AdSense and Facebook).

- Recommendation systems from Netflix, YouTube, or Amazon.

- Helping to direct internet traffic.

- Virtual assistants such as Siri or Alexa.

- Autonomous vehicles like drones and self-driving cars.

- Automatic language translation (like Microsoft Translator and Google Translate).

- Facial recognition (like Apple's Face ID and Google's FaceNet).

- Image labeling (used by Facebook, Apple's iPhoto, and TikTok).

There are also thousands of successful AI programs that solve specific problems for businesses. For example, AI is used in energy storage, medical diagnosis, and predicting court decisions.

Game-playing programs have been used since the 1950s to test AI. Deep Blue was the first computer to beat a world chess champion, Garry Kasparov, in 1997. In 2011, IBM's question answering system, Watson, beat the two greatest champions of the quiz show Jeopardy!.

In March 2016, AlphaGo won 4 out of 5 games of Go against champion Lee Sedol. It became the first computer Go program to beat a top-ranked (9-dan) professional player without handicaps (extra stones given to the weaker player). Then, in 2017, it defeated Ke Jie, who was the world's top Go player. Other programs can play games with imperfect information, like poker, at a very high level. DeepMind in the 2010s created a "general AI" that could learn many different Atari games on its own.

In the early 2020s, generative AI became very popular. ChatGPT, based on GPT-3.5, and other large language models were used by many people. AI-based text-to-image generators like Midjourney, DALL-E, and Stable Diffusion were used to create many viral AI-generated images.

AlphaFold 2 (2020) showed it could predict the 3D structure of a protein in hours, instead of months.

Images for kids

-

The Ishango bone, a bone tool from prehistoric Africa.

-

The Chinese suanpan (算盘). The number shown on this abacus is 6,302,715,408.

-

The Antikythera mechanism, from ancient Greece (circa 150–100 BC), is an early analog computing device.

-

A slide rule.

-

A portion of Babbage's Difference engine.

-

Sir William Thomson's third tide-predicting machine design, 1879–81.

-

Replica of Konrad Zuse's Z3, the first fully automatic, digital (electromechanical) computer.

-

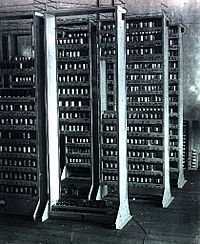

Colossus, the first electronic digital programmable computing device, used to break German codes during World War II. It is seen here in use at Bletchley Park in 1943.

-

ENIAC was the first electronic, Turing-complete device. It calculated ballistics for the United States Army.

-

A section of the Manchester Baby, the first electronic stored-program computer.

-

Bipolar junction transistor (BJT).

-

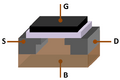

MOSFET (MOS transistor), showing gate (G), body (B), source (S) and drain (D) terminals. The gate is separated from the body by an insulating layer (pink).

-

Magnetic-core memory (using magnetic cores) was the computer memory of choice in the 1960s, until it was replaced by semiconductor memory (using MOS memory cells).

-

Hard disk drives are common storage devices used with computers.

-

Cray designed many supercomputers that used multiprocessing heavily.

-

Replica of the Manchester Baby, the world's first electronic stored-program computer, at the Museum of Science and Industry in Manchester, England.

-

A 1970s punched card containing one line from a Fortran program. The card reads: "Z(1) = Y + W(1)" and is labeled "PROJ039" for identification purposes.

See also

In Spanish: Computadora para niños
