History of computer animation facts for kids

The history of computer animation began in the 1940s and 1950s, when people like John Whitney started experimenting with computer graphics. By the early 1960s, digital computers had become more common. This opened up new ways to create amazing computer graphics.

At first, computers were used mostly for science and engineering. But by the mid-1960s, artists like Dr. Thomas Calvert started experimenting. By the mid-1970s, computer graphics began appearing in public media. Much of this early work was 2-D. As computers got more powerful, people focused on making realistic 3-D images. By the late 1980s, super realistic 3-D animation appeared in movies. By the mid-1990s, it was good enough for entire feature films.

Contents

- Early Days: 1940s to Mid-1960s

- Mid-1960s to Mid-1970s: The Rise of 3-D

- The University of Utah: A Graphics Hub

- Evans and Sutherland: Hardware for Graphics

- First Computer-Animated Character

- Ohio State: Art and Computers

- Cybernetic Serendipity Exhibition

- Scanimate: Real-Time TV Animation

- National Film Board of Canada: Early Experiments

- Atlas Computer Laboratory and Antics Software

- First Digital Animation in a Feature Film

- SIGGRAPH: A Meeting Place

- Towards 3-D: Mid-1970s into the 1980s

- The 1980s: New Horizons

- Silicon Graphics, Inc (SGI): Powerful Workstations

- Quantel: TV Graphics Revolution

- Osaka University: Supercomputing for Graphics

- 3-D Animated Films at the University of Montreal

- Sun Microsystems: Powering Animation

- National Film Board of Canada: CGI Studio

- First Turnkey Broadcast Animation System

- First Solid 3-D CGI in Movies

- Inbetweening and Morphing: Smooth Transitions

- 3-D Inbetweening

- The Abyss: Seamless CGI

- Walt Disney and CAPS: Digital Animation for Cartoons

- 3-D Animation Software in the 1980s

- CGI in the 1990s: Explosion of Animation

- 3-D Animation Software in the 1990s

- CGI in the 2000s

- 3-D Animation Software in the 2000s

- CGI in the 2010s

- Future Developments

Early Days: 1940s to Mid-1960s

John Whitney: A Pioneer

John Whitney Sr. (1917–1995) was an American artist and inventor. Many call him one of the fathers of computer animation. In the 1940s and 1950s, he and his brother James made experimental films. They used a special machine built from old military computers. This machine controlled lights and objects, creating the first motion control photography.

One of Whitney's famous works was the animated title sequence for Alfred Hitchcock's 1958 film Vertigo. His techniques also inspired the slit-scan special effects in Stanley Kubrick's 1968 film 2001: A Space Odyssey.

The First Digital Picture

The SEAC computer started working in 1950 in the USA. In 1957, computer expert Russell Kirsch and his team created the first digital image. They scanned a photo of Kirsch's three-month-old son. This picture had only 176x176 pixels. They used the computer to draw lines, count objects, and show images on a screen. This was a huge step for all future computer imaging. Life magazine even called it one of the "100 Photographs That Changed the World."

By the late 1950s and early 1960s, large computers were common in universities. They often had screens and plotters for graphics. This opened up a new world for experiments.

First Computer-Drawn Movie

In 1960, a short 49-second animation was made in Sweden. It showed a car driving on a highway. It was created on the BESK computer at the Royal Institute of Technology. A special camera filmed images from a screen. It took a picture every twenty meters of the virtual road. The animation showed a fictional trip at 110 km/h. This short film was even shown on national TV in 1961.

Bell Labs: Creative Computers

Bell Labs in New Jersey was a leader in computer graphics and animation in the 1960s. Researchers like Edward Zajac, Michael Noll, and Ken Knowlton became early computer artists.

Edward Zajac made one of the first computer-generated films in 1963. It showed how a satellite could stay stable in orbit.

Ken Knowlton created the Beflix (Bell Flicks) animation system in 1963. It used simple commands like "draw a line" or "fill an area." Artists used Beflix to make many creative films.

In 1965, Michael Noll made 3-D movies with stick figures dancing. He also used 4-D animation for movie titles.

Boeing and the "Boeing Man"

In the 1960s, William Fetter worked as a graphic designer for Boeing. He is often credited with coining the term "Computer Graphics." Fetter created the first 3-D animated wire-frame human figures in 1964. These figures were used to study how people fit into different environments. They became famous as the "Boeing Man."

Ivan Sutherland: Interactive Graphics

Ivan Sutherland is known as the creator of interactive computer graphics. In 1962, he developed a program called Sketchpad I. This program let users draw directly on the screen. It is considered the first graphical user interface (GUI) and one of the most important computer programs ever written.

Mid-1960s to Mid-1970s: The Rise of 3-D

The University of Utah: A Graphics Hub

The University of Utah became a major center for computer animation. In the early 1970s, many basic 3-D computer graphics techniques were developed here. This included ways to make surfaces look smooth (shading), hide parts of objects, and create realistic images. Many important people in computer graphics either studied or worked at the University of Utah.

Evans and Sutherland: Hardware for Graphics

In 1968, Ivan Sutherland and David Evans started the company Evans & Sutherland. They were both professors at the University of Utah. Their company made new hardware to run the graphics systems developed at the university. Many of their ideas led to important inventions like the head-mounted display and flight simulators. Famous people like Ed Catmull (who co-founded Pixar) and John Warnock (who co-founded Adobe Systems) worked there.

First Computer-Animated Character

In 1968, a group of Soviet scientists led by N. Konstantinov created a mathematical model for a cat's movement. They used a BESM-4 computer to animate a walking cat. The computer printed hundreds of frames using alphabet symbols. These were then filmed to create the first computer animation of a character.

Ohio State: Art and Computers

Charles Csuri, an artist at Ohio State University (OSU), began using computer graphics for art in 1963. His work led to a big research lab for computer graphics. They studied animation languages, creating complex models, and how to make human and creature movements.

Cybernetic Serendipity Exhibition

In 1968, the arts journal Studio International published a special issue called Cybernetic Serendipity. It showed many examples of computer art from around the world and accompanied an exhibition of the same name in London. The exhibition was a big moment for computer art. Famous works included Charles Csuri's Chaos to Order (the Hummingbird) and Masao Komura's Running Cola is Africa.

Scanimate: Real-Time TV Animation

Scanimate was an analog computer animation system. It was one of the first machines to get a lot of public attention. From 1969, Scanimate systems were used to make many TV animations. This included commercials and show titles. It could create animations in real time, which was a big advantage over digital systems back then.

National Film Board of Canada: Early Experiments

The National Film Board of Canada also started experimenting with computer techniques in 1969. Artist Peter Foldes made Metadata in 1971. This film used "interpolating" (also called "inbetweening" or "morphing") to smoothly change one image into another. In 1974, Foldes made Hunger / La Faim. This was one of the first films to show solid-filled (raster scanned) rendering. It won an award at the 1974 Cannes Film Festival.

Atlas Computer Laboratory and Antics Software

The Atlas Computer Laboratory in Britain was important for computer animation. Tony Pritchett made The Flexipede in 1968. In 1972, Colin Emmett and Alan Kitching developed solid-filled color rendering for TV shows.

In 1973, Kitching created "Antics" software. It let users create animation without needing to program. It worked like traditional "cel" animation but with many digital tools. Antics could produce full-color output using the Technicolor process. It was used for many animations, including the documentary Finite Elements in 1975.

From the early 1970s, computer animation focused on making more realistic 3-D images and visual effects for movies.

First Digital Animation in a Feature Film

The 1973 film Westworld was the first feature film to use digital image processing. John Whitney, Jr., and Gary Demos processed film footage to make it look pixelized. This showed the robot's point of view. They used a special process to convert film frames into rectangular blocks of color.
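The idea of turning a picture into blocks of color can be sketched in a few lines of Python. This is only an illustration, not the process Whitney and Demos actually used: it assumes a grayscale image stored as a list of rows, and the function name `pixelate` and the tiny sample `frame` are made up for this example.

```python
def pixelate(image, block):
    """Replace every block x block tile of a grayscale image with its average."""
    height = len(image)
    width = len(image[0])
    result = [row[:] for row in image]  # copy so the original stays unchanged
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [image[y][x] for y in rows for x in cols]
            average = sum(tile) // len(tile)
            # Paint the whole tile with one flat value.
            for y in rows:
                for x in cols:
                    result[y][x] = average
    return result


# A hypothetical 4 x 4 frame with brightness values from 0 to 255.
frame = [
    [0, 10, 200, 210],
    [20, 30, 220, 230],
    [100, 100, 50, 50],
    [100, 100, 50, 50],
]
blocky = pixelate(frame, 2)
print(blocky[0])  # [15, 15, 215, 215]
```

A larger `block` value gives a chunkier picture. A color version would average the red, green, and blue values of each tile separately.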

SIGGRAPH: A Meeting Place

In 1967, Sam Matsa helped form SICGRAPH (Special Interest Committee on Computer Graphics), the group that later became SIGGRAPH. In 1974, the first SIGGRAPH conference on computer graphics was held. This yearly conference quickly became the main place to show new ideas in the field.

Towards 3-D: Mid-1970s into the 1980s

Early 3-D Animation in Movies

The first time 3-D wireframe images were used in a major movie was in Futureworld (1976). This film showed a computer-generated hand and face. These were created by University of Utah students Edwin Catmull and Fred Parke. The 1975 animated short film Great also had a brief wireframe model.

Star Wars (1977) also used wireframe images for things like the Death Star plans and targeting computers.

The Walt Disney film The Black Hole (1979) used wireframe rendering for the black hole. Alien (1979) also used wire-frame graphics for spaceship monitors.

Nelson Max: Scientific Visualization

Lawrence Livermore Labs made big advances in computer animation. Nelson Max joined in 1971. His 1976 film Turning a sphere inside out is a classic. He also made realistic animations of molecules. This showed how computer graphics could help visualize science.

NYIT: A Hub of Innovation

In 1974, Alex Schure started the Computer Graphics Laboratory (CGL) at the New York Institute of Technology (NYIT). He gathered top experts and artists, including Ed Catmull and Alvy Ray Smith. They created new ways to render images and developed important software like Tween and Paint.

Many famous videos came from NYIT, like Sunstone and Inside a Quark. The Works was meant to be the first full-length CGI film, but it was never finished. NYIT's lab was seen as the best computer animation research group in the world.

George Lucas, who created Star Wars, was impressed by NYIT's work. In 1979, he hired top talent from NYIT, including Catmull and Smith. They started his computer graphics division, which later became Pixar in 1986.

Framebuffer: The Digital Canvas

A framebuffer (or framestore) is a special memory that holds data for a complete screen image. It's like a digital canvas. Before framebuffers, computer displays only drew lines. In 1969, A. Michael Noll at Bell Labs designed an early framebuffer.

The development of new memory chips made framebuffers practical. In 1972–1973, Richard Shoup at Xerox PARC developed the SuperPaint system. It used a framebuffer with 640x480 pixels and 256 colors. SuperPaint had all the basic tools of modern paint programs. It was the first complete computer system for painting and editing images.

The first commercial framebuffer was made by Evans & Sutherland in 1974. It cost about $15,000. NYIT later created the first full-color 24-bit RGB framebuffer.

By the late 1970s, even personal computers like the Apple II had low-color framebuffers. By the 1990s, framebuffers became standard for all personal computers.
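To see why memory chips mattered so much, it helps to count the bytes a framebuffer needs. The sketch below is a simplified model, not the design of SuperPaint or any real machine. The class name `Framebuffer` and its methods are invented for this example. It assumes one byte per pixel for 256 colors and three bytes per pixel for 24-bit RGB.

```python
class Framebuffer:
    """A block of memory holding one value per pixel of the screen image."""

    def __init__(self, width, height, bytes_per_pixel=1):
        self.width = width
        self.height = height
        self.bytes_per_pixel = bytes_per_pixel
        # One flat array of bytes, all starting at zero (black).
        self.memory = bytearray(width * height * bytes_per_pixel)

    def _offset(self, x, y):
        # Pixels are stored row by row, left to right.
        return (y * self.width + x) * self.bytes_per_pixel

    def set_pixel(self, x, y, value):
        start = self._offset(x, y)
        data = value.to_bytes(self.bytes_per_pixel, "big")
        self.memory[start:start + self.bytes_per_pixel] = data

    def get_pixel(self, x, y):
        start = self._offset(x, y)
        return int.from_bytes(self.memory[start:start + self.bytes_per_pixel], "big")


indexed = Framebuffer(640, 480, bytes_per_pixel=1)    # 256 colors
full_color = Framebuffer(640, 480, bytes_per_pixel=3)  # 24-bit RGB

print(len(indexed.memory))     # 307200 bytes
print(len(full_color.memory))  # 921600 bytes

full_color.set_pixel(10, 20, 0xFF8000)  # an orange pixel
print(hex(full_color.get_pixel(10, 20)))  # 0xff8000
```

Under these assumptions, a 640x480 image with 256 colors needs about 300 kilobytes, and a 24-bit version needs three times as much. That was a great deal of memory in the 1970s.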

Fractals: Nature's Geometry

A big step towards realistic 3-D animation came with "fractals." Mathematician Benoit Mandelbrot coined the term in 1975. Fractals describe complex, self-repeating patterns found in nature.

In 1979–80, Loren Carpenter made the first film using fractals. Titled Vol Libre, it showed a flight over a fractal landscape. Carpenter later joined the Lucasfilm computer graphics group that became Pixar. There he created the fractal planet in Star Trek II: The Wrath of Khan (1982).

JPL and Jim Blinn: Space Animations

NASA's Jet Propulsion Laboratory (JPL) started a Computer Graphics Lab in 1977. They visualized data from NASA missions. Jim Blinn joined JPL and created many famous "fly-by" simulations. These showed spacecraft like Voyager and Galileo flying past planets and moons. He also made animations for Carl Sagan's TV series Cosmos: A Personal Voyage. Blinn developed new modeling techniques, like environment mapping and a way to simulate wrinkled surfaces (called bump mapping).

Later, Blinn made CGI animations for the TV series The Mechanical Universe. This show explained physics and math concepts.

Motion Control Photography: Precise Camera Moves

Motion control photography uses computers to record and repeat camera movements. This helps combine filmed objects with CGI elements perfectly. Early forms were used in 2001: A Space Odyssey (1968) and Star Wars Episode IV: A New Hope (1977). Industrial Light & Magic (ILM) created the Dykstraflex camera for Star Wars. This camera could perform complex, repeatable motions. The first commercial computer-based motion control system was developed in the UK in 1981.

3-D Software for Home Computers

3D computer graphics software started appearing for home computers in the late 1970s. One of the earliest was 3D Art Graphics for the Apple II in 1978.

The 1980s: New Horizons

The 1980s saw many new developments in computer hardware. Framebuffer technology became common in graphic workstations. Computers also became more powerful and affordable.

Silicon Graphics, Inc (SGI): Powerful Workstations

Silicon Graphics, Inc (SGI) made high-performance computers. Jim Clark founded SGI in 1981. His idea was to create special chips called the Geometry Engine. These chips would speed up image creation. SGI's first product in 1984 was the IRIS. It was a powerful workstation for 3D graphics. SGI computers were a favorite choice for CGI companies in film and TV for many years.

Quantel: TV Graphics Revolution

In 1981, Quantel released the "Paintbox." This was the first system designed for creating TV video and graphics. It was easy to use and quickly became popular for news, weather, and commercials. It revolutionized TV graphics.

In 1982, Quantel released the "Quantel Mirage." This was a real-time 3D video effects processor. It could warp live video onto 3D shapes. In 1985, Quantel made "Harry," the first all-digital non-linear editing system.

Osaka University: Supercomputing for Graphics

In 1982, Japan's Osaka University developed the LINKS-1 Computer Graphics System. This supercomputer used many microprocessors to create realistic 3D images. It was used to make the world's first 3D planetarium-like video of the entire sky. This video was shown at the 1985 International Exposition in Japan. The LINKS-1 was the most powerful computer in the world in 1984.

3-D Animated Films at the University of Montreal

The University of Montreal was a leader in computer animation in the 1980s. They made three successful short 3-D animated films with 3-D characters.

In 1983, Philippe Bergeron, Nadia Magnenat Thalmann, and Daniel Thalmann directed Dream Flight. It's considered the first 3-D film to tell a story. It won several awards.

In 1985, a team directed Tony de Peltrie. This film showed the first animated human character to express emotion through facial expressions. It deeply moved the audience.

In 1987, Nadia Magnenat Thalmann and Daniel Thalmann created Rendez-vous in Montreal. This short movie showed Marilyn Monroe and Humphrey Bogart meeting in a cafe. It was shown worldwide.

Sun Microsystems: Powering Animation

The Sun Microsystems company was founded in 1982. Their computers were popular for CAD and later for rendering 3-D CGI films. For example, Disney-Pixar's 1995 movie Toy Story used a render farm of 117 Sun workstations. Sun was a big supporter of open source software.

National Film Board of Canada: CGI Studio

In 1980, the NFB's French animation studio opened its Centre d'animatique. This team of computer graphics specialists created CGI sequences for the NFB's 3-D IMAX film Transitions for Expo 86. Daniel Langlois, who later founded Softimage, worked there.

First Turnkey Broadcast Animation System

In 1982, the Japanese company Nippon Univac Kaisha (NUK) made the first complete system for broadcast animation. It used the Antics 2-D computer animation software. This system was sold to broadcasters and animation companies across Japan. Later, Antics was developed for SGI and Apple Mac computers, reaching a wider audience.

First Solid 3-D CGI in Movies

The first movie to use a lot of solid 3-D CGI was Walt Disney's Tron in 1982. Less than twenty minutes of animation were used, mainly for digital "terrain" and vehicles like Light Cycles. Disney worked with four leading computer graphics firms to create the CGI scenes. Tron was a box office success.

In 1984, The Last Starfighter used extensive CGI for its starships and battle scenes. This was a big step forward compared to other films that still used physical models. Digital Productions rendered the graphics on a Cray X-MP supercomputer. They produced 27 minutes of CGI footage, which was a huge amount at the time. The company estimated that CGI was faster and cheaper than traditional visual effects.

Inbetweening and Morphing: Smooth Transitions

Inbetweening and morphing mean creating a sequence of images where one image smoothly changes into another. In traditional animation, "inbetweening" fills the gaps between key drawings. In computer animation, it was used from the beginning, often called "interpolation."
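Here is a minimal sketch of computer inbetweening using straight-line (linear) interpolation. It assumes each key drawing is a list of (x, y) points and that both drawings have the same number of points in the same order. The function name `inbetween` and the two sample shapes are made up for this example. Real animation systems use smoother curves as well.

```python
def inbetween(start_shape, end_shape, steps):
    """Return steps + 1 frames that move each point from start to end."""
    frames = []
    for i in range(steps + 1):
        t = i / steps  # 0.0 at the first key drawing, 1.0 at the last
        frame = [
            (x0 + (x1 - x0) * t, y0 + (y1 - y0) * t)
            for (x0, y0), (x1, y1) in zip(start_shape, end_shape)
        ]
        frames.append(frame)
    return frames


# Two hypothetical key drawings: a square and a diamond.
square = [(0, 0), (4, 0), (4, 4), (0, 4)]
diamond = [(2, 0), (4, 2), (2, 4), (0, 2)]

frames = inbetween(square, diamond, 4)
print(frames[2])  # the halfway frame: [(1.0, 0.0), (4.0, 1.0), (3.0, 4.0), (0.0, 3.0)]
```

The animator only draws the square and the diamond. The computer fills in the three frames between them.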

The term "morphing" became popular in the late 1980s. It specifically referred to computer inbetweening with photographic images, like transforming one face into another. This technique uses grids to track key features and smoothly blend images. Early examples of photographic image distortion were done by NASA in the 1960s. Texture mapping, a related technique that wraps a picture onto a 3-D surface, was defined in 1976.

The first full demonstration of morphing was at the 1982 SIGGRAPH conference. Tom Brigham showed a woman transforming into a lynx. The first movie to use morphing was Willow (1988).

3-D Inbetweening

With 3-D CGI, inbetweening of realistic computer models can also look like morphing. Nelson Max's 1976 film Turning a sphere inside out is an early example. The 1986 film Star Trek IV: The Voyage Home used this technique. Industrial Light & Magic (ILM) digitized the actors' heads using new 3D scanning technology. This allowed their faces to smoothly transform into one another.

The Abyss: Seamless CGI

In 1989, James Cameron's movie The Abyss was released. It was one of the first films to seamlessly blend realistic CGI into live-action scenes. ILM created a five-minute sequence with an animated water tentacle. This successful mix of CGI and live-action was a big step for future developments.

Walt Disney and CAPS: Digital Animation for Cartoons

The Great Mouse Detective (1986) was the first Disney film to use computer animation extensively. CGI was used for a two-minute climax scene inside Big Ben.

In the late 1980s, Disney and Pixar developed the "Computer Animation Production System" (CAPS). This system computerized the "ink-and-paint" and post-production parts of traditional animation. It scanned animators' drawings and painted them digitally. CAPS also allowed combining hand-drawn art with 3-D CGI.

CAPS was first used in The Little Mermaid (1989). The first full-scale use was for The Rescuers Down Under (1990). This made it the first traditionally animated film to be entirely produced on computer.

3-D Animation Software in the 1980s

The 1980s saw many new commercial software products:

- 1982: Autodesk Inc was founded. Their first full 3-D animation software was 3D Studio for DOS in 1990.

- 1983: Alias Research was founded in Canada. Their software Alias-1 shipped in 1985. Alias was used for the pseudopod in The Abyss. This led to PowerAnimator in 1990.

- 1984: Wavefront was founded. They developed Preview and later Personal Visualiser.

- 1986: Softimage was founded by Daniel Langlois. Their Softimage Creative Environment (later Softimage 3D) integrated all 3-D processes.

- 1987: Side Effects Software was established. They developed PRISMS into Houdini, a powerful 3D package.

CGI in the 1990s: Explosion of Animation

Computer Animation Expands in Film and TV

The 1990s saw the first computer-animated TV series. Quarxs (1991) was an early CGI series with a real story. VeggieTales was also one of the first computer-animated series for children.

1991 was a "breakout year" for CGI in movies.

- James Cameron's Terminator 2: Judgment Day brought CGI to widespread public attention. ILM used CGI to animate the shapeshifting "T-1000" robot.

- Disney's Beauty and the Beast was the second 2-D animated film made entirely with CAPS. It combined hand-drawn art with 3-D CGI, like in the "waltz sequence." It was the first animated film nominated for a Best Picture Academy Award.

Another big step came in 1993 with Steven Spielberg's Jurassic Park. Realistic 3-D CGI dinosaurs were combined with life-sized animatronic models. Spielberg decided to use CGI dinosaurs after seeing a test made by ILM. George Lucas said, "a major gap had been crossed, and things were never going to be the same."

Warner Bros' 1999 The Iron Giant was the first traditionally-animated film where a main character was fully CGI.

Flocking is when a group of animals moves together. Craig Reynolds simulated this in 1986. Jurassic Park famously featured flocking dinosaurs. Other early uses were bats in Batman Returns (1992) and wildebeests in The Lion King (1994).
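Flocking simulations like Reynolds's are usually described with three simple rules: stay close to the group (cohesion), match the group's direction (alignment), and avoid bumping into neighbors (separation). The sketch below is a simplified illustration of those rules, not Reynolds's original program. The function name `step` and all the rule weights are assumptions chosen for this example.

```python
import math


def step(boids, cohesion=0.05, alignment=0.1, separation=0.2, min_distance=1.0):
    """Advance every boid one time step. Each boid is (x, y, vx, vy)."""
    new_boids = []
    for i, (x, y, vx, vy) in enumerate(boids):
        others = [b for j, b in enumerate(boids) if j != i]
        n = len(others)
        # Average position and velocity of the rest of the flock.
        center_x = sum(b[0] for b in others) / n
        center_y = sum(b[1] for b in others) / n
        avg_vx = sum(b[2] for b in others) / n
        avg_vy = sum(b[3] for b in others) / n
        # Separation: push away from any neighbor that is too close.
        push_x = push_y = 0.0
        for ox, oy, _, _ in others:
            if math.hypot(ox - x, oy - y) < min_distance:
                push_x += x - ox
                push_y += y - oy
        vx += (center_x - x) * cohesion + (avg_vx - vx) * alignment + push_x * separation
        vy += (center_y - y) * cohesion + (avg_vy - vy) * alignment + push_y * separation
        new_boids.append((x + vx, y + vy, vx, vy))
    return new_boids


# Two hypothetical boids that start 10 units apart and standing still.
flock = [(0.0, 0.0, 0.0, 0.0), (10.0, 0.0, 0.0, 0.0)]
flock = step(flock)
print(flock[1][0] - flock[0][0])  # the gap shrinks as cohesion pulls them together
```

Each boid only follows its own small rules, yet the whole group ends up moving together. This sketch compares every boid with every other one, which is fine for a small flock but slow for thousands of animals.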

CGI techniques quickly became popular in film and TV. In 1993, Babylon 5 became the first major TV series to use CGI as its main visual effects. SeaQuest DSV followed later that year.

Also in 1993, the French company Studio Fantome produced Insektors, the first full-length completely computer-animated TV series. In 1994, the Canadian CGI series ReBoot aired.

In 1995, the first fully computer-animated feature film, Disney-Pixar's Toy Story, was a huge success. Directed by John Lasseter, it followed Pixar's earlier short films like Luxo Jr. (1986) and Tin Toy (1988).

After Toy Story, many new studios started using digital animation. By the early 2000s, computer-generated imagery became the main form of special effects.

Motion-Capture: Recording Movement

Motion-capture, or "Mo-cap", records the movement of people or objects. It's used in medicine, sports, and animation. Before computers, animators used Rotoscoping. They filmed an actor and then traced their movements frame by frame.

Computer-based motion-capture started in the 1970s. A performer wears markers near their joints. These markers are tracked by a computer. The data is then used to animate digital characters. Some systems even capture face and finger movements. This is called "performance-capture." In the 1990s, motion-capture became widely used for visual effects.

Video games also started using motion-capture. In 1988, Vixen used an early form of mo-cap for its 2-D character. In 1994, Virtua Fighter 2 used it for 3-D characters.

A big breakthrough was using motion-capture for hundreds of digital characters in Titanic (1997). It was also used extensively for Jar Jar Binks in Star Wars: Episode I – The Phantom Menace (1999).

Match Moving: Adding CGI to Live Footage

Match moving (also called motion tracking or camera tracking) is different from motion capture. It uses software to track points in existing live-action video. This allows CGI elements to be added to the shot with the correct position, size, and movement.

The software identifies features like bright spots or edges. It tracks these features across frames. This creates 2-D paths. These paths are then used to calculate 3-D information about the camera's movement. This lets a virtual camera in an animation program move exactly like the real camera. New animated elements can then be perfectly composited into the live-action shot.
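The first part of that process, following a bright spot from frame to frame to build a 2-D path, can be sketched as below. This is a toy version under simple assumptions: each frame is a small grayscale grid, the feature is the brightest pixel, and it never moves more than `radius` pixels between frames. The names `brightest_near`, `track`, and `make_frame` are made up for this example. Working out the camera's 3-D movement from such paths takes much more math and is not shown.

```python
def brightest_near(frame, center, radius):
    """Find the brightest pixel within `radius` of `center` (x, y)."""
    cx, cy = center
    best = None
    for y in range(max(0, cy - radius), min(len(frame), cy + radius + 1)):
        for x in range(max(0, cx - radius), min(len(frame[0]), cx + radius + 1)):
            if best is None or frame[y][x] > frame[best[1]][best[0]]:
                best = (x, y)
    return best


def track(frames, start, radius=1):
    """Follow a feature from its starting position through every later frame."""
    path = [start]
    for frame in frames[1:]:
        # Search only near the last known position.
        path.append(brightest_near(frame, path[-1], radius))
    return path


def make_frame(spot_x, spot_y, size=4):
    """Build a dark test frame with a single bright pixel."""
    frame = [[0] * size for _ in range(size)]
    frame[spot_y][spot_x] = 255
    return frame


# A hypothetical shot where the bright spot drifts diagonally.
frames = [make_frame(0, 0), make_frame(1, 1), make_frame(2, 2)]
path = track(frames, start=(0, 0))
print(path)  # [(0, 0), (1, 1), (2, 2)]
```

Real match moving software tracks many features at once and compares small patches of the image instead of single pixels, which makes the tracking far more reliable.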

In the 1990s, this technology improved. It became possible to add virtual stunt doubles and crowds. Match moving is now an essential visual effects tool.

Virtual Studio: Blending Real and Digital

In TV, a virtual studio (or virtual set) combines real people with computer-generated environments in real time. The 3-D CGI environment automatically follows the live camera's movements. This uses camera tracking data and real-time CGI rendering. The two streams are then combined, often using chroma key (green screen). Virtual sets became common in TV in the 1990s. The first practical system was Synthevision by NHK in Japan in 1991.

Virtual studio techniques are also used in filmmaking. As computers became more powerful, many virtual film sets could be generated in real time.
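The chroma key step mentioned above can be sketched very simply: wherever the camera image is green, show the computer-generated background instead. The test for "green" used here is a made-up rule with an assumed threshold, and the names `is_key_green` and `chroma_key` are invented for this example. Broadcast systems use more careful color math and soften the edges.

```python
def is_key_green(pixel, threshold=100):
    """Treat a pixel as green screen if green is much stronger than red and blue."""
    r, g, b = pixel
    return g - max(r, b) > threshold


def chroma_key(foreground, background):
    """Combine two same-sized RGB images, replacing green foreground pixels."""
    return [
        [bg if is_key_green(fg) else fg for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]


GREEN = (0, 255, 0)      # the studio's green screen
SKIN = (220, 180, 150)   # part of the presenter
SKY = (90, 140, 255)     # part of the virtual set

camera = [
    [GREEN, SKIN],
    [GREEN, GREEN],
]
virtual_set = [
    [SKY, SKY],
    [SKY, SKY],
]

combined = chroma_key(camera, virtual_set)
print(combined[0])  # [(90, 140, 255), (220, 180, 150)]
```

The presenter's pixels are kept, and every green pixel is swapped for the matching pixel of the virtual set.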

Machinima: Movies from Games

Machinima uses real-time 3-D computer graphics engines, often from video games, to create movies. The Academy of Machinima Arts & Sciences defines it as "animated filmmaking within a real-time virtual 3-D environment." This practice grew from animated software introductions and recordings of gameplay in games like Doom and Quake.

3-D Animation Software in the 1990s

The 1990s saw many changes in the 3-D software industry.

- Wavefront released Dynamation in 1992. In 1995, Wavefront was bought by Silicon Graphics and merged with Alias.

- Alias Research continued with PowerAnimator for movies like Terminator 2: Judgment Day and Jurassic Park. In 1993, they started developing Maya. Alias products were used by major studios like Industrial Light & Magic and Pixar. In 1995, SGI bought both Alias Research and Wavefront, forming Alias|Wavefront.

- Alias|Wavefront focused on advanced digital content tools. PowerAnimator was used for Toy Story and Casper. In 1998, Alias|Wavefront launched Maya as its new flagship 3-D product. Maya combined features from several earlier packages. In 2006, Autodesk bought Alias.

- Softimage added features like inverse kinematics and flock animation. Softimage was used for hundreds of major films and games. In 1994, Microsoft acquired Softimage. In 2008, Autodesk acquired Softimage's animation assets.

- Autodesk Inc's 3D Studio was updated to 3D Studio Max for Windows NT in 1996. It was more affordable than competitors. 3D Studio Max is used widely in film, games, and design. In 2006, Autodesk acquired Alias, bringing Maya and 3D Studio Max under one company.

- Side Effects Software's PRISMS was used for visual effects in films like Twister and Titanic. In 1996, Side Effects Software introduced Houdini, a new 3D package. Houdini is used for cutting-edge 3D animation in film, broadcast, and gaming.

CGI in the 2000s

Capturing the Human Face

In 2000, a team led by Paul Debevec found a way to capture the reflectance field of a human face. This was the last piece needed to create realistic digital look-alikes of actors.

Motion-Capture, Photorealism, and the Uncanny Valley

The first mainstream film fully made with motion-capture was the 2001 Japanese-American film Final Fantasy: The Spirits Within. It was also the first to use photorealistic CGI characters. Some people thought the CGI characters looked a bit "off," falling into the "uncanny valley."

In 2002, Peter Jackson's The Lord of the Rings: The Two Towers used a real-time motion-capture system. This allowed actor Andy Serkis's movements to directly control the 3-D CGI model of Gollum.

Some people believe motion capture replaces animators' skills. However, animators often adjust the motion-capture data. In 2010, the Academy of Motion Picture Arts and Sciences, the group that awards the Oscars, decided that motion-capture films would no longer be eligible for "Best Animated Feature Film" Oscars, saying it's not animation by itself.

Virtual Cinematography: Digital Actors and Cameras

The early 2000s saw the arrival of virtual cinematography. This means creating and filming scenes entirely in a computer. The Matrix Reloaded and The Matrix Revolutions (2003) were the first films to show this. Their digital look-alikes were so convincing that it was hard to tell if an image was a real actor or a digital one. Scenes like the "Burly Brawl" would have been too difficult to make with traditional methods.

3-D Animation Software in the 2000s

- Blender is a free, open-source virtual cinematography package.

- Poser is a 3-D graphics program for animating soft objects.

- Pointstream Software is a professional program that tracks pixels from multiple cameras.

- Adobe Substance allows artists to create 3-D assets, models, materials, and lighting.

CGI in the 2010s

At SIGGRAPH 2013, Activision and USC showed a real-time digital face look-alike called "Ira." It looked very realistic. Techniques once only for high-end films are now moving into video games and other applications.

Future Developments

New developments in computer animation are shared every year at SIGGRAPH, a big conference on computer graphics. They are also presented at Eurographics and other conferences worldwide.

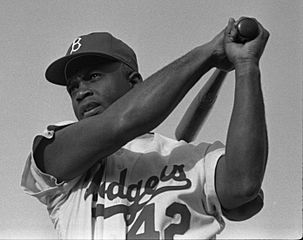

| Jackie Robinson |

| Jack Johnson |

| Althea Gibson |

| Arthur Ashe |

| Muhammad Ali |