History of computer science facts for kids

The history of computer science is a fascinating journey that started long before modern computers existed. It began with ideas from mathematics and physics. Over centuries, these ideas and inventions slowly led to what we now call computer science. This journey, from simple tools and mathematical theories to modern computers, created a huge new field of study, amazing technological progress, and the basis for a massive worldwide industry and culture.

Contents

- Early Days

- Binary Logic and Early Machines

- The Computer Science Field Begins

- Charles Babbage and Ada Lovelace

- Early Designs After Babbage

- Electrical Switching Circuits

- Alan Turing and the Turing Machine

- Kathleen Booth and Early Programming

- Early Computer Hardware

- Shannon and Information Theory

- Wiener and Cybernetics

- John von Neumann and Computer Architecture

- John McCarthy, Marvin Minsky, and Artificial Intelligence

- See also

Early Days

One of the earliest tools for counting was the abacus, which first appeared in Sumer sometime between 2700 and 2300 BCE. The early abacus was a table with columns drawn in sand, using pebbles to represent numbers. Later versions, like the Chinese abacus, are still used today.

In ancient India, around 500 BCE, a grammarian named Pāṇini wrote a very detailed and technical grammar of the Sanskrit language. He used special rules, transformations, and repeating (recursive) patterns, which are similar to ideas used in computer programming today.

The Antikythera mechanism is thought to be an early mechanical analog computer. It was designed to calculate astronomical positions, such as where planets would appear in the sky. It is about 2,100 years old and was found in 1901 in a shipwreck near the Greek island of Antikythera.

Mechanical analog computers appeared again much later, during the Islamic Golden Age. Muslim astronomers developed tools like the geared astrolabe and the torquetum. Muslim mathematicians also made big advances in cryptanalysis (code-breaking), including frequency analysis. Muslim engineers even invented programmable machines, such as an automatic flute player.

Later, in the 14th century, complex mechanical astronomical clocks appeared in Europe.

In the early 1600s, John Napier discovered logarithms, which made calculations easier. This led to many inventors creating new calculating tools. In 1623, Wilhelm Schickard designed a "Calculating Clock," but it was destroyed before he could finish it. Around 1640, the French mathematician Blaise Pascal built a mechanical adding machine. Then, in 1672, Gottfried Wilhelm Leibniz invented the Stepped Reckoner.

In 1837, Charles Babbage first described his Analytical Engine, which is seen as the first design for a modern computer. It would have had memory and a unit for doing arithmetic, and it could have been programmed with loops and conditional branches (choices). Even though it was never built, its design was far ahead of its time.

Binary Logic and Early Machines

In 1702, Gottfried Wilhelm Leibniz developed logic using the binary number system (which uses only 0s and 1s). He showed how logical ideas like "and," "or," and "not" could be expressed mathematically. He even thought about ideas similar to modern computer programs. Later, in 1961, Norbert Wiener said that Leibniz should be seen as the "patron saint" of cybernetics (the study of control and communication in machines and living things).

However, it was more than a century later, in 1854, that George Boole published his Boolean algebra. This was a complete system that allowed logical reasoning, and later computer processes, to be modeled mathematically.

By then, the first mechanical devices driven by binary patterns had already been invented. During the Industrial Revolution, machines took over many tasks, including weaving. In 1801, Joseph Marie Jacquard's loom used punched cards: a hole in the card meant a binary "one," and no hole meant a binary "zero." Jacquard's loom was not a computer, but it showed that machines could be controlled by binary patterns and could store binary information.
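To see how "and," "or," and "not" can be treated as math, here is a small Python sketch that uses 1 for true and 0 for false. It is a modern illustration in the spirit of Boole's algebra, not his original notation, and the function names `AND`, `OR`, and `NOT` are our own choices.

```python
# Logic as arithmetic on 0 (false) and 1 (true).
# A modern sketch in the spirit of Boolean algebra, not Boole's own notation.

def AND(x: int, y: int) -> int:
    """1 only when both inputs are 1."""
    return x * y


def OR(x: int, y: int) -> int:
    """1 when at least one input is 1."""
    return x + y - x * y


def NOT(x: int) -> int:
    """Swaps 0 and 1."""
    return 1 - x


if __name__ == "__main__":
    # Print a truth table covering every combination of inputs.
    print("x y | AND OR NOT(x)")
    for x in (0, 1):
        for y in (0, 1):
            print(f"{x} {y} |  {AND(x, y)}   {OR(x, y)}    {NOT(x)}")
```

Multiplication works as "and" because 1 × 1 is the only product of 0s and 1s that equals 1.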

The Computer Science Field Begins

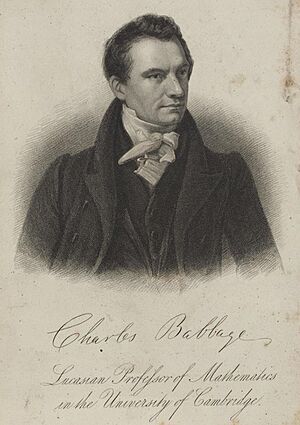

Charles Babbage and Ada Lovelace

Charles Babbage is often regarded as one of the first pioneers of computing. Beginning in the 1810s, he imagined machines that could calculate numbers and tables. He designed a calculator that could handle numbers with up to 8 decimal places, and then worked on a machine for numbers with up to 20 decimal places. By the 1830s, Babbage was planning a machine that could use punched cards to carry out math operations. This machine, called the "Analytical Engine," would store numbers in memory and follow a sequence of steps. It was the first real design for what a modern computer would be like.

Ada Lovelace (Augusta Ada Byron) was a brilliant mathematician who is often called the first computer programmer. She worked with Charles Babbage on his "Analytical Engine." During this time, she designed what is considered the first computer algorithm, a method for calculating Bernoulli numbers. She also predicted that future computers would not only do math but also work with symbols and other kinds of information. Even though the "Analytical Engine" was never built, her work from the 1840s was very important.
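Bernoulli numbers are a famous sequence of fractions (1, -1/2, 1/6, 0, -1/30, ...). The Python sketch below computes them with a modern recurrence; it is not the method Lovelace described in her notes, and the function name `bernoulli_numbers` is our own. It uses the common convention in which the second number is -1/2.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return the Bernoulli numbers B_0 through B_n as exact fractions.

    Uses the recurrence: for every m >= 1,
        sum over k = 0..m of comb(m + 1, k) * B_k = 0,
    solved for B_m at each step (this gives B_1 = -1/2).
    """
    b = [Fraction(0)] * (n + 1)
    b[0] = Fraction(1)
    for m in range(1, n + 1):
        # Add up the terms that use the numbers already computed.
        known = sum(comb(m + 1, k) * b[k] for k in range(m))
        # comb(m + 1, m) == m + 1, so dividing by it isolates B_m.
        b[m] = -known / (m + 1)
    return b


if __name__ == "__main__":
    for i, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{i} = {value}")
```

Using `Fraction` keeps every value exact, so no rounding errors build up from one step to the next.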

Early Designs After Babbage

After Babbage, others continued to explore computer designs. Percy Ludgate, a clerk from Ireland, independently designed a programmable mechanical computer, which he described in 1909.

Two other inventors, Leonardo Torres Quevedo and Vannevar Bush, also built on Babbage's ideas. In 1914, Torres designed an electromechanical calculating machine controlled by a program and introduced the idea of floating-point arithmetic (a way to write very large or very small numbers using digits plus a separate exponent that says where the decimal point goes). In 1920, he demonstrated an electromechanical calculator that could be connected to a typewriter to receive commands and print results. In 1936, Bush discussed using existing IBM punched card machines to build Babbage's design.

Electrical Switching Circuits

In 1886, Charles Sanders Peirce explained how logical operations could be carried out by electrical switching circuits. He also showed that a single kind of logic gate, either NOR or NAND, can be combined to build every other logic function. This work was not published until much later.
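Peirce's idea that one kind of gate is enough can be checked with a short Python sketch. It builds NOT, AND, OR, and XOR ("exclusive or") out of nothing but a NAND function; the function names are our own, and Python's built-in `not`/`and`/`or` are used only to define NAND itself and to check the results.

```python
def nand(a: bool, b: bool) -> bool:
    """The only basic gate: output is False only when both inputs are True."""
    return not (a and b)


def not_gate(a: bool) -> bool:
    return nand(a, a)  # feeding the same signal into both inputs flips it


def and_gate(a: bool, b: bool) -> bool:
    return not_gate(nand(a, b))  # NAND followed by NOT


def or_gate(a: bool, b: bool) -> bool:
    return nand(not_gate(a), not_gate(b))  # flip both inputs first


def xor_gate(a: bool, b: bool) -> bool:
    c = nand(a, b)  # the classic four-NAND construction of XOR
    return nand(nand(a, c), nand(b, c))


if __name__ == "__main__":
    # Compare every NAND-built gate against Python's own operators.
    for a in (False, True):
        for b in (False, True):
            assert and_gate(a, b) == (a and b)
            assert or_gate(a, b) == (a or b)
            assert xor_gate(a, b) == (a != b)
        assert not_gate(a) == (not a)
    print("All gates built from NAND behave correctly.")
```

The same trick works with NOR in place of NAND, which is why chip makers can build whole processors from just one type of gate.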

Eventually, vacuum tubes replaced older relays for logic operations. In 1924, Walther Bothe invented the first modern electronic AND gate. Konrad Zuse designed and built electromechanical logic gates for his computer, the Z1, in the late 1930s.

By the 1930s, engineers could build electronic circuits to solve math and logic problems, but they often did it without a clear theory. This changed with switching circuit theory. Between 1934 and 1938, Akira Nakashima, Claude Shannon, and Viktor Shestakov published papers showing that Boolean algebra could describe how switching circuits work. This idea, using electrical switches to do logic, is the basic concept behind all electronic digital computers.

Claude Shannon, inspired by George Boole's work, realized that Boolean algebra could be used to arrange electromechanical relays (used in telephone switches) to solve logic problems. His ideas became the foundation for designing practical digital circuits.
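Shannon's key observation can be sketched in a few lines of Python. Switches wired one after another (in series) let current through only if all of them are closed, which behaves like AND; switches wired side by side (in parallel) let current through if any one is closed, which behaves like OR. The lamp circuit here is a made-up example, not one taken from Shannon's work.

```python
def series(*switches: bool) -> bool:
    """Switches in a row: current flows only if every switch is closed (AND)."""
    return all(switches)


def parallel(*switches: bool) -> bool:
    """Switches side by side: current flows if any switch is closed (OR)."""
    return any(switches)


def lamp_is_on(a: bool, b: bool, c: bool) -> bool:
    """Hypothetical circuit: switch a in series with (b in parallel with c).

    Boolean algebra describes the same circuit as: a AND (b OR c).
    """
    return series(a, parallel(b, c))


if __name__ == "__main__":
    # True means a switch is closed; False means it is open.
    for a in (False, True):
        for b in (False, True):
            for c in (False, True):
                print(a, b, c, "->", "on" if lamp_is_on(a, b, c) else "off")
```

Because the wiring and the formula always agree, an engineer can simplify the formula with algebra first and then build the smaller circuit.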

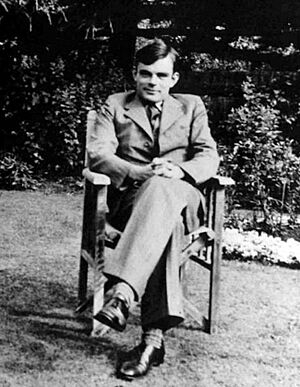

Alan Turing and the Turing Machine

Before the 1920s, "computers" were actually human clerks who did calculations. Many thousands of these human computers, often women, worked in businesses, government, and research. They did tasks like calculating astronomical positions for calendars or ballistic tables for the military.

After the 1920s, the term "computing machine" referred to any machine that did the work of a human computer. Machines that calculated with continuous values (like the angle of a shaft or a difference in electrical voltage) were called "analog" computers. Digital machines, by contrast, stored each individual digit of a number. Before faster memory devices were invented, they used the gears of difference engines, or relays, to do this.

The phrase "computing machine" slowly changed to just "computer" after the late 1940s, as electronic digital machines became common. These new computers could do the calculations that human clerks used to perform.

Since digital machines stored values as numbers, not physical properties, a logical computer based on digital equipment could do anything that could be described as "purely mechanical." The theoretical Turing Machine, created by Alan Turing, is a hypothetical device used to study what computers can and cannot do.

The mathematical foundations of modern computer science began to be laid by Kurt Gödel with his incompleteness theorems in 1931. These theorems showed that there are limits to what can be proven or disproven within a formal system.

In 1936, Alan Turing and Alonzo Church independently introduced the formal idea of an algorithm, defining what can be computed and a "purely mechanical" way of computing. This became the Church–Turing thesis. It suggests that any calculation that is possible can be done by an algorithm running on a computer, as long as there's enough time and storage.

Also in 1936, Alan Turing published his important work on Turing machines, which are abstract digital computing machines. This work contained the idea behind the stored-program concept, which almost all modern computers use. Turing machines were designed to work out, mathematically, what can and cannot be computed. If a Turing machine can complete a task, that task is called Turing computable.
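A Turing machine is simple enough to simulate in a few lines. The Python sketch below is a made-up example, not one from Turing's paper: it reads and writes symbols on a tape, one cell at a time, following a table of rules, and this particular rule table adds 1 to a binary number. The names `run_turing_machine` and `INCREMENT` and the blank symbol `_` are our own choices.

```python
BLANK = "_"

# Rule table: (current state, symbol under head) -> (symbol to write, move, next state).
# This hypothetical machine adds 1 to a binary number, starting at its last digit.
INCREMENT = {
    ("carry", "1"): ("0", "L", "carry"),   # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "R", "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", "R", "halt"),  # ran off the left end: write a new leading 1
}


def run_turing_machine(tape: str, rules: dict, state: str, head: int,
                       halt_state: str = "halt", max_steps: int = 10_000) -> str:
    """Run the machine until it reaches halt_state, then return the tape contents."""
    cells = dict(enumerate(tape))  # cells never written are treated as blank
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, BLANK)              # read
        write, move, state = rules[(state, symbol)]  # look up the rule
        cells[head] = write                          # write
        head += 1 if move == "R" else -1             # move one cell
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


if __name__ == "__main__":
    number = "1011"  # eleven in binary
    print(run_turing_machine(number, INCREMENT, state="carry", head=len(number) - 1))
    # prints 1100 (twelve in binary)
```

The `max_steps` limit is there because, as Turing proved, there is no general method that can tell in advance whether every machine will eventually halt.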

Stanley Frankel, a physicist, described how important Turing's 1936 paper was to John von Neumann:

I know that in or about 1943 or ’44 von Neumann was well aware of the fundamental importance of Turing's paper of 1936… Von Neumann introduced me to that paper and at his urging I studied it with care. Many people have acclaimed von Neumann as the "father of the computer" (in a modern sense of the term) but I am sure that he would never have made that mistake himself. He might well be called the midwife, perhaps, but he firmly emphasized to me, and to others I am sure, that the fundamental conception is owing to Turing...

Kathleen Booth and Early Programming

Kathleen Booth wrote the first assembly language and designed the tools to use it for the Automatic Relay Calculator (ARC) in London. She also helped design two other early computers, the SEC and APE(X)C.

Early Computer Hardware

The Atanasoff–Berry computer, often called the world's first electronic digital computer, was built at Iowa State University from 1939 to 1942 by professor John Vincent Atanasoff and student Clifford Berry.

In 1941, Konrad Zuse developed the world's first working program-controlled computer, the Z3. It was later shown to be able to do any computation a Turing machine could. Zuse also created the S2, considered the first process control computer. He started one of the first computer businesses in 1941, producing the Z4, which became the world's first commercial computer. In 1946, he designed the first high-level programming language, Plankalkül.

In 1948, the Manchester Baby was finished. It was the world's first electronic digital computer that could run programs stored in its own memory, just like almost all modern computers. Alan Turing's ideas were very important to its successful development.

In 1950, Britain's National Physical Laboratory completed Pilot ACE, a smaller programmable computer based on Turing's ideas. For a while, it was the fastest computer in the world. Turing's design for ACE was very advanced and had much in common with today's fast, efficient computer designs.

In 1956, GM-NAA I/O, often considered the first operating system, was developed. It allowed many jobs to be run one after another (batch processing) with less human help.

In 1969, research teams at UCLA and the Stanford Research Institute connected two computers over ARPANET, a new computer network. The system crashed during the first try, but the connection was a huge step toward the Internet.

The first actual bug found in a computer was a moth. It got stuck between the relays (switches) of the Harvard Mark II computer, and on September 9, 1947, operators taped it into their logbook with the note, "First actual case of bug being found." Grace Hopper is often wrongly credited with inventing the term "bug" for a computer problem, but engineers had already used the word for technical faults long before this.

Shannon and Information Theory

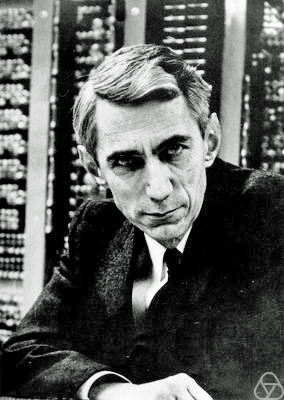

Claude Shannon started the field of information theory with his 1948 paper, "A Mathematical Theory of Communication." This paper used probability theory to figure out the best way to encode information for sending. This work is a key foundation for many areas of study, including data compression and cryptography.
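Shannon measured information with a quantity called entropy: if symbol i appears with probability p_i, the entropy H = -Σ p_i log2 p_i is the lower limit on the average number of bits per symbol needed to encode messages from that source without losing information. The Python sketch below estimates it for a single message by treating each character's frequency in that message as its probability, which is a simplifying assumption; the function name `entropy_bits` is our own.

```python
from collections import Counter
from math import log2


def entropy_bits(message: str) -> float:
    """Estimate Shannon entropy, in bits per character, from character frequencies."""
    if not message:
        return 0.0
    n = len(message)
    counts = Counter(message)  # how many times each character appears
    # H = -sum(p * log2(p)) over every distinct character
    return -sum((c / n) * log2(c / n) for c in counts.values())


if __name__ == "__main__":
    for text in ["aaaa", "abab", "abcd", "hello world"]:
        print(f"{text!r}: {entropy_bits(text):.3f} bits per character")
```

A message that repeats one letter has entropy 0, because nothing in it is surprising, while four equally common letters need 2 bits each. This is why repetitive data compresses well.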

Wiener and Cybernetics

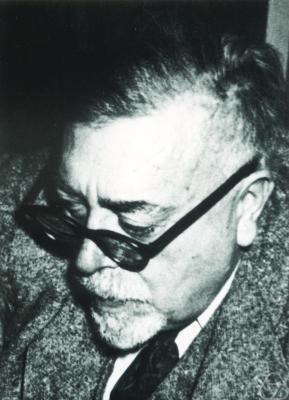

From his work on anti-aircraft systems that used radar to find enemy planes, Norbert Wiener created the term cybernetics. It comes from a Greek word meaning "steersman." He published his book "Cybernetics" in 1948, which influenced the field of artificial intelligence. Wiener also compared how computers work, including their memory, to how the human brain functions.

John von Neumann and Computer Architecture

In 1946, a new model for computer design was introduced, known as the von Neumann architecture. Since 1950, this model has been used in almost all computer designs. The von Neumann architecture was groundbreaking because it allowed computer instructions and data to share the same memory space.

The von Neumann model has three main parts: the arithmetic logic unit (ALU), which does math; the memory, which stores information; and the instruction processing unit (IPU), which handles instructions. In this design, the IPU sends addresses to the memory to tell it what to fetch. If the memory returns an instruction, it goes back to the IPU; if it returns data, the data goes to the ALU.

Von Neumann's design uses a small set of simple instructions to do all of its tasks, an idea that resembles the much later RISC (Reduced Instruction Set Computing) approach. Operations can be simple math (like adding or subtracting), conditional branches (like "if" statements or "while" loops that tell the computer where to go next), or moving data between different parts of the machine. The architecture also uses a program counter to keep track of where the computer is in the program.
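The fetch-and-execute cycle described above can be sketched as a tiny imaginary machine in Python. Instructions and data sit in the same memory list, a program counter picks the next instruction, and a conditional jump makes a loop. The single accumulator register and the seven made-up instructions (`LOAD`, `STORE`, `ADD`, `SUB`, `JUMP`, `JUMPZ`, `HALT`) are our own simplification, not von Neumann's actual design.

```python
# One shared memory holds both the program (addresses 0-8) and its data (9-11).
# This program adds 5 + 4 + 3 + 2 + 1 and stores the total at address 9.
PROGRAM = [
    ("LOAD", 9),     # 0: acc = total
    ("ADD", 10),     # 1: acc = acc + counter
    ("STORE", 9),    # 2: total = acc
    ("LOAD", 10),    # 3: acc = counter
    ("SUB", 11),     # 4: acc = acc - 1
    ("STORE", 10),   # 5: counter = acc
    ("JUMPZ", 8),    # 6: if acc == 0, jump to address 8
    ("JUMP", 0),     # 7: otherwise loop back to address 0
    ("HALT", None),  # 8: stop
    0,               # 9: total
    5,               # 10: counter
    1,               # 11: the constant 1
]


def run(memory: list) -> list:
    """Fetch and execute instructions until HALT; return the final memory."""
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator: holds the number being worked on
    while True:
        op, addr = memory[pc]  # fetch from the same memory that holds the data
        pc += 1                # by default, go on to the next address
        if op == "LOAD":
            acc = memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "ADD":
            acc += memory[addr]
        elif op == "SUB":
            acc -= memory[addr]
        elif op == "JUMP":
            pc = addr
        elif op == "JUMPZ":  # conditional branch
            if acc == 0:
                pc = addr
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r} at address {pc - 1}")


if __name__ == "__main__":
    final = run(list(PROGRAM))  # run a copy, so PROGRAM itself is not changed
    print("total =", final[9])  # prints: total = 15
```

Keeping the loop inside `run` this small makes the cycle easy to follow: fetch the instruction at the program counter, move the counter forward, then carry out the instruction.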

John McCarthy, Marvin Minsky, and Artificial Intelligence

The term "artificial intelligence" (AI) was created by John McCarthy to describe the research for a project at Dartmouth College in 1955. This naming also marked the start of a new field in computer science. The official project began in 1956 with McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. They wanted to understand what makes up artificial intelligence.

McCarthy and his team believed that if a machine could complete a task, a computer should also be able to do it by running a program. They also found that the human brain was too complex to copy perfectly with a program at that time.

They looked at how humans understand language and form sentences, with different meanings and rules, and compared it to how machines process information. Computers understand a very basic language of 1s and 0s (binary). This language must be written in a specific way to tell the computer's hardware what to do.

Minsky explored how artificial neural networks could be arranged to act like the human brain. However, he only got partial results and needed more research.

McCarthy and Shannon wanted to use complex problems to measure how efficient a machine was, using mathematical theory and computations. They also only got partial results.

The idea of "self-improvement" was about how a machine could use self-modifying code to make itself smarter. This would allow a machine to grow in intelligence and calculate faster. The group thought they could study this if a machine could improve how it completed a task.

Another research topic was abstraction. The group believed it could be broken down into smaller parts, such as how machines could form abstract ideas from sensory information and other data. In computer science, abstractions can refer to mathematical ideas and programming languages.

Their idea of computational creativity was about whether a program or machine could think in ways similar to humans. They wanted to see if a machine could take incomplete information and fill in the missing details, just like the human mind can. If a machine could do this, they needed to figure out how it reached its conclusions.

See also

In Spanish: Historia de las ciencias de la computación para niños

- Computer museum

- List of computer term etymologies, the origins of computer science words

- List of pioneers in computer science

- History of computing

- History of computing hardware

- History of software

- History of personal computers

- Timeline of algorithms

- Timeline of women in computing

- Timeline of computing 2020–present
