History of computing hardware facts for kids

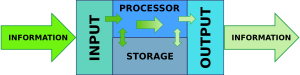

The history of computing hardware is all about how devices that help us calculate have changed, from very simple tools to the powerful computers we use today.

At first, people used simple mechanical tools to help with math. You had to set them up and move parts to get an answer. Later, some computers used physical things like distances or voltages to represent numbers. But then, digital computers came along. They used digits (like 0s and 1s) and could be much more precise.

Huge breakthroughs happened with the invention of transistors and then integrated circuits (tiny chips). This led to digital computers mostly replacing older analog computers. In the 1970s, MOS technology allowed for semiconductor memory and the microprocessor. This made personal computers (PCs) possible. By the 1990s, PCs were everywhere, and in the 2000s, mobile computers like smartphones and tablets became super common.

Early Computing Tools

Ancient and Medieval Times

For thousands of years, people have used devices to help them count. Often, they used a system where one object stood for one item, like using fingers. The very first counting tool was probably a tally stick, which is a stick with notches cut into it. The Lebombo bone, found in Africa, is one of the oldest known math tools, dating back 35,000 years. It has 29 notches.

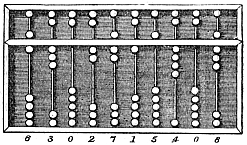

Later, people in the Fertile Crescent used clay shapes to count things like animals or grain. The abacus was also used early on for math. The type of abacus we now call the Roman abacus was used in Babylonia as far back as 2700–2300 BC. In medieval Europe, people used checkered cloths on tables with markers to help calculate money.

Ancient and medieval times also saw the creation of several analog computers. These machines used physical movements to solve math problems, especially for astronomy. Examples include the astrolabe and the amazing Antikythera mechanism from ancient Greece (around 150–100 BC). In Roman Egypt, Hero of Alexandria made mechanical devices, including a programmable cart. Other early mechanical calculating devices came from medieval Islamic astronomers and engineers. In China, Su Song built an astronomical clock tower in 1094. The castle clock by Ismail al-Jazari in 1206 was a water-powered mechanical clock that could be programmed.

Renaissance Calculating Tools

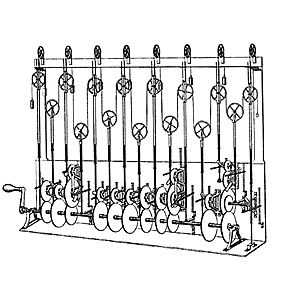

The Scottish mathematician John Napier found a way to turn multiplication and division into addition and subtraction using logarithms. To create his first logarithm tables, he had to do many long multiplications. So, he invented 'Napier's bones', a tool like an abacus that made these calculations much easier.
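Here is a small sketch of the logarithm trick described above. It is only an illustration, not how Napier's own tables were laid out: the function name `multiply_with_logs` is made up for this example, and it uses base-10 logarithms from Python's `math` module in place of a printed table.

```python
import math

def multiply_with_logs(a: float, b: float) -> float:
    """Multiply two positive numbers by adding their logarithms."""
    # Step 1: look up the logarithm of each number (a table lookup in Napier's day).
    log_a = math.log10(a)
    log_b = math.log10(b)
    # Step 2: the hard multiplication becomes an easy addition.
    log_sum = log_a + log_b
    # Step 3: turn the sum back into an ordinary number (the antilogarithm).
    return 10 ** log_sum

# Compare the shortcut with ordinary multiplication.
print(multiply_with_logs(123.0, 456.0))
print(123.0 * 456.0)
```

The two printed values agree except for tiny rounding differences. A slide rule does the same thing with lengths: sliding one logarithmic scale along another adds the two logarithms.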

Since numbers can be shown as distances on a line, the slide rule was invented in the 1620s. This tool allowed people to multiply and divide much faster than before. Edmund Gunter built a device with a single logarithmic scale. William Oughtred improved this in 1630 with his circular slide rule, and then the modern slide rule in 1632. Slide rules were used by engineers and scientists for generations until pocket calculators came along.

Mechanical Calculators

In 1609, Guidobaldo del Monte created a mechanical multiplier using gears to calculate fractions. This was the first time gears were documented for mechanical calculation.

Wilhelm Schickard, a German inventor, designed a calculating machine in 1623. It combined Napier's rods with the world's first mechanical adding machine. However, its carry mechanism could get stuck. A fire destroyed one machine, and Schickard didn't build another.

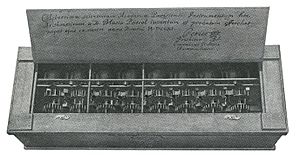

In 1642, a teenager named Blaise Pascal started working on calculating machines. After three years and 50 tries, he invented a mechanical calculator called the Pascaline. He built twenty of these machines.

Gottfried Wilhelm Leibniz invented the stepped reckoner around 1672. He wanted a machine that could not only add and subtract but also multiply and divide using a movable part. Leibniz also described the binary numeral system, which is key to all modern computers. However, many machines until the 1940s, including Charles Babbage's and even ENIAC, used the decimal system.

Around 1820, Charles Xavier Thomas de Colmar created the Thomas Arithmometer. This became the first successful, mass-produced mechanical calculator. It could add, subtract, multiply, and divide. Mechanical calculators were used until the 1970s.

Punched-Card Data Processing

In 1804, Joseph Marie Jacquard, a French weaver, developed a loom that used punched cards to control the patterns it wove. This was a huge step in making machines programmable. The cards were linked together into a paper tape, and the tape could be changed without rebuilding the loom.

In the late 1880s, the American Herman Hollerith invented a way to store data on punched cards that machines could read. He created the tabulating machine and the keypunch machine for this. His machines used electric switches and counters. Hollerith's method was used for the 1890 United States Census, which was processed two years faster than the previous one. Hollerith's company later became IBM.

By 1920, these machines could add, subtract, and print totals. Their functions were controlled by plugging wires into removable panels. When the U.S. started Social Security in 1935, IBM's punched-card systems processed records for 26 million workers. Punched cards became very common in businesses and government for accounting.

Calculators

By the 20th century, mechanical calculators, cash registers, and accounting machines were updated to use electric motors. The word "computer" was actually a job title for people, mostly women, who used these calculators to do math.

Companies like Friden, Marchant, and Monroe made desktop mechanical calculators in the 1930s that could add, subtract, multiply, and divide. In 1948, the Curta was introduced. It was a small, hand-cranked mechanical calculator.

The world's first all-electronic desktop calculator was the British Bell Punch ANITA, released in 1961. It used vacuum tubes and was silent and fast. In June 1963, the U.S. Friden EC-130 came out. It was all-transistor and had a screen that showed numbers.

First General-Purpose Computing Device

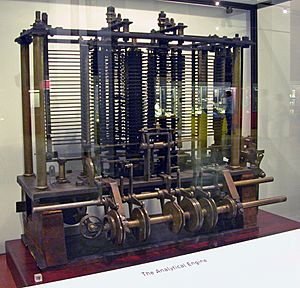

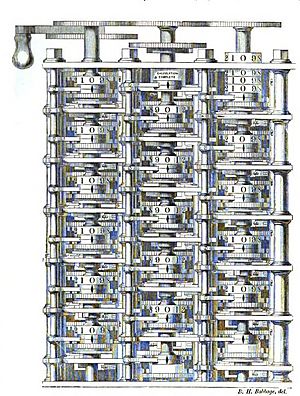

Charles Babbage, an English engineer, came up with the idea of a programmable computer. He is often called the "father of the computer." In the early 1800s, he designed the first mechanical computer. After working on his difference engine, which helped with navigation math, he realized in 1833 that a much more general machine, an Analytical Engine, was possible.

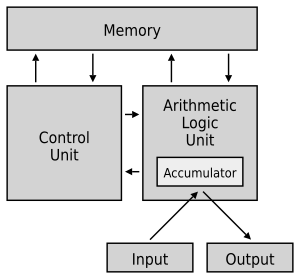

This machine would take programs and data using punched cards, similar to how Jacquard looms worked. For output, it would have a printer, a plotter, and a bell. It could also punch numbers onto cards for later use. It used regular decimal numbers.

The Analytical Engine had parts like a modern computer's arithmetic logic unit (for calculations), control flow (for making decisions and repeating steps), and memory. This made it the first design for a general-purpose computer that could do any calculation if given the right instructions.

It was planned to have a memory that could hold 1,000 numbers, each 40 digits long (about 16.7 KB). A part called the "mill" would do all four basic math operations, comparisons, and square roots. The mill would use its own internal instructions, like microcode in modern CPUs, stored on rotating drums called "barrels."
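The size in kilobytes can be checked with a little arithmetic. The sketch below assumes that one decimal digit needs about 3.32 bits (that is, log2 of 10) and that 1 KB means 1,000 bytes. The extra sign bit per number is also an assumption, added to show one way a rounded figure of 16.7 KB can come out.

```python
import math

NUMBERS = 1_000   # how many numbers the store could hold (from the text)
DIGITS = 40       # decimal digits in each number (from the text)

# One decimal digit carries log2(10) bits of information, about 3.32 bits.
BITS_PER_NUMBER = DIGITS * math.log2(10)

def store_size_kb(sign_bits: int = 0) -> float:
    """Approximate size of the store in kilobytes (1 KB = 1,000 bytes)."""
    total_bits = NUMBERS * (BITS_PER_NUMBER + sign_bits)
    return total_bits / 8 / 1000

print(f"digits only:        {store_size_kb():.1f} KB")
print(f"with one sign bit:  {store_size_kb(1):.1f} KB")
```

Counting only the digits gives about 16.6 KB, and adding one sign bit per number gives about 16.7 KB.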

The programming language for users was like today's assembly languages. It could do loops and conditional branching, meaning it could repeat actions or make choices based on conditions. Three types of punch cards were used: one for math operations, one for numbers, and one for moving numbers to and from memory.

This machine was about a century ahead of its time. However, it was never fully built because of problems with funding and making the thousands of parts by hand. Ada Lovelace translated and added notes to a description of the Analytical Engine. Her notes are considered the first published description of programming, so she is often called the first computer programmer.

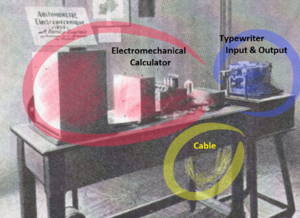

After Babbage, Percy Ludgate in Ireland independently designed a programmable mechanical computer, which he described in 1909. Other inventors like Leonardo Torres Quevedo and Vannevar Bush also worked on ideas based on Babbage's concepts. Torres designed an electromechanical analytical machine in 1913 and built a prototype in 1920 using relays for calculations.

Analog Computers

In the first half of the 20th century, analog computers were thought to be the future of computing. These devices used changing physical things, like electricity, mechanics, or water flow, to solve problems. Unlike digital computers, which use exact numbers, analog computers used continuous values. This meant they couldn't always repeat processes with perfect accuracy.

The first modern analog computer was a tide-predicting machine, invented by Sir William Thomson in 1872. It used pulleys and wires to calculate tide levels, which was very helpful for navigation.

The differential analyzer, a mechanical analog computer designed to solve complex math problems, was thought up by James Thomson in 1876.

An important step in analog computing was the development of fire-control systems for long-range ship guns. These systems calculated how to aim guns, considering factors like ship movement, target movement, wind, and the Earth's rotation. In 1912, British engineer Arthur Pollen developed the first electrically powered mechanical analog computer for this purpose, used by the Russian Navy in World War I.

Mechanical devices also helped with aerial bombing accuracy. The Drift Sight, developed in 1916, measured wind speed to calculate its effect on bombs. This system was improved during World War II with sights like the Mark XIV bomb sight and the Norden.

The most advanced mechanical analog computer was the differential analyzer, built by H. L. Hazen and Vannevar Bush at MIT starting in 1927. A dozen of these were built before digital computers made them old-fashioned.

By the 1950s, digital electronic computers became so successful that most analog computers were no longer used. However, hybrid analog computers, which used digital electronics to control them, were still used for specialized tasks into the 1960s.

Advent of the Digital Computer

The basic idea of the modern computer was first described by Alan Turing in his 1936 paper, On Computable Numbers. Turing imagined simple, hypothetical machines, now called Turing machines. He showed that such a machine could perform any mathematical calculation if it could be written as a set of steps (an algorithm). He also introduced the idea of a "universal machine" (a universal Turing machine), which could do the work of any other machine by running a program stored on tape. This meant the machine could be programmed. John von Neumann later said that the main idea of the modern computer came from this paper. Modern computers are considered "Turing-complete," meaning they can do anything a universal Turing machine can, within their memory limits.
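To get a feel for the idea, here is a minimal sketch of a Turing-style machine. It is not taken from Turing's paper. The function `run_turing_machine` and both rule tables are made up for this example. The point is that one simple runner can do different jobs just by being handed a different table of rules.

```python
from typing import Dict, Tuple

# (current state, symbol read) -> (symbol to write, head move, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

def run_turing_machine(rules: Rules, tape_text: str, state: str = "start",
                       blank: str = "_", max_steps: int = 10_000) -> str:
    """Run a rule table on a tape and return what is left on the tape."""
    tape = dict(enumerate(tape_text))   # tape position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = tape.get(head, blank)              # read
        write, move, state = rules[(state, symbol)]  # look up the rule
        tape[head] = write                           # write
        head += move                                 # move left (-1), right (+1), or stay (0)
    if not tape:
        return ""
    cells = [tape.get(i, blank) for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(blank)

# Hypothetical rule table 1: add one to a binary number.
ADD_ONE: Rules = {
    ("start", "0"): ("0", +1, "start"),   # walk right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),   # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),   # 1 + 1 = 0, carry to the left
    ("carry", "0"): ("1", 0, "halt"),     # 0 + 1 = 1, finished
    ("carry", "_"): ("1", 0, "halt"),     # ran off the left edge: new leading 1
}

# Hypothetical rule table 2: flip every bit.
FLIP_BITS: Rules = {
    ("start", "0"): ("1", +1, "start"),
    ("start", "1"): ("0", +1, "start"),
    ("start", "_"): ("_", 0, "halt"),
}

print(run_turing_machine(ADD_ONE, "1011"))
print(run_turing_machine(FLIP_BITS, "1011"))
```

The runner never changes. Only the rule table does, which is a small taste of what it means for a machine to be programmable.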

Electromechanical Computers

The era of modern computing began before and during World War II. Most digital computers built then were electromechanical. They used electric switches to move mechanical relays to do calculations. These machines were slow and were later replaced by much faster all-electric computers that used vacuum tubes.

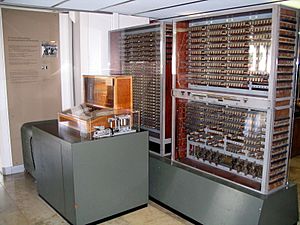

The Z2 was an early electromechanical relay computer, created by German engineer Konrad Zuse in 1940. It improved on his earlier Z1 by using electrical relay circuits for calculations.

In the same year, British codebreakers built electromechanical devices called bombes. These helped them break secret messages encrypted by the German Enigma machine during World War II. Alan Turing created the initial design in 1939.

In 1941, Zuse built the Z3, the world's first working electromechanical, programmable, fully automatic digital computer. The Z3 used 2000 relays and worked at about 5–10 Hz. Programs and data were stored on punched film. It was similar to modern machines in some ways, using floating-point numbers. Zuse's machines were easier to build and more reliable because they used the simpler binary system instead of the decimal system. The Z3 was proven to be a Turing-complete machine in 1998.

Zuse's work was largely unknown outside Germany until much later. In 1944, the Harvard Mark I was built at IBM. It was a similar general-purpose electromechanical computer, but not quite Turing-complete.

Digital Computation Basics

The term "digital" was first suggested by George Stibitz. It means that a signal, like a voltage, is used to represent a value by encoding it, rather than directly showing it. For example, a low signal might be 0, and a high signal might be 1. Modern computers mostly use binary logic (0s and 1s), but many early machines used decimal numbers.

The math behind digital computing is Boolean algebra, developed by George Boole in 1854. In the 1930s, Claude Shannon and Victor Shestakov independently showed that Boolean logic could be applied to electrical circuits, creating what we now call logic gates. This idea is fundamental to how digital circuits are designed today.
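As a rough illustration of how logic gates can do arithmetic, the sketch below builds a small adder out of AND, OR, and XOR. The function names are made up for this example, and real circuits are built from wires and transistors, not Python functions. The adder design itself (half adders joined into full adders) is a standard textbook one.

```python
def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def XOR(a: int, b: int) -> int:
    return a ^ b

def half_adder(a: int, b: int) -> tuple:
    """Add two bits. Returns (sum bit, carry bit)."""
    return XOR(a, b), AND(a, b)

def full_adder(a: int, b: int, carry_in: int) -> tuple:
    """Add two bits plus a carry from the column to the right."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, OR(c1, c2)

def add_binary(x: int, y: int, width: int = 4) -> int:
    """Add two numbers one bit at a time, like a chain of full adders."""
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1            # bit i of x
        b = (y >> i) & 1            # bit i of y
        s, carry = full_adder(a, b, carry)
        result |= s << i            # put the sum bit in place
    return result | (carry << width)  # the last carry becomes the top bit

print(add_binary(6, 7))
```

Every step inside `add_binary` comes down to the three gates at the top, which is why Boolean algebra is enough to build a calculating circuit.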

Electronic Data Processing

Soon, purely electronic parts replaced mechanical ones, and digital calculations replaced analog ones. Machines like the Z3, the Atanasoff–Berry Computer, the Colossus computers, and the ENIAC were built by hand. They used circuits with relays or vacuum tubes and often used punched cards or punched paper tape for input and storage.

Engineer Tommy Flowers explored using electronics for telephone exchanges in the 1930s. By 1939, his experimental equipment was converting part of the telephone network into an electronic data processing system using thousands of vacuum tubes.

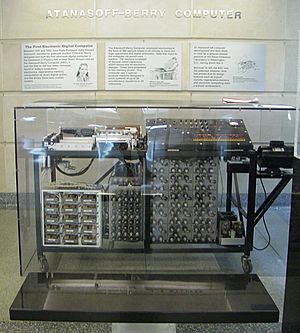

In the U.S., in 1940, Arthur Dickinson (IBM) invented the first digital electronic computer. This device was fully electronic for control, calculations, and output. John Vincent Atanasoff and Clifford E. Berry developed the Atanasoff–Berry Computer (ABC) in 1942. It was the first binary electronic digital calculating device, using about 300 vacuum tubes for calculations. However, it was a special-purpose machine and couldn't be easily reprogrammed like modern computers.

Computers built mainly with vacuum tubes are called "first generation computers."

The Electronic Programmable Computer

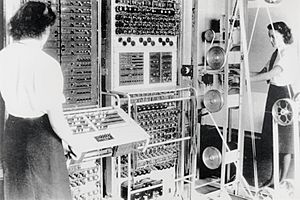

During World War II, British codebreakers at Bletchley Park successfully broke German military codes. The German Enigma machine was attacked with electromechanical bombes, often operated by women.

The Germans also used a different encryption system called "Tunny." To break Tunny, Max Newman and his team developed the Heath Robinson. Tommy Flowers then designed and built the more flexible Colossus computer, which replaced the Heath Robinson. Colossus was shipped to Bletchley Park in January 1944 and started working on messages in February.

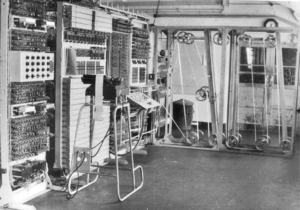

Colossus was the world's first electronic digital programmable computer. It used many vacuum tubes. It took input from paper tape and could be set up to do various logical operations, but it wasn't a "universal" computer like modern ones. Data was fed into Colossus by reading a paper tape loop at 5,000 characters per second. Colossus Mark 1 had 1500 vacuum tubes, while Mark 2 had 2400 tubes and was five times faster. The first Mark 2 Colossus was ready on June 1, 1944, just in time for D-Day.

Colossus was mainly used to figure out the starting positions of the German code machine's rotors. It was programmable using switches and plug panels. Ten Mark 2 Colossi were working by the end of the war.

These machines provided very important information to the Allies during the war. Details about them were kept secret until the 1970s. Because of this secrecy, they were not included in many early histories of computing. A working copy of a Colossus machine is now on display at Bletchley Park.

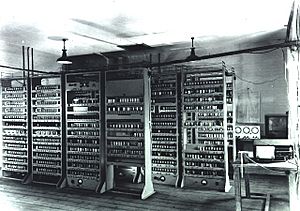

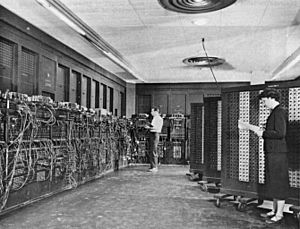

The U.S.-built ENIAC (Electronic Numerical Integrator and Computer) was the first electronic programmable computer in the U.S. It was similar to Colossus but much faster and more flexible. It could do any problem that fit into its memory. Like Colossus, its "program" was set by connecting cables and switches. The programmers of ENIAC were women mathematicians.

ENIAC combined the speed of electronics with the ability to be programmed for many complex problems. It could add or subtract 5000 times a second, a thousand times faster than any other machine. It weighed 30 tons, used 200 kilowatts of power, and had over 18,000 vacuum tubes. It was used almost constantly for ten years.

Stored-Program Computer

Early computers had programs that were set by changing physical connections with switches or cables. This "reprogramming" was hard and took a lot of time. Stored-program computers, however, were designed to keep their instructions (the program) in memory, usually the same memory used for data.
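The sketch below shows the stored-program idea in miniature. It is a made-up toy machine, not a model of any real computer: the four instructions (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are assumptions chosen for this example. Notice that the instructions and the numbers they work on sit in the same list.

```python
def run(memory: list) -> list:
    """Run the program stored in memory, starting at address 0."""
    accumulator = 0
    program_counter = 0
    while True:
        operation, address = memory[program_counter]  # fetch the next instruction
        program_counter += 1
        if operation == "LOAD":
            accumulator = memory[address]
        elif operation == "ADD":
            accumulator += memory[address]
        elif operation == "STORE":
            memory[address] = accumulator
        elif operation == "HALT":
            return memory

# Addresses 0-3 hold the program, addresses 4-6 hold the data.
memory = [
    ("LOAD", 4),    # 0: copy the number at address 4 into the accumulator
    ("ADD", 5),     # 1: add the number at address 5
    ("STORE", 6),   # 2: save the answer at address 6
    ("HALT", 0),    # 3: stop
    20,             # 4: first number
    22,             # 5: second number
    0,              # 6: the answer goes here
]

print(run(memory)[6])
```

To give this toy machine a different job, you only change what is in the list. No cables or switches need to be moved, which is the big advantage the text describes.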

The Idea of Stored Programs

The idea for the stored-program computer was first suggested by Alan Turing in 1936. In 1945, Turing joined the National Physical Laboratory and started working on an electronic stored-program digital computer. His 1945 report was the first detailed plan for such a device.

Meanwhile, John von Neumann at the Moore School of Electrical Engineering wrote his First Draft of a Report on the EDVAC in 1945. This computer design became known as the "von Neumann architecture." Turing later presented a more detailed paper in 1946 for a device he called the Automatic Computing Engine (ACE).

Turing believed that speed and memory size were very important. He suggested a fast memory of 25 KB, accessed at 1 MHz. The ACE could use subroutines (smaller programs within a main program) and had an early form of programming language.

Manchester Baby

The Manchester Baby (Small Scale Experimental Machine, SSEM) was the world's first electronic stored-program computer. It was built at the Victoria University of Manchester and ran its first program on June 21, 1948.

The Baby wasn't meant to be a practical computer. It was built to test the Williams tube, the first random-access digital storage device. The Williams tube, invented by Freddie Williams and Tom Kilburn, used a cathode-ray tube to temporarily store binary data.

Even though it was small and simple, the Baby was the first working machine to have all the key parts of a modern electronic computer. After it proved its design worked, a project started to turn it into a more useful computer, the Manchester Mark 1. The Mark 1 then became the prototype for the Ferranti Mark 1, the world's first commercially available general-purpose computer.

The Baby had a 32-bit word length and a memory of 32 words. It was designed to be as simple as possible, so only subtraction and negation were built into its hardware. Other math operations were done by software. The first program for the machine found the highest proper divisor of 2^18 (262,144). This calculation was known to take a long time, proving the computer's reliability. It ran for 52 minutes and performed 3.5 million operations.
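Here is one way to find a highest proper divisor using nothing but subtraction and comparison, in the spirit of a machine with no built-in division. This is a sketch under that assumption, not a copy of the Baby's original program, and the function name is made up for this example.

```python
def highest_proper_divisor(n: int) -> int:
    """Find the largest number below n that divides n exactly.

    Division is done by repeated subtraction, because the imagined
    machine cannot divide directly.
    """
    candidate = n - 1
    while candidate > 1:
        remainder = n
        while remainder >= candidate:   # keep taking the candidate away
            remainder -= candidate
        if remainder == 0:              # nothing left over: it divides exactly
            return candidate
        candidate -= 1                  # try the next smaller number
    return 1

print(highest_proper_divisor(262_144))
```

A modern computer finishes this almost instantly. Trying so many candidates one subtraction at a time is the kind of long, repetitive job that made it a good reliability test for an early machine.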

Manchester Mark 1

The SSEM led to the Manchester Mark 1. Work began in August 1948, and the first version was ready by April 1949. A program to search for Mersenne primes ran without errors for nine hours. The computer's success was widely reported in the British press, which called it an "electronic brain."

This computer is important because it was one of the first to include index registers. This made it easier for programs to read through lists of numbers in memory. Many ideas from its design were used in later commercial computers like the IBM 701 and Ferranti Mark 1. The main designers, Frederic C. Williams and Tom Kilburn, realized that computers would be used more for science than just pure math.

EDSAC

Another early modern digital stored-program computer was the EDSAC, designed and built by Maurice Wilkes and his team at the University of Cambridge in England in 1949. This machine was inspired by John von Neumann's report and was one of the first useful electronic digital stored-program computers.

EDSAC ran its first programs on May 6, 1949, calculating a table of squares and a list of prime numbers. EDSAC also became the basis for the first commercially used computer, the LEO I, used by the food company J. Lyons & Co. Ltd. EDSAC 1 was shut down in 1958.

In June 1949, the British newspaper The Star wrote about EDSAC: "The 'brain' [computer] may one day come down to our level [of the common people] and help with our income-tax and book-keeping calculations. But this is speculation and there is no sign of it so far."

EDVAC

ENIAC inventors John Mauchly and J. Presper Eckert suggested building the EDVAC in August 1944. Design work started before ENIAC was even fully working. The design included important improvements and a fast memory. However, Eckert and Mauchly left the project, and its construction struggled.

It was finally delivered to the U.S. Army in August 1949, but due to problems, it only started working in 1951, and then only partly.

Commercial Computers

The first commercial computer was the Ferranti Mark 1, built by Ferranti and delivered to the University of Manchester in February 1951. It was based on the Manchester Mark 1. It had a larger main memory, a faster multiplier, and more instructions. The multiplier alone used almost a quarter of the machine's 4,050 vacuum tubes. At least seven more of these machines were delivered between 1953 and 1957.

In October 1947, the directors of J. Lyons & Company, a British catering company, decided to help develop computers for business. Their LEO I computer started working in April 1951 and ran the world's first regular office computer job. On November 17, 1951, J. Lyons began weekly calculations for bakery valuations on the LEO – the first business program to run on a stored-program computer.

In June 1951, the UNIVAC I (Universal Automatic Computer) was delivered to the U.S. Census Bureau. Remington Rand eventually sold 46 of these machines for over $1 million each. UNIVAC was the first "mass produced" computer. It used 5,200 vacuum tubes and drew 125 kilowatts of power. Its main memory could store 1,000 words.

IBM introduced a smaller, more affordable computer in 1954 that became very popular. The IBM 650 weighed over 900 kg, and its power supply weighed around 1350 kg. The system cost $500,000 or could be rented for $3,500 a month. Its memory was originally 2,000 ten-digit words, later expanded to 4,000. Memory limits like these affected programming for decades.

Microprogramming

In 1951, British scientist Maurice Wilkes developed the idea of microprogramming. He realized that a computer's central processing unit (CPU) could be controlled by a tiny, special program stored in fast ROM. This idea made CPU development much simpler. He published a fuller description in 1955.

This method was widely used in the CPUs of mainframe and other computers. It was first used in EDSAC 2.

Magnetic Memory

Magnetic drum memories were developed for the U.S. Navy during World War II. The IBM 650, an early mass-produced computer announced in 1953, had about 8.5 kilobytes of drum memory.

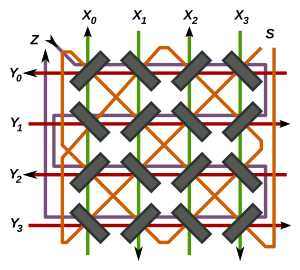

Magnetic core memory was patented in 1949 and first used in the Whirlwind computer in August 1953. It quickly became popular. Magnetic core memory was used in the IBM 704 (1955) and the Ferranti Mercury (1957). It was the main type of memory until the 1970s when semiconductor memory replaced it.

Transistor Computers

The transistor was invented in 1947. From 1955 onward, transistors replaced vacuum tubes in computer designs, leading to the "second generation" of computers. Transistors were smaller, used less power, and gave off less heat than vacuum tubes. They were also more reliable and lasted longer. Transistorized computers could have tens of thousands of circuits in a small space. This greatly reduced the size, cost, and running expenses of computers.

At the University of Manchester, a team led by Tom Kilburn built a machine using the new transistors instead of tubes. Their first transistorized computer, and the first in the world, was working by 1953. A second version was finished in April 1955. The 1955 version used 200 transistors and 1,300 solid-state diodes, using 150 watts of power. However, it still used some vacuum tubes for its clock and memory.

The first completely transistorized computer was the Harwell CADET of 1955. It used 324 transistors and had a low clock speed to avoid needing vacuum tubes. CADET started offering a regular computing service in August 1956 and often ran for 80 hours or more continuously.

The Manchester University Transistor Computer's design was used by Metropolitan-Vickers for their Metrovick 950, the first commercial transistor computer. Six Metrovick 950s were built, with the first finished in 1956. They were used for about five years. The IBM 1401, a second-generation computer, became very popular, with over ten thousand installed between 1960 and 1964.

Transistor Peripherals

Transistor electronics improved not only the CPU (Central Processing Unit) but also other devices connected to the computer. Second-generation disk data storage units could store millions of letters and numbers. Besides fixed disk storage, there were removable disk units that could be easily swapped. Magnetic tape provided a cheaper way to store large amounts of data for long-term keeping.

Many second-generation CPUs used a separate processor to handle communications with other devices. For example, while the communication processor read and punched cards, the main CPU did calculations. This allowed the computer to do more tasks at once.

During the second generation, remote terminals (like teleprinters) became much more common. Telephone connections allowed these terminals to be hundreds of kilometers away from the main computer. Eventually, these separate computer networks grew into the interconnected "network of networks" we know as the Internet.

Transistor Supercomputers

The early 1960s saw the rise of supercomputing. The Atlas was a joint project between the University of Manchester, Ferranti, and Plessey. It was installed at Manchester University in 1962 and was considered one of the world's first supercomputers, and the most powerful at the time. It was a second-generation machine, using individual transistors. Atlas also introduced the "Atlas Supervisor," which many consider the first modern operating system.

In the U.S., Seymour Cray designed a series of computers at Control Data Corporation (CDC) that used new designs to achieve very high performance. The CDC 6600, released in 1964, is generally seen as the first supercomputer. It was about three times faster than the previous record holder, the IBM 7030 Stretch, and was the world's fastest computer from 1964 to 1969.

Integrated Circuit Computers

The "third-generation" of digital electronic computers used integrated circuit (IC) chips for their logic. An integrated circuit is a tiny chip that contains many electronic components.

The idea of an integrated circuit was thought up by Geoffrey Dummer in the UK.

The first working integrated circuits were invented by Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor. Kilby showed his first working IC in September 1958. Noyce came up with his own idea of an integrated circuit half a year later. Noyce's invention was a single-chip IC, which solved many practical problems and was easier to mass-produce. It was made of silicon, while Kilby's was made of germanium.

Third-generation computers appeared in the early 1960s for government use and then in commercial computers starting in the mid-1960s. The first silicon IC computer was the Apollo Guidance Computer (AGC). It was not the most powerful, but it had to be very small and dense because of the strict size and weight limits on the Apollo spacecraft. Each lunar landing mission carried two AGCs.

Semiconductor Memory

The MOSFET (metal–oxide–semiconductor field-effect transistor) was invented by Mohamed M. Atalla and Dawon Kahng at Bell Labs in 1959. Besides processing data, the MOSFET made it practical to use MOS transistors as memory cell storage elements, replacing older magnetic-core memory. Semiconductor memory, also known as MOS memory, was cheaper and used less power. MOS random-access memory (RAM), like static RAM (SRAM), was developed in 1964. In 1966, Robert Dennard developed MOS dynamic RAM (DRAM). In 1967, Dawon Kahng and Simon Sze developed the floating-gate MOSFET, which is the basis for non-volatile memory like flash memory (used in USB drives and phones).

Microprocessor Computers

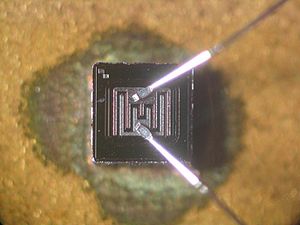

The "fourth-generation" of digital electronic computers used microprocessors. A microprocessor is a computer processor on a single tiny chip. The microprocessor came from the MOS integrated circuit (MOS IC) chip. As MOS IC chips became more complex, engineers realized that a whole computer processor could fit on one chip.

There's some debate about which device was the very first microprocessor. Early multi-chip microprocessors included the Four-Phase Systems AL-1 (1969) and Garrett AiResearch MP944 (1970). The first single-chip microprocessor was the Intel 4004, released in 1971. It was designed by Ted Hoff, Federico Faggin, Masatoshi Shima, and Stanley Mazor at Intel.

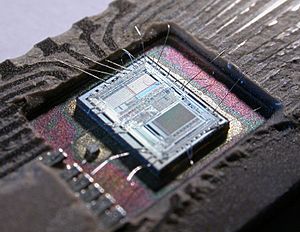

While the first microprocessors only contained the CPU, they quickly developed into chips that held most or all of a computer's internal electronic parts. For example, the Intel 8742 chip shown here is an 8-bit microcontroller that includes a CPU, RAM, EPROM, and input/output all on the same chip.

The microprocessor led to the development of microcomputers. These were small, low-cost computers that individuals and small businesses could own. Microcomputers first appeared in the 1970s and became very common in the 1980s and beyond.

One of the earliest microcomputer systems was R2E's Micral N, launched in early 1973, using the Intel 8008. The first commercially available microcomputer kit was the Intel 8080-based Altair 8800, announced in January 1975. The Altair 8800 was very limited at first, with only 256 bytes of memory and no easy way to input or output data. Despite this, it was surprisingly popular.

In April 1975, Olivetti presented the P6060, the world's first complete, pre-assembled personal computer system. It had floppy disk drives, a display, a printer, and 48 KB of RAM. It weighed 40 kg.

From 1975 to 1977, most microcomputers, like the MOS Technology KIM-1 and the Altair 8800, were sold as kits for people to build themselves. Pre-assembled systems didn't become popular until 1977, with the introduction of the Apple II, the Tandy TRS-80, and the Commodore PET. Today, microcomputer designs are dominant in most computer markets.

A NeXT Computer and its development tools were used by Tim Berners-Lee and Robert Cailliau at CERN to create the world's first web server software and the first web browser, WorldWideWeb.

Computers, especially complex ones, need to be very reliable. ENIAC ran continuously from 1947 to 1955, for eight years, without being shut down. If a vacuum tube failed, it could be replaced without stopping the whole system. Keeping ENIAC switched on all the time greatly reduced failures. Today, hot-pluggable hard disks continue this idea of repairing parts while the system is still running.

In the 21st century, multi-core CPUs became common. These CPUs have multiple processing units on one chip. Content-addressable memory (CAM) has become cheap enough for use in networking and in cache memory in modern microprocessors.

CMOS circuits, invented in the 1960s and widely used from the 1980s, allowed computer power consumption to drop dramatically. Unlike older circuits that constantly used power, CMOS circuits only use significant power when they change between states.

CMOS circuits have made computing very common. Computers are now everywhere, from greeting cards and telephones to satellites. The amount of heat a computer gives off (thermal design power) has become as important as its speed. The SoC (system on a chip) has put even more parts onto a single chip, allowing phones and PCs to become single, handheld wireless devices.

Quantum computing is a new and exciting technology. In 2017, IBM created a 50-qubit computer. Google researchers have found ways to make quantum states last longer. These developments are pushing the boundaries of what computers can do.

Epilogue

The field of computing has developed incredibly fast. By the time someone could write down how a new computer worked, it was often already outdated. After 1945, people read John von Neumann's report on the EDVAC and immediately started building their own systems. This rapid pace of development has continued worldwide ever since.

See also

In Spanish: Historia del hardware para niños

- Antikythera mechanism

- History of computing

- History of computing hardware (1960s–present)

- History of laptops

- History of personal computers

- History of software

- Information Age

- IT History Society

- Retrocomputing

- Timeline of computing

- List of pioneers in computer science

- Vacuum-tube computer
