History of artificial intelligence facts for kids

The history of artificial intelligence (AI) started long ago, even in ancient times. People told myths and stories about artificial beings that seemed to have intelligence or consciousness. These ideas were like the first seeds for modern AI. Philosophers then tried to explain human thinking as a mechanical process of moving symbols around. This led to the invention of the programmable digital computer in the 1940s. This machine, based on mathematical ideas, inspired scientists to think about building an "electronic brain".

The official field of AI research began at a special meeting called the Dartmouth workshop in 1956. This meeting happened at Dartmouth College, USA. The people who attended became the main leaders of AI research for many years. Many of them believed that a machine as smart as a human would exist very soon, maybe in just one generation! They received lots of money to make this dream come true.

But it turned out that building AI was much harder than they thought. In 1974, after criticism from scientists and pressure from lawmakers, the U.S. and British governments stopped funding general AI research. The tough years that followed were called an "AI winter". Seven years later, the Japanese government started a big project that inspired other governments and companies to invest billions in AI again. However, by the late 1980s, investors became disappointed and stopped funding once more.

Interest and money for AI grew a lot in the 2020s. This happened because machine learning became very successful. New methods, powerful computers, and huge amounts of data helped solve many problems in universities and businesses.

Early Ideas About AI

Myths, Stories, and Robots

Ancient Myths and Legends

In ancient Greek stories, Talos was a giant made of bronze. He guarded the island of Crete, throwing rocks at enemy ships. The god Hephaestus supposedly made Talos as a gift. In another story, Jason and his Argonauts defeated Talos by removing a plug near his foot, which let out his life force.

Pygmalion was a legendary Greek king and sculptor. He is famous for a story where he created a statue that came to life.

Medieval Legends of Artificial Beings

The Swiss alchemist Paracelsus wrote about how to create an "artificial man" in his book Of the Nature of Things.

One of the first written accounts of making a Golem comes from the early 1200s. During the Middle Ages, people believed a Golem could come to life if you put a piece of paper with God's name on it into its mouth. Unlike other legendary robots, a Golem could not speak.

The idea of Takwin, or creating life artificially, was often discussed in old Islamic alchemy books. Islamic alchemists tried to create many forms of life, from plants to animals.

In the play Faust: The Second Part of the Tragedy by Johann Wolfgang von Goethe, a tiny artificial human called a homunculus is made. It wants to become a full human, but its glass flask breaks, and it dies.

Modern Stories

By the 1800s, ideas about artificial people and thinking machines appeared in many stories. Examples include Mary Shelley's Frankenstein and Karel Čapek's play R.U.R. (Rossum's Universal Robots). AI is still a very common topic in science fiction today.

Automata: Moving Machines

Many skilled craftspeople from different civilizations built realistic humanoid automata (moving machines). These included Yan Shi, Hero of Alexandria, Al-Jazari, Pierre Jaquet-Droz, and Wolfgang von Kempelen.

The oldest known automata were sacred statues in ancient Egypt and Greece. People believed that craftsmen had put real minds into these figures, making them wise and emotional.

During the early modern period, people thought these legendary automata could answer questions. The medieval alchemist Roger Bacon was even said to have made a brazen head that could speak.

Thinking with Logic

Artificial intelligence is based on the idea that human thought can be made into a machine process. The study of mechanical, or "formal," thinking has a long history. Philosophers from China, India, and Greece developed structured ways of thinking logically thousands of years ago. Their ideas were built upon by thinkers like Aristotle (who studied syllogisms), Euclid (whose Elements showed perfect logical reasoning), and al-Khwārizmī (who invented algebra and gave us the word "algorithm").

The Spanish philosopher Ramon Llull (1232–1315) created several "logical machines." These machines were designed to produce knowledge by combining basic truths using simple logical steps. Llull's work greatly influenced Gottfried Leibniz.

In the 1600s, Leibniz, Thomas Hobbes, and René Descartes wondered if all logical thought could be as systematic as algebra. Hobbes famously wrote that "reason is nothing but reckoning." Leibniz imagined a universal language for reasoning, where arguments could be solved like math problems. These philosophers began to suggest that thinking could be done by manipulating symbols, which became a key idea in AI.

In the 1900s, the study of mathematical logic made AI seem possible. Important works by Boole and Frege set the stage. Building on this, Russell and Whitehead wrote Principia Mathematica in 1913, which formally described the foundations of mathematics. Inspired by this, David Hilbert asked if all mathematical reasoning could be made formal.

Gödel's incompleteness proof, Turing's machine, and Church's Lambda calculus answered Hilbert's question. Their answer was surprising: there were limits to what mathematical logic could do. But more importantly for AI, their work showed that within these limits, any mathematical reasoning could be done by a machine. The Church-Turing thesis suggested that a simple machine, just moving 0s and 1s, could imitate any mathematical deduction. The Turing machine was a simple idea that captured the essence of symbol manipulation. This inspired scientists to think about building thinking machines.
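To make the idea of a machine that just moves symbols around more concrete, here is a minimal Python sketch of a Turing machine. It is only an illustration: the function name `run_turing_machine`, the rule table `FLIP_BITS`, and the use of `_` for an empty tape square are all choices made for this example, not something taken from Turing's paper.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine and return the final tape as a string."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        # Each rule says: what to write, which way to move, which state comes next.
        new_symbol, move, state = rules[(state, symbol)]
        cells[head] = new_symbol
        head += 1 if move == "R" else -1
    return "".join(cells[p] for p in sorted(cells)).strip(blank)


# Hypothetical rule table: flip every bit, then halt at the first blank square.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(run_turing_machine(FLIP_BITS, "1011"))  # prints 0100
```

The machine itself knows nothing about numbers or meaning. It only looks up the current state and symbol in a table, which is why such a simple device was seen as capturing the essence of symbol manipulation.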

Computer Science

Calculating machines were designed and built throughout history by many people, including Gottfried Leibniz, Charles Babbage, and others. Ada Lovelace thought Babbage's machine might be a "thinking machine," but she also warned against overestimating its powers.

The first modern computers were huge machines built during World War II. Examples include Konrad Zuse's Z3, the British code-breaking machine Colossus (designed by Tommy Flowers), and the ENIAC at the University of Pennsylvania. The ENIAC, which built on Alan Turing's theoretical ideas, proved to be very influential.

The Start of AI (1941-1956)

Early research into thinking machines was inspired by new ideas in the 1930s, 40s, and 50s. Neurology showed that the brain was an electrical network of neurons. Norbert Wiener's cybernetics described how electrical networks could control things. Claude Shannon's information theory explained digital signals. Alan Turing's theory of computation showed that any calculation could be described digitally. These ideas together suggested that an "electronic brain" might be possible.

In the 1940s and 50s, scientists from different fields explored ideas important for AI. Alan Turing was one of the first to seriously think about "machine intelligence." The field of "artificial intelligence research" officially started in 1956.

The Turing Test

Alan Turing was thinking about machine intelligence as early as 1941. In 1950, he published a famous paper called "Computing Machinery and Intelligence." In it, he wondered if machines could think and introduced his idea of the Turing test. He said that "thinking" is hard to define. So, he suggested that if a machine could have a conversation (using a teleprinter) that was so good you couldn't tell if it was a human or a machine, then it was reasonable to say the machine was "thinking." This simpler way of looking at the problem helped Turing argue that a "thinking machine" was possible. The Turing Test was the first serious idea in the philosophy of artificial intelligence.

Artificial Neural Networks

In 1943, Walter Pitts and Warren McCulloch studied networks of simple artificial neurons. They showed how these networks could perform basic logical tasks. They were the first to describe what later became known as a neural network. One student inspired by them was Marvin Minsky. In 1951, he built the first neural net machine, the SNARC. Minsky later became a very important leader in AI.
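A simple artificial neuron of this kind can be sketched in Python as a unit that adds up its inputs and "fires" when the total reaches a threshold. This is a simplified illustration, not the exact model from the 1943 paper: the function names are made up for this example, and a negative weight is used here as a stand-in for an inhibitory input.

```python
def mp_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of 0/1 inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Basic logic gates built from single neurons (weights and thresholds chosen by hand).
def AND(a, b):
    return mp_neuron([a, b], [1, 1], threshold=2)


def OR(a, b):
    return mp_neuron([a, b], [1, 1], threshold=1)


def NOT(a):
    return mp_neuron([a], [-1], threshold=0)


# A small network of neurons: "one or the other, but not both".
def XOR(a, b):
    return AND(OR(a, b), NOT(AND(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", XOR(a, b))
```

Nothing in these neurons is learned. The weights are fixed by hand, which matches the early idea of using networks to carry out logic rather than to learn from data.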

Cybernetic Robots

Experimental robots like W. Grey Walter's turtles and the Johns Hopkins Beast were built in the 1950s. These machines didn't use computers or digital electronics. They were controlled entirely by analog circuits.

AI in Games

In 1951, Christopher Strachey wrote a checkers program, and Dietrich Prinz wrote one for chess. These ran on the Ferranti Mark 1 computer. Arthur Samuel's checkers program, developed later, became good enough to challenge a skilled amateur player. Game-playing programs continued to be used as a way to measure AI progress.

Symbolic Reasoning and the Logic Theorist

When digital computers became available in the mid-1950s, some scientists realized that machines that could handle numbers could also handle symbols. They thought that manipulating symbols might be the core of human thought. This was a new way to try and create thinking machines.

In 1955, Allen Newell and Herbert A. Simon created the "Logic Theorist" program. This program eventually proved 38 of the first 52 theorems in Russell and Whitehead's Principia Mathematica. It even found new, simpler proofs for some of them. Simon believed they had "solved the venerable mind/body problem," explaining how a machine could have properties of a mind. This idea, that machines could have minds, became known as "Strong AI." The symbolic reasoning approach they started became very important in AI research for decades.

Dartmouth Workshop

The Dartmouth workshop in 1956 was a key event that officially started AI as a field of study. It was organized by Marvin Minsky, John McCarthy, and two senior scientists: Claude Shannon and Nat Rochester. The main goal was to explore how to create machines that could imitate human intelligence. The proposal for the meeting said they wanted to test if "every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it."

The term "Artificial Intelligence" itself was introduced by John McCarthy in his proposal for this workshop. He chose this name to avoid connections with other fields like cybernetics.

Many important researchers attended, including Ray Solomonoff, Oliver Selfridge, Arthur Samuel, Allen Newell, and Herbert A. Simon. All of them would create important AI programs later. At the workshop, Newell and Simon showed off their "Logic Theorist" program.

The 1956 Dartmouth workshop gave AI its name, its goals, its first big success, and its key players. It is widely seen as the birth of AI.

The Cognitive Revolution

In late 1956, Newell and Simon also presented the Logic Theorist at a meeting at MIT. At the same meeting, Noam Chomsky talked about his ideas on language, and George Miller discussed his famous paper "The Magical Number Seven, Plus Or Minus Two" about human memory. Miller felt that experimental psychology, language studies, and computer simulations of thinking were all connected.

This meeting started the "cognitive revolution." This was a big shift in how people thought about psychology, philosophy, computer science, and neuroscience. It led to new areas like symbolic artificial intelligence, generative linguistics, cognitive science, and cognitive psychology. All these fields used similar tools to understand the mind.

The cognitive approach allowed researchers to study "mental objects" like thoughts, plans, goals, and memories. These were often seen as high-level symbols in networks. Before this, such objects were considered "unobservable" by other approaches like behaviorism. Symbolic mental objects became the main focus of AI research for many years.

Early Successes (1956-1974)

The computer programs developed after the Dartmouth Workshop were amazing to most people. Computers were solving algebra problems, proving geometry theorems, and learning to speak English. Few people at the time thought machines could show such "intelligent" behavior. Researchers were very hopeful, predicting that a fully intelligent machine would be built in less than 20 years. Government groups like DARPA poured money into the new field. AI labs were set up at many British and US universities in the late 1950s and early 1960s.

Different Approaches

Many successful programs and new ideas came out in the late 1950s and 1960s. Here are some of the most important:

Reasoning as Search

Many early AI programs used a similar basic algorithm. To reach a goal (like winning a game or proving a theorem), they moved step by step, like searching through a maze. If they hit a dead end, they would go back and try another path.

The main challenge was that for many problems, the number of possible paths was huge. This is called a "combinatorial explosion." Researchers tried to reduce the search space by using heuristics or "rules of thumb." These rules helped them avoid paths that were unlikely to lead to a solution.
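The "search through a maze, back up at dead ends" idea can be sketched in a few lines of Python. This is only a small illustration and not the code of any historical program: the maze, the helper names `find` and `solve`, and the rule of thumb "try squares closer to the goal first" are all made up for this example.

```python
MAZE = [
    "S.#",
    ".##",
    "..G",
]  # S = start, G = goal, # = wall, . = open square


def find(maze, target):
    """Return the (row, column) of the first square holding the target character."""
    for r, row in enumerate(maze):
        for c, cell in enumerate(row):
            if cell == target:
                return (r, c)
    raise ValueError(f"{target!r} not found in maze")


def solve(maze, pos, goal, path=None, visited=None):
    """Depth-first search with backtracking. Returns a list of squares, or None."""
    path = (path or []) + [pos]
    visited = visited if visited is not None else set()
    visited.add(pos)
    if pos == goal:
        return path
    r, c = pos
    neighbours = [(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)]
    # Heuristic ("rule of thumb"): try the squares closest to the goal first.
    neighbours.sort(key=lambda p: abs(p[0] - goal[0]) + abs(p[1] - goal[1]))
    for nr, nc in neighbours:
        inside = 0 <= nr < len(maze) and 0 <= nc < len(maze[0])
        if inside and maze[nr][nc] != "#" and (nr, nc) not in visited:
            result = solve(maze, (nr, nc), goal, path, visited)
            if result is not None:
                return result
    return None  # dead end: the caller goes back and tries another path


print(solve(MAZE, find(MAZE, "S"), find(MAZE, "G")))
```

On a tiny maze this finishes instantly. The trouble described above appears when every step offers many choices: with 10 choices per step and 20 steps, there can be up to 10^20 paths to check, which is why good rules of thumb mattered so much.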

Newell and Simon tried to create a general version of this algorithm in a program called the "General Problem Solver." Other "searching" programs did impressive things, like solving geometry and algebra problems. Examples include Herbert Gelernter's Geometry Theorem Prover (1958) and James Slagle's Symbolic Automatic Integrator (SAINT) (1961). Other programs searched through goals to plan actions, like the STRIPS system that controlled the robot Shakey.

Neural Networks

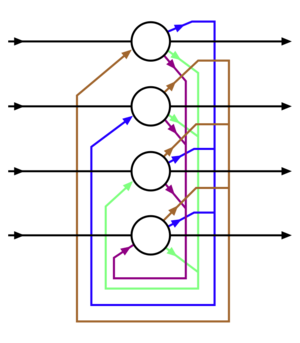

The paper by McCulloch and Pitts (1943) inspired people to build computer hardware that used the neural network idea for AI. Frank Rosenblatt led an important effort to build Perceptron machines (1957-1962). These machines had up to four layers and were funded by the Office of Naval Research. Bernard Widrow and his student Ted Hoff built ADALINE (1960) and MADALINE (1962), which had up to 1000 adjustable connections. A group at Stanford Research Institute built MINOS I (1960) and II (1963), which were funded by the U.S. Army Signal Corps. MINOS II had 6600 adjustable connections and could classify symbols on army maps.

Most neural network research back then involved building special hardware, not just running simulations on computers. The ways they built the adjustable connections were very different. Perceptron machines used potentiometers moved by electric motors. ADALINE used memistors. The MINOS machines used ferrite cores.

Even though there were multi-layered neural networks, most only had one layer of adjustable connections. Training more than one layer was difficult and often unsuccessful. The backpropagation method for training neural networks didn't become popular until the 1980s.

Natural Language

A big goal for AI research is to let computers talk in natural languages like English. An early success was Daniel Bobrow's program STUDENT. It could solve high school algebra word problems.

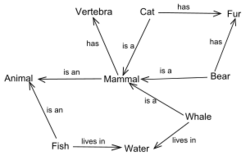

A semantic net shows concepts (like "house" or "door") as points and the relationships between them (like "has-a") as lines. The first AI program to use a semantic net was by Ross Quillian. A very successful version was Roger Schank's Conceptual dependency theory.
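A semantic net like the one described above can be sketched in Python as a table of labelled links between concepts. This is a minimal illustration, not Quillian's or Schank's design: the class name `SemanticNet`, the example concepts, and the extra "is-a" relation (used so that a cottage inherits what a house has) are assumptions made for this example.

```python
from collections import defaultdict


class SemanticNet:
    """Concepts are points; each labelled relation is a line between two points."""

    def __init__(self):
        self.edges = defaultdict(set)  # (concept, relation) -> related concepts

    def add(self, subject, relation, obj):
        self.edges[(subject, relation)].add(obj)

    def lookup(self, subject, relation):
        """Collect related concepts, also inheriting along "is-a" links.

        Assumes the "is-a" links contain no loops.
        """
        found = set(self.edges.get((subject, relation), ()))
        for parent in self.edges.get((subject, "is-a"), ()):
            found |= self.lookup(parent, relation)
        return found


net = SemanticNet()
net.add("house", "has-a", "door")
net.add("cottage", "is-a", "house")
net.add("cottage", "has-a", "garden")

print(sorted(net.lookup("cottage", "has-a")))  # prints ['door', 'garden']
```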

Joseph Weizenbaum's ELIZA program could have conversations that seemed so real that people sometimes thought they were talking to a human, not a computer (this is called the ELIZA effect). But ELIZA didn't actually understand what she was saying. She just gave a canned response or repeated what was said to her, changing it a bit with grammar rules. ELIZA was the first chatterbot.
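The trick of giving a canned response or repeating what was said with small grammar changes can be sketched in Python. This is a toy in the spirit of ELIZA, not Weizenbaum's actual script: the patterns, the replies, and the names `RULES`, `reflect`, and `reply` are all invented for this example.

```python
import re

# Swap a few words so the user's own phrase can be handed back to them.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# Each rule pairs a pattern with a reply template (hypothetical examples).
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."  # canned response when no pattern matches


def reflect(text):
    """Replace words like "my" with "your" in the captured phrase."""
    return " ".join(REFLECTIONS.get(word, word) for word in text.lower().split())


def reply(sentence):
    """Answer with the first matching rule, or fall back to the canned response."""
    cleaned = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(reply("I feel sad about my job."))  # prints Why do you feel sad about your job?
print(reply("Hello"))                     # prints Please go on.
```

The program never looks up what "sad" or "job" means. It only matches text patterns, which is why such conversations can seem real without any understanding behind them.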

Micro-worlds

In the late 1960s, Marvin Minsky and Seymour Papert at the MIT AI Lab suggested that AI research should focus on very simple situations called micro-worlds. They noted that in successful sciences like physics, basic rules are often best understood using simplified models. Much of their research focused on a "blocks world." This world had colored blocks of different shapes and sizes on a flat surface.

This idea led to new work in machine vision by Gerald Sussman, Adolfo Guzman, David Waltz, and Patrick Winston. At the same time, Minsky and Papert built a robot arm that could stack blocks, bringing the blocks world to life. The biggest achievement of the micro-world program was Terry Winograd's SHRDLU. It could talk in normal English sentences, plan actions, and carry them out.

Automata

In Japan, Waseda University started the WABOT project in 1967. In 1972, they finished WABOT-1, the world's first full-size "intelligent" humanoid robot, or android. It could walk, grip objects with its hands using touch sensors, and measure distances with its artificial eyes and ears. It could also talk to people in Japanese using an artificial mouth.

Great Hopes

The first group of AI researchers made these predictions about their work:

- 1958, H. A. Simon and Allen Newell: "within ten years a digital computer will be the world's chess champion" and "within ten years a digital computer will discover and prove an important new mathematical theorem."

- 1965, H. A. Simon: "machines will be capable, within twenty years, of doing any work a man can do."

- 1967, Marvin Minsky: "Within a generation ... the problem of creating 'artificial intelligence' will substantially be solved."

- 1970, Marvin Minsky (in Life Magazine): "In from three to eight years we will have a machine with the general intelligence of an average human being."

Funding for AI

In June 1963, MIT received a $2.2 million grant from the new Advanced Research Projects Agency (later called DARPA). This money funded Project MAC, which included the "AI Group" started by Minsky and McCarthy five years earlier. DARPA continued to provide three million dollars a year until the 1970s.

DARPA gave similar grants to Newell and Simon's program at CMU and to the Stanford AI Project (started by John McCarthy in 1963). Another important AI lab was set up at Edinburgh University by Donald Michie in 1965. These four places became the main centers for AI research and funding in universities for many years.

The money came with few rules. J. C. R. Licklider, then the director of ARPA, believed his organization should "fund people, not projects!" This allowed researchers to explore whatever interested them. This created a free and creative atmosphere at MIT that led to the hacker culture. However, this "hands-off" approach didn't last.

First AI Winter (1974–1980)

In the 1970s, AI faced criticism and money problems. AI researchers had not understood how difficult their problems were. Their huge hopes had made the public expect too much. When the promised results didn't happen, funding for AI almost disappeared. At the same time, research into simple, single-layer artificial neural networks almost completely stopped for a decade. This was partly because of Marvin Minsky and Seymour Papert's book, Perceptrons, which highlighted the limits of what perceptrons could do.

Despite the public's doubts about AI in the late 1970s, new ideas were explored in logic programming, commonsense reasoning, and other areas.

Problems AI Faced

In the early 1970s, AI programs were limited. Even the most impressive ones could only handle very simple versions of problems. All the programs were, in a way, "toys." AI researchers started to hit several basic limits that they couldn't overcome in the 1970s. Some of these limits were solved later, but others are still challenges today.

- Limited computer power: There wasn't enough memory or processing speed to do anything truly useful. For example, Ross Quillian's work on natural language used a vocabulary of only twenty words because that's all that would fit in memory. Hans Moravec said in 1976 that computers were still millions of times too weak for intelligence. He used an analogy: AI needs computer power like airplanes need horsepower. Below a certain level, it's impossible, but as power increases, it could become easy.

- Intractability and the combinatorial explosion: In 1972, Richard Karp showed that many problems can probably only be solved in exponential time. This means finding the best solutions takes an unimaginable amount of computer time unless the problems are very simple. As a result, many of the "toy" solutions used by AI would probably never scale up to useful systems.

- Commonsense knowledge and reasoning: Many important AI applications like vision or natural language need huge amounts of information about the world. The program needs to know most of the same things a child does. Researchers soon found out this was a truly vast amount of information. No one in 1970 could build such a large database, and no one knew how a program could learn so much.

- Moravec's paradox: Proving theorems and solving geometry problems is relatively easy for computers. But a seemingly simple task like recognizing a face or walking across a room without bumping into things is extremely difficult. This helps explain why research in vision and robotics had made little progress by the mid-1970s.

- The frame and qualification problems: AI researchers who used logic found they couldn't represent everyday thinking that involved planning or making assumptions without changing logic itself. They developed new logics to try and solve these problems.

End of Funding

The groups that funded AI research (like the British government, DARPA, and the U.S. National Research Council, or NRC) became frustrated with the lack of progress. They eventually cut almost all funding for general AI research. This started as early as 1966 when a report criticized machine translation efforts. After spending $20 million, the NRC stopped all support.

In 1973, the Lighthill report in the UK criticized AI's failure to reach its "grand goals" and led to the end of AI research funding there. (The report specifically mentioned the combinatorial explosion problem as a reason for AI's failures.) DARPA was also disappointed with researchers working on the Speech Understanding Research program and canceled a $3 million annual grant. By 1974, it was very hard to find funding for AI projects.

Funding for neural network research ended even earlier, partly due to lack of results and competition from symbolic AI. The MINOS project ran out of money in 1966. Rosenblatt couldn't get continued funding in the 1960s.

Hans Moravec blamed the crisis on his colleagues' unrealistic predictions. "Many researchers were caught up in a web of increasing exaggeration." However, there was another issue: after 1969, DARPA was pressured to fund "mission-oriented direct research" rather than basic, undirected research. So, the money for creative exploration that happened in the 1960s stopped coming from DARPA. Instead, funds went to specific projects with clear goals, like autonomous tanks.

Criticism from Other Fields

Several philosophers strongly disagreed with the claims AI researchers were making. One of the first was John Lucas, who argued that Gödel's incompleteness theorem showed that a computer program could never see the truth of certain statements, while a human could. Hubert Dreyfus made fun of the broken promises of the 1960s. He argued that human reasoning involved very little "symbol processing" and a lot of instinctive, unconscious "know-how." John Searle's Chinese Room argument in 1980 tried to show that a program couldn't "understand" the symbols it used. If the symbols had no meaning for the machine, Searle argued, then the machine couldn't be described as "thinking."

AI researchers often didn't take these criticisms seriously. Problems like intractability and commonsense knowledge seemed much more urgent. It wasn't clear how "know-how" or "intentionality" affected an actual computer program. Minsky said of Dreyfus and Searle, "they misunderstand, and should be ignored." Dreyfus, who taught at MIT, was given the cold shoulder. He later said that AI researchers "dared not be seen having lunch with me." Joseph Weizenbaum, who created ELIZA, felt his colleagues' treatment of Dreyfus was unprofessional. Even though he disagreed with Dreyfus, he "deliberately made it plain that theirs was not the way to treat a human being."

Weizenbaum started having serious ethical doubts about AI when Kenneth Colby wrote a "computer program which can conduct psychotherapeutic dialogue" based on ELIZA. Weizenbaum was upset that Colby saw a mindless program as a serious therapy tool. A disagreement started, and it didn't help that Colby didn't give Weizenbaum credit. In 1976, Weizenbaum published Computer Power and Human Reason, arguing that misusing AI could make human life seem less valuable.

Perceptrons and Opposition to Connectionism

A perceptron was a type of neural network introduced in 1958 by Frank Rosenblatt. Like most AI researchers, he was hopeful about their power, predicting that "perceptron may eventually be able to learn, make decisions, and translate languages." Active research on perceptrons happened throughout the 1960s but suddenly stopped with the publication of Minsky and Papert's 1969 book Perceptrons. It suggested that perceptrons had serious limits and that Rosenblatt's predictions were greatly exaggerated. The book had a huge impact: almost no research in connectionism was funded for 10 years.

The main neural network efforts all came to an end. Rosenblatt tried to get money to build bigger perceptron machines but died in a boating accident in 1971. Minsky (of SNARC) became a strong opponent of pure connectionist AI. Widrow (of ADALINE) turned to adaptive signal processing. The SRI group (of MINOS) turned to symbolic AI and robotics. The main problems were lack of funding and the inability to train multilayered networks (the backpropagation method was unknown). The competition for government funding ended with symbolic AI approaches winning.

Logic at Stanford, CMU, and Edinburgh

Logic was brought into AI research as early as 1959 by John McCarthy in his Advice Taker idea. In 1963, J. Alan Robinson found a simple way to make computers deduce things, using the resolution and unification algorithm. However, straightforward uses of this method, like those tried by McCarthy and his students in the late 1960s, were very slow: programs needed huge numbers of steps to prove simple theorems.

A more successful approach to logic was developed in the 1970s by Robert Kowalski at the University of Edinburgh. This led to a collaboration with French researchers who created the successful logic programming language Prolog. Prolog uses a simpler part of logic (Horn clauses, which are closely related to "rules") that allows for easier calculations. Rules continued to be important, forming the basis for Edward Feigenbaum's expert systems and the ongoing work by Allen Newell and Herbert A. Simon.

Critics of the logic approach noted that humans rarely used logic when solving problems. Psychologists showed this with experiments. McCarthy replied that what people do is not important. He argued that what is truly needed are machines that can solve problems, not machines that think exactly like people.

MIT's "Anti-Logic" Approach

Among the critics of McCarthy's approach were his colleagues at MIT. Marvin Minsky, Seymour Papert, and Roger Schank were trying to solve problems like "story understanding" and "object recognition." These problems required a machine to think more like a person. To use everyday concepts like "chair" or "restaurant," they had to make the same illogical assumptions that people normally make. Unfortunately, imprecise concepts like these are hard to represent in logic. Gerald Sussman noted that "using precise language to describe essentially imprecise concepts doesn't make them any more precise." Schank called their "anti-logic" approaches "scruffy," unlike the "neat" approaches used by McCarthy and others.

In 1975, in an important paper, Minsky noted that many of his fellow researchers were using a similar tool: a framework that holds all our common sense assumptions about something. For example, if we think of a bird, many facts immediately come to mind: it flies, eats worms, and so on. We know these facts aren't always true, and deductions using them won't be "logical." But these structured sets of assumptions are part of the context of everything we say and think. He called these structures "frames." Schank used a version of frames he called "scripts" to successfully answer questions about short stories in English.

New Logics Emerge

The logicians accepted the challenge. Pat Hayes said that "most of 'frames' is just a new way to write parts of first-order logic." But he noted that "there are one or two apparently minor details which give a lot of trouble, however, especially defaults." Meanwhile, Ray Reiter admitted that "conventional logics ... lack the power to properly represent the knowledge needed for reasoning by default." He suggested adding a closed world assumption to first-order logic. This assumption means a conclusion is true (by default) if its opposite cannot be proven. He showed how this matches the common sense assumption made when reasoning with frames. He also showed it has a "procedural equivalent" as negation as failure in Prolog.

The closed world assumption, as Reiter explained it, "is not a first-order notion." However, Keith Clark showed that negation as finite failure can be understood as reasoning with definitions in first-order logic.
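Reasoning by default under a closed world assumption can be sketched in Python. This is only an illustration of the idea, not Reiter's or Clark's formal system: the facts about birds and the function names `provable` and `can_fly` are invented for this example. In Prolog the same rule would be written roughly as `flies(X) :- bird(X), \+ penguin(X).`

```python
def provable(fact, facts):
    """In this toy system, a fact can be proven only if it is stored explicitly."""
    return fact in facts


def can_fly(name, facts):
    """Default rule: a bird flies unless it can be shown to be a penguin."""
    is_bird = provable(("bird", name), facts)
    # Negation as failure: failing to prove "penguin" counts as "not a penguin".
    is_not_penguin = not provable(("penguin", name), facts)
    return is_bird and is_not_penguin


facts = {("bird", "tweety"), ("bird", "opus"), ("penguin", "opus")}
print(can_fly("tweety", facts))  # prints True
print(can_fly("opus", facts))    # prints False

# Learning a new fact takes back an earlier conclusion.
facts.add(("penguin", "tweety"))
print(can_fly("tweety", facts))  # prints False
```

In ordinary logic, adding a fact can never remove a conclusion. Here it does, and that behaviour is the reason special logics were needed for this kind of reasoning.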

During the late 1970s and throughout the 1980s, many different logics were developed for negation as failure in logic programming and for default reasoning. Together, these logics became known as non-monotonic logics.

AI Boom (1980–1987)

In the 1980s, a type of AI program called "expert systems" was used by companies worldwide. Knowledge became the main focus of AI research. In those same years, the Japanese government heavily funded AI with its fifth generation computer project. Another positive event in the early 1980s was the return of connectionism with the work of John Hopfield and David Rumelhart. Once again, AI was successful.

Rise of Expert Systems

An expert system is a program that answers questions or solves problems in a specific area of knowledge. It uses logical rules taken from experts. The first examples were developed by Edward Feigenbaum and his students. Dendral, started in 1965, identified chemical compounds from spectrometer readings. MYCIN, developed in 1972, diagnosed infectious blood diseases. These showed that the approach could work.

Expert systems focused on a small area of specific knowledge (avoiding the commonsense knowledge problem). Their simple design made them relatively easy to build and change. Overall, the programs proved to be useful, which AI hadn't achieved before.
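The way an expert system applies "if this, then that" rules can be sketched in Python. This is a toy, not the design of Dendral, MYCIN, or any real product: the animal rules are made up, and the strategy shown (called forward chaining, where rules are applied again and again until nothing new can be concluded) is just one common way to use such rules.

```python
# Each rule: if all the conditions are known facts, the conclusion becomes a fact.
# These rules are hypothetical examples, not taken from a real expert system.
RULES = [
    ({"has feathers"}, "is a bird"),
    ({"is a bird", "cannot fly", "swims"}, "is a penguin"),
    ({"is a bird", "can fly"}, "might be a sparrow"),
]


def forward_chain(facts, rules):
    """Keep applying rules until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


result = forward_chain({"has feathers", "cannot fly", "swims"}, RULES)
print("is a penguin" in result)  # prints True
```

Because the knowledge lives in the list of rules and not in the program logic, an expert's new rule can be added without rewriting the rest, which fits the point above about these systems being relatively easy to build and change.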

In 1980, an expert system called XCON was finished for the Digital Equipment Corporation. It was a huge success, saving the company $40 million each year by 1986. Companies worldwide began to develop and use expert systems. By 1985, they were spending over a billion dollars on AI, mostly on their own in-house AI teams. An industry grew to support them, including hardware companies like Symbolics and software companies like IntelliCorp.

The Knowledge Revolution

The power of expert systems came from the expert knowledge they held. This was part of a new direction in AI research that had been growing throughout the 1970s. "AI researchers were starting to think—even though it went against the scientific rule of simplicity—that intelligence might depend on using large amounts of different knowledge in different ways," wrote Pamela McCorduck. "The big lesson from the 1970s was that intelligent behavior depended a lot on dealing with knowledge, sometimes very detailed knowledge, of the area where a task was." Knowledge based systems and knowledge engineering became a major focus of AI research in the 1980s.

The 1980s also saw the start of Cyc. This was the first attempt to directly tackle the commonsense knowledge problem. It aimed to create a huge database with all the everyday facts that an average person knows. Douglas Lenat, who led the project, argued there was no shortcut. Machines could only learn the meaning of human concepts by being taught them one by one, by hand. The project was expected to take many decades to finish.

Chess programs HiTech and Deep Thought defeated chess masters in 1989. Both were developed by Carnegie Mellon University. Deep Thought's development paved the way for Deep Blue.

Money Returns: Fifth Generation Project

In 1981, the Japanese Ministry of International Trade and Industry set aside $850 million for the Fifth generation computer project. Their goals were to write programs and build machines that could have conversations, translate languages, understand pictures, and reason like humans. To the dismay of some researchers, they chose Prolog as the main computer language for the project.

Other countries responded with their own new programs. The UK started the £350 million Alvey project. A group of American companies formed the Microelectronics and Computer Technology Corporation (MCC) to fund large AI and information technology projects. DARPA also responded, starting the Strategic Computing Initiative and tripling its investment in AI between 1984 and 1988.

Neural Networks Come Back

In 1982, physicist John Hopfield proved that a type of neural network (now called a "Hopfield net") could learn and process information. It was a breakthrough because it was previously thought that complex networks would behave chaotically.
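
A very small Hopfield-style network can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Hopfield's original formulation. It assumes units that are either +1 or -1, a simple Hebbian storage rule, and one-at-a-time updates. The names `train` and `recall` are made up for this example. The network stores one pattern and then repairs a damaged copy of it.

```python
def train(patterns):
    """Build the weight table with a simple Hebbian rule (an assumption here)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no unit is connected to itself
                    weights[i][j] += p[i] * p[j] / len(patterns)
    return weights

def recall(weights, state, sweeps=5):
    """Update units one at a time until the pattern settles."""
    state = list(state)
    for _ in range(sweeps):
        for i in range(len(state)):
            total = sum(weights[i][j] * state[j] for j in range(len(state)))
            state[i] = 1 if total >= 0 else -1
    return state

stored = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([stored])
noisy = [1, -1, 1, -1, 1, -1, -1, -1]  # one value flipped on purpose
recalled = recall(weights, noisy)
print(recalled == stored)
```

The network settles into the stored pattern instead of changing forever, which is the kind of stable behavior that made the result surprising.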

Around the same time, Geoffrey Hinton and David Rumelhart popularized a method for training neural networks called "backpropagation". These two developments helped bring back research into artificial neural networks.

Starting with the 1986 book Parallel Distributed Processing, neural network research gained new energy. It became successful in business in the 1990s, used for things like optical character recognition and speech recognition.

The development of modern computer chips (MOS very-large-scale integration, or VLSI) made it possible to build practical artificial neural network technology in the 1980s. A key book in this field was Analog VLSI Implementation of Neural Systems (1989) by Carver A. Mead and Mohammed Ismail.

Second AI Winter (1987–1993)

The business world's interest in AI grew and then fell in the 1980s, like an economic bubble. As many companies failed, people thought the technology wasn't practical. However, the field kept making progress despite the criticism. Many researchers, including robotics developers Rodney Brooks and Hans Moravec, argued for a completely new way to approach AI.

AI Winter Returns

The term "AI winter" was created by researchers who had lived through the funding cuts of 1974. They worried that the excitement for expert systems had grown too much and that disappointment would surely follow. Their fears were right. In the late 1980s and early 1990s, AI faced a series of financial problems.

The first sign of trouble was the sudden collapse of the market for special AI hardware in 1987. Desktop computers from Apple and IBM were getting faster and more powerful. By 1987, they became more powerful than the more expensive Lisp machines made by Symbolics and others. There was no longer a good reason to buy the specialized machines. An entire industry worth half a billion dollars was destroyed overnight.

Eventually, the earliest successful expert systems, like XCON, became too expensive to keep running. They were hard to update, couldn't learn, and were "brittle" (meaning they could make huge mistakes with unusual inputs). They also ran into problems that had been identified years earlier. Expert systems were useful, but only in a few specific situations.

In the late 1980s, the Strategic Computing Initiative cut funding to AI "deeply and brutally." New leaders at DARPA decided that AI was not "the next big thing" and directed money towards projects that seemed more likely to produce immediate results.

By 1991, the impressive goals set in 1981 for Japan's Fifth Generation Project had not been met. In fact, some of them, like "carry on a casual conversation," hadn't been met even by 2010. As with other AI projects, expectations had been much higher than what was actually possible.

Over 300 AI companies had closed, gone bankrupt, or been bought by the end of 1993. This effectively ended the first business wave of AI. In 1994, HP Newquist wrote that "The immediate future of artificial intelligence—in its commercial form—seems to rest in part on the continued success of neural networks."

New AI and Embodied Reasoning

In the late 1980s, some researchers suggested a completely new way to approach artificial intelligence, based on robotics. They believed that for a machine to show real intelligence, it needed a body. It needed to sense, move, survive, and interact with the world. They argued that these physical skills are essential for higher-level skills like commonsense reasoning. They also thought that abstract reasoning was actually the least interesting or important human skill (see Moravec's paradox). They suggested building intelligence "from the bottom up."

This approach brought back ideas from cybernetics and control theory that had been unpopular since the 1960s. Another early influence was David Marr, who came to MIT in the late 1970s to lead the group studying vision. He rejected all symbolic approaches, arguing that AI needed to understand the physical workings of vision before any symbolic processing could happen. (Marr's work was cut short when he died in 1980.)

In his 1990 paper "Elephants Don't Play Chess," robotics researcher Rodney Brooks directly challenged the idea that thinking is just manipulating symbols. He argued that "the world is its own best model. It is always exactly up to date. It always has every detail there is to be known. The trick is to sense it appropriately and often enough." In the 1980s and 1990s, many cognitive scientists also rejected the idea that the mind only processes symbols. They argued that the body was essential for reasoning, a theory called the embodied mind thesis.

AI (1993–2011)

The field of AI, now more than 50 years old, finally achieved some of its oldest goals. It started to be used successfully throughout the technology industry, though often behind the scenes. Some of this success was due to more powerful computers. Some was achieved by focusing on specific, isolated problems and solving them with high scientific standards. Still, AI's reputation, especially in business, was not perfect. Within the field, there was little agreement on why AI hadn't reached the dream of human-level intelligence that had captured the world's imagination in the 1960s. All these factors helped break AI into different subfields, each focusing on particular problems or approaches. Sometimes, they even used new names to hide the "artificial intelligence" label, which had a bad reputation. AI became both more careful and more successful than ever before.

Big Achievements and Moore's Law

On May 11, 1997, Deep Blue became the first computer chess system to beat a reigning world chess champion, Garry Kasparov. This supercomputer was a special version of an IBM system. It could process twice as many moves per second as it had during the first match (which Deep Blue lost), reportedly 200,000,000 moves per second.

In 2005, a Stanford robot won the DARPA Grand Challenge by driving itself for 131 miles across a desert trail it hadn't seen before. Two years later, a team from CMU won the DARPA Urban Challenge by driving autonomously for 55 miles in a city, following traffic rules and avoiding dangers. In February 2011, in a Jeopardy! quiz show match, IBM's question answering system, Watson, defeated the two greatest Jeopardy! champions by a large margin.

These successes weren't due to some revolutionary new idea. They were mostly because of careful engineering and the huge increase in computer speed and capacity by the 1990s. In fact, Deep Blue's computer was 10 million times faster than the Ferranti Mark 1 that Christopher Strachey taught to play chess in 1951. This huge increase is described by Moore's law, the observation that the number of transistors on a chip, and with it the speed and memory of computers, roughly doubles every two years. The basic problem of "raw computer power" was slowly being overcome.
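
As a rough check on these numbers, the short Python sketch below takes the doubling-every-two-years rule at face value and counts the doublings between 1951 and 1997. Real computers did not improve this evenly, so treat it as back-of-the-envelope arithmetic only.

```python
# Rough arithmetic only: assumes power doubles exactly every two years.
years = 1997 - 1951        # from the Ferranti Mark 1 to Deep Blue's win
doublings = years / 2      # one doubling every two years
growth = 2 ** doublings    # total speed-up after all those doublings

print(f"{doublings:.0f} doublings -> about {growth:,.0f} times")
```

Twenty-three doublings give a speed-up of a little over 8 million times, which is in the same range as the "10 million times faster" figure.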

Intelligent Agents

A new idea called "intelligent agents" became widely accepted in the 1990s. Earlier researchers had suggested breaking AI problems into smaller parts. But the intelligent agent idea took its modern form when researchers like Judea Pearl, Allen Newell, and Leslie P. Kaelbling brought in concepts from decision theory and economics. When the economist's definition of a rational agent was combined with computer science's idea of an object or module, the intelligent agent idea was complete.

An intelligent agent is a system that senses its environment and takes actions that give it the best chance of success. By this definition, simple programs that solve specific problems are "intelligent agents," as are humans and groups of humans, like companies. The intelligent agent idea defines AI research as "the study of intelligent agents." This is a broader definition of AI. It goes beyond just studying human intelligence; it studies all kinds of intelligence.
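
The "sense, then act" loop in this definition can be sketched with a toy thermostat in Python. Everything here is an assumption made for illustration: the actions, their effects, the target of 20 degrees, and the name `choose_action`. The agent senses the temperature and picks the action that it predicts will bring the room closest to the target.

```python
# Assumed effect of each action on the temperature in one step (made up).
EFFECTS = {"heat": 1.0, "cool": -1.0, "wait": 0.0}

def choose_action(temperature, target=20.0):
    """Pick the action whose predicted result is closest to the target."""
    return min(EFFECTS, key=lambda a: abs(temperature + EFFECTS[a] - target))

temperature = 17.0   # what the agent senses at the start
history = []
for _ in range(5):
    action = choose_action(temperature)   # decide
    history.append(action)
    temperature += EFFECTS[action]        # act on the environment

print(history, temperature)
```

Even a program this simple counts as an "intelligent agent" under the broad definition, because it senses its environment and acts to give itself the best chance of success.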

This idea allowed researchers to study isolated problems and find solutions that were both provable and useful. It gave them a common language to describe problems and share solutions with each other and with other fields that also used ideas of abstract agents, like economics. It was hoped that a complete agent architecture (like Newell's SOAR) would one day allow researchers to build more flexible and intelligent systems from interacting intelligent agents.

Probabilistic Reasoning and More Rigor

AI researchers started to use advanced mathematical tools more than ever before. They realized that many problems AI needed to solve were already being worked on by researchers in fields like mathematics, electrical engineering, economics, or operations research. The shared mathematical language allowed for more collaboration with established fields. It also helped achieve results that were measurable and provable. AI had become a more rigorous "scientific" field.

Judea Pearl's important 1988 book, Probabilistic Reasoning in Intelligent Systems, brought probability and decision theory into AI. Many new tools were used, including Bayesian networks, hidden Markov models, information theory, and optimization. Precise mathematical descriptions were also developed for "computational intelligence" ideas like neural networks and evolutionary algorithms.

AI Behind the Scenes

Algorithms first developed by AI researchers started appearing as parts of larger systems. AI had solved many very difficult problems, and their solutions proved useful throughout the technology industry. This included things like data mining, industrial robotics, logistics, speech recognition, banking software, medical diagnosis, and Google's search engine.

The field of AI received little credit for these successes in the 1990s and early 2000s. Many of AI's greatest inventions became just another tool in computer science. Nick Bostrom explains, "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore."

Many AI researchers in the 1990s purposely called their work by other names, such as informatics, knowledge-based systems, or computational intelligence. This was partly because they thought their field was different from AI. But the new names also helped them get funding. In the business world, at least, the failed promises of the AI Winter continued to affect AI research into the 2000s. As the New York Times reported in 2005: "Computer scientists and software engineers avoided the term artificial intelligence for fear of being viewed as wild-eyed dreamers."

Deep Learning, Big Data (2011–2020)

In the first decades of the 21st century, access to huge amounts of data (called "big data"), cheaper and faster computers, and advanced machine learning techniques were successfully used to solve many problems in the economy. In fact, one report estimated that by 2009, nearly every sector of the US economy held, on average, at least 200 terabytes of stored data.

By 2016, the market for AI-related products, hardware, and software reached over $8 billion. The New York Times reported that interest in AI had reached a "frenzy." Big data applications also started reaching into other fields, like training models in ecology and for various uses in economics. Advances in deep learning (especially deep convolutional neural networks and recurrent neural networks) drove progress in image and video processing, text analysis, and even speech recognition.

The first global AI Safety Summit was held at Bletchley Park in November 2023. It discussed the short-term and long-term risks of AI and the possibility of rules for its use. Twenty-eight governments, including the United States, China, and the European Union, signed a declaration calling for international cooperation to manage AI's challenges and risks.

Deep Learning

Deep learning is a part of machine learning that creates complex models from data using many processing layers. While not strictly necessary for simple tasks, deep networks help avoid common problems like overfitting (where a model learns the training data too well and performs poorly on new data). Because of this, deep neural networks can create much more complex and realistic models than simpler networks.

However, deep learning has its own challenges. A common problem for recurrent neural networks is the vanishing gradient problem. This is when the gradients (the training signals passed backward from layer to layer) gradually shrink until they almost disappear, so the earliest layers barely learn. Many methods have been developed to fix this, such as long short-term memory (LSTM) units.
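
A few lines of Python show why these signals fade. This sketch assumes, only for illustration, that the signal is multiplied by 0.5 at every layer it passes through. Real networks use different factors at each layer. The name `gradient_after` is made up for this example.

```python
def gradient_after(layers, factor=0.5):
    """Size of a training signal after passing back through some layers.

    The factor of 0.5 per layer is an assumption chosen for illustration.
    """
    gradient = 1.0
    for _ in range(layers):
        gradient *= factor   # the signal shrinks a little at every layer
    return gradient

for depth in (1, 10, 30):
    print(depth, gradient_after(depth))
```

After 10 layers the signal is already below one thousandth of its starting size, and after 30 layers it is smaller than one billionth.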

Modern deep neural network designs can sometimes even match human accuracy in areas like computer vision, such as recognizing traffic signs. Question answering systems that combine language processing with powerful search, like IBM Watson, can beat humans at answering general trivia questions. In several such tasks, recent deep learning systems have matched or beaten human performance.

Big Data

Big data refers to collections of data that are so large they cannot be easily handled by traditional software tools within a certain time. It's a huge amount of information that can help with decision-making and improving processes. It requires new ways of processing. According to IBM, big data has five main characteristics: Volume (how much data), Velocity (how fast it's created), Variety (different types of data), Value (how useful it is), and Veracity (how accurate it is).

The real importance of big data technology isn't just having huge amounts of information. It's about being able to make sense of that data. In other words, if big data were an industry, the key to making money would be to improve the "process capability" of the data and increase its value through "processing."

Large Language Models, AI Era (2020–Present)

The AI boom began with the creation of key designs and algorithms like the transformer architecture in 2017. This led to the development of large language models that show human-like qualities of reasoning, thinking, attention, and creativity. The "AI era" is said to have started around 2022–2023, with the public release of powerful large language models like ChatGPT.

Large Language Models

In 2017, Google researchers proposed the transformer architecture. It uses an attention mechanism and later became widely used in large language models.

Foundation models, which are large language models trained on huge amounts of unlabeled data, started to be developed in 2018. These models can be adapted to many different tasks.

Models like GPT-3, released by OpenAI in 2020, and Gato, released by DeepMind in 2022, have been called important achievements in machine learning.

In 2023, Microsoft Research tested the GPT-4 large language model with many different tasks. They concluded that it "could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system."

See also

In Spanish: Historia de la inteligencia artificial para niños

- History of artificial neural networks

- History of knowledge representation and reasoning

- History of natural language processing

- Outline of artificial intelligence

- Progress in artificial intelligence

- Timeline of artificial intelligence

- Timeline of machine learning
